Introduction

Welcome to Day 3 of our coverage of the NVIDIA GTC conference. Yesterday, NVIDIA announced the next-generation H100 data center GPU. The keynote did not go into much detail about some of the new features, such as the FP8 format and the new Transformer engines. So, when we were invited to a special briefing session diving deep into the Hopper architecture, we got all our questions ready. In this post, we will cover what NVIDIA shared with us about Hopper, as well as some insightful sessions on data-centric AI, object tracking, drones, graph neural networks, and transformers. We have also covered Days 1 and 2 of GTC, so please check them out if you have not already done so.

Please note that the day assigned to an event may differ across time zones. For example, an event that took place on Day 2 for North American participants may have taken place on Day 3 in Asia.

Day 3 of GTC had many sessions on applications and theory of deep learning. In this post, we have curated the following sessions for you:

- Deep dive into NVIDIA Hopper architecture

- The Data-centric AI movement

- Object tracking made easy

- Steel Defect Detection with AI

- A new era of Autonomous Drones

- Graph Neural Networks

- AI-based sensor fusion in autonomous vehicles

- Deploying AI at the edge

- AI in semiconductor manufacturing

- Autonomous Marine Navigation with AI

- Robustness of Vision Transformers

We recommend watching the talks after reading about them here, since some aspects of the talks, like images and video demos, cannot be covered in a written format. Also, if you haven’t already done so, please register for GTC and you could win a 3080 Ti GPU. This is your last chance to do so, since tomorrow is the final day of the conference.

Deep dive into Hopper architecture

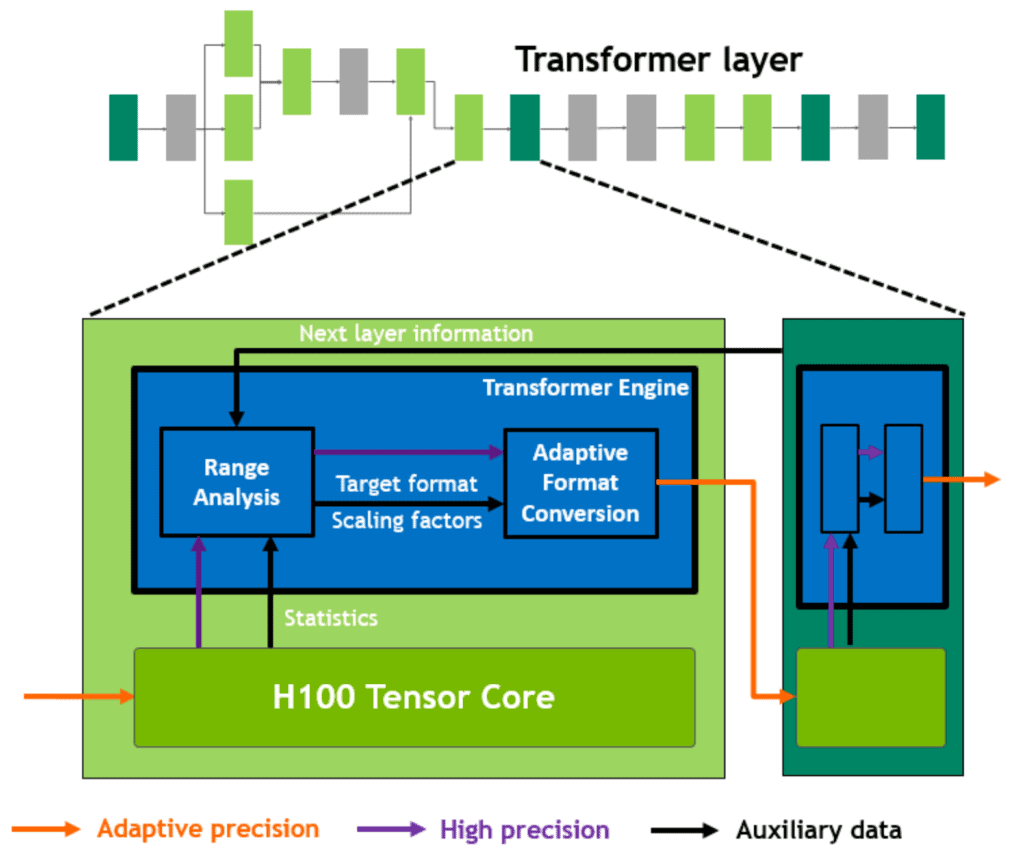

Figure 1. Overview of the Hopper transformer engine (Credit: H100 white paper)

This was a briefing session by NVIDIA for the media and was not recorded for viewing by GTC participants. We will write a much more detailed post explaining all the new features for AI in the Hopper architecture soon, but for now, here is all you need to know about the AI specific features.

- Transformer engine: The H100 contains a new version of the Tensor Cores (TCs) present in the earlier Ampere and Volta architectures. The Ampere TCs are fused multiply-add (FMA) units that perform operations like A*X+B in a single instruction. Hopper adds a statistics analyser on top of the FMA units that produce the attention scores in transformer layers. The analyser evaluates the range of the values output by the TCs so that they can be stored at a lower precision, FP16 or even FP8. This allows all layers of a transformer to be accelerated while maintaining accuracy, so developers get the best of both worlds. NVIDIA claims that intelligently managing data casting and scaling enables the H100 to deliver up to 9x faster training and 30x faster inference on transformer models. The Hopper TCs by themselves are up to 6x faster than Ampere TCs.

- 8-bit floating-point data format: One of the keys to faster transformer performance is intelligent data format management, from FP32 all the way down to a new format called FP8. The Hopper architecture white paper revealed that FP8 is really two different formats: E4M3, with 4 exponent bits and 3 mantissa bits, and E5M2, with 5 exponent bits and 2 mantissa bits. In both, the remaining bit is the sign. In the press briefing, NVIDIA revealed that they have found E4M3 to be more suitable for forward propagation and E5M2 to be better for backpropagation. According to NVIDIA, with one exception, the reduced precision of FP8 does not hurt model accuracy but delivers much faster training due to reduced memory bandwidth requirements. The exception is small vision transformer models, where the accuracy of a model trained in FP8 is ~1% lower than that of one trained in FP32. Though they did not specify the exact model where this occurs, our guess is MobileViT. One of the major benefits of training in FP8 is that no quantization is required for inference. Thus, neither post-training quantization nor quantization-aware training is needed.

- DPX instructions: COVID has shaped our world in the last two years, and the need for individual genomic sequencing for COVID testing has exploded. Genomic sequencing problems fall into the category of dynamic programming problems. People working in reinforcement learning may recall dynamic programming as one of the simplest classical RL algorithms. The Hopper architecture includes a special instruction set called DPX for dynamic programming problems. The DPX instructions accelerate these problems in H100 by a whopping 40x compared to the A100.

- Memory bandwidth: As AI models become larger, there is a need for processing huge vectors which may not all fit into one Streaming Multiprocessor (SM). Until now, SM to SM communication in CUDA was mediated by the global memory. Hopper does away with this limitation and allows SMs to communicate directly with each other. This reduces memory access latency by 7x.

- Tensor Memory Accelerator (TMA): Since the transformer engines are fast, they need to be constantly fed with a lot of data to maximise hardware utilisation. In Ampere and earlier architectures, data movement was controlled by software running in the SMs, which introduced a lot of overhead and added latency. Hopper introduces a hardware solution for data movement. TMAs are Direct Memory Access (DMA) engines embedded directly into the SMs which move data to and from the global memory into shared memory. TMAs take care of boundary conditions such as zero padding and strides for commonly used tensors (1D to 5D).
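To give a feel for the kind of workload the DPX instructions target, here is a minimal sketch of a dynamic programming recurrence for sequence alignment. We use the Smith-Waterman local alignment score as the example. The scoring values (`match`, `mismatch`, `gap`) are our own illustrative assumptions, and the code is plain Python rather than anything that runs on DPX hardware.

```python
def smith_waterman_score(a, b, match=2, mismatch=-1, gap=-1):
    """Return the best local alignment score between sequences a and b.

    The scoring parameters are illustrative assumptions, not values
    taken from the briefing.
    """
    prev = [0] * (len(b) + 1)  # scores for the previous row of the DP table
    best = 0
    for i in range(1, len(a) + 1):
        curr = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            # Extend the alignment diagonally (match or mismatch).
            diag = prev[j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            # Each cell is a max over a few "neighbour plus cost" terms;
            # the 0 lets a local alignment restart anywhere.
            curr[j] = max(0, diag, prev[j] + gap, curr[j - 1] + gap)
            best = max(best, curr[j])
        prev = curr
    return best


if __name__ == "__main__":
    print(smith_waterman_score("GATTACA", "TTAC"))
```

Every cell depends only on its three neighbours, so the inner loop is a long chain of additions and max comparisons. That tight, repetitive pattern is what makes this class of problems a natural candidate for dedicated instructions.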

We asked some questions about AI specific workloads:

LearnOpenCV: Will FP8 and transformer engine utilization be exposed to AI frameworks like PyTorch and TensorFlow?

NVIDIA: Initially, support for FP8 training and transformer engines will be available in transformer specific meta frameworks such as NVIDIA’s Megatron. We are working with framework developers and making all the information available to them, but it is up to them to implement support for these features.

LearnOpenCV: When will TensorRT be updated to take advantage of the FP8 format?

NVIDIA: We are working hard on it, and expect to ship these features by the end of this year, but we can’t commit to a specific timeline yet.

LearnOpenCV: For models trained on FP8, do you recommend FP8 or INT8 for inference?

NVIDIA: If the model is trained on FP8, we absolutely recommend inference in FP8 itself since it gives you the native performance seen during training.

Another Journalist: Which part of the transformer engine is specific to transformers? Why can’t the same performance enhancements be obtained for convolutional networks?

NVIDIA: Hardware-wise there is nothing specific to transformers. The same math operations can be used to accelerate other kinds of AI workloads, but the domain knowledge that NVIDIA has gained by training huge language models allows us to use things like FP8 to deliver 6x the TC performance for transformer training. Also, note that the transformer engine functionality is a software thing, not a hardware thing.

LearnOpenCV: What drives the 2x performance gain of the Hopper Tensor Cores vs. Ampere at the same clock frequency?

NVIDIA: The gains are driven by enhancements to the CUDA programming model. Things like asynchronous memory copy and data locality have a huge effect.

LearnOpenCV: Since there is such a huge emphasis on transformers, given that the attention layers require a softmax operation, are there any hardware units for accelerating softmax? Especially since you use just 8-bit floating point precision, things like look up tables start looking realistic.

NVIDIA: There are no special hardware units for accelerating softmax. Softmax relies entirely on special function units. Also, we store the results of softmax in a higher precision than FP8.
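To make the trade-off between the two FP8 formats discussed in this section more concrete, here is a rough sketch that derives a few properties from the bit counts alone. It assumes an IEEE-style layout (implicit leading one, exponent bias of 2^(e-1) - 1), which is our assumption and not something NVIDIA spelled out in the briefing. We deliberately leave out the largest representable value, since that depends on how each format reserves encodings for special values. The helper names are hypothetical.

```python
import math


def fp8_summary(exp_bits, man_bits):
    """Summarise an 8-bit float with 1 sign bit, assuming IEEE-style rules."""
    assert 1 + exp_bits + man_bits == 8
    bias = 2 ** (exp_bits - 1) - 1
    return {
        "bias": bias,
        "min_normal": 2.0 ** (1 - bias),                # smallest normal value
        "min_subnormal": 2.0 ** (1 - bias - man_bits),  # smallest positive value
        "epsilon": 2.0 ** (-man_bits),                  # gap between 1.0 and the next value
    }


def round_to_mantissa(x, man_bits):
    """Round x to man_bits of mantissa, ignoring exponent range limits."""
    if x == 0:
        return 0.0
    m, e = math.frexp(x)           # x == m * 2**e with 0.5 <= |m| < 1
    scale = 2 ** (man_bits + 1)    # implicit leading bit plus stored bits
    return math.ldexp(round(m * scale) / scale, e)


if __name__ == "__main__":
    for name, (e, m) in {"E4M3": (4, 3), "E5M2": (5, 2)}.items():
        print(name, fp8_summary(e, m), round_to_mantissa(1.1, m))
```

Under these assumptions, E4M3 resolves values twice as finely as E5M2, while E5M2 reaches much smaller magnitudes. That is consistent with NVIDIA's remark that E4M3 suits forward propagation and E5M2 suits backpropagation, where gradients can span a wide range.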

The Data-centric AI movement [S42658]

Andrew Ng is a well-known name in the AI community. He was one of the earliest researchers to understand the power of GPUs and one of the first to create online courses about machine learning, and his contributions have been immense. In this talk, he explained his approach to building successful AI products, which he calls ‘data-centric AI’.

- Andrew started with a simple observation that AI is basically a combination of data and code (AI = Data + code).

- Of these, the machine learning community loves to optimize the code and create newer models, but when one steps out of academia and wants to build successful products that deliver value, the best bet would be to curate and improve the quality of the data.

- Currently, there are two barriers to widespread adoption of AI: small datasets in industries and the long tail problem of having multiple different problem statements in different industries.

- Data labeling consistency is very important. He gave the example of defect detection in pills, where consistency among team members on bounding box size, defect class, and number of bounding boxes allows models to learn well even from limited data.

- Andrew stressed that for industrial applications, having standards and manuals for humans on how to label datasets is extremely useful. He went so far as to say that creating these manuals is an even better use of time than optimizing the architecture of the network.

- Datasets can sometimes have errors in the labels. Error analysis can identify the subset of data where the network performs poorly, and this information can be used to improve the dataset quality such as by correcting labeling errors. Even standard datasets like ImageNet and MNIST have labeling errors.

- Improving image quality can sometimes be a much easier way to improve performance than collecting more data or tuning the hyperparameters of the model.

- It is not the quantity but the quality of data that matters. For instance, in the case of defect detection in pills, if the model performs poorly on a certain class, collecting more, and cleaner, data for that class would have a much better effect than collecting data for all classes.

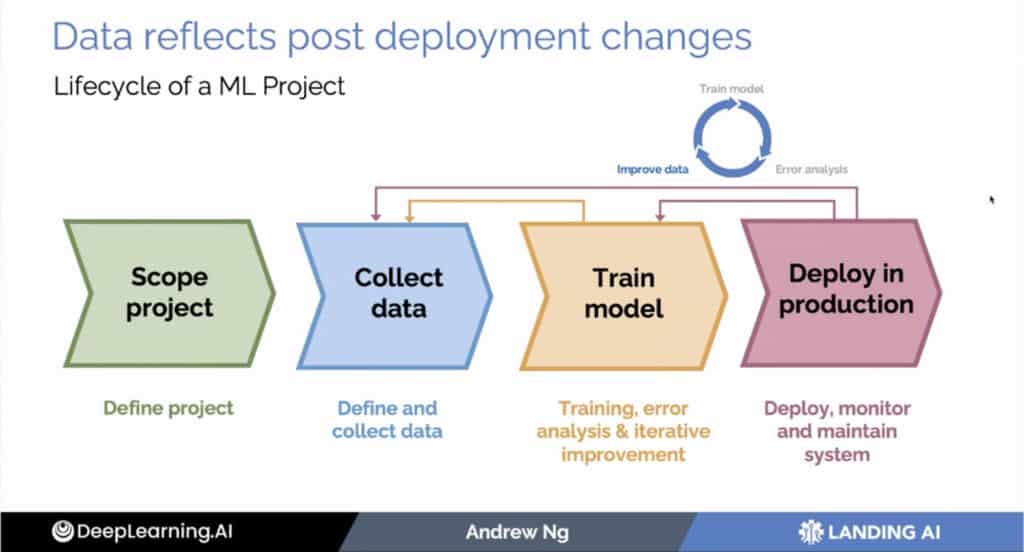

- Finally, Andrew ended his presentation by remarking that in the context of building AI products, data cleaning should be a part of the iterative ML development workflow, as shown in the figure below.

Figure. Slide from Andrew Ng’s presentation [Credit: Andrew Ng, GTC]

Object tracking made easy [S41300]

In this engaging presentation, Assistant Professor Fisher Yu of ETH Zurich laid out the process for creating an object tracking application. Current tracking algorithms have several components:

- Object detection

- Motion estimation

- Computing appearance similarity

- Associating detections to tracks

All these components come with their own bells and whistles, which makes tracking hard. Is there a better way? Prof. Yu walked through a simple example of object tracking to show that humans can perform tracking using only object detection and appearance association. Building on this idea, he proposed doing appearance association with quasi-dense matching. When done smartly with ROI alignment, this turns out to be enough for object tracking and achieves a really good MOTA score on the MOT17 dataset. Please check out the full talk for all the details. The paper and code are available here and here.
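To illustrate what "appearance association" means in practice, here is a deliberately simplified sketch. It is not the quasi-dense matching method from the talk: we simply compare one embedding vector per track with one per detection using cosine similarity and match them greedily. The function names, the `min_sim` threshold, and the toy embeddings are all our own assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def associate(track_embs, det_embs, min_sim=0.5):
    """Greedy one-to-one matching of detections to existing tracks.

    track_embs: {track_id: embedding}, det_embs: list of embeddings.
    Returns {detection_index: track_id}; unmatched detections are omitted
    and would typically start new tracks.
    """
    pairs = sorted(
        ((cosine(t, d), tid, j)
         for tid, t in track_embs.items()
         for j, d in enumerate(det_embs)),
        key=lambda p: p[0],
        reverse=True,
    )
    matches, used_tracks = {}, set()
    for sim, tid, j in pairs:
        if sim < min_sim:
            break  # remaining pairs are even less similar
        if j in matches or tid in used_tracks:
            continue
        matches[j] = tid
        used_tracks.add(tid)
    return matches


if __name__ == "__main__":
    tracks = {1: [1.0, 0.0], 2: [0.0, 1.0]}
    detections = [[0.1, 0.9], [0.9, 0.1], [-1.0, -1.0]]
    print(associate(tracks, detections))
```

In a real tracker the embeddings would come from a network trained so that the same object looks similar across frames. The quality of that embedding, rather than the matching step, is where most of the work lies.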

Steel Defect Detection with AI [S41334]

This talk presented a really great use case of AI in manufacturing. Here are the highlights:

- Severstal is a steel manufacturer and has thus far used traditional computer vision algorithms to detect defects in the manufactured steel sheets.

- The poor performance of these outdated algorithms motivated the company to develop a deep learning based solution.

- They collected 10,000 images of steel sheets containing 17 different types of defects.

- As mentioned in Andrew Ng’s talk, different people tend to label defects differently, so to improve performance, Severstal decided to use Weighted Boxes Fusion (WBF).

- Using Mask R-CNN + FPN with a ResNet-101 backbone, they achieved superior defect detection and reduced the time required for defect inspection.
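For readers unfamiliar with Weighted Boxes Fusion, the core idea can be sketched in a few lines: overlapping boxes for the same object are merged into one box whose coordinates are a confidence-weighted average. The version below is a simplified illustration for a single class, not Severstal's implementation. The `iou_thr` value and the box format are our assumptions, and the confidence rescaling steps of the full algorithm are omitted.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def fuse(cluster):
    """Confidence-weighted average box and mean confidence of one cluster."""
    total = sum(conf for _, conf in cluster)
    box = tuple(
        sum(b[k] * conf for b, conf in cluster) / total for k in range(4)
    )
    return box, total / len(cluster)


def weighted_boxes_fusion(boxes, iou_thr=0.55):
    """boxes: list of ((x1, y1, x2, y2), confidence) for a single class."""
    clusters = []
    # Visit boxes from most to least confident.
    for box, conf in sorted(boxes, key=lambda bc: bc[1], reverse=True):
        for cluster in clusters:
            # Compare against the current fused box of each cluster.
            if iou(fuse(cluster)[0], box) > iou_thr:
                cluster.append((box, conf))
                break
        else:
            clusters.append([(box, conf)])
    return [fuse(c) for c in clusters]


if __name__ == "__main__":
    labels = [((0, 0, 10, 10), 1.0), ((2, 0, 12, 10), 1.0),
              ((50, 50, 60, 60), 0.8)]
    print(weighted_boxes_fusion(labels))
```

Unlike non-maximum suppression, which keeps one box and discards the rest, this approach uses every box in a cluster. That is a good fit when the boxes come from several annotators or models that each see the defect slightly differently.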

This was a great talk for people in manufacturing looking for case studies of applications of AI to their business.

A new era of Autonomous Drones [S42167]

In this short session, Swift Lab from Kenya outlined their work on designing and manufacturing drones for humanitarian causes. The specific use cases for their drones are in medical logistics and farming. Swift Lab introduced their Jetson Nano powered, fully autonomous drone with a 70 km range that can carry a 4 kg payload. In medical logistics, they are focused on delivering test kits and test results to remote communities, while in agriculture, they are making rapid strides in using ML for crop detection and yield analysis at scale.

Graph Neural Networks [DLIT1650 & S42406]

Here, we will describe two sessions. Graph neural networks (GNNs) operate on graph data, such as data from social networks, financial transactions, maps, etc. The first of these sessions provided an excellent introduction to GNNs and to concepts like graph convolutions and message passing. The bulk of the presentation was devoted to implementing the GCN and GraphSAGE architectures in DGL. Deep Graph Library, or DGL, is a framework for training and deploying graph neural networks. DGL is framework agnostic and can be thought of as ‘Keras for graph neural networks’.
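For readers new to the idea, here is a minimal sketch of a single round of message passing in plain Python. It does not use DGL and is not taken from the session: each node simply replaces its feature vector with the mean of its own features and those of its neighbours. Real GCN and GraphSAGE layers also apply learned weights and a nonlinearity, which we leave out. The function name and the toy graph are our own.

```python
def mean_aggregate(features, edges):
    """One round of mean-aggregation message passing on an undirected graph.

    features: {node: list of floats}, edges: list of (u, v) pairs.
    Returns a new {node: list of floats} with averaged features.
    """
    # Start every neighbourhood with the node itself (a self-loop).
    neighbours = {n: [n] for n in features}
    for u, v in edges:
        neighbours[u].append(v)
        neighbours[v].append(u)

    out = {}
    for n, nbrs in neighbours.items():
        dim = len(features[n])
        out[n] = [
            sum(features[m][k] for m in nbrs) / len(nbrs) for k in range(dim)
        ]
    return out


if __name__ == "__main__":
    feats = {0: [1.0], 1: [3.0], 2: [5.0]}
    chain = [(0, 1), (1, 2)]  # a simple path graph 0 - 1 - 2
    print(mean_aggregate(feats, chain))
```

Stacking several such rounds lets information travel further across the graph: after k rounds, a node's features depend on everything within k hops.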

The latter of these talks was focused on applications of GNNs to chemistry. Emma Slade from the drug company GSK explained how adding symmetries into the architectures of GNNs can help AI predict molecular properties. The key to adding these inductive biases lies in group theory, a mathematical framework for rigorously studying symmetry. By building in equivariance to the Euclidean and conformal groups (basically translations, rotations, reflections and inversions), GNNs are able to learn extremely efficiently. However, this only works if the underlying dataset has these symmetries.

These ideas are extremely relevant to robotics as well, where, depending on the problem, equivariance or invariance of a neural network to the SO(3) rotation group can simplify motion planning and trajectory generation.
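As a small illustration of what invariance to rotations means, the sketch below rotates a set of 3D points and checks that the pairwise distances between them do not change. This is our own toy example rather than anything shown in the talk. A model that only consumes such distances is rotation invariant by construction.

```python
import math


def rotate_z(points, angle):
    """Rotate 3D points about the z-axis by the given angle in radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in points]


def pairwise_distances(points):
    """Distances between every unordered pair of points, in a fixed order."""
    return [
        math.dist(p, q)
        for i, p in enumerate(points)
        for q in points[i + 1:]
    ]


if __name__ == "__main__":
    # Hypothetical atom positions for a three-atom molecule.
    atoms = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.5)]
    before = pairwise_distances(atoms)
    after = pairwise_distances(rotate_z(atoms, 0.7))
    print(all(abs(a - b) < 1e-9 for a, b in zip(before, after)))
```

The coordinates themselves change under the rotation, but the distances, and therefore any property predicted from them alone, stay the same.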

AI-based sensor fusion in autonomous vehicles [S42372]

CARIAD, a member company of the German automaker Volkswagen, is developing autonomy features for Levels 2, 3, 4 and beyond. In this talk, Christina from CARIAD introduced their approach to AI-based sensor fusion. She started off by explaining that there are simply too many edge cases in sensor fusion to build effective algorithms by hand. Using deep learning allows sensor fusion performance to be driven by data rather than by hand-written rules. The foundational idea of their AI-based sensor fusion is to feed raw data from all sensors into neural networks to extract spatial features. These features are combined by another neural network, and object predictions are made all at once using data from all sensors rather than from each sensor individually.

Deploying AI at the edge using TensorRT [S42143]

In this session, Zoox, a subsidiary of Amazon developing autonomous vehicles, introduced their experience with deploying AI at the edge using TensorRT (TRT). The presentation introduced TRT as well as some of the new features in TRT 8. Then, in a brief walkthrough of the API, engineers from NVIDIA explained the process of creating as well as inspecting a TRT inference engine. Once the engine is created, certain considerations should be kept in mind while designing an inference pipeline for optimal performance. Equally important are layer profiling and identifying any engine layers that cause large deviations from their PyTorch or TensorFlow counterparts. We have previously posted a detailed blog post on TensorRT explaining the various concepts of the TRT API. We recommend checking it out before listening to this talk.

AI in semiconductor manufacturing [S41579]

Semiconductors have been in the news lately because of the semiconductor shortage. Whenever the shortage is brought up, people wonder why we cannot just manufacture more of them, as we did with COVID PPE and masks at the height of the pandemic. The secret to profitable high-volume semiconductor manufacturing lies in tight process control, which few companies have accomplished. In this session, Jacob George from KLA Tencor gave an overview of how AI could be used to enhance semiconductor chip yields and lower costs. AI is being used in metrology and inspection of patterned wafers, which are a perfect fit for computer vision algorithms.

According to George’s presentation, the semiconductor industry is facing some of the same problems as most other industries for adopting AI, such as limited labeled data, variance among human annotators, model maintenance, and the long tail problem Andrew Ng mentioned in his talk on data-centric AI. However, some problems such as limits of optics are domain specific.

We note that since KLA occupies a small part of the equipment maker space, this presentation covers only a small slice of the otherwise vast landscape of using AI in semiconductor manufacturing and design.

Autonomous Marine Navigation with AI [S41814]

Just as autonomous cars can enhance safety and improve the quality of life of their users, so can autonomous boats. In this session, Donald Scott from Submergence LLC introduced the Mayflower autonomous ship (MAS), an exploratory ship launched by a huge collaboration between industry and academia. In addition to other scientific studies, the MAS has collected a large corpus of images from its onboard cameras. These images are being used to detect other boats and warn the captain about possible danger ahead of time. With this data, they can currently detect 8 different types of ships, 27 different types of buoys, as well as other general obstacles. This information can be used to ensure law-compliant navigation (don’t get too close to other ships), as well as for path planning. Currently, Submergence is using DeepStream on Jetson AGX Xavier for deployment.

Robustness of Vision Transformers [S42002]

There is a raging debate in AI on whether transformers are robust to adversarial examples and out-of-distribution inputs. In this short session, Yijing Watkins from Pacific Northwest National Laboratory attempted to answer the latter part of the question. Yijing presented evaluations of vision transformers, data-efficient image transformers, and robust vision transformers on the ImageNet-C robustness benchmark. Together with a similar analysis of DETR and Swin transformers on the MSCOCO-C robustness benchmark, the results show that:

- Vision transformers learn global information consistently through all layers.

- Vision transformers require large amounts of data to converge.

- Vision transformers are robust on ImageNet-C and MSCOCO-C benchmarks.

Why you should attend tomorrow

This brings us to the end of our Day 3 coverage. We hope you have enjoyed our deep dive into the Hopper architecture, as well as the industry case studies and Andrew Ng’s talk on data-centric AI. The last day is scheduled to have over 300 sessions, such as

- Sports Video Analytics

- Real-time conversation with artificial humans

- Super resolution for satellite images

- AI driven autonomous drone

- Introducing AGX Orin

- Agricultural robotics with TensorRT

As we mentioned before, if you register with our link, you could win an RTX 3080 Ti GPU, so please register here.
