Introduction

Welcome to our Day 2 coverage of the NVIDIA GTC conference. We will cover Jensen’s keynote, the Hopper H100 GPU, building a career in AI, and more. As a quick reminder, the GPU Technology Conference (GTC) is NVIDIA’s flagship conference for developers and engineers, where the company unveils its latest technologies in game development, computer vision, natural language processing, physics simulation, and data science. We covered Day 1 in detail in our blog; please check it out here if you haven’t already done so. Please note that the day assigned to an event differs across time zones. For example, an event that took place on Day 1 for North American participants may have taken place on Day 2 in Asia.

Day 2 of GTC was jam-packed with sessions on all of these topics. In this blog post, we will summarize the sessions on AI, deep learning, and career advice. Specifically, we will cover the following sessions:

- GTC Keynote with Jensen Huang

- Five steps to starting a career in AI

- AI models made simple with NVIDIA TAO

- AI in the Datacenter

- Jetson AI software ecosystem

- Large scale image retrieval

- Introduction to Autonomous Vehicles

- GPU programming with standard Python and C++

- Getting started with edge AI on Jetson

- Lessons from Kaggle winning solutions

- Accelerating Deep Learning with NVIDIA DALI

- High-performance inference from PyTorch models

Although we curate and summarize hour-long talks to maximize your productivity, nothing beats attending these events live and putting your questions to industry experts. There is still time to register for FREE for a chance to win the Ultimate Play NVIDIA RTX 3080 Ti GPU.

GTC Keynote with Jensen Huang [S42295]

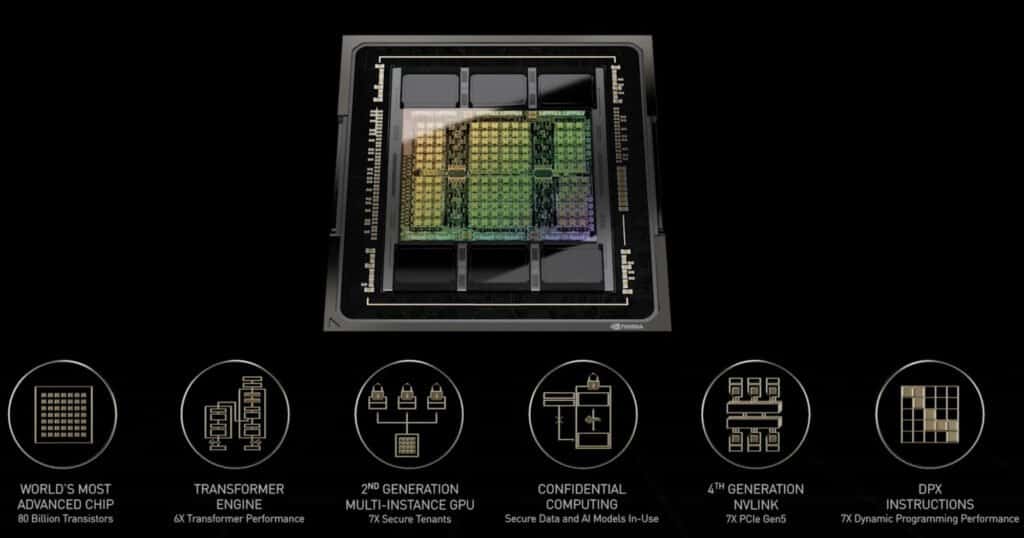

Figure 1. Overview of Features of H100 GPU (Credit: GTC Keynote on YouTube)

This is the flagship event of the whole conference, since NVIDIA saves its major announcements for the keynote. Jensen kicked off the event with a visually stunning video called ‘I am AI’, explaining how AI is touching lives and impacting the world. The Transformer neural network architecture got shoutouts throughout the presentation. Here are the major announcements from the keynote:

- Hopper H100 GPU: This successor to the Ampere-based A100 GPU brings enormous leaps in performance. The H100 consists of 80 billion transistors and is built on the TSMC 4N process. It is the world’s first GPU with HBM3 memory, which provides 3 TB/s of memory bandwidth, and it offers a massive 4.9 TB/s of total external bandwidth. The H100 supports a new 8-bit floating-point format called FP8. We are unsure how the FP8 format works, since the keynote didn’t have much information on FP8 vs. INT8 performance. The H100 also contains a new type of Tensor Core for accelerating transformer networks and a new set of instructions called DPX to support dynamic programming algorithms. With DPX, NVIDIA aims to accelerate problems like the traveling salesman problem, routing optimization, gene sequencing, and protein folding. The H100 will ship in Q3 of this year.

- High-performance computing: NVIDIA built on the Hopper architecture and the H100 to announce major AI training and HPC products. Eight H100s power the next generation of DGX systems, called the DGX H100. Connecting 32 DGX H100s forms a DGX POD with a total of 768 TB/s of bandwidth (for comparison, the bandwidth required for the entire internet is 100 TB/s). Jensen also gave an update on the Grace CPU for data centers. Grace is on track to ship next year, and Grace CPUs can be combined with various configurations of H100 GPUs for AI training.

- Weather modeling with AI: FourCastNet, a physics-informed deep neural network (PINN) from NVIDIA, has for the first time surpassed the weather prediction accuracy of any analytical model. The network was trained on 10 TB of climate data and, at inference, is an amazing 4 to 5 orders of magnitude faster than traditional methods. While this was not stated in the keynote, it gives hope to people working in reinforcement learning that PINNs could one day accelerate RL simulations by orders of magnitude.

- Omniverse: NVIDIA hopes that, just as TensorFlow and PyTorch are essential for building today’s deep learning applications, Omniverse will be essential for building the next generation of AI models for robotics and logistics. Digital twinning is one of the immediate industrial applications of Omniverse, so much so that a completely new server called OVX was announced for real-time, synchronized simulation of digital twins.

- There were brief mentions of AI for chip design, neural radiance fields for 3D rendering, graph neural networks for analyzing social media and map data, and transformers for self-supervised learning.

- Deep Learning SDKs: There were many mentions of NVIDIA’s domain-specific deep learning SDKs. For example, the benefits of Triton, an open-source hyperscale inference server, were mentioned. Triton is framework agnostic and model agnostic. Jensen also gave a shoutout to RIVA, NVIDIA’s speech AI library, which we introduced on Day 1. Other notable SDKs were Modulus for PINNs and FLARE for federated learning.

- Self-driving: No NVIDIA keynote is complete without announcements about self-driving cars, and this one was no different. Jensen showcased the latest build of NVIDIA Drive on Hyperion 8, the hardware platform for autonomous driving. Hyperion 9, which will be able to ingest and process twice as much sensor information as Hyperion 8, was also announced. NVIDIA also plans to create digital twins of more than 500,000 kilometers of highways across the US, Europe, and Asia by 2024. All this information will be available in Omniverse and should significantly accelerate the development of autonomous driving.

- Jetson Orin: Jetson AGX Orin, the successor to Jetson AGX Xavier, is now available. The keynote did not spend much time on Orin, but separately announced specs indicate that Orin is expected to deliver 6X the performance of Jetson AGX Xavier.
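To make the DPX item in the list above more concrete: routing optimization is one of the problems it names, and the Floyd-Warshall all-pairs shortest-path algorithm is a textbook dynamic program of that kind. The sketch below is plain CPU Python and does not use DPX or any NVIDIA API. The function name `floyd_warshall` and the four-node example graph are our own illustrations, not something shown in the keynote.

```python
import math

INF = math.inf  # marks "no direct edge" between two nodes


def floyd_warshall(weights):
    """Return all-pairs shortest path costs for a dense weight matrix."""
    n = len(weights)
    dist = [row[:] for row in weights]  # copy so the input is not modified
    for k in range(n):          # allow node k as an intermediate stop
        for i in range(n):
            for j in range(n):
                via_k = dist[i][k] + dist[k][j]
                if via_k < dist[i][j]:
                    dist[i][j] = via_k  # reuse the sub-results already in the table
    return dist


# Hypothetical road network: graph[i][j] is the cost of the direct edge i -> j.
graph = [
    [0, 4, INF, 10],
    [INF, 0, 3, INF],
    [INF, INF, 0, 2],
    [1, INF, INF, 0],
]

shortest = floyd_warshall(graph)
for row in shortest:
    print(row)
```

Here `shortest[0][3]` comes out as 9 rather than the direct edge cost of 10, because the route through nodes 1 and 2 is cheaper. The inner loop is nothing more than an add followed by a comparison, repeated over a table of partial results, which is the pattern dynamic programming algorithms share.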

This was a densely packed presentation with multiple announcements. We recommend checking out the entire video of the keynote on GTC or YouTube.

5 steps to starting a career in AI [SE2572]

In this extremely interesting session, five successful AI professionals shared five key ingredients for building a career in AI. Two of the speakers, Louis Stewart and Kate Kallat, were from NVIDIA; they were joined by two CEOs (Sheila Beladinejad and David Ajoku) and a professor (Teemu Roos, from the University of Helsinki). Here are their top five picks for success in AI:

- Mentorship and Networking: David started off by invoking the spirit of Isaac Newton, noting that great work happens ‘by standing on the shoulders of giants’. People often think of mentorship as a one-way deal in which a senior person helps a junior climb ‘the ladder’, but these professionals strongly emphasized that mentorship is a two-way street, since the mentor also learns in the process. Thus, anyone looking to climb the technical or corporate ladder should not hesitate to seek mentorship from successful seniors. It goes without saying that we live in an extremely competitive world, so people who network extensively, show up to industry events, and stand out from the crowd have a much better chance of success.

- Get hands-on experience: Nothing beats hands-on experience. Hours spent learning the theory of AI do not compare with what you learn from a few hours of focused, hands-on work with the tools of AI. The speakers illustrated this with personal experiences. For instance, Kate was studying politics before she got into AI, and hands-on learning was how she picked up AI skills in her first job in the industry. Speaking of hands-on experience, our courses are some of the best at giving you hands-on experience in deep learning from start to finish.

- Developing soft skills: No matter how good a programmer you are, at some point you will find yourself speaking to an audience of non-technical people. Your ability to communicate your ideas could determine whether you get the job or seal a business deal. Thus, the ability to communicate ideas effectively and confidently is a huge career asset. For example, Prof. Roos attended acting classes to improve his public speaking skills even though he had no intention of becoming an actor!

- Defining your why: You cannot succeed in any career if you are neither good at it nor passionate about it. Having a clear mental picture of why you got into AI will help you break barriers, get out of your comfort zone, and get over the bumps that are bound to appear on any career path. Kate shared her own experience as an example. She got her first job in this industry as the manager of an AI product. As someone without a background in computer science, she felt passionate about democratizing AI and using her product to show that you don’t need a Ph.D. in AI to take advantage of the latest tools and technologies.

- In the final step, each speaker talked about a different topic. Prof. Roos advised beginners to make sure they are in it for the right reasons, not just to make a quick buck. This ties back to the previous point of defining your why. David advised that your own effort and preparedness are the only variables within your control in a career, so prepare assiduously to realize your dreams. Sheila advised beginners not to get carried away by the hype and to build their own career path based on their aptitude and what works best for them.

AI models made simple with NVIDIA TAO [S41773]

Building AI applications is hard. You need the right data, the right models, and people with the right skills to train and deploy production-grade models. These problems are industry-wide and significantly reduce the number of companies and engineers who are able to build AI-powered real-world applications. This is where NVIDIA TAO comes in. TAO, which stands for Train, Adapt and Optimize, is a transfer learning framework geared towards commercial applications in both computer vision and natural language processing.

NVIDIA has streamlined the process of starting from pre-trained models, fine-tuning them on your custom dataset (which doesn’t have to be large), and deploying the fine-tuned models on either embedded devices or the cloud. TAO can therefore save you a lot of time and money on the way to a commercially useful application.
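The workflow described above (start from a pre-trained model, then fine-tune on a small custom dataset) can be illustrated without TAO itself. The following is a minimal sketch of one common form of transfer learning, in which the pre-trained part is frozen and only a small classification head is trained. It is plain Python and not TAO’s API: `frozen_backbone`, `fine_tune_head`, `predict`, and the six-sample dataset are all hypothetical stand-ins.

```python
import math


def frozen_backbone(x):
    """Stand-in for a pre-trained feature extractor; it is never updated."""
    return [x[0] + x[1], x[0] - x[1]]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fine_tune_head(samples, labels, epochs=200, lr=0.1):
    """Train only a logistic-regression head on top of the frozen features."""
    features = [frozen_backbone(x) for x in samples]  # computed once, backbone is fixed
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for f, y in zip(features, labels):
            p = sigmoid(w[0] * f[0] + w[1] * f[1] + b)
            err = p - y  # gradient of the log loss with respect to the logit
            w[0] -= lr * err * f[0]
            w[1] -= lr * err * f[1]
            b -= lr * err
    return w, b


def predict(x, w, b):
    f = frozen_backbone(x)
    return 1 if sigmoid(w[0] * f[0] + w[1] * f[1] + b) >= 0.5 else 0


# Tiny made-up "custom dataset": label 1 when the first value is larger.
samples = [[2.0, 1.0], [3.0, 0.0], [2.0, 0.0], [1.0, 2.0], [0.0, 3.0], [0.0, 2.0]]
labels = [1, 1, 1, 0, 0, 0]

w, b = fine_tune_head(samples, labels)
print(predict([5.0, 0.0], w, b), predict([0.0, 5.0], w, b))
```

Because the backbone already produces a useful feature (the difference between the two inputs), six labeled samples are enough for the head to separate the classes. That is the intuition behind fine-tuning with a dataset that doesn’t have to be large.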

After laying out these basics, NVIDIA announced the new features in the upcoming TAO 22-04 release:

- New models for training on point cloud data

- New models for action classification based on human pose data

- A flexible model for keypoint estimation on any type of object (earlier TAO models were restricted to keypoint estimation of humans)

- A new city segmentation model for use in robotics and autonomous driving

- TensorBoard integration to visualize training progress, including intermediate predictions and histograms of weights

- REST APIs for the TAO toolkit, with deployment on Kubernetes clusters

- Support for bringing your own custom model architecture into TAO, then optimizing or pruning and deploying the model

This session included a code walkthrough of training a model in a Jupyter notebook and some examples of customers using TAO in production. You can get started with TAO from here.

AI in the Datacenter [DLIT2552]

This was an extremely detailed and comprehensive introduction to machine learning and AI, ideally suited for managers and beginners to AI. The key topics covered were:

- Basics of machine learning

- Feature engineering

- Deep learning

- Developer workflow

- Training infrastructure

- Multi-node GPU acceleration

- Application deployment

Overall, this session is highly recommended for leaders and managers who want to introduce AI into their companies. Engineers might find limited utility in this broad overview.

Jetson AI software ecosystem [SE2245]

This session provided an excellent overview of the entire software ecosystem for Jetson devices. It should be really interesting to engineers and robotics startups looking to integrate Jetson into their products. The speakers went into the details of the components of the JetPack SDK, such as the Linux for Tegra (L4T) distro, the Board Support Package (BSP), and the storage system, as well as the CUDA and DeepStream libraries for accelerating computer vision applications in robotics. The TAO toolkit and the Triton inference server, which we covered earlier in this post, were also introduced.

Large scale image retrieval [S42388]

This session was a fascinating insight into the applications of Swin transformers. Christof Henkel explained the history of the Google Landmark recognition challenge and how Swin transformers help in improving retrieval of similar images. Since this kind of problem also occurs in SLAM, this talk will also be interesting to people interested in robotics and navigation.
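The talk’s own pipeline is not reproduced here, but retrieval of similar images is commonly framed as nearest-neighbor search over embedding vectors, and that framing is what the sketch below assumes. The three-dimensional embeddings and file names are made up for illustration; in a real system they would come from a trained model such as a Swin transformer. `l2_normalize` and `retrieve` are our own helper names.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec]


def retrieve(query, index, top_k=2):
    """Return the top_k (image_id, cosine_similarity) pairs, best match first."""
    q = l2_normalize(query)
    scored = []
    for image_id, embedding in index.items():
        e = l2_normalize(embedding)
        similarity = sum(a * b for a, b in zip(q, e))
        scored.append((image_id, similarity))
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical index: image name -> embedding produced by some backbone model.
index = {
    "eiffel_day.jpg": [0.9, 0.1, 0.0],
    "eiffel_night.jpg": [0.8, 0.3, 0.1],
    "golden_gate.jpg": [0.1, 0.9, 0.2],
    "taj_mahal.jpg": [0.0, 0.2, 0.9],
}

query_embedding = [1.0, 0.2, 0.0]  # embedding of the query photo (made up)
results = retrieve(query_embedding, index)
for image_id, similarity in results:
    print(image_id, round(similarity, 3))
```

With these toy vectors the two Eiffel Tower images rank first. The quality of a real retrieval system depends almost entirely on how well the embedding model places photos of the same landmark close together, which is where the choice of backbone matters.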

Introduction to Autonomous Vehicles [S42587]

If you want to develop self-driving cars but feel overwhelmed by the amount of information out there, this session is for you. Katie Washabaugh distilled the foundational knowledge of autonomous driving, from its technical history to sensor architecture, the AI computational stack, and the development workflow of autonomous vehicles. She described each step in an easy-to-understand way and ended by mentioning a few futuristic trends, such as software-defined autonomous vehicles and hardware evolution in the next few years.

GPU programming with Python and C++ [S41496]

We are all used to the idea that we must use CUDA libraries to accelerate computation on NVIDIA GPUs. What if it didn’t have to be that way? What if we could write C++ or Python code as usual and have it accelerated automatically on the GPU? If you would love that to be true, this session is for you. NVIDIA is making efforts to replace standard libraries, such as NumPy in Python and the C++ standard library, with CUDA versions that can run on both CPU and GPU. As a developer, you can continue to write code just as you would for the CPU, and it gets accelerated by the GPU behind the scenes. This session showcased the immediate use cases for these replacement libraries and the impressive performance gains they enable.

Getting started with edge AI on Jetson [SE2596]

If you have spent some time with Jetson devices, you have probably heard of Dustin Franklin from NVIDIA, whose jetson-inference repository has thousands of stars on GitHub. In this session, Dusty provides a gentle introduction to edge computing using Jetson. If you have wondered what the craze around edge computing is all about, this session will demystify it for you. The difference between this session and SE2245 is that this one is for beginners and hobbyists looking to take their first steps into edge AI, while the latter is geared towards professionals.

Lessons from Kaggle winning solutions [S42635]

Kaggle competitions have gained a bad reputation for rewarding huge ensembles that only slightly outperform simple models and therefore have no real-world use. In this session, no fewer than eight engineers from NVIDIA dispelled this myth by giving several examples of Kaggle-winning solutions that they used to create real products. If you are an avid Kaggler, this session is definitely for you.

Accelerating Deep Learning with NVIDIA DALI [S41443]

DALI (Data Loading Library) is NVIDIA’s library for data loading and pre-processing. This session is a gentle introduction to DALI and shows how to use it to move data pre-processing onto the GPU, which in turn speeds up model training. If you often train deep networks and would like to explore ways of improving training speed, this session is for you.

High-performance inference from PyTorch models [S41285]

Meta (formerly Facebook), the creator of PyTorch, and NVIDIA are collaborating to bring several inference acceleration features directly into PyTorch. This session covers basics such as TorchScript and ONNX-to-TensorRT conversion, as well as more advanced topics like Torch-TensorRT and torch.fx. Torch-TensorRT brings the merits of TensorRT directly into PyTorch, giving you the best of both worlds. Torch-TensorRT is open source and available here. FX is an intermediate representation for PyTorch graphs, much like TorchScript graphs, with some advantages in simplicity and optimization. If you care deeply about model inference within PyTorch, this session is definitely for you.

Why you should attend tomorrow

This brings us to the end of Day 2. What a jam-packed day! If you think today was intense, tomorrow is looking to be even more so, with over 250 sessions planned, such as:

- TensorRT for real-time inference on autonomous vehicles

- LIDAR simulation for AV perception

- Hyperion Roadmap

- AV Development with NVIDIA Drive OS

- NVIDIA DriveSim on Omniverse

- Building synthetic datasets for AV, and many more

As we mentioned before, if you register with our link, you could win an RTX 3080 Ti GPU, so please register here.
