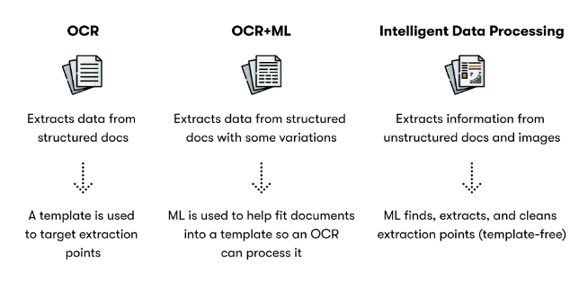

Traditional Optical Character Recognition (OCR) systems are primarily designed to extract plain text from scanned documents or images. While useful, such systems often ignore semantic structure, layout, and visual cues like images, watermarks, and tables, limiting their utility in modern AI pipelines.

Enter Nanonets-OCR-s – a groundbreaking Vision-Language Model (VLM) that sets a new standard for intelligent document understanding. Whether you’re digitizing academic papers, parsing contracts, or building searchable enterprise knowledge bases, Nanonets-OCR-s delivers an unmatched combination of accuracy, structure, and intelligence.

- The Evolution Beyond Traditional OCR

- What is Nanonets-OCR-s?

- Core Innovations & Technologies Used in Nanonets-OCR-s

- Training and Dataset Composition

- Semantic Features and Output Capabilities of Nanonets-OCR-s

- Markdown Output Comparison: Nanonets-OCR-s vs Donut vs Dolphin

- Technical Strengths and Limitations of Nanonets-OCR-s

- Benchmark Comparison

- Conclusion

- References

The Evolution Beyond Traditional OCR

Conventional OCR systems like Tesseract (covered in LearnOpenCV’s blog post on Deep Learning Based OCR Text Recognition Using Tesseract and OpenCV) or cloud APIs are adept at pulling plain text from scanned pages. However, they ignore vital structural and contextual elements, such as images, plots, LaTeX equations, watermarks, checkboxes, and tables, which are essential for meaningful interpretation. This limitation severely hampers their utility in LLM-centric pipelines where semantic richness and structural fidelity matter.

Nanonets-OCR-s changes the narrative by integrating multimodal understanding into the core of its architecture. It doesn’t just extract text; it interprets, organizes, and formats information into clean, contextualized Markdown enriched with semantic tags.

What is Nanonets-OCR-s?

Nanonets-OCR-s is an open-weight Vision-Language Model (VLM) released on June 12, 2025 by Nano Net Technologies. It’s a 3.75B-parameter multimodal transformer based on Qwen2.5-VL-3B, fine-tuned for image-to-Markdown OCR.

Unlike traditional OCR systems that extract plain text, Nanonets-OCR-s understands document layout, content type, and context – embedding elements like headings, math formulas, tables, and even image descriptions into Markdown or HTML-like syntax. It goes beyond simple OCR, generating structured Markdown output with semantic tagging, ready for LLM pipelines.

Core Innovations & Technologies Used in Nanonets-OCR-s

This model uses Vision-Language Modeling techniques built on a multimodal transformer architecture (Qwen2.5-VL-3B-Instruct, a 3B-parameter VLM). It integrates:

- Visual encoding: CNN/ViT-based encoders to perceive layout, fonts, symbols, and graphics

- Language modeling: Transformer-based decoder trained to output Markdown, HTML, and structured tags

- Fine-tuning strategy: Aligned to produce structured text output with a mixture of real-world and synthetic documents

| Component | Detail |
|---|---|
| Base Model | Qwen2.5-VL-3B, a 3 billion parameter VLM |
| Model Type | Transformer-based multimodal encoder-decoder |
| OCR Strategy | OCR-free (similar to Donut), trained end-to-end from image to structured Markdown |
| Semantic Tagging | Outputs structured elements like `<signature>`, `<watermark>`, `<img>`, etc. |
| Target Format | Markdown with embedded semantic structure, LaTeX, and HTML-like tags |
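To make the image-to-Markdown flow in the table above concrete, here is a minimal inference sketch using Hugging Face Transformers. Several things in it are assumptions rather than facts from this article: the repository id `nanonets/Nanonets-OCR-s`, the wording of `PROMPT` (an illustrative instruction, not necessarily the prompt recommended by the model's authors), and the availability of `transformers`, `torch`, `accelerate`, and `Pillow` in your environment. Treat it as a starting point, not the official usage.

```python
MODEL_ID = "nanonets/Nanonets-OCR-s"  # assumed Hugging Face repo id

# Illustrative prompt (an assumption); check the model card for the recommended one.
PROMPT = (
    "Extract the text from this document in reading order. "
    "Return tables as HTML, equations as LaTeX, and wrap watermarks, "
    "signatures and image descriptions in their semantic tags."
)


def build_messages(image_path: str, prompt: str = PROMPT) -> list:
    """Build a chat-style message list holding one image and one instruction."""
    return [
        {"role": "system", "content": "You are a helpful assistant."},
        {
            "role": "user",
            "content": [
                {"type": "image", "image": f"file://{image_path}"},
                {"type": "text", "text": prompt},
            ],
        },
    ]


def image_to_markdown(image_path: str, max_new_tokens: int = 4096) -> str:
    """Run one page image through the model and return the generated Markdown."""
    # Heavy dependencies are imported lazily so build_messages works without them.
    from PIL import Image
    from transformers import AutoModelForImageTextToText, AutoProcessor

    model = AutoModelForImageTextToText.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto"
    )
    processor = AutoProcessor.from_pretrained(MODEL_ID)

    image = Image.open(image_path).convert("RGB")
    text = processor.apply_chat_template(
        build_messages(image_path), tokenize=False, add_generation_prompt=True
    )
    inputs = processor(
        text=[text], images=[image], padding=True, return_tensors="pt"
    ).to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=False)
    # Drop the prompt tokens so only the newly generated Markdown is decoded.
    new_tokens = output_ids[:, inputs["input_ids"].shape[1]:]
    return processor.batch_decode(new_tokens, skip_special_tokens=True)[0]
```

Calling `image_to_markdown("page.png")` (a hypothetical file name) would download the weights on first use and return the Markdown string for that page. Loading the model inside the function keeps the sketch short; in a real pipeline you would load it once and reuse it across pages.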

Training and Dataset Composition

To build a model capable of such nuanced understanding, the team at Nanonets curated a diverse and extensive dataset of over 250,000 document pages. This dataset includes:

- Academic papers with complex mathematical notation

- Legal documents with signatures and structured clauses

- Financial documents such as invoices and tax forms

- Healthcare forms with checkboxes and annotations

The training strategy employed a two-step pipeline:

- Pretraining on synthetic datasets to establish visual-linguistic grounding

- Fine-tuning on manually annotated, real-world documents to refine accuracy and domain robustness

The final result is a VLM capable of tackling real-world document variability while producing LLM-ready, semantically structured text.

Semantic Features and Output Capabilities of Nanonets-OCR-s

Nanonets-OCR-s excels by not merely converting text from images but by encoding deep structural awareness into its output. Its Markdown generation is augmented by semantic tagging that aligns with both human readability and machine ingestion.

Some of its key capabilities include:

- LaTeX Equation Recognition: Automatically converts mathematical equations into LaTeX syntax, distinguishing between inline and block equations for Markdown compatibility.
  - Detects math regions and converts them to:
    - Inline syntax → `$...$`
    - Display syntax → `$$...$$`
  - Trained to identify not just symbols but layout context (centered math vs. inline usage)
  - Ideal for academic, scientific, and engineering documents

We’ve also published a blog post on Fine-Tuning Gemma-3 for LaTeX Equation Generation, where the results turned out to be remarkably accurate and robust.
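Because inline and display equations arrive with different delimiters, a downstream consumer can separate them with a couple of regular expressions. The sketch below is one possible way to do that; the function name `extract_math` and the sample string are made up for illustration, and the inline pattern assumes that a lone `$` in the text is always a math delimiter (so dollar amounts such as "$5 and $10" would be misread).

```python
import re

# Display math: $$...$$, which may span several lines.
DISPLAY_MATH = re.compile(r"\$\$(.+?)\$\$", re.DOTALL)
# Inline math: a single $...$ on one line, not touching another $.
INLINE_MATH = re.compile(r"(?<!\$)\$(?!\$)([^$\n]+?)\$(?!\$)")


def extract_math(markdown: str) -> dict:
    """Split the LaTeX found in a Markdown string into inline and display lists."""
    display = [m.strip() for m in DISPLAY_MATH.findall(markdown)]
    # Remove display blocks first so their contents are not counted as inline math.
    without_display = DISPLAY_MATH.sub(" ", markdown)
    inline = [m.strip() for m in INLINE_MATH.findall(without_display)]
    return {"inline": inline, "display": display}


sample = (
    "Energy is $E = mc^2$ and the Gaussian integral is\n"
    "$$\\int_{-\\infty}^{\\infty} e^{-x^2} dx = \\sqrt{\\pi}$$"
)
print(extract_math(sample))
```

Handling display math first is a deliberate choice: otherwise the inner text of a `$$...$$` block could also be picked up by the inline pattern.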

- Intelligent Image Description: Describes charts, plots, and diagrams using `<img>` tags with context-aware captions that make visual elements digestible for LLMs.
  - Describes semantic content, visual style, and context
  - Enables downstream multimodal LLMs to reason about visual elements
  - Parses charts, plots, logos, diagrams, and wraps them in: `<img>Description of the visual content</img>`

- Signature Detection & Isolation: Accurately identifies and tags signatures with `<signature>`, essential for legal and formal documents.
  - Identifies signatures from handwritten or cursive blocks
  - Useful in legal, financial, or HR documents
  - Tags them like: `<signature>John Doe</signature>`

- Watermark Extraction: Detects and isolates document watermarks, embedding them within `<watermark>` tags to retain provenance and classification.
  - Detects semi-transparent or background text overlays
  - Helps preserve document provenance or classification labels
  - Extracts and wraps them in: `<watermark>Confidential</watermark>`

- Smart Checkbox Handling: Converts checkboxes and radio buttons into standardized Unicode symbols such as ☑, ☒, and ☐, ensuring logical consistency.
  - Detects checkboxes and radio buttons
  - Converts them to standardized Unicode symbols:
    - ☑ checked
    - ☒ crossed
    - ☐ empty
  - This enables structured form processing and reliable boolean tagging

- Complex Table Extraction: Translates multi-row, multi-column tables into Markdown and HTML with preserved alignment, headers, and merged cells.
  - Captures multi-row, multi-column, nested tables
  - Converts them into Markdown tables or HTML table syntax
  - Maintains alignment, merged cells, header context
  - Crucial for financial, legal, and research documents
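As an illustration of why the standardized checkbox symbols help with form processing, the sketch below turns them back into structured data. It assumes that each option's label follows its symbol on the same line, which may not hold for every form layout; the helper name `parse_checkboxes` and the sample text are hypothetical.

```python
import re

# The three symbols described above, mapped to a readable state.
CHECKBOX_STATES = {"☑": "checked", "☒": "crossed", "☐": "empty"}

# A symbol followed by its label, which runs until the next symbol or line end.
CHECKBOX_PATTERN = re.compile(r"([☑☒☐])\s*([^☑☒☐\n]*)")


def parse_checkboxes(markdown: str) -> list:
    """Return (label, state) pairs for every checkbox symbol, in reading order."""
    return [
        (label.strip(), CHECKBOX_STATES[symbol])
        for symbol, label in CHECKBOX_PATTERN.findall(markdown)
    ]


sample = "Smoker: ☐ Yes ☑ No\n☒ I agree to the terms"
for label, state in parse_checkboxes(sample):
    print(f"{label!r}: {state}")
```

The states are kept as strings rather than booleans on purpose: whether a crossed box (☒) means "selected" or "rejected" depends on the form, so that decision is left to the caller.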

Markdown Output Comparison: Nanonets-OCR-s vs Donut vs Dolphin

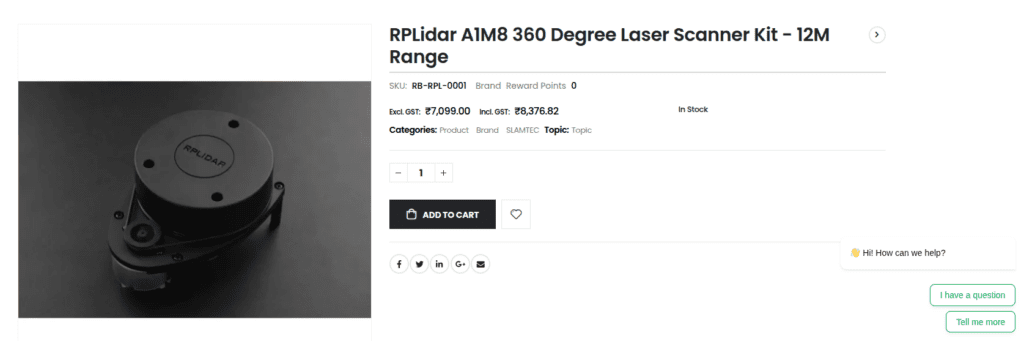

To provide a clear and fair evaluation of how different models interpret and convert document images into Markdown, we compared the outputs of three notable models, Nanonets-OCR-s, Donut, and Dolphin, on the same product detail image. Links to each model's official implementation are listed in the References section.

Brief Overview of the Compared Models

- Nanonets-OCR-s is a 3.75B vision-language model designed specifically for semantic OCR and Markdown generation. It is trained to extract structured content directly from document images without relying on external OCR engines.

- Donut (Document Understanding Transformer), developed by NAVER AI Lab, is an OCR-free model capable of processing document images and producing JSON or Markdown-style outputs. It is often used for form extraction and document layout understanding.

- Dolphin is a lightweight OCR model designed for visual language tasks. It typically performs well on structured layouts and image captions but may not match the deep semantic structuring of larger VLMs.

Input Image Description

The test image is a commercial product detail page for an RPLidar device as attached below. It includes a product name, SKU, price (with and without GST), stock status, social media icons, and a chatbot-style footer asking “Have a question? Tell me more.”

Markdown Generation Comparison

Nanonets-OCR-s Generated Markdown Output

Page 1 of 1

RPLidar A1M8 360 Degree Laser Scanner Kit - 12M Range

SKU: RB-RPL-0001 Brand Reward Points 0

Excl. GST: ₹7,099.00 Incl. GST: ₹8,376.82

In Stock

Categories: Product Brand SLAMTEC Topic: Topic

<img>Product image</img>

<watermark>RPLIDAR</watermark>

Quantity:

-

1

+

Add to Cart Heart

<img>Facebook icon</img> <img>Twitter icon</img> <img>LinkedIn icon</img> <img>Google+ icon</img> <img>Email icon</img>

Hi! How can we help?

I have a question

Tell me more

Highlights:

- Semantic segmentation of elements

- Inline image tags with descriptions

- Markdown headings and labels structured as readable content

- Recognition of watermark, chatbot dialogue, and social icons
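The semantic tags in this output are straightforward to consume programmatically. As a rough sketch, the snippet below collects the `<img>`, `<signature>`, and `<watermark>` tags from an excerpt of the output above and also produces a tag-free version of the text. The helper names `extract_tags` and `strip_tags` are made up for this example, and a regular expression is only adequate here because the tags are simple and not nested.

```python
import re
from collections import defaultdict

# Matches <img>...</img>, <signature>...</signature>, <watermark>...</watermark>.
TAG_PATTERN = re.compile(r"<(img|signature|watermark)>(.*?)</\1>", re.DOTALL)


def extract_tags(markdown: str) -> dict:
    """Group the text inside each semantic tag by tag name."""
    found = defaultdict(list)
    for tag, body in TAG_PATTERN.findall(markdown):
        found[tag].append(body.strip())
    return dict(found)


def strip_tags(markdown: str) -> str:
    """Remove tagged elements entirely, leaving only the plain text."""
    return TAG_PATTERN.sub("", markdown)


# Excerpt of the Nanonets-OCR-s output shown above.
sample = (
    "<img>Product image</img>\n"
    "<watermark>RPLIDAR</watermark>\n"
    "Add to Cart Heart\n"
    "<img>Facebook icon</img> <img>Twitter icon</img>"
)
print(extract_tags(sample))
```

Separating tagged elements from body text in this way lets a pipeline, for example, index the main text while storing watermarks and image descriptions as metadata.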

Donut Generated Markdown Output

    {
      "menu": [
        {
          "nm": "RPLidar AIM8 360 Degree Laser Scanner Kit-12M",
          "unitprice": "Range",
          "cnt": "0",
          "price": "0"
        },
        {
          "nm": "SOLI。88-MV-COOL Band version Punto O",
          "unitprice": "709,900",
          "cnt": "0",
          "price": "0"
        },
        {
          "cnt": "0",
          "price": "0"
        }
      ],
      "total": {
        "total_price": "68,078.02"
      }
    }

Highlights:

- Focused on tabular interpretation

- Unable to semantically segment non-tabular text

- Includes hallucinated or misread values (e.g., unrelated product entry)

Dolphin Generated Markdown Output

RPLidar A1M8 360 Degree Laser Scanner Kit – 12M Range

SKU: RB-RPL-0001 Brand Reward Points 0

-1+

↑. International Olympic Committee. [2022-09-22].

f > in G =

have a question

Tell me more

Highlights:

- Basic OCR extraction

- Embedded raw image references

- Partially captures relevant information, but lacks structure or Markdown styling

- Contains spurious text from image background noise

Summary

| Feature | Nanonets-OCR-s | Donut | Dolphin |
|---|---|---|---|
| Markdown Formatting | ✅ Structured and clean | ❌ JSON-formatted | ⚠️ Incomplete, noisy |
| Image Context Tagging | ✅ `<img>` tags | ❌ | ⚠️ Raw base64 blobs |
| Watermarks, Chat UI | ✅ Detected & tagged | ❌ | ⚠️ Partially mixed in text |
| Semantic Structure | ✅ Headings, labels, layout | ⚠️ Table-centric | ❌ Flat OCR dump |
| Hallucination Risk | Low | Medium | High |

Verdict

Nanonets-OCR-s delivers the most readable, LLM-friendly, and semantically tagged Markdown output. Donut provides structured JSON suited to forms but misses document-wide cohesion. Dolphin captures raw text but lacks context or usable structure.

This comparison illustrates the value of semantic-aware VLMs in transforming complex visual content into meaningful structured data, particularly for downstream AI processing.

Technical Strengths and Limitations of Nanonets-OCR-s

Strengths

- Outputs clean, LLM-compatible Markdown with embedded semantic tags

- No reliance on third-party OCR engines

- Recognizes and structures multiple content modalities including equations, tables, images, and checkboxes

- Built on the open-weight Qwen2.5-VL-3B model, enabling local deployment and fine-tuning

Limitations

- Currently not trained on handwritten content, limiting its applicability in informal or cursive-text scenarios

- May occasionally hallucinate content, especially when visual context is ambiguous or degraded

- Requires modern GPUs (e.g., A100, RTX 4090) for real-time or batch-scale processing
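To put the GPU requirement in perspective, a back-of-the-envelope estimate of the memory needed just to hold the weights is parameters × bytes per parameter. The sketch below applies that to the 3.75B parameter count quoted earlier. It covers weights only: activations, the KV cache, and image tokens add to the total. The int8 row is a hypothetical quantized variant included for comparison, not something this article confirms is available.

```python
def weight_memory_gib(num_params: float, bytes_per_param: int) -> float:
    """Memory needed to store the weights alone, in GiB (2**30 bytes)."""
    return num_params * bytes_per_param / 2**30


PARAMS = 3.75e9  # parameter count quoted in this article

for name, nbytes in [("float32", 4), ("bfloat16/float16", 2), ("int8 (hypothetical)", 1)]:
    print(f"{name}: {weight_memory_gib(PARAMS, nbytes):.1f} GiB")
# float32: 14.0 GiB
# bfloat16/float16: 7.0 GiB
# int8 (hypothetical): 3.5 GiB
```

At half precision the weights alone take about 7 GiB, which fits on cards like the ones named above while leaving headroom for long pages and batched inputs.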

Benchmark Comparison

When compared against other leading solutions such as Donut, Dolphin, and GPT-4V / Gemini, Nanonets-OCR-s demonstrates superior structured output quality:

| Feature | Nanonets-OCR-s | Donut | GPT-4V / Gemini | Dolphin |
|---|---|---|---|---|
| Output Format | Markdown + Tags | JSON/Markdown | Freeform Text | Raw Text/Image |
| Visual Element Support | ✅ | Limited | ✅ (Prompted) | ⚠️ Basic OCR |
| Semantic Structuring | ✅ | Medium | Variable | ❌ |
| LLM Compatibility | High | Medium | Prompt-Dependent | Low |
| Open-source Deployment | ✅ | ✅ | ❌ (API only) | ✅ |
| Image Tagging | ✅ `<img>` tags | ❌ | ✅ | ⚠️ Base64 blob |
| Chat / UI Detection | ✅ | ❌ | ✅ (Prompted) | ⚠️ Partial |
| Hallucination Risk | Low | Medium | Medium | High |

Nanonets-OCR-s leads in producing consistent, semantically tagged Markdown without complex prompt engineering, making it ideal for automated pipelines.

Conclusion

Nanonets-OCR-s represents a substantial leap forward in the evolution of document intelligence. By transcending traditional OCR limitations and embracing vision-language modeling, it offers a truly modern solution for extracting meaning from complex visual content. Whether you’re automating financial paperwork, digitizing academic research, or building AI-powered knowledge bases, this model brings unmatched precision, structure, and adaptability.

As we step into a world where LLMs and multimodal AI systems require structured, semantically rich data, Nanonets-OCR-s is not just a tool—it’s a foundational component in building intelligent, scalable, and explainable AI systems.
