In computing, Graphics Processing Units (GPUs) have transcended their original role of rendering simple polygons and become the workhorses behind realistic gaming worlds, machine learning advancements, and large-scale scientific simulations. A modern high-end GPU can perform tens of trillions of arithmetic operations every second, a level of performance that once required entire supercomputers.

In this blog post, we’ll walk through each layer of NVIDIA’s Ampere architecture, as implemented in the GA102 chip inside the RTX 3090, and explain how engineers harness massive parallelism, specialized memory systems, and hierarchical designs to deliver astonishing computational power.

- The Exponential Growth of GPU Compute

- CPU vs. GPU: Contrasting Design Philosophies

- Physical Architecture of a Modern GPU (GA102 Example)

- Binning: One Die, Multiple Products

- Feeding the Beasts: Graphics Memory (GDDR6X, GDDR7, and HBM)

- Parallel Compute Models: From SIMD to SIMT

- Real-World GPU Workloads

- Conclusion

- References

The Exponential Growth of GPU Compute

Over the past three decades, GPU performance requirements have skyrocketed. In 1996, rendering Super Mario 64 in 3D required roughly 100 million calculations per second. Fast forward to 2011, and Minecraft’s block-based world pushed that to 100 billion calculations per second. Today’s blockbuster titles, like Cyberpunk 2077, need upwards of 36 trillion calculations per second to handle realistic lighting, physics simulations, and ray tracing effects.

Imagine harnessing every person on Earth, about 8 billion individuals, to each perform a single multiplication per second. Despite this monumental collective effort, you’d reach only about 8 billion operations per second, still roughly 4,500 times fewer than the ~36 trillion operations per second a single modern GPU can achieve. This staggering gap illustrates why specialized hardware, finely tuned for parallel workloads, is essential.
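The gap is a single division. A quick check in Python, taking the roughly 36 trillion operations per second quoted above as the GPU figure:

```python
# Back-of-the-envelope comparison from the paragraph above.
people = 8_000_000_000        # ~8 billion people on Earth
human_ops_per_s = people * 1  # each performs one multiplication per second
gpu_ops_per_s = 36e12         # ~36 trillion operations per second (figure from the text)

ratio = gpu_ops_per_s / human_ops_per_s
print(f"One GPU is roughly {ratio:,.0f} times faster than all of humanity")
```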

CPU vs. GPU: Contrasting Design Philosophies

To appreciate why GPUs excel at certain tasks, consider the classic ship-and-plane analogy:

- CPU (Jumbo Jet): Equipped with a small number of powerful, flexible cores, a CPU handles diverse tasks: running an operating system, managing I/O devices, and executing complex branching code. Its strong single-thread performance is akin to a jet’s speed and versatility, capable of landing at thousands of airports around the globe.

- GPU (Cargo Ship): Built with thousands of simpler cores, a GPU is optimized for throughput rather than latency. It carries vast quantities of data (graphics primitives, pixel values, matrix elements) in repeatable batches, similar to how a cargo ship transports bulk containers between specialized ports. Each container may take longer to arrive than it would by plane, but the total volume moved per trip is orders of magnitude greater.

| Feature | CPU (Jumbo Jet) | GPU (Cargo Ship) |
|---|---|---|
| Core Count | 6–24 powerful x86 cores | 10,000+ CUDA cores plus hundreds of specialized cores |
| Flexibility | Runs OS, branching logic, network stacks, diverse applications | Executes a narrower range of work (arithmetic, vector math, shaders) |
| Throughput vs. Latency | Lower throughput, low latency per task | Extremely high throughput, higher per-operation latency |
| I/O Interfaces | Disks, network, USB, keyboard, and many other connection types | PCIe, NVLink, and display ports: high-bandwidth, specialized pathways |
| Ideal Workloads | Sequential / control-heavy tasks, complex branching, multitasking | Embarrassingly parallel tasks: 3D rendering, AI training, crypto hashing |

By matching hardware design to workload characteristics, GPUs deliver phenomenal performance on data-parallel problems that would bottleneck on a CPU.

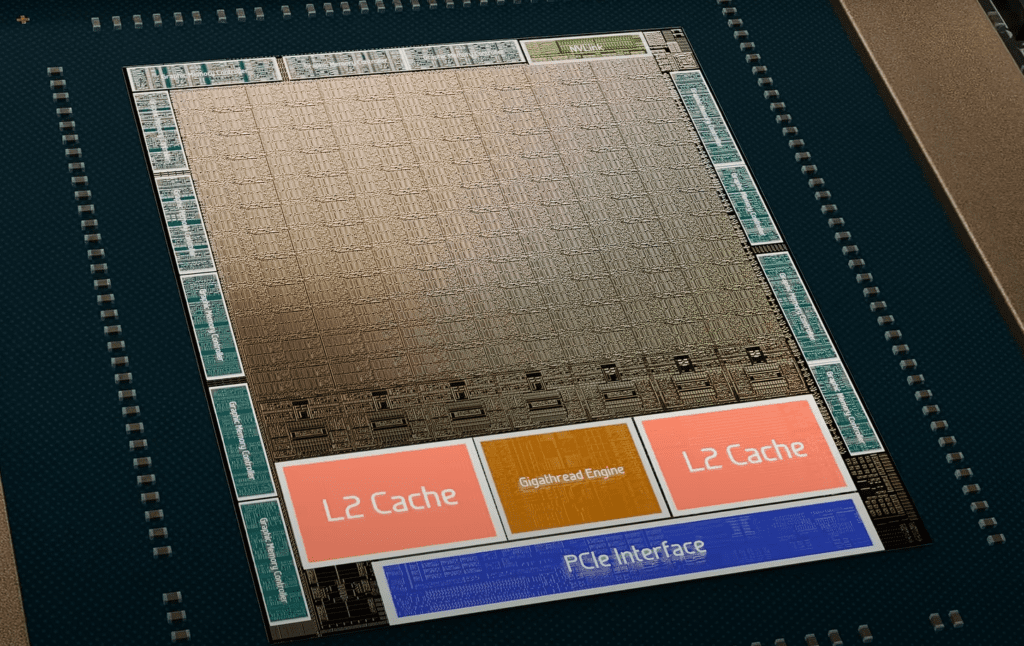

Physical Architecture of a Modern GPU (GA102 Example)

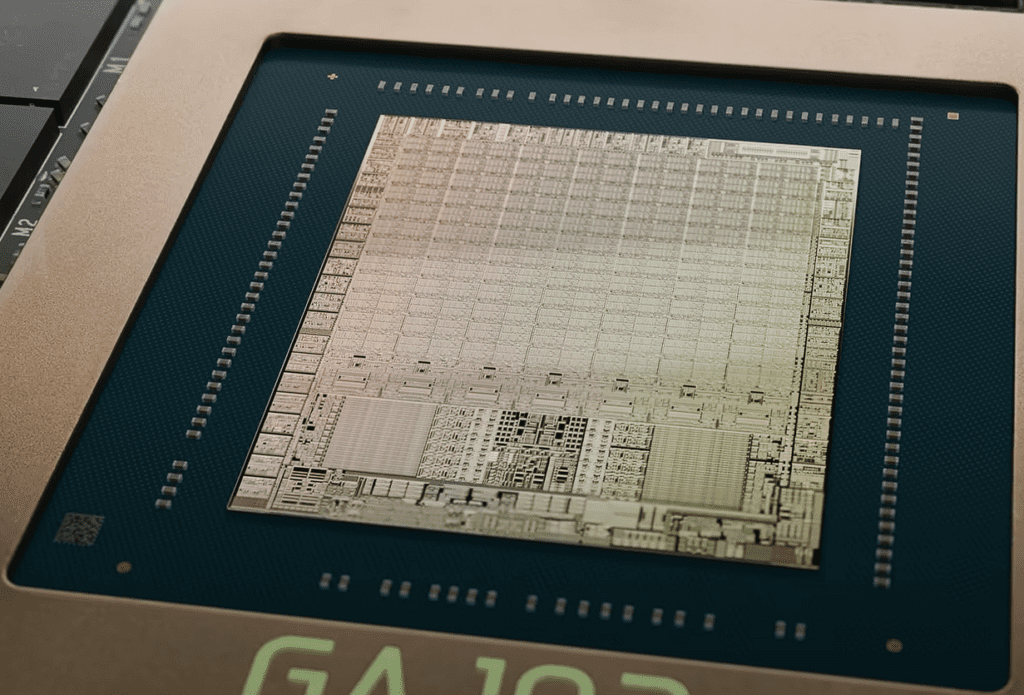

Modern GPUs, such as NVIDIA’s GA102 die used in the RTX 30-series, are marvels of semiconductor engineering. Let’s peel back the layers:

Billion-Transistor Die

At the heart of the GPU lies a silicon die spanning just a few square centimeters, yet containing 28.3 billion transistors. These transistors act as digital switches, opening and closing to represent binary 1s and 0s. Without this transistor density, executing trillions of operations per second would remain science fiction.

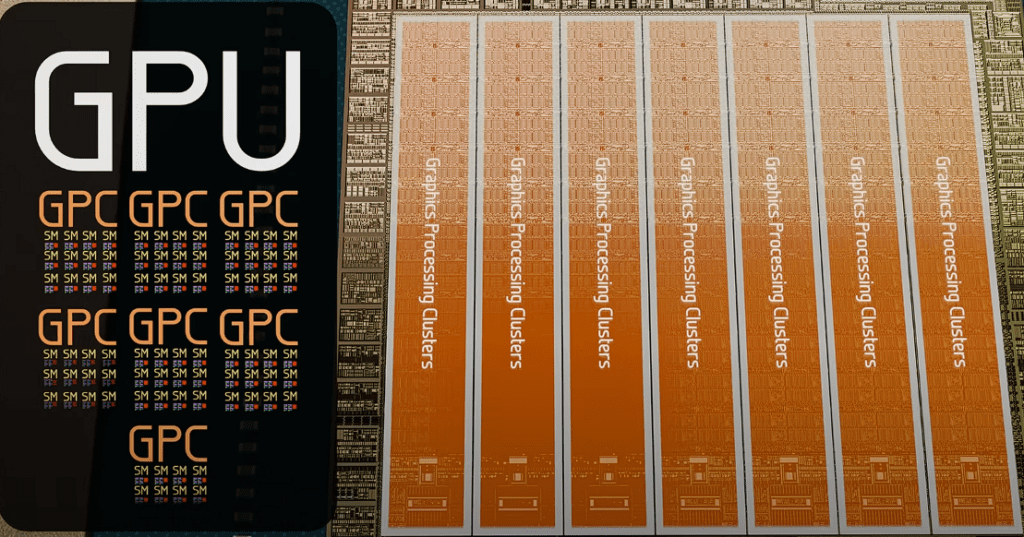

Hierarchical Compute Blocks

To organize computation at scale, the die is structured into nested units:

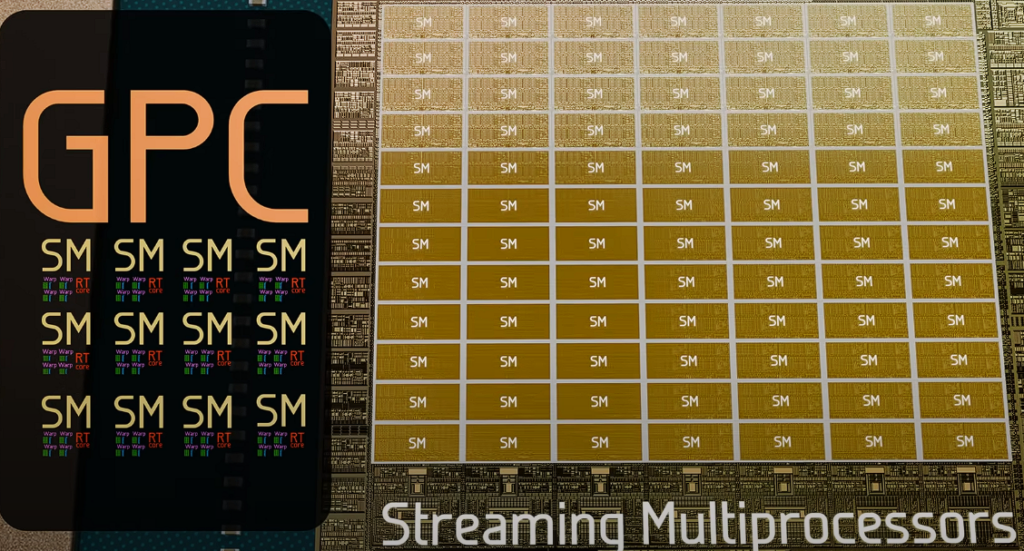

- Graphics Processing Clusters (GPCs): GA102 features 7 GPCs, each a self-contained block that processes a subset of the rendering workload.

- Streaming Multiprocessors (SMs): Each GPC houses 12 SMs, totaling 84 on the GA102. SMs are the workhorses that dispatch and execute threads.

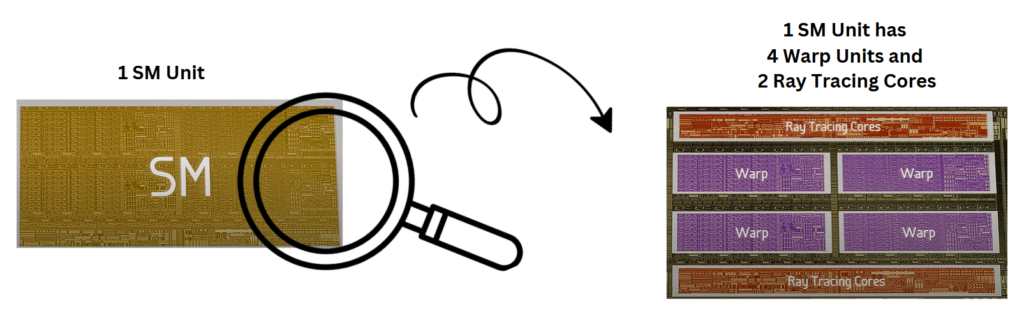

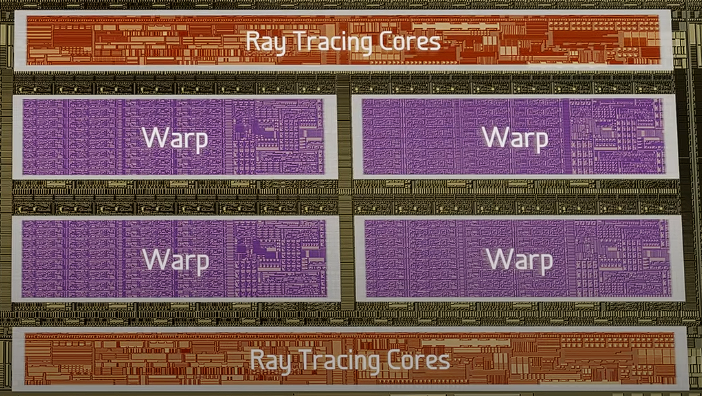

- Warps, CUDA Cores, Tensor & Ray-Tracing Cores:

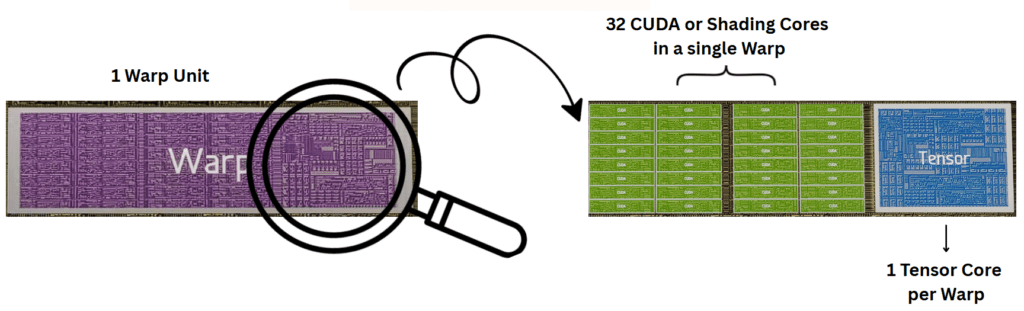

- Warp: A group of 32 threads executing the same instruction sequence in parallel.

- CUDA (Shading) Cores: 32 simple arithmetic units per warp, for a grand total of 10,752 on GA102. They handle basic operations like add, multiply, and bitwise logic, with blazing speed.

- Tensor Cores: One per warp (336 on GA102), specialized in matrix multiply-accumulate (essential for AI workloads).

- Ray-Tracing Cores (RT Cores): One per SM (84 total), dedicated to processing ray-tracing acceleration structures and intersection tests.

This hierarchical arrangement ensures work is subdivided and scheduled efficiently, maximizing utilization of the die’s compute resources.
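The totals in this hierarchy follow from multiplying down the levels. The sketch below assumes GA102’s layout of four processing blocks per SM, each holding 32 CUDA cores and one Tensor Core; the constant names are illustrative, not taken from any NVIDIA tool.

```python
# Full GA102 layout, multiplied down the hierarchy.
GPCS = 7                    # Graphics Processing Clusters
SMS_PER_GPC = 12            # Streaming Multiprocessors per GPC
BLOCKS_PER_SM = 4           # processing blocks (sub-partitions) per SM
CUDA_CORES_PER_BLOCK = 32
TENSOR_CORES_PER_BLOCK = 1
RT_CORES_PER_SM = 1

sms = GPCS * SMS_PER_GPC
cuda_cores = sms * BLOCKS_PER_SM * CUDA_CORES_PER_BLOCK
tensor_cores = sms * BLOCKS_PER_SM * TENSOR_CORES_PER_BLOCK
rt_cores = sms * RT_CORES_PER_SM

print(f"SMs={sms}, CUDA cores={cuda_cores:,}, Tensor cores={tensor_cores}, RT cores={rt_cores}")
# SMs=84, CUDA cores=10,752, Tensor cores=336, RT cores=84
```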

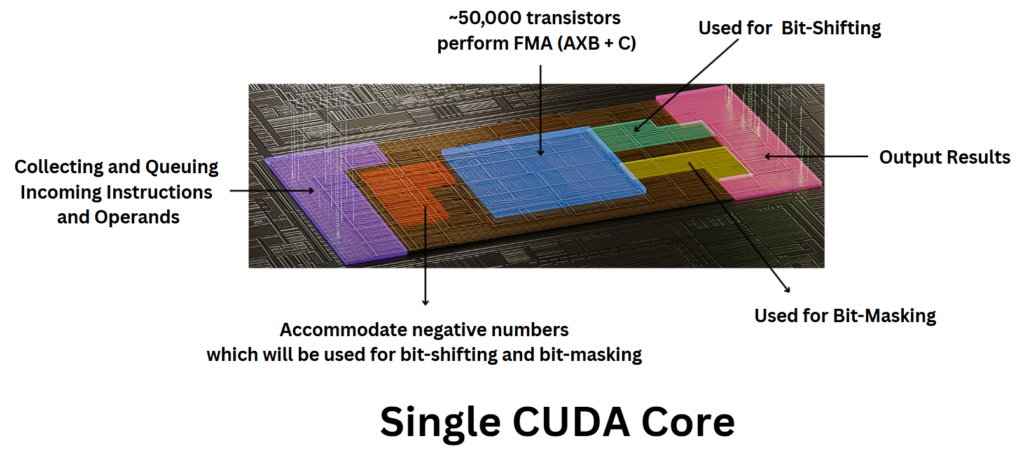

Microscopic View: Inside a CUDA Core

A single CUDA core is deceptively complex:

- Transistor Budget: A single CUDA core contains roughly 410,000 transistors. About 50,000 of them form a fused multiply-add (FMA) unit that computes A×B + C in one clock cycle; the rest implement additional logic for bit-shifting, masking, and control.

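- Clock Rate & Throughput: At the RTX 3090’s boost clock of about 1.7 GHz (1.695 GHz), each core completes about 1.7 billion FMAs per second. Because each FMA counts as two floating-point operations (a multiply and an add), the 10,496 active cores reach about 10,496 × 2 × 1.695 billion ≈ 35.6 trillion floating-point operations per second (FLOPS).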

- Special Function Units (SFUs): Four per processing block (16 per SM) handle complex operations such as reciprocals (used for division), square roots, and trigonometric functions that don’t map cleanly onto simple FMAs.
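The peak-throughput arithmetic can be checked directly. This sketch assumes the RTX 3090’s 1.695 GHz reference boost clock and counts each FMA as two floating-point operations (one multiply, one add), the usual convention behind quoted FLOPS figures:

```python
BOOST_CLOCK_HZ = 1.695e9   # RTX 3090 reference boost clock
ACTIVE_CORES = 10_496      # 82 of GA102's 84 SMs enabled, 128 cores each
FLOPS_PER_FMA = 2          # one multiply + one add

fmas_per_core_per_s = BOOST_CLOCK_HZ * 1   # one FMA per core per clock cycle
peak_flops = ACTIVE_CORES * fmas_per_core_per_s * FLOPS_PER_FMA

print(f"Peak FP32 throughput: {peak_flops / 1e12:.1f} TFLOPS")
# Peak FP32 throughput: 35.6 TFLOPS
```

This is a theoretical peak; real workloads sustain less, since not every core issues an FMA on every cycle.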

Memory Controllers, Caches & I/O

Surrounding the compute clusters are essential support circuits:

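- Memory Controllers: Twelve 32-bit memory controllers form a 384-bit GDDR6X interface. On the RTX 3090, whose memory runs at 19.5 Gb/s per pin, this yields a peak of about 936 GB/s (the RTX 3090 Ti’s 21 Gb/s memory reaches about 1 TB/s).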

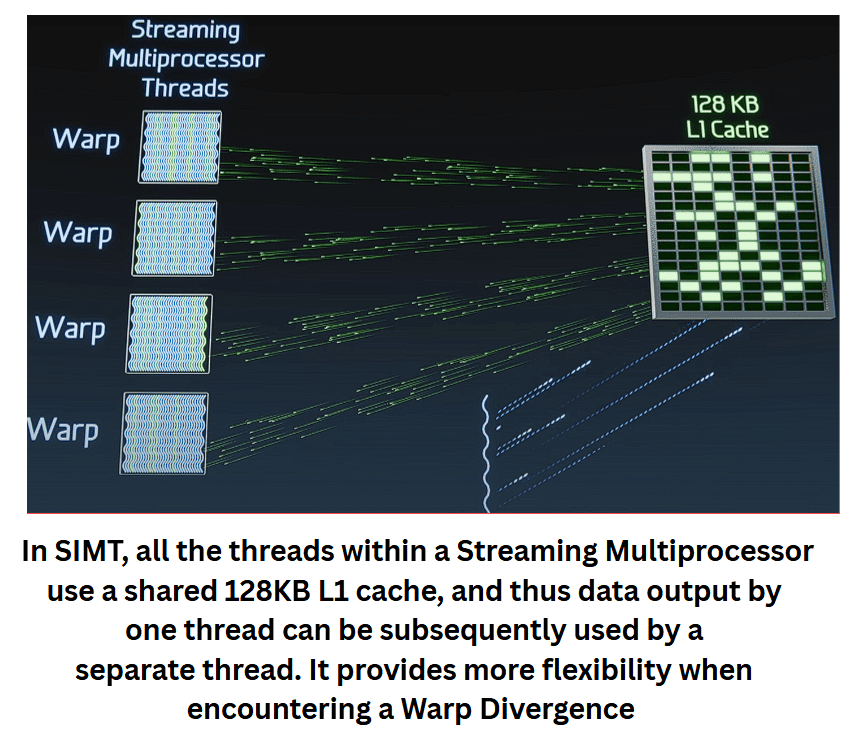

- On-Chip Cache: A 6 MB L2 SRAM cache (organized as two 3 MB halves) shared across all SMs, plus 128 KB of combined L1 cache/shared memory per SM, minimizes expensive DRAM round-trips.

- Gigathread Engine: Schedules work across all 7 Graphics Processing Clusters, distributing thread blocks to the Streaming Multiprocessors (SMs) inside them.

- PCIe & NVLink: High-speed serial links connect the GPU to the CPU/motherboard or other GPUs.

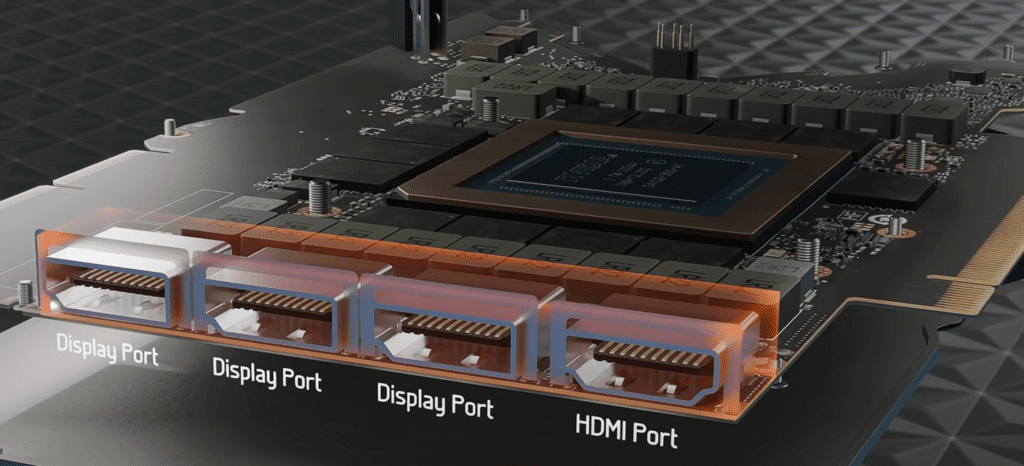

- Display Outputs: 1 HDMI and 3 DisplayPort connectors interface with monitors in real time.

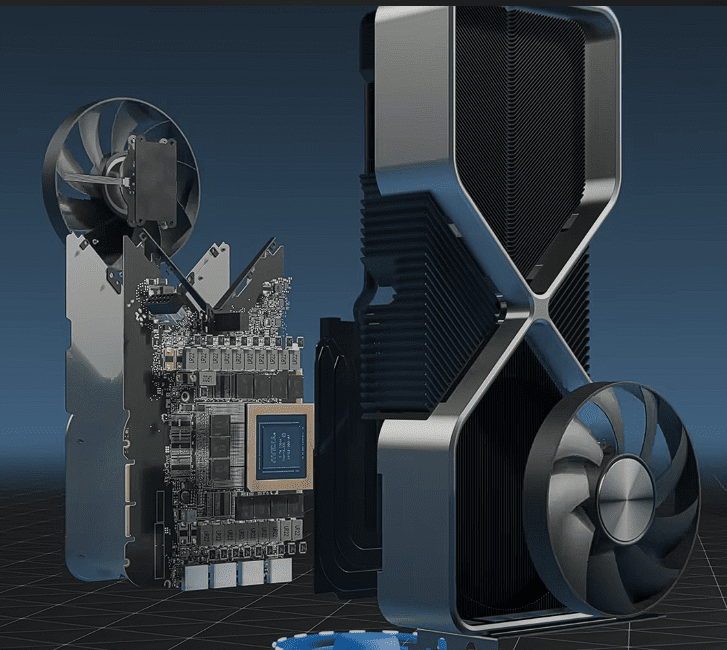

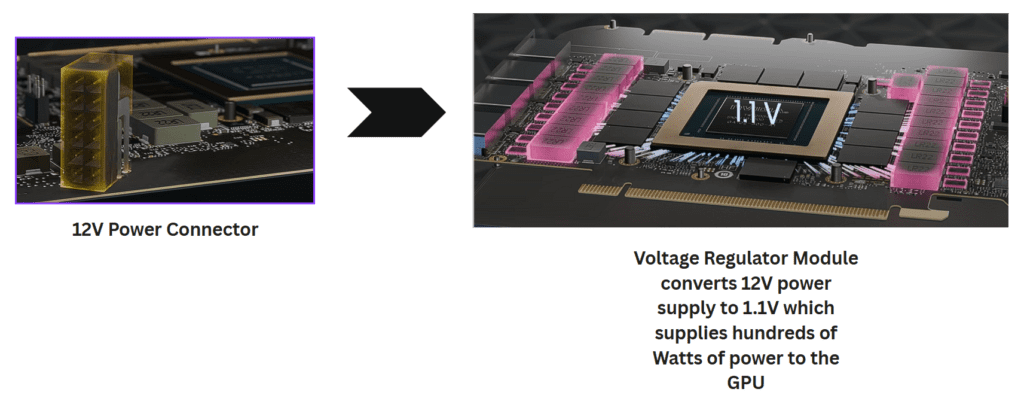

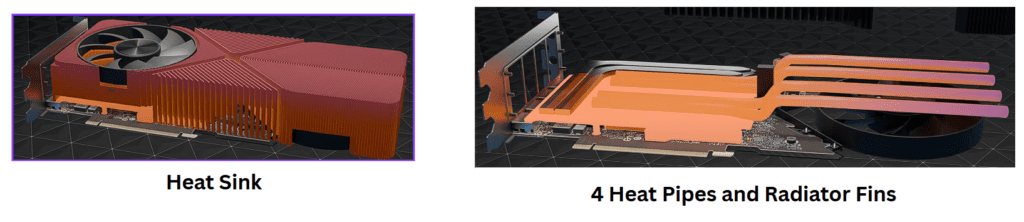

Power Delivery & Thermal Management

Feeding and cooling this beast requires engineering ingenuity:

- Voltage Regulator Module (VRM): Steps the 12 V PCIe/auxiliary input down to about 1.1 V, delivering hundreds of watts to the GPU.

- Heatsink & Fans: Massive aluminum fin stacks with copper heat pipes carry heat away from the die and transfer it to air pushed through the fins by one or more high-CFM fans.
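Because current equals power divided by voltage, stepping down to about 1.1 V turns hundreds of watts into hundreds of amps. A minimal check, assuming a hypothetical 300 W load on the GPU core rail and ignoring conversion losses:

```python
def rail_current_amps(power_w: float, voltage_v: float) -> float:
    """Current on a supply rail from I = P / V (ignores conversion losses)."""
    return power_w / voltage_v

CORE_POWER_W = 300.0  # hypothetical GPU core load, for illustration only

for label, volts in (("12 V input", 12.0), ("~1.1 V core", 1.1)):
    print(f"{label:>12}: {rail_current_amps(CORE_POWER_W, volts):6.1f} A")
```

Real converters lose some energy as heat, so the 12 V side draws somewhat more than the ideal 25 A.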

Binning: One Die, Multiple Products

Instead of discarding dies with minor defects, manufacturers bin them:

| Product | Active CUDA Cores | Description |
|---|---|---|
| RTX 3090 Ti | 10,752 (all 84 SMs) | Full, defect-free GA102 |
| RTX 3090 | 10,496 (2 disabled SMs) | Two SMs disabled after testing |
| RTX 3080 Ti | 10,240 (4 disabled SMs) | Four SMs disabled for a lower-tier SKU |
| RTX 3080 | 8,704 (16 disabled SMs) | Sixteen SMs disabled; the most cut-down GA102 SKU listed here |

Aside from core counts, each SKU varies clock frequencies, VRAM capacity (10–24 GB), and power targets.
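Each SKU’s core count follows from how many SMs are switched off. A small sketch, assuming 128 CUDA cores per SM (10,752 ÷ 84) and the disabled-SM counts from the table; the function name is hypothetical:

```python
FULL_GA102_SMS = 84
CUDA_CORES_PER_SM = 128   # 10,752 cores / 84 SMs on the full die

def active_cuda_cores(disabled_sms: int) -> int:
    """CUDA cores that remain after disabling `disabled_sms` SMs on a GA102 die."""
    if not 0 <= disabled_sms <= FULL_GA102_SMS:
        raise ValueError("disabled_sms must be between 0 and 84")
    return (FULL_GA102_SMS - disabled_sms) * CUDA_CORES_PER_SM

SKUS = {"RTX 3090 Ti": 0, "RTX 3090": 2, "RTX 3080 Ti": 4, "RTX 3080": 16}
for name, disabled in SKUS.items():
    print(f"{name:<12} {active_cuda_cores(disabled):>7,} CUDA cores")
```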

Feeding the Beasts: Graphics Memory (GDDR6X, GDDR7, and HBM)

GPUs are data-hungry machines and need a constant data feed to keep thousands of cores busy. The following leading DRAM architectures deliver this:

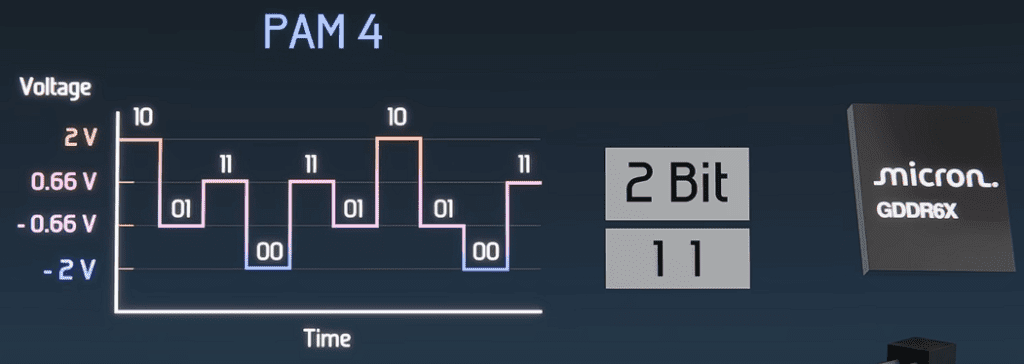

GDDR6X SDRAM

- Capacity & Configuration: The RTX 3090 uses 24 chips of 1 GB each, for 24 GB total.

- PAM-4 Signaling: Transmits 2 bits per pin per symbol using four distinct voltage levels, doubling the data rate at a given symbol rate without doubling the pin count.

- Bandwidth: Up to about 1.15 TB/s on a 384-bit bus at GDDR6X’s fastest 24 Gb/s speed grade.
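To see how PAM-4 carries two bits per symbol, here is a toy encoder and decoder. The Gray-coded level table is an assumption made for illustration (a common PAM-4 choice); it is not the exact scheme GDDR6X uses on the wire:

```python
# Assumed Gray-coded mapping: neighboring voltage levels differ by only one bit.
BITS_TO_LEVEL = {"00": 0, "01": 1, "11": 2, "10": 3}   # level 0 = lowest voltage
LEVEL_TO_BITS = {level: bits for bits, level in BITS_TO_LEVEL.items()}

def pam4_encode(bits: str) -> list[int]:
    """Pack a '0'/'1' string into PAM-4 symbols, 2 bits per symbol."""
    if len(bits) % 2:
        raise ValueError("PAM-4 needs an even number of bits")
    return [BITS_TO_LEVEL[bits[i:i + 2]] for i in range(0, len(bits), 2)]

def pam4_decode(levels: list[int]) -> str:
    """Turn a list of voltage levels back into the original bit string."""
    return "".join(LEVEL_TO_BITS[level] for level in levels)

data = "1101001011100001"
levels = pam4_encode(data)
print(len(data), "bits ->", len(levels), "symbols:", levels)
assert pam4_decode(levels) == data
```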

Together, the 24 chips transfer 384 bits at a time, a figure known as the bus width. A CPU memory channel, by comparison, is 64 bits wide; a DDR4-3200 channel peaks at 25.6 GB/s.
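Peak bandwidth is simply bus width times per-pin data rate, divided by 8 to convert bits to bytes. The sketch assumes the RTX 3090’s 19.5 Gb/s GDDR6X, GDDR6X’s fastest 24 Gb/s grade, and a single 64-bit DDR4-3200 CPU channel (3.2 Gb/s per pin):

```python
def peak_bandwidth_gb_s(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s = bus width (bits) x per-pin rate (Gb/s) / 8 bits per byte."""
    return bus_width_bits * gbps_per_pin / 8

configs = [
    ("RTX 3090, 384-bit @ 19.5 Gb/s", 384, 19.5),
    ("GDDR6X, 384-bit @ 24 Gb/s", 384, 24.0),
    ("DDR4-3200, 64-bit @ 3.2 Gb/s", 64, 3.2),
]
for label, width, rate in configs:
    print(f"{label:<32} {peak_bandwidth_gb_s(width, rate):8.1f} GB/s")
```

That gives 936 GB/s, about 1,152 GB/s (the 1.15 TB/s figure), and 25.6 GB/s respectively.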

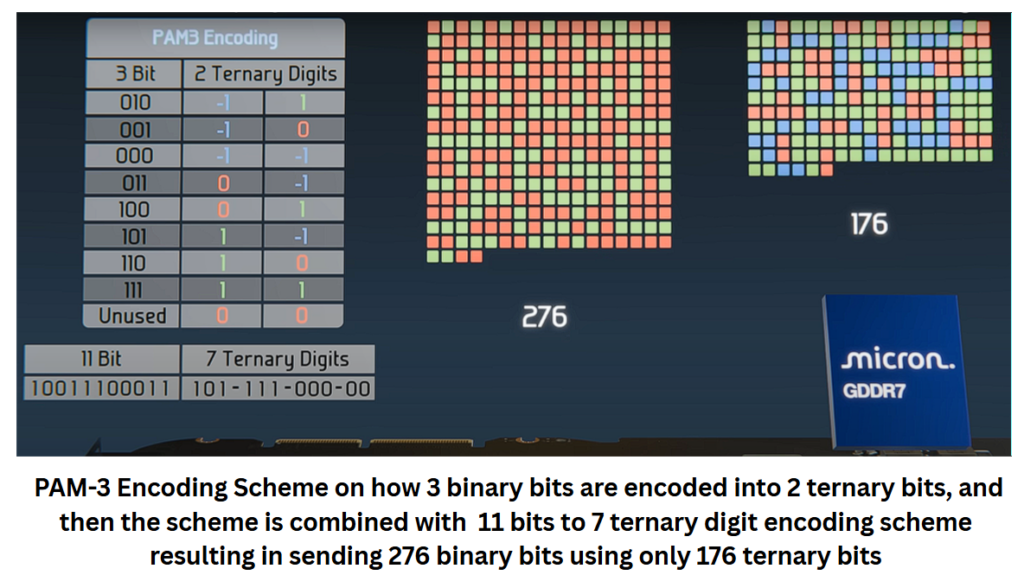

GDDR7 SDRAM

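PAM-3 Encoding: Three voltage levels (−1, 0, +1) carry about 1.5 bits per symbol, more than the 1 bit of binary (NRZ) signaling, while leaving wider voltage margins than PAM-4, which improves signal integrity and power efficiency. GDDR7 uses three different encoding schemes to pack binary bits into ternary symbols; for example, 3 bits (8 combinations) fit into 2 PAM-3 symbols (9 combinations).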
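The counting argument behind ternary packing is that two PAM-3 symbols have 3 × 3 = 9 combinations, enough for the 8 patterns of 3 bits. The code book below is hypothetical (it is not the JEDEC GDDR7 table) and only demonstrates that 3 bits round-trip through 2 symbols:

```python
from itertools import product

# Hypothetical 3-bit -> 2-symbol code book: 9 symbol pairs, 8 bit patterns,
# so one pair, (0, 0), is simply left unused.
PAIRS = [pair for pair in product((-1, 0, 1), repeat=2) if pair != (0, 0)]
ENCODE = {format(value, "03b"): PAIRS[value] for value in range(8)}
DECODE = {pair: bits for bits, pair in ENCODE.items()}

def pam3_encode(bits: str) -> list[int]:
    """Map every 3-bit group onto two PAM-3 symbols (voltage levels -1, 0, +1)."""
    if len(bits) % 3:
        raise ValueError("this sketch expects a multiple of 3 bits")
    symbols: list[int] = []
    for i in range(0, len(bits), 3):
        symbols.extend(ENCODE[bits[i:i + 3]])
    return symbols

def pam3_decode(symbols: list[int]) -> str:
    """Read symbols two at a time and look up the 3-bit group they encode."""
    return "".join(DECODE[(symbols[i], symbols[i + 1])] for i in range(0, len(symbols), 2))

data = "101110001"
symbols = pam3_encode(data)
print(len(data), "bits ->", len(symbols), "symbols")   # 9 bits -> 6 symbols
assert pam3_decode(symbols) == data
```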

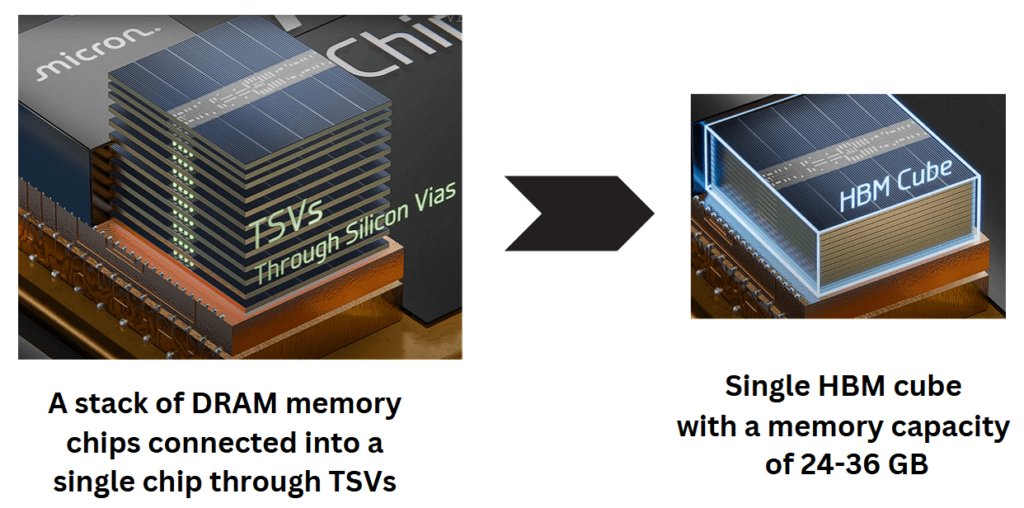

High-Bandwidth Memory (HBM3E)

High-Bandwidth Memory, such as Micron’s HBM3E, sits alongside AI processors. Each stack is built from DRAM dies placed on top of one another and connected by through-silicon vias (TSVs) into a single chip.

- Stacked DRAM Cubes: Connected via Through-Silicon Vias (TSVs).

- Capacity & Bandwidth: A single cube holds 24 GB (8-high) to 36 GB (12-high) and delivers over 1.2 TB/s through its 1,024-bit interface; eight 24 GB stacks give an accelerator 192 GB, which is ideal for AI workloads.
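Stack capacity and bandwidth can both be estimated from a few parameters. This sketch assumes 24 Gb (3 GB) DRAM dies, a 1,024-bit interface per stack, and a per-pin rate of 9.6 Gb/s; treat all three as illustrative inputs rather than a specific product’s datasheet:

```python
DIE_CAPACITY_GB = 3      # assumed 24 Gb (3 GB) DRAM die per layer
INTERFACE_BITS = 1024    # HBM interface width per stack
PIN_RATE_GBPS = 9.6      # assumed HBM3E per-pin data rate (Gb/s)

def stack_capacity_gb(layers: int) -> int:
    """Capacity of one stack = number of stacked DRAM dies x die capacity."""
    return layers * DIE_CAPACITY_GB

def stack_bandwidth_gb_s(pin_rate_gbps: float = PIN_RATE_GBPS) -> float:
    """Peak bandwidth of one stack in GB/s = interface bits x per-pin rate / 8."""
    return INTERFACE_BITS * pin_rate_gbps / 8

for layers in (8, 12):
    print(f"{layers}-high stack: {stack_capacity_gb(layers)} GB")
print(f"Per-stack bandwidth: {stack_bandwidth_gb_s():.1f} GB/s")
print(f"Eight 8-high stacks: {8 * stack_capacity_gb(8)} GB")
```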

Parallel Compute Models: From SIMD to SIMT

GPUs excel at embarrassingly parallel workloads, in which the same operation is applied independently to every element of a large dataset and no element depends on another’s result. Examples include:

- 3D vertex transformations

- Pixel-based image filters

- Cryptographic hash computations

- Neural network matrix operations

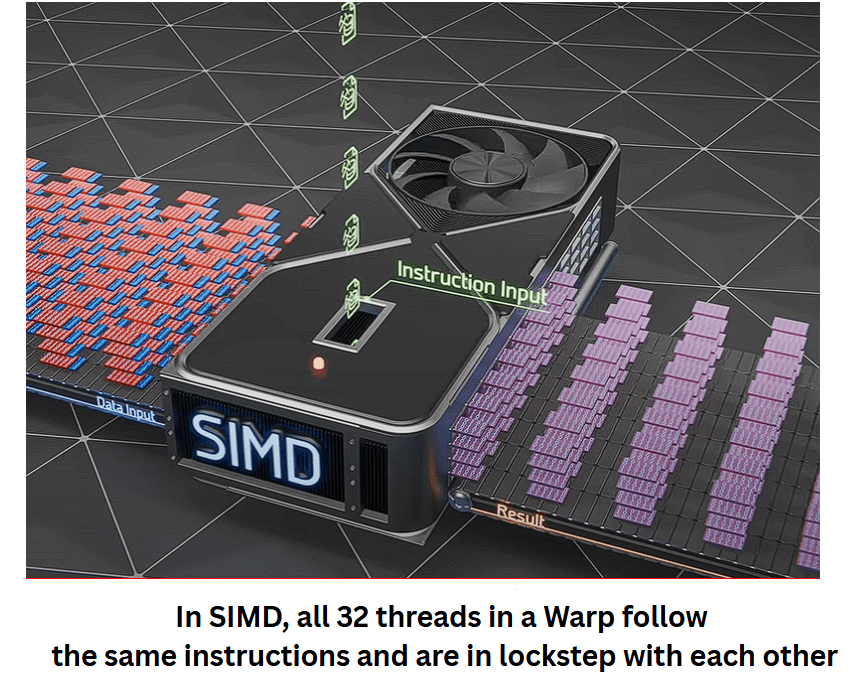

SIMD (Single Instruction, Multiple Data)

An early model in which a single instruction drives a fixed-width vector of data lanes in strict lock-step. It suits uniform workloads but is inflexible for branching: when lanes disagree on a branch, the hardware must evaluate both paths and mask out the unwanted results. Early GPUs executed in this style.
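Lock-step execution means a branch cannot skip lanes. The toy below, with a hypothetical 4-lane vector unit, evaluates both sides of `x if x >= 0 else -x` on every lane and then blends the results with a per-lane mask, which is the cost SIMD pays for divergent branches:

```python
LANES = 4  # fixed vector width of this toy SIMD unit

def simd_abs(vector: list[float]) -> list[float]:
    """Lock-step `x if x >= 0 else -x` on every lane of a fixed-width vector."""
    if len(vector) != LANES:
        raise ValueError(f"expected exactly {LANES} lanes")
    mask = [x >= 0 for x in vector]          # per-lane branch condition
    then_result = list(vector)               # ALL lanes execute the "then" path (identity)...
    else_result = [-x for x in vector]       # ...and ALL lanes execute the "else" path
    # Blend: each lane keeps the result its own condition selected.
    return [t if m else e for m, t, e in zip(mask, then_result, else_result)]

print(simd_abs([3.0, -2.0, 0.0, -7.5]))   # [3.0, 2.0, 0.0, 7.5]
```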

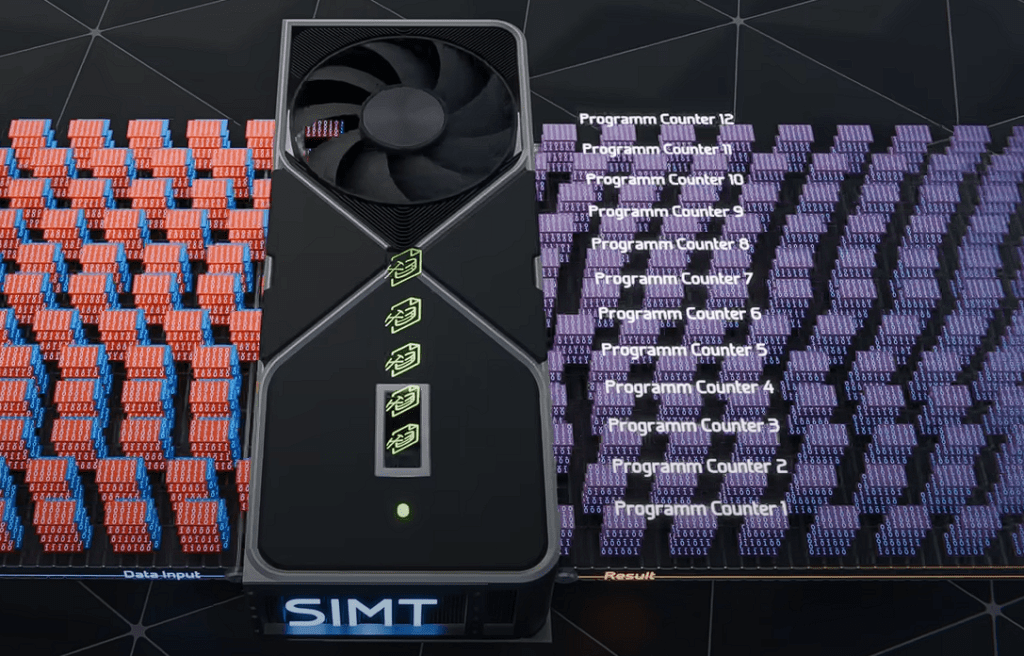

SIMT (Single Instruction, Multiple Threads)

NVIDIA’s SIMT model gives each thread its own registers and, since the Volta generation, its own program counter, enabling:

- Warp Divergence Handling: Threads in a warp can follow different control paths; the warp executes each path in turn with the threads not on that path masked off, and the threads later reconverge.

- Thread Hierarchy Components:

- Thread: The smallest unit of execution, with its own registers; its arithmetic runs on a CUDA core.

- Warp: 32 threads issuing the same instruction but capable of managed divergence.

- Thread Block: A collection of warps scheduled together onto a single SM, whose threads can share memory and synchronize.

- Grid: All thread blocks launched by a single kernel call.

- Gigathread Engine: The hardware scheduler that maps blocks to SMs and balances load.

This flexible model empowers developers to write general-purpose GPU code while retaining the massive parallel performance.
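Inside a kernel, each thread locates itself in this hierarchy from its block and thread indices. The sketch mimics the 1-D CUDA idiom `blockIdx.x * blockDim.x + threadIdx.x` in Python; the helper names and the example sizes (1,000 elements, 256-thread blocks) are assumptions for illustration:

```python
WARP_SIZE = 32

def thread_coordinates(global_id: int, block_dim: int) -> dict[str, int]:
    """Split a 1-D global thread index into block, thread-in-block, warp and lane."""
    block_idx, thread_idx = divmod(global_id, block_dim)
    warp, lane = divmod(thread_idx, WARP_SIZE)
    return {"block": block_idx, "thread": thread_idx, "warp": warp, "lane": lane}

def global_thread_id(block_idx: int, block_dim: int, thread_idx: int) -> int:
    """Inverse mapping, mirroring CUDA's blockIdx.x * blockDim.x + threadIdx.x."""
    return block_idx * block_dim + thread_idx

n, block_dim = 1000, 256
grid_dim = (n + block_dim - 1) // block_dim   # ceiling division: enough blocks to cover n
print("blocks in grid:", grid_dim)            # blocks in grid: 4
for gid in (0, 31, 32, 300, 999):
    print(gid, thread_coordinates(gid, block_dim))
```

With 4 blocks of 256 threads the grid holds 1,024 threads; in real kernels the 24 extra threads are usually skipped with a bounds check such as `if (i < n)`.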

Real-World GPU Workloads

3D Graphics Rendering

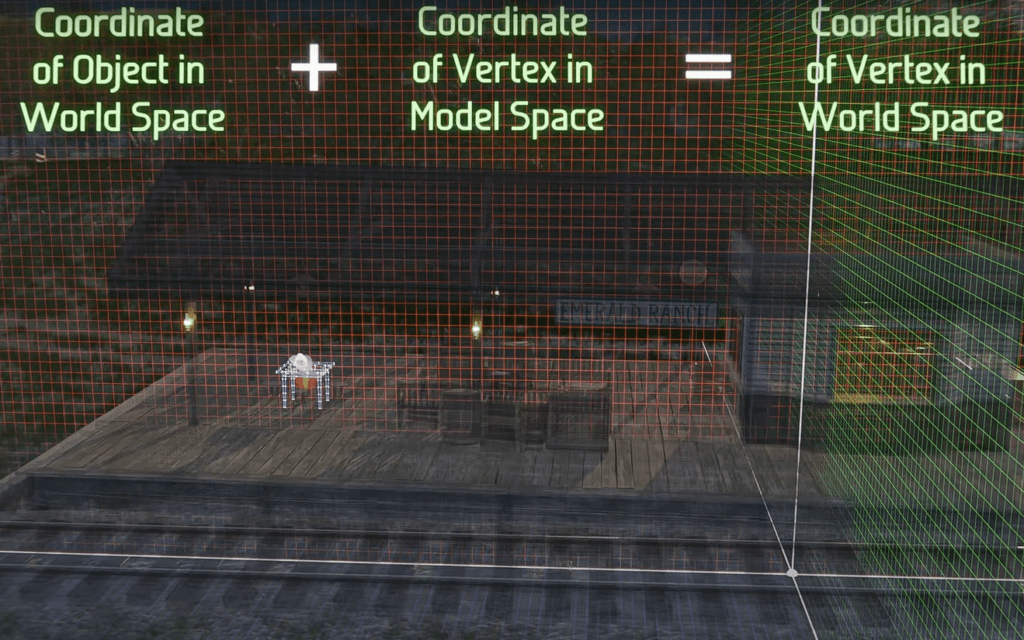

Consider transforming a cowboy hat model with 14,000 vertices:

- Model Space: Vertices relative to the object’s local origin.

- World Space Conversion: Add the object’s world-position vector to each vertex coordinate. For this hat alone that is 14,000 × 3 = 42,000 independent additions, and across a full scene the count runs into the millions: an embarrassingly parallel workload.

- Pipeline Stages: Rotation, scaling, projection, rasterization, and shading, with each step parallelized across thousands of vertices or fragments.
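The world-space step is a per-vertex addition with no dependencies between vertices. A minimal sketch with a handful of hypothetical vertices; the loop runs sequentially here, but because each output depends only on its own input, a GPU can assign one thread per vertex:

```python
Vertex = tuple[float, float, float]

def model_to_world(vertices: list[Vertex], position: Vertex) -> list[Vertex]:
    """Translate model-space vertices into world space by adding the object's position."""
    px, py, pz = position
    # Each output vertex depends only on its own input vertex.
    return [(x + px, y + py, z + pz) for (x, y, z) in vertices]

hat = [(0.0, 0.0, 0.0), (0.1, 0.25, 0.0), (-0.1, 0.25, 0.05)]  # hypothetical vertices
world_hat = model_to_world(hat, (10.0, 0.0, -5.0))
print(world_hat)
print("additions for a 14,000-vertex model:", 14_000 * 3)
```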

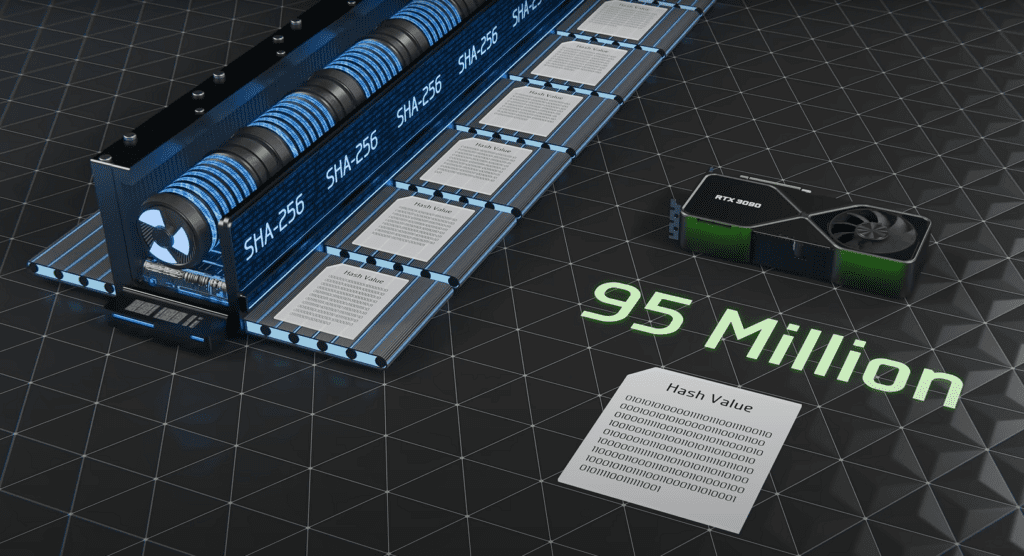

Cryptocurrency Mining

GPUs once churned through about 95 million SHA-256 hashes per second, trying different nonce values in parallel. Modern ASICs now dominate at around 250 trillion hashes per second, showing how specialized hardware can eclipse general-purpose GPUs.
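The nonce search is parallel because each candidate is hashed independently. Here is a toy proof-of-work loop, assuming a made-up header and a deliberately easy target of 12 leading zero bits; real Bitcoin mining uses an 80-byte block header, a far harder target, and a different byte order for the comparison:

```python
import hashlib
from typing import Optional

def double_sha256(data: bytes) -> bytes:
    """SHA-256 applied twice, as Bitcoin-style proof of work does."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()

def find_nonce(header: bytes, zero_bits: int, max_nonce: int = 2**32) -> Optional[int]:
    """Return the first nonce whose double-SHA-256 hash has `zero_bits` leading zero bits."""
    target = 1 << (256 - zero_bits)
    for nonce in range(max_nonce):
        # Every nonce is tested independently: a GPU can give each thread its own nonce.
        digest = double_sha256(header + nonce.to_bytes(4, "little"))
        if int.from_bytes(digest, "big") < target:
            return nonce
    return None

header = b"hypothetical block header"
nonce = find_nonce(header, zero_bits=12)
print(nonce, double_sha256(header + nonce.to_bytes(4, "little")).hex())
```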

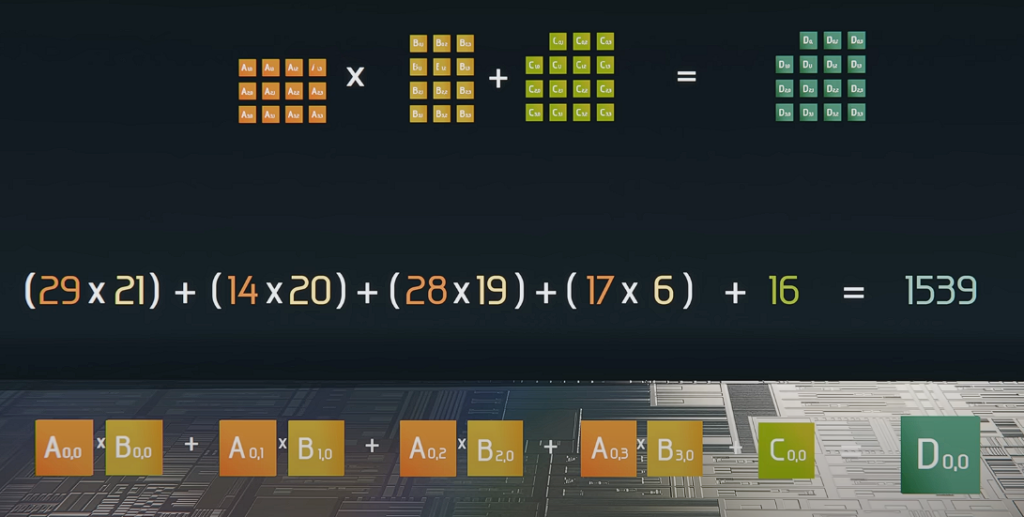

Neural Network Training & Inference (Tensor Cores)

Tensor Cores perform D = A×B + C matrix operations in hardware, enabling:

- Mixed Precision: Multiplying in low precision (FP16, BF16, TF32, or INT8) and accumulating in higher precision (often FP32) boosts throughput while preserving accuracy.

- Scale: Trillions to quadrillions of matrix ops per training pass, which is critical for deep learning models like transformers and convolutional networks.
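The D = A×B + C operation can be written out in a few lines. This sketch rounds the A and B inputs to FP16 with Python’s `struct` half-precision format and accumulates in Python’s float (FP64, standing in for the FP32 accumulator real hardware typically uses); it models the arithmetic only, not how Tensor Cores schedule matrix tiles:

```python
import struct

def to_fp16(x: float) -> float:
    """Round a float to IEEE 754 half precision (FP16) and convert it back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def mma(a: list[list[float]], b: list[list[float]], c: list[list[float]]) -> list[list[float]]:
    """D = A x B + C with FP16-rounded inputs and a wider accumulator seeded with C."""
    rows, inner, cols = len(a), len(b), len(b[0])
    d = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            acc = c[i][j]  # accumulation starts from C
            for k in range(inner):
                acc += to_fp16(a[i][k]) * to_fp16(b[k][j])
            d[i][j] = acc
    return d

A = [[1.0, 2.0], [3.0, 4.0]]
B = [[1.0, 0.0], [0.0, 1.0]]
C = [[0.5, 0.5], [0.5, 0.5]]
print(mma(A, B, C))   # [[1.5, 2.5], [3.5, 4.5]]
print(to_fp16(0.1))   # 0.0999755859375 (FP16 cannot represent 0.1 exactly)
```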

Conclusion

Modern GPUs represent a fusion of semiconductor innovation, parallel computing theory, and practical engineering. From billions of transistors on a silicon die to hierarchical scheduling units and specialized memory technologies, GPUs deliver computational power once reserved for supercomputers. Whether rendering virtual worlds, accelerating AI model training, or simulating scientific phenomena, GPUs continue to redefine the boundaries of what’s possible in computing. As GPU architectures evolve, embracing new memory standards, more flexible parallel models, and even tighter integration with CPUs, the pace of innovation will only accelerate.