As Machine Learning and AI technologies continue to advance, the need for efficient and secure methods to store, share, and deploy trained models becomes increasingly critical. Model weights file formats play a vital role in this process. These formats preserve a model’s learned parameters, enable reproducibility, and facilitate deployment across diverse environments and platforms. In this comprehensive blog post, we delve into the most popular model weight file formats in the industry, examining their origins, structures, use cases, and strengths.

- Why Model Weights File Formats Matter

- Overview of Popular Model Weights File Formats

- The Role of Pickle in Model Weights File Formats

- The Role of Protocol Buffers in Model Weights File Formats

- Detailed Exploration of Model Weights File Formats

- Choosing the Right Format

- Conclusion

Why Model Weights File Formats Matter

Model weight formats are more than just data containers. They:

- Enable model portability and interoperability across tools and frameworks.

- Preserve training progress for checkpointing and resumption.

- Support deployment in resource-constrained environments.

- Ensure security and integrity when sharing models.

Each format is designed with specific goals and trade-offs in mind, influencing how and where it is used.

Overview of Popular Model Weights File Formats

Below is a summarized comparison of widely used model weight formats:

| Format | Frameworks | Primary Use Case | Key Features |
|---|---|---|---|
| .pt / .pth | PyTorch | Training and inference | Flexible, framework-native, pickle-based |
| .ckpt | TensorFlow | Checkpointing and training resumption | Robust, efficient for large models |
| .h5 | Keras / TensorFlow | Saving full models in one file | Includes model, weights, and optimizer state |
| .onnx | Cross-platform | Model interoperability and deployment | Open standard, hardware-optimized inference |
| .safetensors | Hugging Face (PyTorch and others) | Secure and fast model sharing | No code execution on load, zero-copy loading |
| .gguf | GGML-based (llama.cpp) | Efficient LLM inference | Quantization-ready, CPU/GPU optimized |
| .tflite | TensorFlow Lite | Mobile and edge inference | Lightweight, hardware-accelerated |
| .engine | TensorRT | GPU inference optimization | High-performance, precision-tunable deployment |
| .mlmodel | Core ML (Apple) | iOS/macOS deployment | Apple-native, optimized for Apple Silicon |
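Several of these formats begin with a recognizable signature, so you can often tell them apart from their first few bytes. The sketch below is a heuristic written for this post, not part of any framework, and `sniff_weights_format` is a hypothetical helper name. It assumes PyTorch's zip-based torch.save layout (the default since PyTorch 1.6), the standard HDF5 signature, the ASCII `GGUF` magic, TensorFlow Lite's `TFL3` FlatBuffer identifier at byte offset 4, and safetensors' 8-byte header-length prefix followed by a JSON header. Protobuf-based files (.onnx, .pb, .mlmodel) carry no magic number, so it reports them as unknown.

```python
import struct

def sniff_weights_format(header: bytes) -> str:
    """Best-effort guess of a weights file's container from its first bytes."""
    if header.startswith(b"\x89HDF\r\n\x1a\n"):
        return "hdf5"            # Keras .h5
    if header.startswith(b"GGUF"):
        return "gguf"            # llama.cpp / GGML
    if header.startswith(b"PK\x03\x04"):
        return "zip"             # torch.save archives (.pt / .pth)
    if len(header) >= 8 and header[4:8] == b"TFL3":
        return "tflite"          # FlatBuffer with the TFLite file identifier
    if len(header) >= 9:
        # safetensors: u64 little-endian header length, then a JSON object.
        (n,) = struct.unpack("<Q", header[:8])
        if n > 1 and header[8:9] == b"{":
            return "safetensors"
    if header[:1] == b"\x80":
        return "pickle"          # pickle protocol 2+, e.g. legacy .pt or .pkl
    return "unknown"             # includes raw Protobuf (.onnx, .pb, .mlmodel)
```

In practice you would pass it the result of `open(path, "rb").read(16)`. A match is only a hint: the file extension and the loading library remain the authority.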

The Role of Pickle in Model Weights File Formats

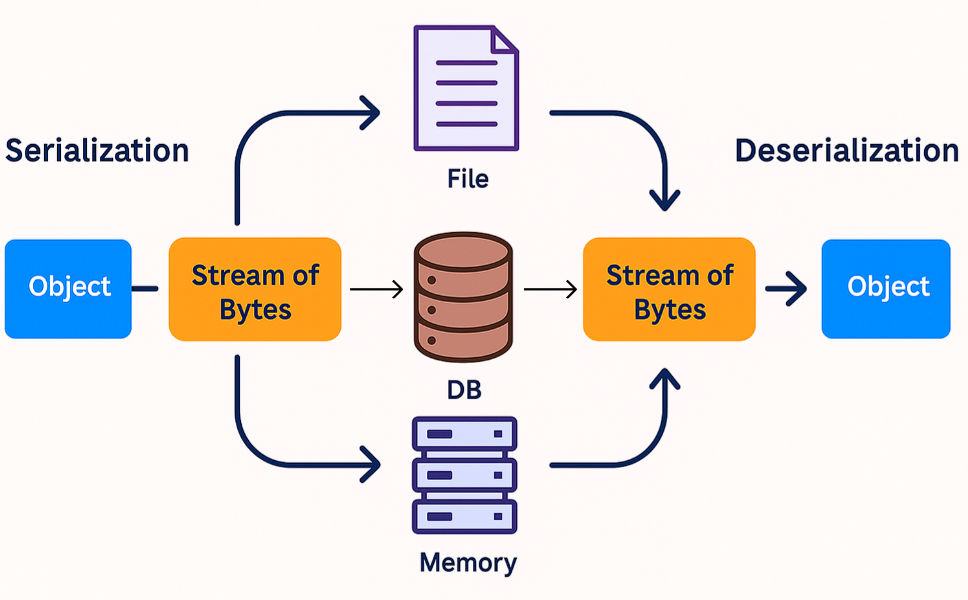

Python’s pickle module is foundational in many machine learning file formats, particularly within the PyTorch and broader Python ecosystem. Though not a formal model format itself, pickle is often the underlying mechanism used to serialize and deserialize model weights and configurations.

What is Pickle?

Pickle is a module in Python's standard library that converts Python objects into a byte stream (serialization) and reconstructs them from it (deserialization). It allows objects such as model weights, entire models, or training histories to be saved to disk.
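A minimal round trip with the standard pickle module looks like this. The dictionary is a hypothetical stand-in for a model's weights; real frameworks store tensor objects rather than nested lists, but the save/load mechanics are the same.

```python
import os
import pickle
import tempfile

# Hypothetical "state dict": parameter names mapped to plain Python values.
state = {
    "linear.weight": [[0.5, -1.25], [2.0, 0.75]],
    "linear.bias": [0.1, -0.2],
    "epoch": 3,
}

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "weights.pkl")
    with open(path, "wb") as f:
        pickle.dump(state, f)        # serialize: Python objects -> bytes on disk
    with open(path, "rb") as f:
        restored = pickle.load(f)    # deserialize: bytes -> new Python objects

print(restored == state)   # True: same contents
print(restored is state)   # False: a fresh object graph was rebuilt
```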

Where is Pickle Used?

- PyTorch: The .pt and .pth formats use pickle to serialize model state dictionaries or entire models.

- Scikit-learn: Models are often saved as .pkl files using pickle.dump().

- XGBoost & LightGBM: While they support native formats, pickle is sometimes used for quick saving in Python environments.

Pros:

- Native to Python: Easy to use, especially for Python developers.

- Flexible: Can store virtually any Python object, including complex models.

Cons:

- Security Risk: Loading a pickled file can execute arbitrary code if the file is from an untrusted source. This makes it unsuitable for public sharing or web-facing applications.

- Lack of Interoperability: Pickled files cannot be easily loaded outside Python or across different framework versions.
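The security risk comes from how unpickling works: an object can define `__reduce__` to tell pickle which callable to invoke, and with which arguments, when the object is rebuilt. The sketch below uses a hypothetical class and a harmless `eval` call to show that the callable runs inside `pickle.loads`, before your code ever sees the result. A real attacker would substitute something like `os.system`.

```python
import pickle

class NotReallyWeights:
    """Hypothetical object whose unpickling runs a chosen callable."""

    def __reduce__(self):
        # pickle will call eval("6 * 7") at load time instead of rebuilding
        # this object. Any importable callable could be used here.
        return (eval, ("6 * 7",))

payload = pickle.dumps(NotReallyWeights())
result = pickle.loads(payload)   # eval executes during deserialization
print(result)                    # 42, not a NotReallyWeights instance
```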

The Role of Protocol Buffers in Model Weights File Formats

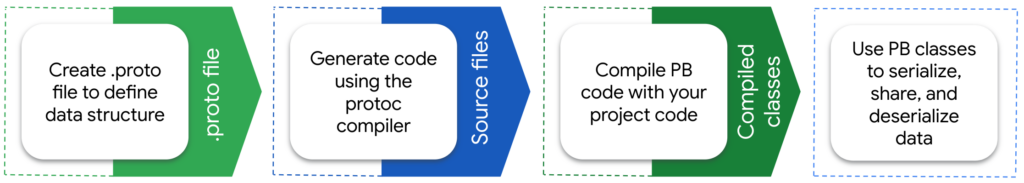

Developed by Google, Protocol Buffers (protobuf) is a foundational encoding format, not typically a file extension used directly by developers to save or load model weights. It offers a compact and efficient binary serialization mechanism, making it ideal for representing structured data like model architectures and weights.
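Much of Protobuf's compactness comes from its wire format: integers are written as base-128 "varints", and each field is prefixed by a small tag that combines the field number with a wire type. The sketch below hand-encodes a single integer field to show that layout. It is illustrative only; the function names are hypothetical, and real code should use classes generated by the official protobuf tooling.

```python
from typing import Tuple

def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint (7 bits per byte)."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)

def encode_varint_field(field_number: int, value: int) -> bytes:
    """Tag = (field_number << 3) | wire_type, where wire type 0 means varint."""
    return encode_varint((field_number << 3) | 0) + encode_varint(value)

def decode_varint(data: bytes, pos: int = 0) -> Tuple[int, int]:
    """Return (value, position just after the varint)."""
    result, shift = 0, 0
    while True:
        byte = data[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:
            return result, pos
        shift += 7

print(encode_varint_field(1, 150).hex())  # 089601
```

Field 1 holding the value 150 takes just three bytes (08 96 01), which is the same worked example used in the Protobuf encoding documentation.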

Where Protocol Buffers are Used:

- TensorFlow

.pbfiles: These are frozen models serialized using Protobuf, combining both architecture and trained parameters for deployment. - ONNX (

.onnx): The entire ONNX standard is built upon the Protobuf serialization schema. - Apple Core ML (

.mlmodel): Uses Protobuf under a custom schema to define model components.

Detailed Exploration of Model Weights File Formats

.pt / .pth (PyTorch)

PyTorch’s native model weight formats are among the most widely used in research and industry today. Introduced by Facebook AI in 2016, .pt and .pth formats store either the entire model or just its state dictionary (weights and biases). They rely on Python’s pickle module for serialization.

Key Characteristics:

- Highly flexible, making it easy to save and load models for research workflows.

- Commonly used with Hugging Face models, especially for transformer-based architectures.

- .pth and .pt are functionally identical; naming is a matter of convention.

Considerations:

- While widely supported within PyTorch, these formats are not natively portable to other frameworks.

- Pickle-based loading poses potential security risks when files come from untrusted sources.
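A common mitigation is to restrict which globals the unpickler may resolve, an approach described in the pickle module documentation under "Restricting Globals". PyTorch's `torch.load(..., weights_only=True)`, which recent PyTorch versions enable by default, applies the same allowlist idea with its own unpickler. The sketch below is a simplified standard-library analogue, not PyTorch's implementation; `AllowlistUnpickler`, `safe_loads`, and `Sneaky` are hypothetical names.

```python
import collections
import io
import pickle

class AllowlistUnpickler(pickle.Unpickler):
    """Unpickler that resolves only explicitly allowed globals."""

    ALLOWED = {("collections", "OrderedDict")}

    def find_class(self, module: str, name: str):
        if (module, name) in self.ALLOWED:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"blocked global {module}.{name}")

def safe_loads(data: bytes):
    return AllowlistUnpickler(io.BytesIO(data)).load()

# A state-dict-like object built only from allowed types loads normally.
state = collections.OrderedDict([("fc.weight", [0.5, -0.5]), ("fc.bias", [0.0])])
assert safe_loads(pickle.dumps(state)) == state

class Sneaky:
    """Hypothetical payload that would call eval() when unpickled."""

    def __reduce__(self):
        return (eval, ("6 * 7",))

try:
    safe_loads(pickle.dumps(Sneaky()))
    blocked = False
except pickle.UnpicklingError as err:
    blocked = True
    print(err)  # blocked global builtins.eval
```

An allowlist narrows the attack surface but is only as safe as the classes it admits, which is one reason tensor-only formats such as .safetensors were introduced.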

.ckpt (TensorFlow)

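The checkpoint format in TensorFlow, denoted by .ckpt, saves model weights together with training state such as optimizer variables. A checkpoint is usually split across several files sharing a prefix: one or more .data shards (e.g., model.ckpt.data-00000-of-00001), an .index file, and, for legacy TensorFlow 1.x models, a .meta file holding the graph.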

Key Characteristics:

- Enables resuming training exactly where it left off.

- Ideal for training large models and storing intermediate results.

- Widely used in models like BERT and T5 from Google.

Considerations:

- Checkpoints are not well-suited for deployment; models are typically converted to TensorFlow's SavedModel format or to .tflite.

- File management can be more complex due to the multiple-part structure.

- Does not contain the model architecture; it requires the original code to rebuild the model structure.
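Why store optimizer state and not just weights? For optimizers with internal state, such as SGD with momentum, resuming from the weights alone changes the training trajectory. The sketch below uses plain Python floats on a toy loss (w - 3)^2 instead of TensorFlow's tf.train.Checkpoint API; the `state` dictionary is a hypothetical stand-in for what a checkpoint captures.

```python
import copy

def grad(w: float) -> float:
    """Gradient of the toy loss (w - 3)^2."""
    return 2.0 * (w - 3.0)

def train(state: dict, steps: int, lr: float = 0.1, beta: float = 0.9) -> dict:
    """SGD with momentum; `state` holds the weight AND the optimizer's velocity."""
    for _ in range(steps):
        state["velocity"] = beta * state["velocity"] + grad(state["w"])
        state["w"] -= lr * state["velocity"]
        state["step"] += 1
    return state

initial = {"w": 0.0, "velocity": 0.0, "step": 0}

# Reference run: 20 uninterrupted steps.
uninterrupted = train(copy.deepcopy(initial), 20)

# "Save" a full checkpoint after 10 steps, then resume from it for 10 more.
checkpoint = copy.deepcopy(train(copy.deepcopy(initial), 10))
resumed = train(copy.deepcopy(checkpoint), 10)

# Resume from the weight alone, resetting the momentum buffer to zero.
weights_only = train({"w": checkpoint["w"], "velocity": 0.0, "step": checkpoint["step"]}, 10)

print(resumed == uninterrupted)                 # True
print(weights_only["w"] == uninterrupted["w"])  # False
```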

.h5 (HDF5)

Adopted early by Keras, the .h5 (HDF5) format allows the storage of the entire model architecture, weights, and optimizer state in a single file. This format is intuitive for sharing and inspecting model components.

Key Characteristics:

- User-friendly and well-documented.

- Suitable for small to medium-sized models.

- Offers a clear structure to inspect and modify weights or model layers.

Considerations:

- Increasingly replaced by TensorFlow’s SavedModel format.

- Not as efficient for very large models or complex serialization needs.

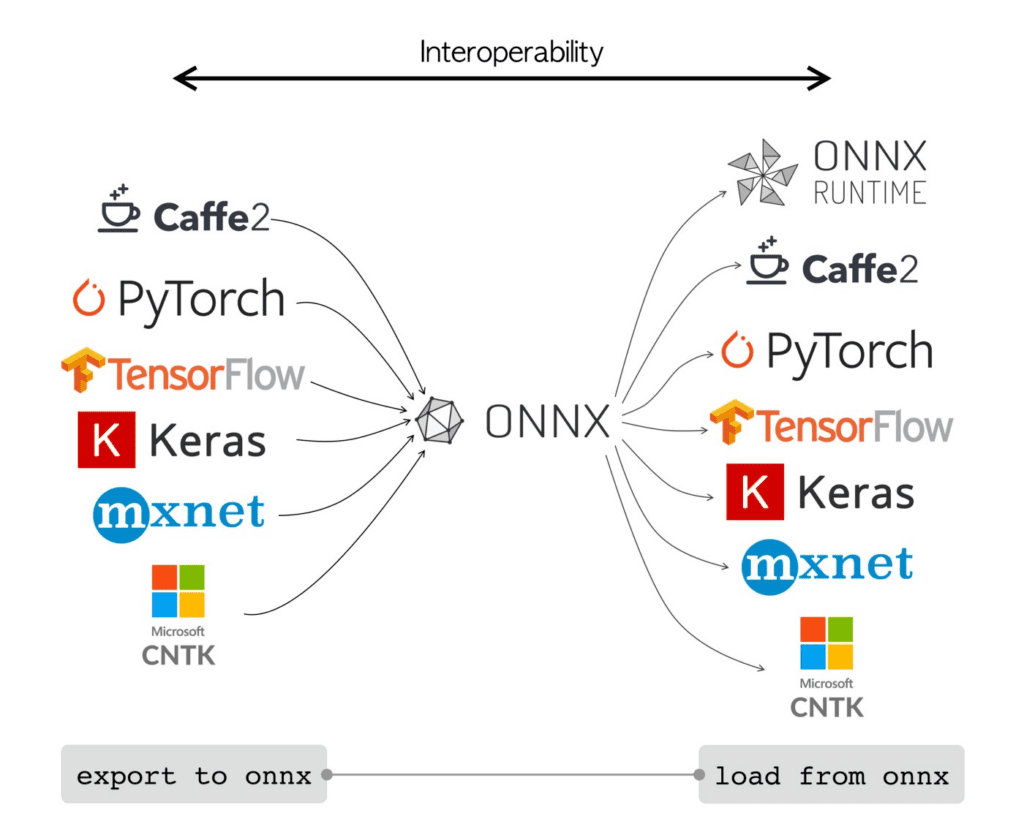

.onnx (Open Neural Network Exchange)

ONNX is an open-source format jointly developed by Microsoft and Facebook. It allows models trained in one framework (like PyTorch) to be run in another (like TensorFlow or ONNX Runtime), promoting cross-platform compatibility.

Key Characteristics:

- Encodes model architecture and weights in a Protobuf (protocol buffer) format.

- Widely supported across frameworks and hardware accelerators.

- Popular for deploying models in production environments.

Considerations:

- Custom layers and operations may require ONNX-compatible rewrites or extensions.

- Conversion tools (e.g., torch.onnx.export) can have limitations or require manual adjustments.

.safetensors

Created by Hugging Face, .safetensors addresses the security risks of pickle-based formats like .pt. It is a binary format optimized for safe and fast tensor storage, especially useful when models are shared publicly.

Key Characteristics:

- Enables zero-copy loading, which significantly reduces loading time.

- Eliminates code execution risk during deserialization.

- Became the default for Hugging Face’s Transformers library.

Considerations:

- Stores only tensors; model architecture must be defined separately in code.

- Less flexible for full model serialization, but ideal for safe inference sharing.
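The safetensors layout is simple enough to sketch with the standard library: an 8-byte little-endian integer N, an N-byte JSON header mapping each tensor name to its dtype, shape, and byte offsets, then the raw tensor bytes. Loading therefore only parses JSON and slices bytes, which is why no code can run. The functions below are a hypothetical minimal implementation that handles only float32 ("F32") tensors; they copy data instead of memory-mapping it, so they do not reproduce the zero-copy behavior of the official safetensors library.

```python
import json
import math
import struct
from typing import Dict, List, Tuple

Tensor = Tuple[List[int], List[float]]  # (shape, flat row-major values)

def save_safetensors(tensors: Dict[str, Tensor]) -> bytes:
    """Serialize float32 tensors into the safetensors byte layout."""
    header, buffers, offset = {}, [], 0
    for name, (shape, values) in tensors.items():
        if math.prod(shape) != len(values):
            raise ValueError(f"{name}: shape {shape} does not match {len(values)} values")
        raw = struct.pack(f"<{len(values)}f", *values)  # little-endian float32
        header[name] = {"dtype": "F32", "shape": shape,
                        "data_offsets": [offset, offset + len(raw)]}
        buffers.append(raw)
        offset += len(raw)
    header_bytes = json.dumps(header).encode("utf-8")
    header_bytes += b" " * (-len(header_bytes) % 8)  # pad with spaces to 8-byte alignment
    return struct.pack("<Q", len(header_bytes)) + header_bytes + b"".join(buffers)

def load_safetensors(blob: bytes) -> Dict[str, Tensor]:
    """Parse the JSON header and slice out each tensor; nothing is executed."""
    (n,) = struct.unpack("<Q", blob[:8])
    header = json.loads(blob[8:8 + n])
    data = blob[8 + n:]  # offsets are relative to the start of this buffer
    tensors = {}
    for name, info in header.items():
        if name == "__metadata__":  # optional string-to-string metadata
            continue
        if info["dtype"] != "F32":
            raise ValueError(f"this sketch only handles F32, got {info['dtype']}")
        begin, end = info["data_offsets"]
        count = (end - begin) // 4
        tensors[name] = (info["shape"], list(struct.unpack(f"<{count}f", data[begin:end])))
    return tensors

weights = {"fc.weight": ([2, 2], [0.5, -1.25, 2.0, 0.75]), "fc.bias": ([2], [0.25, -0.5])}
blob = save_safetensors(weights)
print(load_safetensors(blob) == weights)  # True
```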

.gguf (GGML Unified Format)

Designed for efficient inference of large language models (LLMs), .gguf is part of the llama.cpp ecosystem. It integrates weights, tokenizer, and metadata into a single quantization-friendly file.

Key Characteristics:

- Supports multiple levels of quantization (Q4, Q5, etc.), reducing memory and compute requirements.

- Ideal for deployment on CPU/GPU in resource-limited environments.

- Widely used in the open-source LLM community (e.g., Mistral, LLaMA).
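To see where the memory savings come from, the sketch below quantizes one block of 32 weights to 4-bit codes plus a single scale, modeled on the Q4_0 scheme from GGML/llama.cpp. It is a simplified Python illustration with hypothetical function names: the real format stores the scale as FP16 and is implemented in C.

```python
from typing import List, Tuple

BLOCK = 32  # values per block, matching GGML's Q4_0 block size

def quantize_q4_block(xs: List[float]) -> Tuple[float, bytes]:
    """Map 32 floats to one scale `d` plus 32 four-bit codes packed into 16 bytes."""
    if len(xs) != BLOCK:
        raise ValueError(f"expected {BLOCK} values, got {len(xs)}")
    vmax = max(xs, key=abs)  # signed value with the largest magnitude
    d = vmax / -8.0          # vmax maps to code 0, which dequantizes back to vmax
    inv = 1.0 / d if d else 0.0
    # Shift into [0, 16], round via +0.5 and truncation, clamp to 4 bits [0, 15].
    codes = [min(15, int(x * inv + 8.5)) for x in xs]
    # Low nibble holds element j, high nibble holds element j + 16.
    packed = bytes(codes[j] | (codes[j + 16] << 4) for j in range(BLOCK // 2))
    return d, packed

def dequantize_q4_block(d: float, packed: bytes) -> List[float]:
    """Unpack the nibbles, remove the +8 offset, and rescale by `d`."""
    low = [((b & 0x0F) - 8) * d for b in packed]
    high = [((b >> 4) - 8) * d for b in packed]
    return low + high

weights = [(i - 16) / 10 for i in range(BLOCK)]
d, packed = quantize_q4_block(weights)
restored = dequantize_q4_block(d, packed)
print(len(packed))  # 16
print(max(abs(a - b) for a, b in zip(weights, restored)) <= abs(d))  # True
```

With a 2-byte FP16 scale, one block occupies 18 bytes instead of 128 bytes in FP32, roughly 4.5 bits per weight. Higher-bit variants such as Q5 and Q8 spend more bits per weight in exchange for lower error.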

Considerations:

- Tailored specifically for llama.cpp and similar inference engines.

- Not suited for training or use outside GGML-compatible tools.

.tflite (TensorFlow Lite)

.tflite is a FlatBuffer format developed by Google to optimize TensorFlow models for edge and mobile devices. It provides a compact model representation that can be read directly without a separate unpacking step, which suits on-device inference.

Key Characteristics:

- Offers low-latency execution with optional hardware acceleration (e.g., NNAPI, GPU, Edge TPU).

- Suitable for Android and embedded systems.

- Includes support for post-training quantization.
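Post-training quantization in TensorFlow Lite maps floats to 8-bit integers with an affine rule, real_value = (int8_value - zero_point) * scale, as described in the TensorFlow Lite 8-bit quantization specification. The sketch below derives per-tensor parameters from a min/max range widened to include zero, so that 0.0 is exactly representable. It is an illustration with hypothetical helper names, not the converter's calibration code.

```python
from typing import List, Tuple

QMIN, QMAX = -128, 127  # int8 range

def choose_int8_params(values: List[float]) -> Tuple[float, int]:
    """Per-tensor scale and zero point; the range is widened to include 0.0."""
    rmin = min(min(values), 0.0)
    rmax = max(max(values), 0.0)
    scale = (rmax - rmin) / (QMAX - QMIN) or 1.0  # fall back to 1.0 for an all-zero tensor
    zero_point = int(round(QMIN - rmin / scale))
    return scale, max(QMIN, min(QMAX, zero_point))

def quantize(values: List[float], scale: float, zero_point: int) -> List[int]:
    """Round to the nearest step, shift by the zero point, clamp to int8."""
    return [max(QMIN, min(QMAX, int(round(v / scale)) + zero_point)) for v in values]

def dequantize(qs: List[int], scale: float, zero_point: int) -> List[float]:
    # real_value = (int8_value - zero_point) * scale
    return [(q - zero_point) * scale for q in qs]

activations = [-1.0, -0.25, 0.0, 0.4, 2.0]
scale, zp = choose_int8_params(activations)
qs = quantize(activations, scale, zp)
approx = dequantize(qs, scale, zp)
print(qs[2] == zp, approx[2])  # True 0.0
```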

Considerations:

- Not all TensorFlow operations are supported; models that use unsupported operations may need to be simplified or adapted before conversion.

- Debugging and inspection are more difficult due to the compact binary structure.

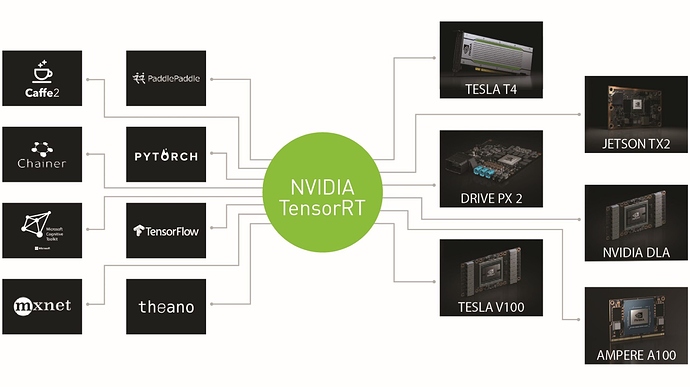

.engine (TensorRT)

TensorRT is NVIDIA's high-performance deep learning inference library, and .engine is its serialized, optimized runtime format. Rather than being used for training, models are typically exported from formats like .onnx or .pb and then compiled into .engine files for fast inference on NVIDIA GPUs.

Key Characteristics:

- Converts pretrained models into a highly optimized execution graph.

- Supports precision modes like FP32, FP16, and INT8.

- Ideal for production deployment on NVIDIA hardware.
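Lower precision modes trade accuracy and range for speed. Python's struct module supports the IEEE 754 half-precision format ("e"), so the sketch below previews what FP16 rounding does to individual values. It illustrates the numeric trade-off only and does not use TensorRT's API; `to_fp16` is a hypothetical helper.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a float to IEEE 754 half precision and back (struct format 'e')."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

print(to_fp16(0.1))      # 0.0999755859375: only about 3 significant digits survive
print(to_fp16(4097.0))   # 4096.0: between 4096 and 8192, FP16 steps by 4
print(to_fp16(65504.0))  # 65504.0: the largest finite FP16 value

try:
    to_fp16(70000.0)
    overflowed = False
except OverflowError:
    overflowed = True  # 70000 is outside the FP16 range
print(overflowed)  # True
```

This is why FP16 and INT8 builds are usually validated against an FP32 baseline before deployment: values that exceed the representable range or depend on fine-grained differences can change model outputs.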

Common Use Cases:

- Real-time object detection on Jetson devices.

- High-throughput AI inference in cloud environments with GPUs.

- Robotics, automotive AI, and other latency-sensitive tasks.

Considerations:

- .engine files are hardware and version specific.

- Requires regeneration if used on a different GPU architecture or TensorRT version.

- Best used post-training for deployment only.

.mlmodel (Core ML)

Apple’s .mlmodel format is designed for deploying ML models within Apple’s ecosystem, including iOS, macOS, and watchOS. It allows seamless integration into apps using Xcode and Swift.

Key Characteristics:

- Stores model specifications, inputs/outputs, and metadata in a protobuf structure.

- Optimized for Apple Silicon performance.

- Compatible with Core ML tools for model conversion and validation.

Considerations:

- Restricted to Apple platforms.

- Requires conversion tools (e.g., coremltools) for model preparation.

Choosing the Right Format

Your choice of model weight file format should depend on:

- Framework and training pipeline

- Target deployment platform (mobile, web, server)

- Security and portability requirements

- Performance constraints (e.g., quantization, RAM limits)

| Scenario | Recommended Format |
|---|---|
| Training with PyTorch | .pt / .safetensors |
| TensorFlow model checkpointing | .ckpt |
| Model sharing in Keras | .h5 |
| Cross-framework deployment | .onnx |
| Edge inference (Android) | .tflite |
| Secure sharing via Hugging Face | .safetensors |
| Quantized LLMs on CPU/GPU | .gguf |
| GPU-accelerated deployment | .engine |
| iOS/macOS application | .mlmodel |

Conclusion

Understanding model weight file formats is essential for efficient model training, sharing, and deployment. Each format is optimized for specific use cases and environments. Whether you’re deploying a quantized LLM on a laptop, integrating AI into a mobile app, or sharing research models securely, the right format ensures performance, portability, and security.

Choose wisely, and your models will be as efficient in deployment as they are intelligent in inference.
