Welcome back to our LangGraph series! In our previous post, we explored the fundamental concepts of LangGraph by building a Visual Web Browser Agent that could navigate, see, scroll, and summarize web pages. We saw how nodes, edges, state, and conditional routing come together to create intelligent workflows.

Today, we’re taking it a step further. What if an agent needs to do more than just follow a predefined path? What if it needs to try something, check if it worked, and if not, try again with improvements? This is the essence of self-correction and iterative behavior, and LangGraph is perfectly designed for it.

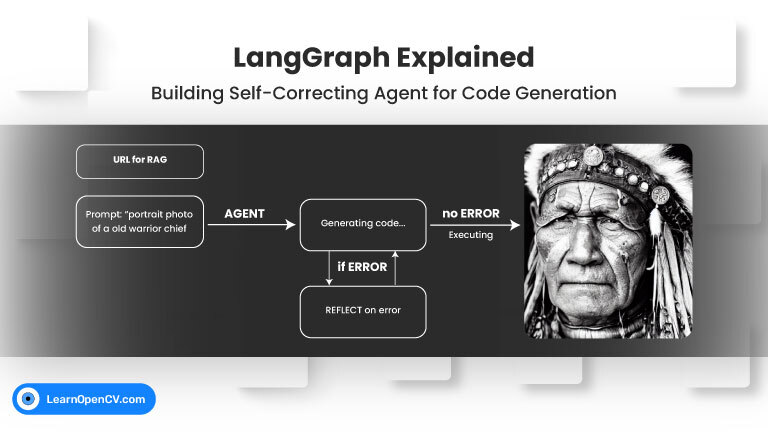

In this article, we’ll dive into building a Diffusers Code Generation Agent: a self-correcting RAG agent. Its task is to write Python code using the Hugging Face diffusers library. But here’s the twist: it then attempts to execute the code it generated. If the code fails, the agent reflects on the error and uses that insight to generate an improved version, looping until the code succeeds or a retry limit is reached.

- Beyond One-Shot Generation

- The Self-Correcting Agent’s Blueprint

- LangGraph Concepts (Advanced)

- Running the Agent

- Conclusion

- References

Beyond One-Shot Generation

Large Language Models (LLMs) are incredibly powerful at generating code. You can ask them to write a script, and often, they’ll give you something impressive. However, generated code isn’t always perfect. It might have syntax errors, logical bugs, or simply not do what was intended.

For critical applications, one-shot code generation isn’t enough. We need a feedback loop:

- Generate a solution.

- Execute/Test the solution.

- Evaluate the result (did it work? did it error?).

- If there’s an issue, reflect on the problem.

- Use the reflection to regenerate a better solution.

- Repeat.

This iterative process maps naturally onto LangGraph’s support for cycles in a workflow graph.
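Before bringing LangGraph in, the loop above can be sketched in plain Python. This is a minimal illustration under stated assumptions: `run_with_retries` and `fake_llm` are hypothetical names, the "LLM" is a stub that returns canned drafts, and reflection is reduced to passing the last error message back into the generator.

```python
from typing import Callable, Optional, Tuple


def run_with_retries(
    generate: Callable[[Optional[str]], str],
    max_iterations: int = 3,
) -> Tuple[str, Optional[str], int]:
    """Generate code, execute it, and feed any error back until it runs.

    `generate` receives the previous error message (None on the first
    attempt) and returns Python source code. Returns the last code, the last
    error (None on success), and the number of attempts used.
    """
    error: Optional[str] = None
    code = ""
    for attempt in range(1, max_iterations + 1):
        code = generate(error)  # generate, or regenerate using the last error
        try:
            exec(code, {})  # execute in a fresh namespace
        except Exception as exc:  # evaluate: did it raise?
            error = f"{type(exc).__name__}: {exc}"  # keep the error for the next attempt
            continue  # repeat
        return code, None, attempt  # success
    return code, error, max_iterations  # retry limit reached


# Hypothetical stand-in for an LLM: the first draft has a typo, the second is fixed.
drafts = ["result = lenn([1, 2, 3])", "result = len([1, 2, 3])"]


def fake_llm(previous_error: Optional[str]) -> str:
    return drafts[0] if previous_error is None else drafts[1]


code, error, attempts = run_with_retries(fake_llm)
print(attempts, error)  # 2 None
```

LangGraph expresses the same cycle as nodes and edges instead of a `for` loop, which lets each step (generate, check, reflect) be inspected and rewired independently.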

The Self-Correcting Agent’s Blueprint

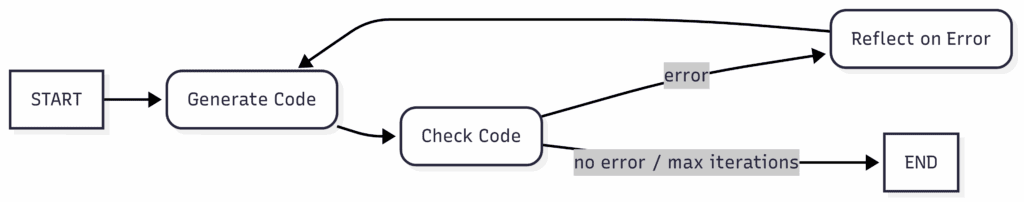

Our Diffusers Code Generation Agent workflow consists of three main operational nodes and a crucial conditional router:

- Generate: The LLM generates the initial code.

- Check Code: Attempts to execute the generated code.

- Reflect: If the code fails, the LLM reflects on the error to understand what went wrong.

Together, these nodes form a self-correction loop, shown in the diagram above: generate, check, and, if there’s an error, reflect and regenerate.

LangGraph Concepts (Advanced)

Let’s explore how our rag_agent.py script leverages LangGraph to implement this robust self-correction mechanism.

Agent State (AgentState)

As we learned, the AgentState (TypedDict) is the central hub for all information in our graph. For this code generation agent, we need to track the conversation, the generated output, and crucial flags for our loop:

```python
from typing import TypedDict, Annotated, Sequence

from langchain_core.messages import BaseMessage  # Base class for all message types
from langgraph.graph import add_messages  # The reducer function


class AgentState(TypedDict):
    error: str  # Flag: "yes" if code failed, "no" if successful
    messages: Annotated[Sequence[BaseMessage], add_messages]  # Full conversation history
    output: str  # The generated code snippet
    description: str  # Description of the generated code
    explanation: str  # Explanation of the generated code
    iterations: int  # Counter for retry attempts
```

- error: This simple string acts as a crucial flag, telling our conditional edge whether the last code execution was successful.

- messages: Annotated[…, add_messages] attaches LangGraph’s add_messages reducer to this field. Instead of overwriting the list, every message a node returns (a HumanMessage input, an AIMessage from the LLM, or a ToolMessage result) is appended to it, so the LLM always has the full conversation history to refer to.

- output, description, explanation: These fields store the structured output generated by our LLM, which we’ll discuss next.

- iterations: This integer counter is vital for preventing infinite loops in our self-correction process.
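To make the reducer idea concrete, here is a simplified model of how a node's partial update is merged into the state. It is an illustrative sketch, not LangGraph's implementation: `append_messages`, `REDUCERS`, and `apply_update` are hypothetical names, and plain strings stand in for message objects.

```python
from typing import Any, Callable, Dict, List


def append_messages(existing: List[Any], new: List[Any]) -> List[Any]:
    """Simplified stand-in for add_messages: append instead of overwrite.

    The real reducer also assigns IDs to new messages and replaces an existing
    message when an incoming one has the same ID.
    """
    return list(existing) + list(new)


# Fields with a reducer attached via Annotated[...]; all others are overwritten.
REDUCERS: Dict[str, Callable[[Any, Any], Any]] = {"messages": append_messages}


def apply_update(state: Dict[str, Any], update: Dict[str, Any]) -> Dict[str, Any]:
    """Merge a node's partial update into the state."""
    merged = dict(state)
    for key, value in update.items():
        reducer = REDUCERS.get(key)
        merged[key] = reducer(merged.get(key, []), value) if reducer else value
    return merged


state = {"messages": ["human: write the script"], "error": "", "iterations": 0}
state = apply_update(state, {"messages": ["ai: <code v1>"], "iterations": 1})
state = apply_update(state, {"messages": ["human: Your solution failed: NameError"], "error": "yes"})
print(len(state["messages"]), state["error"], state["iterations"])  # 3 yes 1
```

Because of this merge behavior, a node only needs to return the fields it changes, and any messages it returns are added to the history rather than replacing it.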

Structured Output with Pydantic

A raw text response from an LLM has no guaranteed structure. For code generation, we need specific fields: the code itself, a description, and an explanation. We get them by combining a Pydantic model with LangChain’s PydanticOutputParser.

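```python
from pydantic import BaseModel
from langchain_core.output_parsers import PydanticOutputParser  # also in langchain.output_parsers on LangChain 0.x


class DiffuserCodeOutput(BaseModel):
    description: str  # One-line summary of the script
    code: str  # The complete Python script
    explanation: str  # Key parameters and decisions


parser = PydanticOutputParser(pydantic_object=DiffuserCodeOutput)
```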

In the system prompt, we instruct the LLM to output a JSON string conforming to the DiffuserCodeOutput schema, using the format instructions returned by parser.get_format_instructions(). The generate node then calls parser.parse(response_text), which validates the reply against the schema and returns a DiffuserCodeOutput instance; its description, code, and explanation fields are stored in AgentState (the code goes into output). If the reply does not match the schema, parse raises an exception instead of silently passing malformed data along.
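PydanticOutputParser does this work for you; to make the mechanics visible, here is a stdlib-only stand-in that uses a dataclass in place of the Pydantic model. `parse_code_output` is a hypothetical helper, not part of LangChain, and it only checks that the fields are present, without Pydantic's type validation. The fence-stripping step assumes the model may wrap its JSON in a Markdown code block.

```python
import json
from dataclasses import dataclass

FENCE = "`" * 3  # a Markdown code fence marker
FIELDS = ("description", "code", "explanation")


@dataclass
class DiffuserCodeOutput:
    description: str
    code: str
    explanation: str


def parse_code_output(response_text: str) -> DiffuserCodeOutput:
    """Parse the model's JSON reply into the three expected fields.

    Raises ValueError (json.JSONDecodeError is a subclass) when the reply is
    not a JSON object or a field is missing.
    """
    text = response_text.strip()
    if text.startswith(FENCE):  # models sometimes wrap JSON in a fenced block
        body = text[len(FENCE):].split("\n", 1)[-1]  # drop the opening fence and any "json" tag
        text = body.rsplit(FENCE, 1)[0]  # drop the closing fence
    data = json.loads(text)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = [name for name in FIELDS if name not in data]
    if missing:
        raise ValueError(f"missing fields: {missing}")
    return DiffuserCodeOutput(**{name: str(data[name]) for name in FIELDS})


reply = FENCE + "json\n" + json.dumps({
    "description": "Prints a greeting",
    "code": "print('hi')",
    "explanation": "Uses print().",
}) + "\n" + FENCE
result = parse_code_output(reply)
print(result.code)  # print('hi')
```

Raising on malformed output, rather than returning partial data, is what lets the surrounding agent treat a bad reply as just another error to correct.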

Retrieval Augmented Generation (RAG): Specialized Knowledge for LLM

While LLMs have vast general knowledge, they might not be up-to-date or highly specialized in very specific domains, like the nuances of a particular library’s API. This is where Retrieval Augmented Generation (RAG) comes into play.

RAG is a technique that allows an LLM to access, retrieve, and condition its response on specific, external, and up-to-date information. Instead of relying solely on the model’s pre-trained knowledge, we augment its capabilities by providing relevant context at inference time.

In our rag_agent.py script, this is implemented by:

```python
from langchain_community.document_loaders.recursive_url_loader import RecursiveUrlLoader
from bs4 import BeautifulSoup as soup  # Used for parsing HTML content

url = 'https://huggingface.co/docs/diffusers/stable_diffusion'

loader = RecursiveUrlLoader(
    url=url,
    max_depth=20,  # How deep to go in finding linked pages
    extractor=lambda x: soup(x, 'html.parser').text  # Extract plain text from HTML
)

docs = loader.load()  # Load all documents from the URL
concatenated_content = "\n\n\n --- \n\n\n".join([doc.page_content for doc in docs])
```

- Loading Documentation: We use LangChain’s RecursiveUrlLoader to fetch content from the official Hugging Face diffusers documentation URL.

- Concatenating Content: The loaded documents (each doc containing page_content) are then joined into a single, large string. The concatenated_content holds a significant portion of the diffusers library’s documentation.

- Injecting into the System Prompt: Finally, this chunk of specialized knowledge is injected directly into the SystemMessage that guides our LLM:

```python
from langchain_core.messages import SystemMessage

system_message = SystemMessage(
    content=f"""<instructions>
You are an expert coding assistant specializing in the Hugging Face `diffusers` library.
Your task is to answer the user's question by generating a complete, executable Python script.

Here is the relevant `diffusers` documentation to help you:
-------
{concatenated_content}
-------

You must respond in a **JSON format** with the following fields:
{parser.get_format_instructions()}

Strictly follow this structure:
1. `description`: A one-line summary of what the script does.
2. `code`: A full working Python script (no markdown formatting).
3. `explanation`: A short paragraph explaining key parameters and decisions.
</instructions>"""
)
```

By providing this concatenated_content in the system prompt, we are giving the LLM immediate access to highly relevant, up-to-date, and specific information about diffusers. This significantly improves the accuracy and relevance of the generated code, making the agent a true “expert” in this domain, even if the underlying LLM itself wasn’t explicitly trained on the very latest version of the documentation. This is a fundamental pattern for building knowledge-aware LLM applications.
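With max_depth=20 the loader may collect many pages, and because the system message stays in the messages history, the whole concatenation is sent with every LLM call. A hedged sketch for keeping it within a budget: `build_context` and `max_chars` are hypothetical, character count is only a rough proxy for tokens, and the function is meant as a drop-in for the single join call, taking [doc.page_content for doc in docs] as input.

```python
from typing import Iterable, List

SEPARATOR = "\n\n\n --- \n\n\n"  # the same separator used in the join above


def build_context(pages: Iterable[str], max_chars: int) -> str:
    """Join page texts with SEPARATOR, stopping before max_chars is exceeded.

    Only whole pages are kept, in the order the loader returned them.
    """
    parts: List[str] = []
    used = 0
    for text in pages:
        extra = len(text) + (len(SEPARATOR) if parts else 0)
        if used + extra > max_chars:
            break  # this page would overflow the budget; drop it and the rest
        parts.append(text)
        used += extra
    return SEPARATOR.join(parts)


pages = ["a" * 10, "b" * 10, "c" * 10]
context = build_context(pages, max_chars=35)
print(len(context))  # 31: two pages plus one 11-character separator
```

Truncating by loader order is the simplest policy; ranking pages by relevance to the question would be the natural next step toward a fuller RAG pipeline.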

Different Types of Messages (BaseMessage and Subclasses)

Within your agent’s messages field, you’ll use different types of messages to represent the different roles in a conversation or interaction. All message types inherit from BaseMessage:

- HumanMessage: Represents input directly from a human user. Used for the initial task, for providing error messages back to the LLM (as seen in code_check), or any direct user interaction within the workflow.

- AIMessage: Represents output from an AI model (like an LLM). The LLM’s response to a prompt, including any generated text or requested tool calls, comes back as an AIMessage.

- SystemMessage: Provides global context or instructions to the AI model, often at the beginning of a conversation or at specific points in the workflow to guide the model’s behavior for the next step. Our system_message containing the RAG context is a prime example.

- ToolMessage: Represents the result of a tool call requested by the AI. It plays no part in rag_agent.py, because the generated code is run with a plain Python exec() call rather than a LangChain tool, but it is central to agents that call external APIs.

Understanding these message types is essential when building LLM agents: LangChain’s chat model integrations (for Google Gemini, OpenAI, and others) take a list of these messages as input and return an AIMessage, which may contain tool calls.

Nodes (The Agent’s Actions)

Each node is a Python function that processes the state and returns updates:

- generate(state: AgentState) -> AgentState:

- This is where the LLM (gemini-2.5-pro) is invoked to produce the initial (or revised) code solution.

- It receives the messages history (including previous errors if any), the iterations count, and the error flag.

- If there was a previous error (state["error"] == "yes"), it adds a HumanMessage like “Try again. Fix the code…” to prompt the LLM for correction.

- It then calls llm.invoke(messages) and parses the structured output using our parser.

- It returns updated values for output, description, and explanation, appends the new messages (via add_messages), and increments iterations.

- code_check(state: AgentState) -> AgentState:

- This node takes the code generated by the generate node.

- It uses Python’s built-in exec() function to run the generated code directly. Note that exec() runs arbitrary code with the full permissions of the agent’s process, so outside a local experiment the generated code belongs in a sandbox.

- exec() receives the code string and a globals dictionary, here globals(), which returns the current module’s global symbol table. Because a single dictionary serves as the namespace (no separate locals mapping is passed), top-level imports such as import diffusers and helper functions defined by the generated code are stored where the code’s own functions look names up, so they resolve correctly. The trade-off is that the generated code runs in, and can overwrite names in, the agent script’s own global namespace; passing a fresh dictionary such as {} isolates each attempt.

- A try-except block is essential here to catch any runtime errors that occur when executing the generated code.

- If exec() succeeds, the error flag in the state is set to “no”. If it fails, error is set to “yes”, and the exception message is added to the messages history as a HumanMessage for the LLM to see in the next iteration.

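```python
from langchain_core.messages import HumanMessage


def code_check(state: AgentState) -> AgentState:
    code = state["output"]  # The script produced by the generate node
    try:
        exec(code, globals())  # Attempt to run the code
    except Exception as e:
        # If an error occurs during execution
        print("Code execution failed:", e)  # Log the error
        error_message = HumanMessage(content=f"Your solution failed: {e}")
        # Return only the new message; add_messages appends it to the history
        return {"messages": [error_message], "error": "yes"}  # Set error flag
    return {"error": "no"}  # The code ran without raising: success
```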
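The namespace choice discussed above is easy to see in isolation. This sketch runs a small generated snippet in a fresh dictionary instead of globals(), then shows one possible refinement: returning the full traceback instead of str(e), so the model sees the failing line. `run_and_report` is a hypothetical helper, not part of rag_agent.py.

```python
import traceback

generated = """
import math

def area(r):
    return math.pi * r ** 2

result = round(area(2), 2)
"""

# A fresh namespace per attempt: imports, helpers, and results defined by the
# generated code live only in this dict, and area() can still see math.
namespace: dict = {}
exec(generated, namespace)
print(namespace["result"])  # 12.57
print("area" in globals())  # False: the script's own globals were not touched


def run_and_report(code: str) -> str:
    """Run code in a fresh namespace; return "" on success, else the traceback."""
    try:
        exec(code, {})
        return ""
    except Exception:
        return traceback.format_exc()


print(run_and_report("x = 1 / 0").splitlines()[-1])  # ZeroDivisionError: division by zero
```

A full traceback costs more prompt tokens than the one-line message, but it usually points the model at the exact line to fix.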

- reflect(state: AgentState) -> AgentState:

- This node runs only when code_check reports an error and the should_continue router chooses to reflect.

- It adds a simple HumanMessage like “Reflect on the error and try again.” to the messages list.

- It then invokes the LLM again, providing the full messages history (including the previous error details). The LLM’s response (its reflection) is added back to messages.

- This step allows the LLM to “think” about the problem before attempting to regenerate code, potentially leading to better fixes by allowing it to explicitly process the error context.
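The two LLM-calling nodes can be sketched without any framework to show what each one reads and returns. Assumptions, all hypothetical: messages are (role, text) tuples instead of LangChain message objects, the LLM is a plain callable instead of llm.invoke, json.loads stands in for parser.parse, and the retry prompt wording is illustrative. Each node returns only the fields it changes, relying on the messages reducer to append.

```python
import json
from typing import Callable, Dict, List, Tuple

Message = Tuple[str, str]  # (role, text): stand-in for LangChain message objects
LLM = Callable[[List[Message]], str]  # takes the history, returns reply text


def make_generate(llm: LLM) -> Callable[[Dict], Dict]:
    def generate(state: Dict) -> Dict:
        new_messages: List[Message] = []
        if state["error"] == "yes":  # previous attempt failed: ask for a fix
            new_messages.append(("human", "Try again. Fix the code based on the error above."))
        reply = llm(state["messages"] + new_messages)
        parsed = json.loads(reply)  # stands in for parser.parse(reply)
        new_messages.append(("ai", reply))
        return {
            "messages": new_messages,  # appended by the reducer, not overwritten
            "output": parsed["code"],
            "description": parsed["description"],
            "explanation": parsed["explanation"],
            "iterations": state["iterations"] + 1,
        }
    return generate


def make_reflect(llm: LLM) -> Callable[[Dict], Dict]:
    def reflect(state: Dict) -> Dict:
        prompt: Message = ("human", "Reflect on the error and try again.")
        reflection = llm(state["messages"] + [prompt])  # full history, errors included
        return {"messages": [prompt, ("ai", reflection)]}
    return reflect


def fake_llm(history: List[Message]) -> str:
    """Hypothetical LLM stub: reflects when asked, otherwise returns JSON code."""
    if history[-1][1].startswith("Reflect"):
        return "The name lenn is a typo for len."
    return json.dumps({"description": "Count items", "code": "n = len([1, 2])",
                       "explanation": "Uses len()."})


state = {"messages": [("human", "count the items")], "error": "yes", "iterations": 1}
update = make_generate(fake_llm)(state)
print(update["iterations"], update["messages"][0][0])  # 2 human
```

Passing the LLM in as a parameter keeps the node logic testable with a stub, which is handy when iterating on prompts.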

Edges (The Control Flow Loop)

The power of iteration lies in how we connect these nodes.

- workflow.add_edge(START, "generate"): The graph begins by generating a solution.

- workflow.add_edge("generate", "check_code"): The generated code is always checked right after it is produced.

Now, the crucial part: the conditional edge after check_code.

- should_continue(state: AgentState) -> str:

- This is our router function. It examines the state to decide the next step.

- It checks state["error"] (did the code run successfully?) and state["iterations"] (have we tried too many times?).

- If error == "no" (success) OR iterations >= max_iterations (retry limit reached), it returns "end".

- Otherwise (code failed and still within limits), it returns "reflect" (to go to the reflection step) or "generate" (to go straight to regeneration, if a specific flag is set for skipping reflection).

- workflow.add_edge("reflect", "generate"): This completes the self-correction loop! After reflecting on an error, the agent goes back to the generate node to produce a new version of the code.

```python
workflow.add_conditional_edges("check_code", should_continue, {
    "end": END,  # If "end", the graph finishes
    "reflect": "reflect",  # If "reflect", go to the reflection node
    "generate": "generate"  # If "generate", go back to generation immediately (skipping reflection if needed)
})
```

This setup ensures that the agent will keep trying, learning from its mistakes, until it produces working code or reaches a predefined attempt limit.
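To see why these edges form a loop, here is a toy executor that walks the same wiring with stub nodes. It only mimics the control flow: it is not how LangGraph is implemented, it overwrites fields instead of applying reducers, and every node body is a hypothetical placeholder.

```python
from typing import Callable, Dict, List, Tuple

END = "END"  # sentinel for this toy; LangGraph provides its own END constant
Node = Callable[[Dict], Dict]


def run_graph(nodes: Dict[str, Node], edges: Dict[str, str],
              router: Callable[[Dict], str], route_map: Dict[str, str],
              state: Dict, start: str = "generate") -> Tuple[Dict, List[str]]:
    """Follow fixed edges, or the router for nodes without one, until END."""
    path: List[str] = []
    current = start
    while current != END:
        path.append(current)
        state = {**state, **nodes[current](state)}  # merge the node's update
        current = edges[current] if current in edges else route_map[router(state)]
    return state, path


drafts = ["print(undefined_name)", "answer = 6 * 7"]  # broken first, then fixed


def generate(state: Dict) -> Dict:
    return {"output": drafts[min(state["iterations"], 1)], "iterations": state["iterations"] + 1}


def check_code(state: Dict) -> Dict:
    try:
        exec(state["output"], {})
        return {"error": "no"}
    except Exception:
        return {"error": "yes"}


def reflect(state: Dict) -> Dict:
    return {}  # a real reflect node would call the LLM here


def router(state: Dict) -> str:
    return "end" if state["error"] == "no" or state["iterations"] >= 3 else "reflect"


final, path = run_graph(
    nodes={"generate": generate, "check_code": check_code, "reflect": reflect},
    edges={"generate": "check_code", "reflect": "generate"},
    router=router,
    route_map={"end": END, "reflect": "reflect", "generate": "generate"},
    state={"iterations": 0, "error": "", "output": ""},
)
print(path)  # ['generate', 'check_code', 'reflect', 'generate', 'check_code']
```

The path shows one failed attempt, a reflection, and a successful retry: exactly the cycle the conditional edge and the reflect-to-generate edge create.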

Running the Agent

To execute this agent, you provide an initial_state containing the system prompt, the user’s question, an iteration count of 0, and empty values for the remaining fields:

```python
from langchain_core.messages import HumanMessage

# system_message is defined above; app is the compiled graph (workflow.compile())
initial_question = "generate image of an old man in 20 inference steps"

initial_state = {
    "messages": [
        system_message,
        HumanMessage(content=initial_question)
    ],
    "iterations": 0,
    "error": "",
    "output": "",
    "description": "",
    "explanation": "",
}

solution = app.invoke(initial_state)  # Run the graph

# Print final structured output
print("\n--- FINAL RESULT ---")
print("📝 Description:\n", solution["description"])
print("\n📄 Code:\n", solution["output"])
print("\n🔍 Explanation:\n", solution["explanation"])
```
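Since should_continue returns "end" both when the code runs and when the retry limit is reached, the printed result alone does not say which happened. A small hypothetical helper, `summarize_result`, can make that explicit; its max_iterations argument is an assumption and must match the limit the router uses.

```python
def summarize_result(solution: dict, max_iterations: int) -> str:
    """Distinguish a working result from one that merely hit the retry limit."""
    attempts = solution.get("iterations", 0)
    if solution.get("error") == "no":
        return f"Succeeded after {attempts} attempt(s)."
    if attempts >= max_iterations:
        return f"Stopped at the retry limit ({attempts}/{max_iterations}); the last code still fails."
    return "Finished without a successful run."


print(summarize_result({"error": "no", "iterations": 2}, max_iterations=3))
print(summarize_result({"error": "yes", "iterations": 3}, max_iterations=3))
```

In the agent, this would be called on the solution returned by app.invoke before trusting solution["output"].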

When you run this agent, you’ll see a dynamic process:

- The agent generates code.

- It attempts to run the code.

- If there’s a problem, you’ll see “Code execution failed” in your terminal, and the agent will then enter the reflection phase.

- It then goes back to generate a new, hopefully corrected, version of the code.

- This continues until the code runs successfully or the retry limit is reached, at which point the final structured output is printed.

This demonstrates how LangGraph’s cycles and conditional edges let an agent recover from its own mistakes, producing resilient, iterative behavior.

Conclusion

In this second post of our LangGraph series, we moved beyond linear workflows to build a sophisticated Self-Correcting Code Generation Agent. We reinforced our understanding of State (including structured fields like output and control flags like error and iterations), learned how to leverage Pydantic for structured LLM outputs, and most importantly, explored how Retrieval Augmented Generation (RAG) empowers our agent with specialized knowledge. Finally, we saw how conditional edges are used to create powerful iterative loops that enable agents to learn from feedback and self-correct.

The ability to build agents that can try, fail, learn, and retry makes AI applications considerably more reliable. LangGraph provides a natural framework to design and manage these complex, dynamic behaviors.

Experiment with this agent! Give it different code generation tasks, introduce artificial errors, and observe how it uses its internal loop to resolve issues. In our next installment, we’ll explore even more advanced LangGraph patterns!
