Building intelligent agents on top of LLMs such as GPT-4o or Gemini, agents that can perform multi-step tasks, adapt to changing information, and make decisions, is a core challenge in AI development. Imagine an agent that needs to browse a website, understand what’s on the screen, scroll down for more information, and summarize everything it finds. This isn’t a single query and response; it’s a workflow with loops and conditional logic. This is where LangGraph becomes incredibly useful.

Built on top of LangChain, LangGraph is a library designed to help you build stateful, multi-actor applications with complex control flow, including cycles (loops). It lets you define your agent’s logic as a directed graph of nodes and edges, much like drawing a flowchart.

In this post, we’ll take a deep dive into the fundamental concepts of LangGraph by building a Visual Web Browser Agent. This agent will leverage a vision-capable AI and browser automation tools to navigate a webpage, visually process its content chunk by chunk (through screenshots), and decide if it needs to scroll.

- Why LangGraph?

- Core LangGraph Concepts

- Building the Visual Web Agent

- Application Demo

- Conclusion

- References

Why LangGraph?

You might ask, why not just use a standard programming script with if/else statements and loops? For simple sequences of steps (like fetching data, then analyzing it once), standard programming or basic LangChain chains work fine. However, when your agent needs to:

- Maintain memory of previous actions or observations (State).

- Make decisions based on the current situation (Conditional Edges).

- Repeat a sequence of steps based on a condition (Cycles).

- Coordinate multiple distinct capabilities, e.g., browsing, summarizing, deciding, etc. (Nodes).

…the complexity quickly grows. LangGraph provides a structured way to manage this, offering clear benefits:

- Visualization: The graph structure lends itself well to visualization, giving you a clear picture of your agent’s process.

- State Management: LangGraph automatically handles passing and updating a shared state object between nodes.

- Explicit Control Flow: Define exactly how the execution moves from one step to the next, including loops and branches.

- Modularity: Each node is a self-contained unit of logic, making the agent easier to build, understand, and debug.

Core LangGraph Concepts

Let’s break down the essential building blocks of a LangGraph application.

State (TypedDict and Reducers)

The heart of any LangGraph application is its state. This is the shared data structure that travels from node to node. Each node receives the current state, performs its operation, and returns a dictionary specifying how the state should be updated.

In Python, you define the structure of your state using a TypedDict. This is essentially a dictionary with predefined keys and value types.

Let’s look at a simple example:

    from typing import TypedDict

    class AgentState(TypedDict):
        message: str

Here, the state is just a dictionary with one key: message. A node could read this message, modify it, and return the updated state.

Our Visual Web Agent needs to keep track of more things. Its state includes:

    from typing import Annotated, Sequence, List, TypedDict, Union

    from langchain_core.messages import BaseMessage
    from langgraph.graph.message import add_messages  # Import the reducer

    class AgentState(TypedDict):
        messages: Annotated[Sequence[BaseMessage], add_messages]  # Conversation history + auto-append
        url: Union[str, None]  # Current page URL
        current_ss: Union[List[str], None]  # List of base64 screenshots
        summaries: Annotated[Sequence[BaseMessage], add_messages]  # List of summary messages
        scroll_decision: Union[str, None]  # Decision from LLM ('yes'/'no')
        task: str  # The overall goal
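
The add_messages annotation on messages and summaries is a reducer: rather than overwriting the field, new values returned by a node are merged into what is already there. To make that idea concrete, here is a minimal, LangGraph-free sketch. DemoState and apply_update are hypothetical names, and operator.add is used as a stand-in reducer; the real add_messages does more (it also handles details such as message IDs), so treat this as an illustration of the concept rather than LangGraph's implementation.

```python
import operator
from typing import Annotated, TypedDict, get_type_hints


class DemoState(TypedDict):
    summaries: Annotated[list, operator.add]  # reducer: concatenate lists
    scroll_decision: str                      # no reducer: plain overwrite


def apply_update(state: dict, update: dict) -> dict:
    """Merge a node's partial update into the state, honoring reducers."""
    hints = get_type_hints(DemoState, include_extras=True)
    merged = dict(state)
    for key, value in update.items():
        # Annotated[...] types carry their extra arguments in __metadata__.
        metadata = getattr(hints[key], "__metadata__", ())
        if metadata and key in merged:
            reducer = metadata[0]
            merged[key] = reducer(merged[key], value)
        else:
            merged[key] = value
    return merged


state = {"summaries": ["intro"], "scroll_decision": "yes"}
state = apply_update(state, {"summaries": ["history"], "scroll_decision": "no"})
print(state)
```

The annotated summaries key grows to two entries, while scroll_decision is simply replaced by the latest value.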

Different Types of Messages (BaseMessage and Subclasses)

Within the agent’s messages field (and often summaries if storing them as message objects), you’ll use different types of messages to represent different roles in a conversation or interaction. All message types inherit from BaseMessage:

- HumanMessage: Represents input directly from a human user. Used for the initial task or any direct user interaction within the workflow. Can contain text and/or images (as in our visual agent’s prompt to the vision LLM).

- AIMessage: Represents output from an AI model (like an LLM). The LLM’s response to a prompt, including any generated text or requested tool calls, comes back as an AIMessage.

- SystemMessage: Provides context or instructions to the AI model, often at the beginning of a conversation or at specific points in the workflow to guide the model’s behavior for the next step. Used in our agent to provide context for summarization and scroll decisions.

- ToolMessage: Represents the result of a tool call requested by the AI. If an AIMessage includes tool_calls, then LangGraph executes those tools (often via a ToolNode), and the output of the tool execution is returned as a ToolMessage linked to the specific tool call ID.

Understanding these message types is crucial for building agents that interact with LLMs: LangChain’s chat model integrations for providers such as Google and OpenAI accept lists of these messages as input and return their output as an AIMessage.

Tools (@tool, .invoke(), .ainvoke(), ToolNode)

Tools are external functions or APIs that your agent can use to interact with the world or perform specific actions (like searching the web, running code, or controlling a browser). LangChain provides the @tool decorator to easily define Python functions as tools.

Let’s look at how a tool is defined in Python with LangChain’s @tool decorator:

    from langchain_core.tools import tool

    @tool
    async def navigate_url(url: str) -> str:
        """Navigates the browser to the URL provided."""
        # ... Playwright code to navigate ...
        return "Success or error message"

The @tool decorator makes the function discoverable and describes it in a way that LLMs can understand by using the docstring.

Within a node function, you call these tools using their .invoke() (synchronous) or .ainvoke() (asynchronous) methods.

    async def init_node(state: AgentState) -> AgentState:
        # ... browser initialization ...
        # base_url is assumed to be defined elsewhere in the script
        navigate_output = await navigate_url.ainvoke({"url": base_url})  # Calling the tool
        # ... update state with output ...
        return state

When an LLM that has been given tools via .bind_tools() decides it needs to use one, its AIMessage response will contain tool_calls. LangGraph has a built-in ToolNode that takes these tool_calls, executes the corresponding tool functions, and returns the results as ToolMessages. Our visual agent calls tools directly within its nodes for simplicity, but for agents where the LLM decides which tool to call, ToolNode becomes very convenient.
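
The dispatch step that ToolNode performs can be pictured with a small, library-free sketch. Everything here is a hypothetical stand-in: the two plain functions replace real @tool functions, and the dictionaries only mimic the name, args, and id fields of a tool call and the tool_call_id of a ToolMessage. It is not LangGraph's implementation.

```python
def navigate_url(url: str) -> str:
    """Stand-in for the real Playwright-backed navigation tool."""
    return f"Navigated to {url}"


def scroll_down(pixels: int) -> str:
    """Stand-in for a scroll tool."""
    return f"Scrolled {pixels}px"


# Registry mapping tool names (as the LLM would request them) to functions.
TOOLS = {"navigate_url": navigate_url, "scroll_down": scroll_down}


def run_tool_calls(tool_calls: list[dict]) -> list[dict]:
    """Execute each requested call and link the result to its call ID."""
    results = []
    for call in tool_calls:
        tool_fn = TOOLS.get(call["name"])
        if tool_fn is None:
            content = f"Error: unknown tool {call['name']!r}"
        else:
            content = tool_fn(**call["args"])
        results.append({"tool_call_id": call["id"], "content": content})
    return results


calls = [
    {"name": "navigate_url", "args": {"url": "https://example.com"}, "id": "call_1"},
    {"name": "scroll_down", "args": {"pixels": 600}, "id": "call_2"},
]
tool_results = run_tool_calls(calls)
for result in tool_results:
    print(result)
```

Keeping the call ID on every result is what lets the model match each answer to the request that produced it.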

The Role of Base64

Our agent needs to “see” the webpage, which means taking screenshots. Screenshots are image files, and in code, they are often represented as binary data (sequences of bytes). However, when sending image data to many modern multimodal AI models via an API, you can’t just send the raw bytes directly as part of a text prompt or JSON request.

This is where Python’s built-in base64 module becomes essential.

The base64 module provides functions to encode binary data into a text format. The base64.b64encode() function takes binary data (like the bytes from a screenshot) and returns its base64 encoding as a bytes object. We then use .decode("utf-8") to turn those bytes into a standard string.

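    import base64

    async def screenshot_as_base64(page) -> str:
        # `page` is assumed to be an open Playwright page; the wrapper name is illustrative
        binary_ss = await page.screenshot()  # Gets binary data (bytes)
        b64_ss_bytes = base64.b64encode(binary_ss)  # Encodes bytes to base64 bytes
        b64_ss_string = b64_ss_bytes.decode("utf-8")  # Decodes base64 bytes to a string
        return b64_ss_string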

This resulting base64 string is a text representation of the image. It can be easily included within a JSON object or a text-based multimodal prompt format (like the data:image/png;base64,… URL format used in our summarizer_node). This step is what allows the vision LLM to receive and interpret the image data from the screenshot.
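
As a quick check of the encoding round trip, the sketch below builds a data URL from a few stand-in bytes and decodes it back. The to_data_url helper and the fake image bytes are hypothetical; in the agent, the bytes would come from a real Playwright screenshot.

```python
import base64


def to_data_url(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Encode raw image bytes as a base64 data URL string."""
    encoded = base64.b64encode(image_bytes).decode("utf-8")
    return f"data:{mime_type};base64,{encoded}"


# Stand-in bytes: the 8-byte PNG signature plus padding, not a valid image.
fake_screenshot = b"\x89PNG\r\n\x1a\n" + b"\x00" * 8

data_url = to_data_url(fake_screenshot)
print(data_url.startswith("data:image/png;base64,"))

# Decoding the payload after the comma recovers the original bytes exactly.
payload = data_url.split(",", 1)[1]
restored = base64.b64decode(payload)
print(restored == fake_screenshot)
```

Because base64 is lossless, the model-facing string and the original screenshot bytes carry exactly the same information.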

Nodes

Nodes are the individual processing units in your graph. They are typically Python functions that take the current state and return an updated state dictionary.

Let’s see how a simple node is implemented in Python:

    def complimentor(state: AgentState) -> AgentState:
        """Adds a compliment message to the input string."""
        print("Executing Complimentor Node...")
        state['message'] = "Hello " + state['message'] + ", you are doing an amazing job!!"
        return state

This node receives the state, modifies the message field, and returns the updated state.
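
A common alternative style is for a node to leave its input untouched and return only the keys it changes, which matches the earlier description of a node returning a dictionary of updates. The sketch below is a hypothetical variant of the same node; the final merge is done by hand here only to show the effect, since inside a graph LangGraph applies the returned update for you.

```python
from typing import TypedDict


class AgentState(TypedDict):
    message: str


def complimentor(state: AgentState) -> dict:
    """Return only the changed key instead of mutating the input state."""
    return {"message": f"Hello {state['message']}, you are doing an amazing job!!"}


initial_state: AgentState = {"message": "Bob"}
update = complimentor(initial_state)

# Hand-rolled merge, standing in for what the graph runtime does.
final_state = {**initial_state, **update}

print(initial_state["message"])
print(final_state["message"])
```

Returning partial updates keeps each node's effect easy to see and avoids surprising side effects on the shared state.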

Nodes can perform any kind of operation: calling an LLM, using a tool, running custom logic, or even just passing the state through (using a passthrough function like lambda state: state). In our visual agent, nodes call web browsing tools (navigate, screenshot, scroll) and interact with the vision LLM for summarization and decision-making.

In our visual agent:

- init_node: Calls browser setup and navigation tools.

- ss_node: Calls the screenshot tool.

- summarizer_node: Calls the vision LLM (with a multimodal prompt) to get a summary message.

- decide_scroll_node: Calls the LLM to get a ‘YES’ or ‘NO’ decision.

- scroll_node_action: Calls the scroll tool.

- aggregate_node: Calls the LLM to combine the summaries generated from screenshots taken at different scroll positions on the page.

Each node is responsible for a single, logical step.

Edges

Edges define the connections and flow between nodes.

- Basic Edges: A simple directed connection. After the source node finishes, the graph automatically moves to the target node.

    # Example based on langGraph-103.py
    from langgraph.graph import StateGraph

    def first_node(state): ...
    def second_node(state): ...

    graph = StateGraph(AgentState)
    graph.add_node('step1', first_node)
    graph.add_node('step2', second_node)
    graph.add_edge('step1', 'step2')  # Always go from step1 to step2

- You also define the entry point (where the graph starts) using set_entry_point or by adding an edge from the special START node (imported from langgraph.graph), and the finish point (where it ends) using set_finish_point or by routing to the special END node.

- Conditional Edges: This is how you introduce branching logic. After a node finishes, instead of going to a single fixed next node, the graph calls a separate router function. The router function looks at the current state and returns a label for the path to take. You provide add_conditional_edges with a mapping from these labels to the actual target nodes.

    def first_router(state):
        if state['operation_1'] == '+':
            return 'add_path'
        else:
            return 'subtract_path'

    # adder_node and subtractor_node are assumed to be defined elsewhere
    graph = StateGraph(AgentState)
    graph.add_node('router_node', lambda state: state)  # Router nodes often just pass state
    graph.add_node('adder', adder_node)
    graph.add_node('subtractor', subtractor_node)

    graph.add_conditional_edges(
        'router_node',  # Source node
        first_router,  # Router function
        {
            # edge: node relation
            'add_path': 'adder',
            'subtract_path': 'subtractor'
        }
    )

- In our visual agent, the decide_scroll_node sets a scroll_decision in the state. The route_scroll_decision function checks this decision (‘yes’ or ‘no’) and tells the graph whether to go to the scroll_node_action or the aggregate_node.
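
A router for this decision might look like the sketch below. The function name route_scroll_decision and the target node names come from the text, but the body, the 'scroll' and 'aggregate' labels, and the ROUTES mapping are assumptions made for illustration; the actual script may differ.

```python
from typing import Optional, TypedDict


class AgentState(TypedDict, total=False):
    scroll_decision: Optional[str]  # 'yes' / 'no' as set by the decision node


def route_scroll_decision(state: AgentState) -> str:
    """Return a path label based on the LLM's scroll decision."""
    # Normalize so that 'YES', ' yes ' and 'yes' are treated alike.
    decision = (state.get("scroll_decision") or "").strip().lower()
    # Anything other than an explicit 'yes' ends the loop.
    return "scroll" if decision == "yes" else "aggregate"


# Hypothetical mapping that would be passed to add_conditional_edges.
ROUTES = {"scroll": "scroll_node_action", "aggregate": "aggregate_node"}

for raw in ["yes", " YES ", "no", None]:
    label = route_scroll_decision({"scroll_decision": raw})
    print(repr(raw), "->", ROUTES[label])
```

Defaulting to the aggregate path on missing or unexpected output is a deliberate choice here: a malformed LLM answer then ends the loop instead of scrolling forever.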

Building the Visual Web Agent

Now, let’s look at how these concepts come together in the visual web browser agent script.

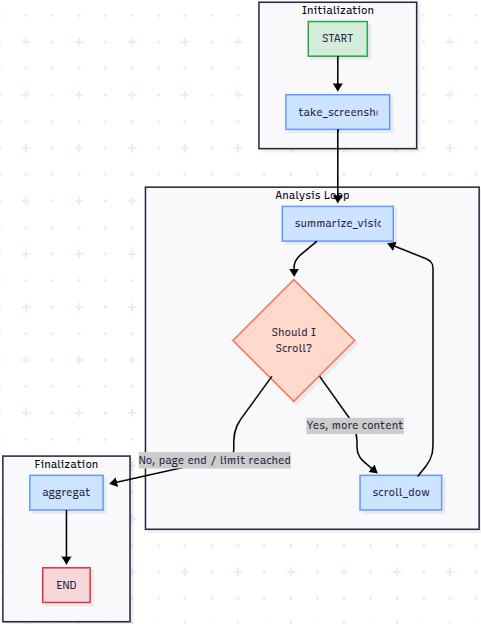

This diagram clearly shows the three main phases:

- Initialization:

  - Starts (START).

  - Goes to take_screenshot (which is part of our init_node sequence in the code, followed by the take_ss call in the ss_node). The diagram simplifies initialization slightly by starting at the screenshot, but conceptually, navigation happens first.

- Analysis Loop: This is the core iterative process.

  - Starts with summarize_vision (our summarizer_node), processing the current screenshot.

  - Based on the summary and task, the “Should I Scroll?” decision point (our decide_scroll_node calling the LLM, followed by the route_scroll_decision function) is reached.

  - If the decision is “Yes, more content”, the flow goes to scroll_down (our scroll_node_action), which performs the scroll. After scrolling, the edge loops back to summarize_vision (in the code, to our screenshot node, ss_node, which then leads to summarizer_node) to process the new view.

  - If the decision is “No, page end/limit reached”, the loop is broken, and the flow moves to the Finalization stage.

- Finalization:

  - The workflow goes to aggregate (our aggregate_node), which combines all the collected summaries.

  - Finally, the process ends (END).

This diagram maps directly to the nodes and edges we defined in the Python script.
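
To see the loop run without a browser or an LLM, here is a toy simulation of the same flow in plain Python. It is only a sketch: the node bodies are fakes, the state keys position and page_chunks are invented for the demo, and the small run function stands in for the graph runtime (including a step limit that plays the role of a recursion limit).

```python
END = "END"


def take_screenshot(state):
    # Pretend each screenshot shows the chunk at the current scroll position.
    return {"current_ss": f"chunk-{state['position']}"}


def summarize_vision(state):
    return {"summaries": state["summaries"] + [f"summary of {state['current_ss']}"]}


def decide_scroll(state):
    # A fake "LLM decision": keep scrolling until the last chunk is reached.
    more = state["position"] < state["page_chunks"] - 1
    return {"scroll_decision": "yes" if more else "no"}


def scroll_down(state):
    return {"position": state["position"] + 1}


def aggregate(state):
    return {"final": " | ".join(state["summaries"])}


NODES = {
    "take_screenshot": take_screenshot,
    "summarize_vision": summarize_vision,
    "decide_scroll": decide_scroll,
    "scroll_down": scroll_down,
    "aggregate": aggregate,
}

# Fixed edges; the edge out of decide_scroll is conditional (see route).
EDGES = {
    "take_screenshot": "summarize_vision",
    "summarize_vision": "decide_scroll",
    "scroll_down": "take_screenshot",  # the cycle back into the loop
    "aggregate": END,
}


def route(state):
    return "scroll_down" if state["scroll_decision"] == "yes" else "aggregate"


def run(state, start="take_screenshot", step_limit=25):
    current, steps = start, 0
    while current != END:
        if steps >= step_limit:
            raise RuntimeError("step limit reached")
        state = {**state, **NODES[current](state)}  # merge the node's update
        steps += 1
        current = route(state) if current == "decide_scroll" else EDGES[current]
    return state, steps


final_state, steps = run({"position": 0, "page_chunks": 3, "summaries": []})
print(steps, final_state["final"])
```

With a three-chunk page, the loop body runs three times before the router sends the flow to aggregate; lowering step_limit below the number of node executions raises an error instead of looping indefinitely.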

Application Demo

To run this agent, you need:

- Python, installed with the necessary libraries (playwright, langchain, langgraph, google-generativeai, python-dotenv).

- Playwright browser binaries installed (playwright install).

- A .env file with your GOOGLE_API_KEY.

The graph is compiled using app = workflow.compile(). We then use app.astream(initial_state) within an asyncio loop to execute the graph and stream the state changes.

    async def run_graph():
        initial_state = {
            "messages": [],
            "url": None,
            "current_ss": [],
            "summaries": [],
            "scroll_decision": None,
            "task": "Give a brief overview of this Wikipedia page on Large Language Models."
        }

        print("\n--- Starting LangGraph Agent ---\n")

        try:
            # Stream allows us to see the graph execution step-by-step
            # recursion_limit prevents infinite loops during testing
            async for step in app.astream(initial_state, {"recursion_limit": 10}):
                step_name = list(step.keys())[0]
                print(f"\n--- Step: {step_name} ---")
                latest_state = step[step_name]

                # Print relevant info for demo
                if step_name == "summarizer":
                    if latest_state.get('summaries'):
                        latest_summary_message = latest_state['summaries'][-1]
                        if hasattr(latest_summary_message, 'content') and latest_summary_message.content:
                            print(">>> Individual Screenshot Summary:")
                            print(latest_summary_message.content)
                elif step_name == "decide_scroll":
                    decision = latest_state.get('scroll_decision')
                    print(f">>> Scroll Decision: {decision}")
                elif step_name == "aggregate":
                    # Find and print the final summary added to messages
                    ...  # (See full script for logic)
        except Exception as e:
            print(f"\n--- An error occurred: {e} ---")
        finally:
            await close_browser()  # Clean up Playwright browser

When the browser window is manually resized, the web content appears to be ‘cut off’, rendering only within a specific portion of the window.

This is not a rendering bug, but a fundamental aspect of how the browser automation tool, Playwright, works. When the agent launches the browser, it does so with a pre-defined viewport size (e.g., 1280×720 pixels). This viewport is the agent’s virtual screen—the exact rectangular area it uses for all its operations, including taking screenshots and calculating scroll distances. It ensures that the agent has a consistent and predictable canvas to work on.

When we manually resize the OS window, we are only changing the container frame. The agent’s internal viewport remains fixed. The empty white area at the bottom is simply the part of the window that falls outside the agent’s configured viewport. Consequently, the take_ss tool captures an image of this fixed viewport, which is why the screenshots accurately reflect this “cut-off” view.
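
Because the viewport is fixed, the number of screenshots needed to cover a page is simple arithmetic. The helper below is a hypothetical sketch that assumes the agent scrolls by exactly one viewport height each time, which the original script may or may not do.

```python
import math


def scroll_offsets(page_height: int, viewport_height: int = 720) -> list[int]:
    """Return the vertical offset of each screenshot needed to cover the page."""
    if page_height <= 0 or viewport_height <= 0:
        raise ValueError("heights must be positive")
    shots = math.ceil(page_height / viewport_height)
    return [i * viewport_height for i in range(shots)]


# A 2000px-tall page viewed through a 720px-tall viewport.
offsets = scroll_offsets(page_height=2000, viewport_height=720)
print(offsets)  # [0, 720, 1440]
```

Three screenshots cover this page, and the last one extends past the end of the content, which is one reason an agent may see repeated or partially empty views near the bottom.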

When you run this, you’ll observe the agent:

- Opening a browser window (if headless=False).

- Navigating to the URL.

- Taking the first screenshot.

- Printing the summary generated by the vision model for that initial view.

- Printing the LLM’s decision on whether to scroll.

- If it decides ‘yes’, you’ll see the scroll action happen, followed by another screenshot, summary, and decision cycle.

- Once it decides ‘no’, it proceeds to the aggregation step and prints the final combined summary.

The astream() method yields the output of each node, keyed by the node’s name, as that node completes. This lets you observe the agent’s progress step by step, inspect the state, and print specific outputs like the summaries or decisions. The flow demonstrates the graph’s execution, the state updates (screenshots, summaries), and the conditional routing based on the AI’s decisions.
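
The consumption pattern used in run_graph can be tried in isolation with a fake stream. In this sketch, fake_astream is a hypothetical async generator that only imitates the shape of the streamed steps (one dictionary per step, keyed by node name); it is not LangGraph's astream.

```python
import asyncio


async def fake_astream(node_outputs):
    """Yield one {node_name: update} dict per step, like a streamed graph run."""
    for name, update in node_outputs:
        await asyncio.sleep(0)  # hand control back to the event loop
        yield {name: update}


async def collect_steps():
    outputs = [
        ("summarizer", {"summaries": ["Intro section"]}),
        ("decide_scroll", {"scroll_decision": "no"}),
        ("aggregate", {"messages": ["Final overview"]}),
    ]
    seen = []
    async for step in fake_astream(outputs):
        step_name = next(iter(step))  # each step dict has a single key
        seen.append(step_name)
        print(f"--- Step: {step_name} --- {step[step_name]}")
    return seen


seen_steps = asyncio.run(collect_steps())
```

Reading the single key of each step is what lets the demo branch on the node name and print only the fields that node produced.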

Conclusion

LangGraph provides a powerful and intuitive way to design and build complex agent workflows. By modeling your application as a graph with stateful nodes and flexible edges, you can create agents capable of handling multi-step processes, making dynamic decisions, and performing iterative tasks like visually browsing and summarizing a webpage.

This example provides a foundation. You can expand this agent’s capabilities by adding tools for clicking, typing, and handling specific page elements, and by incorporating more sophisticated error handling and decision-making logic within your nodes and routers. Dive into the code, experiment with the concepts, and start building your own sophisticated agents with LangGraph!

References

- LangGraph Documentation: https://www.langchain.com/resources

- A special thanks to Vaibhav for his awesome video lecture on the freeCodeCamp YouTube channel.
