The rapid evolution of artificial intelligence, particularly large language models (LLMs), has unlocked unprecedented potential for generating human-like text, solving complex problems, and enhancing productivity across industries. Yet a persistent challenge remains: these models are often isolated from the real-time, dynamic data that powers modern workflows. Connecting AI to external systems, be it databases, APIs, or productivity tools, typically requires a custom integration for each pairing, creating a fragmented landscape that stifles scalability and innovation. The Model Context Protocol (MCP), an open standard introduced by Anthropic, aims to bridge this gap.

MCP promises to redefine how AI interacts with tools by offering a universal, standardized framework for seamless data integration. Much like how TCP/IP standardized network communication or ODBC transformed database connectivity, MCP aspires to become the backbone of an interconnected AI ecosystem.

This article describes the theoretical underpinnings, architecture, and transformative potential of MCP, exploring why it could mark a turning point in the evolution of intelligent systems.

- What is Model Context Protocol (MCP)?

- How Does MCP Work?

- Detailed Analysis of MCP (Model Context Protocol)

What is Model Context Protocol (MCP)?

The Model Context Protocol (MCP) is a framework designed to make it easier for AI models, especially large language models (LLMs), to connect with external data like files, databases, or APIs. It is like a universal plug that lets AI “talk” to different systems without needing custom setups for each one.

Developed by Anthropic, MCP is open-source, meaning anyone can use and improve it, and it aims to help AI give better, more relevant answers by accessing real-time data.

How Does MCP Work?

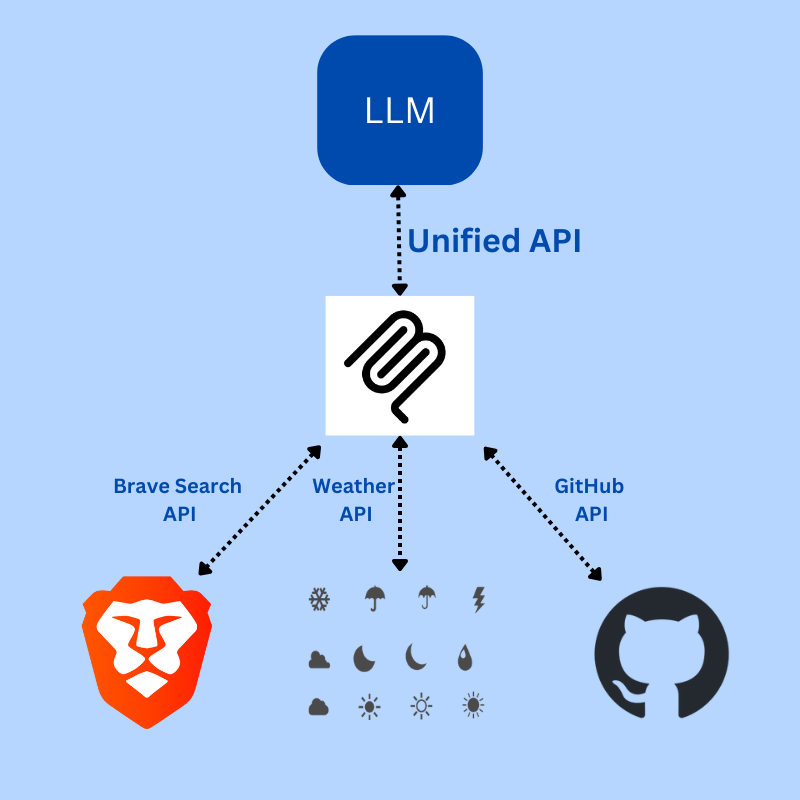

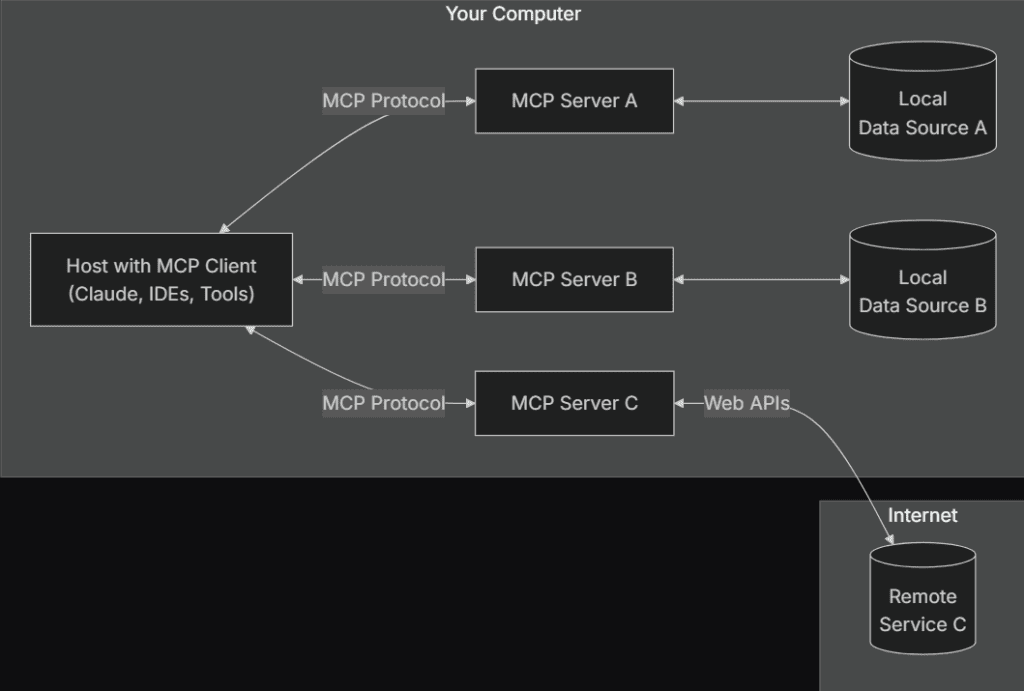

MCP operates on a client-server model. The AI application (the “host”, like a chat tool or IDE) uses MCP clients to connect to MCP servers, which are programs that expose specific capabilities, such as fetching data or running tools.

Communication happens via JSON-RPC 2.0, a standard way to send requests and get responses, making it flexible for both local and remote connections. For example, an AI assistant could use MCP to check your calendar for free slots, with the server handling the data fetch and the client passing it to the AI.
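To make the calendar example concrete, here is roughly what the two JSON-RPC 2.0 messages could look like. This is a sketch built with Python's standard `json` module, not output captured from a real server. The `tools/call` method and the `content` list in the result follow the MCP specification as we understand it; the tool name `find_free_slots`, its `date` argument, and the returned text are hypothetical.

```python
import json

# Client -> server: ask the server to run a tool.
# "find_free_slots" and its "date" argument are hypothetical; a real
# calendar server would advertise its own tools through "tools/list".
request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {
        "name": "find_free_slots",
        "arguments": {"date": "2025-03-14"},
    },
}

# Server -> client: the reply echoes the request id and carries a "result".
# Tool results are returned as a list of content items (plain text here).
response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "content": [{"type": "text", "text": "Free: 10:00-11:00, 14:30-15:00"}]
    },
}

wire = json.dumps(request)   # the string actually sent over stdio or HTTP
decoded = json.loads(wire)   # what the server parses on the other end
assert decoded["id"] == response["id"]  # replies are matched to requests by id
print(response["result"]["content"][0]["text"])
```

The shared `id` is what lets a client keep several requests in flight and still pair each reply with the question it answers.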

Detailed Analysis of MCP (Model Context Protocol)

MCP simplifies development by standardizing connections, making it easier to scale AI applications and switch between different AI models. It also builds in security principles such as explicit user consent and data privacy, which are crucial when sensitive data is involved.

Early adopters like Block and Apollo are already using it, showing its practical value in real-world tools.

What Problem Does MCP Solve?

MCP addresses the “M×N” integration problem: when M different LLM applications each need custom connectors to N different tools, the result is a combinatorial explosion of M×N bespoke integrations. With a single shared protocol, each application implements MCP once and each tool exposes one MCP server, reducing the effort to M+N.
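The arithmetic behind this claim is easy to check. The tiny function below compares the two counts; the figures of 5 applications and 20 tools are purely illustrative.

```python
def integrations_needed(models: int, tools: int) -> dict:
    """Compare connector counts with and without a shared protocol."""
    return {
        "custom": models * tools,    # every app/tool pair needs its own bridge
        "with_mcp": models + tools,  # each side implements the protocol once
    }


print(integrations_needed(5, 20))
# → {'custom': 100, 'with_mcp': 25}
```

The gap widens as either side grows: adding a 21st tool costs 5 new custom connectors in the first model, but only one new MCP server in the second.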

Its primary purpose is to enhance frontier models’ ability to produce relevant responses by breaking down information silos and legacy system barriers.

Because MCP is open source, with its specification and SDKs developed in public on GitHub, it is well positioned to become the next standard for connecting LLMs to tools.

Architecture of MCP

MCP operates on a client-server architecture, as detailed in the official Model Context Protocol Specification.

The architecture comprises three main components:

| Component | Description |
|---|---|
| Host Process | The main AI application (e.g., Claude Desktop, IDE) that manages MCP clients, controlling permissions, lifecycle, security, and context aggregation. |
| MCP Clients | Intermediaries within the host, each connecting to one MCP server, handling communication via JSON-RPC 2.0, ensuring security and sandboxing. |
| MCP Servers | Lightweight programs exposing capabilities (resources, prompts, tools) via MCP, supporting stdio for local and HTTP with Server-Sent Events (SSE) for remote communication. |

Communication uses JSON-RPC 2.0, and the specification notes that MCP's design takes inspiration from the Language Server Protocol. Messages come in four kinds (requests, results, errors, and notifications), which keeps interactions robust and predictable.
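The four message kinds can be told apart purely by which fields they carry. This is how JSON-RPC 2.0 itself defines them. The classifier below is a minimal sketch using only the standard library. The `-32601` code is JSON-RPC's standard "Method not found" error. `tools/list` and `notifications/initialized` are MCP method names used here only as sample payloads.

```python
import json


def classify(raw: str) -> str:
    """Label a JSON-RPC 2.0 message by the fields it carries."""
    msg = json.loads(raw)
    if msg.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 message")
    if "method" in msg:
        # Requests expect a reply, so they carry an id; notifications do not.
        return "request" if "id" in msg else "notification"
    if "error" in msg:
        return "error"
    if "result" in msg:
        return "result"
    raise ValueError("unrecognized message shape")


examples = [
    '{"jsonrpc": "2.0", "id": 7, "method": "tools/list"}',
    '{"jsonrpc": "2.0", "id": 7, "result": {"tools": []}}',
    '{"jsonrpc": "2.0", "id": 8, "error": {"code": -32601, "message": "Method not found"}}',
    '{"jsonrpc": "2.0", "method": "notifications/initialized"}',
]
for raw in examples:
    print(classify(raw))
# prints: request, result, error, notification (one per line)
```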

Key Components and Functionality

MCP servers offer three primary capabilities:

| Capability | Description |
|---|---|
| Resources | Data sources like files, database records, or API responses, providing context to the AI. |
| Prompts | Templated messages or workflows guiding AI responses or tasks, enhancing interaction. |
| Tools | Functions for AI execution, such as sending emails or interacting with services, enabling actionability. |
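A server routes each incoming request to one of these three capability types. The dispatcher below is a minimal sketch of that routing. The method names `resources/read`, `prompts/get`, and `tools/call` and the general shape of each result follow the MCP specification as we read it. The in-memory data, the `summarize` prompt, and the `send_email` stub are hypothetical. The stub only formats a string and sends nothing.

```python
from typing import Any, Callable, Dict

# Hypothetical in-memory content standing in for real files, templates, and services.
RESOURCES = {"file:///notes/todo.txt": "1. Draft MCP article\n2. Review PR"}
PROMPTS = {"summarize": "Summarize the following text in three bullet points:\n{text}"}
TOOLS: Dict[str, Callable[..., str]] = {
    # Stub: returns a message instead of actually sending an email.
    "send_email": lambda to, subject: f"queued email to {to}: {subject}",
}


def handle(method: str, params: Dict[str, Any]) -> Dict[str, Any]:
    """Route one request to the matching capability (resources, prompts, or tools)."""
    if method == "resources/read":
        # Resources: read-only context identified by a URI.
        uri = params["uri"]
        return {"contents": [{"uri": uri, "mimeType": "text/plain", "text": RESOURCES[uri]}]}
    if method == "prompts/get":
        # Prompts: a named template filled in with caller-supplied arguments.
        text = PROMPTS[params["name"]].format(**params.get("arguments", {}))
        return {"messages": [{"role": "user", "content": {"type": "text", "text": text}}]}
    if method == "tools/call":
        # Tools: functions that act on the world and report back.
        output = TOOLS[params["name"]](**params.get("arguments", {}))
        return {"content": [{"type": "text", "text": output}]}
    raise KeyError(f"unknown method {method}")


result = handle("tools/call", {"name": "send_email",
                               "arguments": {"to": "team@example.com", "subject": "Standup"}})
print(result["content"][0]["text"])  # queued email to team@example.com: Standup
```

The split mirrors the table. Resources are read, prompts are rendered, and only tools are expected to have side effects.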

Clients, in turn, can offer sampling, which lets a server request a completion from the host's LLM; this enables server-initiated agentic behaviors and recursive LLM interactions. Utilities such as configuration, progress tracking, cancellation, error reporting, and logging support smooth operation and management.
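Sampling reverses the usual direction: the server sends the request and the client answers it. The helpers below build a `sampling/createMessage` request and a `notifications/cancelled` notification, which is one of the utilities mentioned above. This is a sketch only. The method and field names (`messages`, `maxTokens`, `systemPrompt`, `requestId`, `reason`) reflect our reading of the MCP specification and should be checked against the current revision. The helper function names and example text are hypothetical.

```python
import json
from typing import Optional


def make_sampling_request(request_id: int, question: str, max_tokens: int = 200,
                          system_prompt: Optional[str] = None) -> dict:
    """Server -> client request asking the host's LLM to generate a reply."""
    params = {
        "messages": [{"role": "user", "content": {"type": "text", "text": question}}],
        "maxTokens": max_tokens,
    }
    if system_prompt is not None:
        params["systemPrompt"] = system_prompt
    return {"jsonrpc": "2.0", "id": request_id,
            "method": "sampling/createMessage", "params": params}


def make_cancel_notification(request_id: int, reason: str) -> dict:
    """Notification (no id, so no reply) asking the peer to stop work on a request."""
    return {"jsonrpc": "2.0", "method": "notifications/cancelled",
            "params": {"requestId": request_id, "reason": reason}}


req = make_sampling_request(42, "Summarize today's meetings.")
cancel = make_cancel_notification(42, "user closed the dialog")
print(json.dumps(req, indent=2))
print(json.dumps(cancel, indent=2))
```

Because the request travels through the client, the host stays in control. It can show the prompt to the user, pick the model, or refuse, which matches the sampling controls the security principles call for.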

Hypothetical Use Cases

To illustrate, consider an AI assistant scheduling a meeting by accessing a user’s calendar. The host (e.g., Claude Desktop) uses an MCP client to connect to an MCP server interfacing with the calendar service. The AI sends a request via the client, the server fetches and returns the data (e.g., free slots), and the AI uses this to generate a response.
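On the server side, the "fetch free slots" step comes down to ordinary code that the server exposes as a tool. The function below is a hypothetical implementation of such a tool, not part of any MCP SDK. It takes busy intervals from the calendar and returns gaps of at least `min_length` within the working day. The 09:00-17:00 day and the sample meetings are made up.

```python
from datetime import datetime, timedelta
from typing import List, Tuple

Interval = Tuple[datetime, datetime]


def free_slots(busy: List[Interval], day_start: datetime, day_end: datetime,
               min_length: timedelta = timedelta(minutes=30)) -> List[Interval]:
    """Return gaps of at least `min_length` between busy intervals within the day."""
    slots: List[Interval] = []
    cursor = day_start  # earliest moment not yet covered by a meeting
    for start, end in sorted(busy):
        gap_end = min(start, day_end)  # never extend a slot past the end of the day
        if gap_end - cursor >= min_length:
            slots.append((cursor, gap_end))
        cursor = max(cursor, end)      # overlapping meetings only push the cursor forward
    if day_end - cursor >= min_length:
        slots.append((cursor, day_end))
    return slots


day = datetime(2025, 3, 14)
busy = [
    (day.replace(hour=9), day.replace(hour=10)),
    (day.replace(hour=11), day.replace(hour=14, minute=30)),
]
slots = free_slots(busy, day.replace(hour=9), day.replace(hour=17))
for s, e in slots:
    print(f"{s:%H:%M}-{e:%H:%M}")
# 10:00-11:00
# 14:30-17:00
```

The server would wrap this result in a text content item and return it to the client, and the model would then phrase the final answer for the user.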

Another example is an AI in a medical setting accessing patient records securely. The MCP server ensures user consent is obtained, data is encrypted, and access is logged, aligning with MCP’s security principles of user control, data privacy, tool safety, and LLM sampling controls.
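The consent and logging parts of this scenario can be sketched as a thin wrapper around tool calls. The class below is hypothetical and deliberately simple. Every call is recorded in an audit trail, and any call without prior approval for that user/tool pair is refused. Encryption is out of scope here. Whether such a check lives in the host or in the server is a design decision this sketch does not settle.

```python
import logging
from datetime import datetime, timezone
from typing import Callable, Dict, List, Set, Tuple

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("mcp-audit")


class ConsentRequired(Exception):
    """Raised when a tool is called without the user's prior approval."""


class GuardedTools:
    """Wrap tool functions so each call needs consent and leaves an audit entry."""

    def __init__(self, tools: Dict[str, Callable[..., str]]) -> None:
        self.tools = tools
        self.consented: Set[Tuple[str, str]] = set()
        self.audit: List[dict] = []

    def grant(self, user: str, tool: str) -> None:
        self.consented.add((user, tool))

    def call(self, user: str, tool: str, **arguments) -> str:
        allowed = (user, tool) in self.consented
        # Log every attempt, including refused ones, before doing anything else.
        self.audit.append({"time": datetime.now(timezone.utc).isoformat(),
                           "user": user, "tool": tool, "allowed": allowed})
        log.info("user=%s tool=%s allowed=%s", user, tool, allowed)
        if not allowed:
            raise ConsentRequired(f"{user} has not approved {tool}")
        return self.tools[tool](**arguments)


guard = GuardedTools({"read_record": lambda patient_id: f"record {patient_id} (redacted)"})
try:
    guard.call("dr_lee", "read_record", patient_id="P-001")
except ConsentRequired as exc:
    print("blocked:", exc)
guard.grant("dr_lee", "read_record")
print(guard.call("dr_lee", "read_record", patient_id="P-001"))
```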

Benefits of MCP

MCP’s standardization reduces integration complexity. It enhances scalability, allowing easy addition of new data sources via MCP servers, and is model-agnostic, facilitating switching between LLM providers.

MCP provides SDKs in Python, TypeScript, Java, and Kotlin. Early adopters such as Block, Apollo, Zed, Replit, Codeium, and Sourcegraph, mentioned in Anthropic's announcement, demonstrate practical adoption.

MCP Adoption

MCP’s versatility is evident in use cases across domains:

| Domain | Examples |
|---|---|
| Productivity Tools | Managing files, emails, calendars, and collaboration platforms like Slack. |
| Development | Interacting with Git, GitHub, and databases for coding, debugging, and deployment. |
| Customer Support | Retrieving CRM data, creating tickets, and updating records via AI chatbots. |
| Research/Analysis | Querying databases and fetching API data for insights and reports. |

Pre-built MCP servers are already available for Google Drive, Slack, GitHub, Git, Postgres, and Puppeteer, easing integration.

Conclusion

Model Context Protocol (MCP) represents a significant leap forward in AI integration, addressing long-standing challenges of interoperability, scalability, and security. By providing a standardized, open-source framework, MCP simplifies the process of connecting LLMs to external data sources, tools, and workflows, eliminating the need for complex, one-off integrations.

As AI continues to evolve, the ability to seamlessly interact with dynamic, real-world data will be crucial for building truly intelligent and responsive applications. MCP lays the foundation for a more connected AI ecosystem, empowering developers to create smarter, more capable AI systems with minimal friction. With growing adoption and ongoing innovation, MCP has the potential to become the industry standard for AI tool integration, much like TCP/IP did for networking.
