In Computer Vision, the term “image segmentation” or simply “segmentation” refers to dividing the image into groups of pixels based on some criteria.

A segmentation algorithm takes an image as input and outputs a collection of regions (or segments), which can be represented as either of the following:

- A collection of contours, as shown in Figure 1.

- A mask (either grayscale or color) where each segment is assigned a unique grayscale value or color to identify it. An example is shown in Figure 2.
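To make the two representations concrete, here is a minimal NumPy sketch. The toy label values, the palette, and the helper names `boundary_pixels` and `colorize` are our own assumptions for illustration; a real pipeline would more likely use a library routine to trace contours.

```python
import numpy as np

# Toy label mask: each integer identifies one segment (values are made up).
labels = np.array([
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [2, 2, 1, 1],
    [2, 2, 2, 2],
])


def boundary_pixels(labels):
    """Mark pixels whose right or bottom neighbor belongs to another segment."""
    edges = np.zeros(labels.shape, dtype=bool)
    edges[:, :-1] |= labels[:, :-1] != labels[:, 1:]   # compare with right neighbor
    edges[:-1, :] |= labels[:-1, :] != labels[1:, :]   # compare with bottom neighbor
    return edges


def colorize(labels, palette):
    """Turn a label mask into a color mask by looking up each label in a palette."""
    return palette[labels]


# Hypothetical palette: one RGB color per segment label.
palette = np.array([[255, 0, 0], [0, 255, 0], [0, 0, 255]], dtype=np.uint8)

edges = boundary_pixels(labels)        # contour-style representation
color_mask = colorize(labels, palette)  # mask-style representation
```

Both outputs carry the same information. The boundary map tells us where segments meet, while the color mask tells us which segment each pixel belongs to.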

The problem of image segmentation has been approached in many different ways. Sometimes it is posed as a graph partitioning problem. At other times it is posed as an energy minimization problem in a variational framework, and at still other times it is formulated as the solution to a partial differential equation. If the last few lines felt like mumbo-jumbo to you, do not worry. Those are the only lines in this post that sound like that!

In this post, we will not go into specific techniques. We will focus on a few basic definitions in image segmentation that can be a bit confusing for beginners.

What are Superpixels?

When we group pixels based on color, texture, or other low-level primitives, we call these perceptual groups superpixels, a term popularized by Ren and Malik (2003).

The output of a superpixel algorithm is shown using an animation in Figure 1. Notice the segmentation algorithm is simply grouping pixels of similar color and texture. It is not attempting to group parts of the same object together.
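The idea of grouping pixels by low-level similarity can be sketched with plain k-means on intensity and position. This is only an illustration under our own assumptions: it is not the method of Ren and Malik or any production superpixel algorithm, and the function name `toy_superpixels` and its parameters are hypothetical.

```python
import numpy as np


def toy_superpixels(image, grid=2, n_iters=5, spatial_weight=0.5):
    """Cluster pixels of a 2-D grayscale image on (intensity, row, col) features.

    Returns an integer label map with the same shape as the image.
    """
    h, w = image.shape
    rows, cols = np.mgrid[0:h, 0:w]
    # One feature vector per pixel: intensity plus down-weighted position.
    feats = np.stack(
        [
            image.ravel(),
            spatial_weight * rows.ravel() / h,
            spatial_weight * cols.ravel() / w,
        ],
        axis=1,
    ).astype(float)

    # Seed the cluster centers on a regular grid of pixels.
    seed_r = ((np.arange(grid) + 0.5) * h / grid).astype(int)
    seed_c = ((np.arange(grid) + 0.5) * w / grid).astype(int)
    seed_idx = (seed_r[:, None] * w + seed_c[None, :]).ravel()
    centers = feats[seed_idx].copy()

    for _ in range(n_iters):
        # Assign every pixel to its nearest center in feature space.
        dists = ((feats[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        assign = dists.argmin(axis=1)
        # Move each center to the mean of the pixels assigned to it.
        for k in range(len(centers)):
            members = feats[assign == k]
            if len(members):
                centers[k] = members.mean(axis=0)

    return assign.reshape(h, w)


# Toy image: dark left half, bright right half.
image = np.zeros((8, 8))
image[:, 4:] = 1.0
labels = toy_superpixels(image)
```

On this toy image no group straddles the dark and bright halves, yet each half is still split into more than one group. That is the behavior described above: the grouping follows color and position, not objects.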

What is Semantic Segmentation?

In Semantic Segmentation, the goal is to assign a label (car, building, person, road, sidewalk, sky, trees, etc.) to every pixel in the image.

Figure 2 shows the result of Semantic Segmentation. The people in the mask are represented using red pixels, the grass is colored light green, the trees are coded dark green and the sky is coded blue.

We can say which pixels belong to the class “person” by simply checking if the mask color is red at that pixel, but we cannot say if two red colored mask pixels belong to the same person or to different ones.
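The color check described above can be sketched as follows. The exact RGB values and the helper name `class_pixels` are assumptions made for illustration; they only mirror the colors described for Figure 2.

```python
import numpy as np

# Hypothetical palette mirroring the colors described for Figure 2 (RGB).
PALETTE = {
    "person": (255, 0, 0),
    "grass": (144, 238, 144),
    "trees": (0, 100, 0),
    "sky": (0, 0, 255),
}


def class_pixels(color_mask, class_name):
    """Return a boolean map of pixels whose mask color matches the class color."""
    color = np.array(PALETTE[class_name], dtype=color_mask.dtype)
    return np.all(color_mask == color, axis=-1)


sky, red, grass = PALETTE["sky"], PALETTE["person"], PALETTE["grass"]

# Toy 2x4 semantic mask with two separate red columns.
mask = np.array(
    [
        [sky, red, sky, red],
        [grass, red, grass, red],
    ],
    dtype=np.uint8,
)

person = class_pixels(mask, "person")
```

The two red columns may well be two different people, but `person` is a single boolean map. Nothing in a semantic mask records which red pixel belongs to which person.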

What is Instance Segmentation?

Instance Segmentation is a concept closely related to Object Detection. However, unlike Object Detection, the output is a mask (or contour) containing the object instead of a bounding box. Unlike Semantic Segmentation, we do not label every pixel in the image; we are interested only in finding the boundaries of specific objects.

Figure 3 shows an example output of an Instance Segmentation algorithm called Mask R-CNN, which we have covered in a separate post. We see the mask for every person is colored differently so we can tell them apart. However, not every pixel has a class label associated with it.
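One way to picture this kind of output is as a list of per-object binary masks. The sketch below uses a made-up data layout (a list of dictionaries with `label` and `mask` keys) and a hypothetical helper `instance_id_map`; it is not the output format of Mask R-CNN or of any particular library.

```python
import numpy as np

h, w = 4, 6

# Hypothetical detector output: one binary mask per detected object.
mask_a = np.zeros((h, w), dtype=bool)
mask_a[1:4, 0:2] = True
mask_b = np.zeros((h, w), dtype=bool)
mask_b[1:4, 4:6] = True

instances = [
    {"label": "person", "mask": mask_a},
    {"label": "person", "mask": mask_b},
]


def instance_id_map(instances, shape):
    """Build an id map where 0 means 'no instance' and k means the k-th instance."""
    ids = np.zeros(shape, dtype=np.int32)
    for k, inst in enumerate(instances, start=1):
        ids[inst["mask"]] = k
    return ids


ids = instance_id_map(instances, (h, w))

# Fraction of pixels that belong to no instance at all.
unlabeled_fraction = float((ids == 0).mean())
```

Both objects share the class "person" but get different ids, so we can tell them apart. Half of the pixels in this toy example end up with id 0, which reflects the point above that not every pixel receives a label.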

What is Panoptic Segmentation?

Panoptic segmentation is the combination of Semantic segmentation and Instance Segmentation. Every pixel is assigned a class (e.g. person), but if there are multiple instances of a class, we know which pixel belongs to which instance of the class.

You can see an example in Figure 4. Every pixel has a distinct color-coded label. For example, the sky is coded blue, the trees are coded dark green, the grass is coded light green, and people are colored different shades of yellow, red and purple. The colors yellow and red both point to the same class (person) but to different instances of that class. We can tell different people apart by looking at the mask color.
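A panoptic label can be sketched as a class id and an instance id packed into a single number per pixel. The class ids, the `OFFSET` of 1000, and the `decode` helper below are assumptions chosen for illustration, not a format defined in this post.

```python
import numpy as np

# Hypothetical class ids and packing offset, chosen only for this example.
CLASS_IDS = {"sky": 0, "grass": 1, "person": 2}
OFFSET = 1000

# Semantic map: a class id for every pixel.
semantic = np.array([
    [0, 0, 0, 0],
    [2, 2, 2, 2],
    [1, 1, 1, 1],
])

# Instance map: 0 for classes without instances, 1..N for individual objects.
instance = np.array([
    [0, 0, 0, 0],
    [1, 1, 2, 2],
    [0, 0, 0, 0],
])

# Panoptic map: every pixel gets both a class and an instance in one value.
panoptic = semantic * OFFSET + instance


def decode(panoptic_id):
    """Split a packed panoptic id back into (class_id, instance_id)."""
    return panoptic_id // OFFSET, panoptic_id % OFFSET
```

Here the two people in the middle row share class id 2 but have different instance ids, while sky and grass pixels carry a class with instance id 0. Every pixel is labeled, and instances of the same class remain distinguishable.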

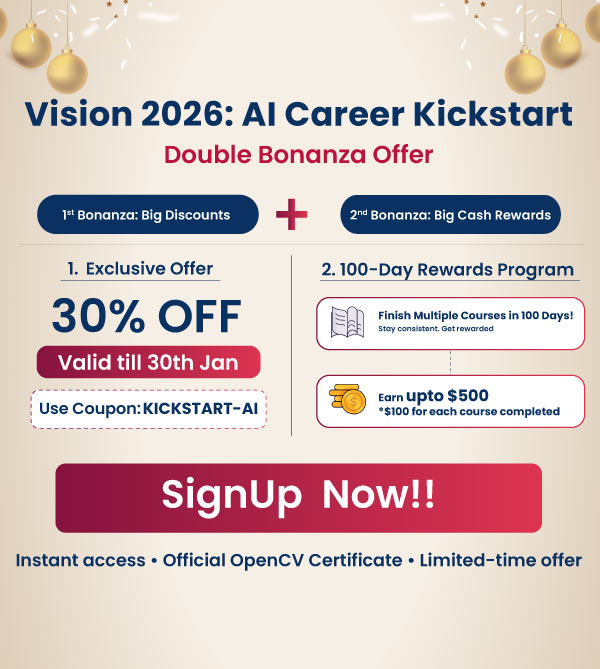

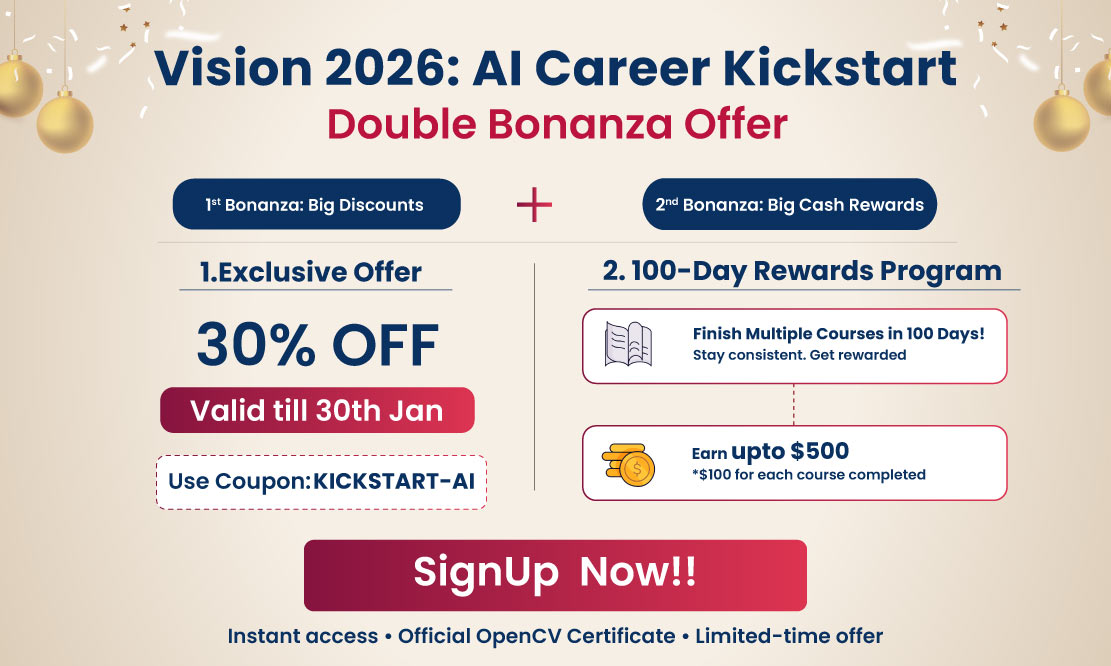
