- Introduction

- Jensen’s Keynote

- Bringing AI to functional safety [A41108]

- How to Build a Digital Twin [A41384]

- Honorable mentions

- Summary

1. Introduction

What do the RTX 4090 GPU, the Jetson Orin Nano, functional safety in self-driving cars and the Grace CPU have in common? Not much, except that they were all announced in the last couple of days of the recently concluded NVIDIA GTC conference. In this post, we unpack the latest AI announcements and technical talks from NVIDIA, including the flagship keynote. This is a follow-up to our coverage of Day 1 of GTC, which can be found here.

NVIDIA GTC 2022 : The most important AI event this Fall

As usual, we strongly recommend that you register for GTC yourself and watch the replays of these talks. This is one of those events you really shouldn't miss. As long as registration is open, you also stand a chance to win an RTX 3080 Ti GPU.

2. Jensen’s Keynote

Let’s begin with the big one. NVIDIA CEO Jensen Huang made several major announcements at GTC.

2.1 GeForce RTX 4090 GPU

It is no secret that the RTX 3090 and 3090 Ti GPUs are extremely popular among small startups and academic researchers. While RTX-class gaming GPUs are not big enough to train huge language models, they certainly pack a punch for training or fine-tuning production-grade computer vision models for edge AI and robotics.

In March of this year, we covered the features of the H100 datacenter GPU, which is based on the Hopper architecture. The RTX 4090 GPU is based on the Ada Lovelace architecture, the consumer graphics counterpart of Hopper. Accordingly, the 4090 (and the RTX 4080) feature fourth-generation tensor cores and transformer engines, and support the FP8 data type. According to NVIDIA, the new tensor cores deliver up to 5 times the throughput of the RTX 3090, which was based on the Ampere architecture. The 4090 has 16384 CUDA cores, a boost clock of up to 2.5 GHz and 24 GB of GDDR6X memory, and will be available from October 12th. You can find more details about the 4090 and the Lovelace architecture here.
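To put the core count and clock speed above into perspective, here is a back-of-the-envelope sketch of theoretical peak FP32 throughput. It assumes the common convention that each CUDA core can retire one fused multiply-add (counted as 2 floating point operations) per clock cycle, and it uses the rounded 2.5 GHz boost clock quoted above, so treat the result as an approximate ceiling rather than an official NVIDIA figure. Tensor core throughput (including FP8) follows different rules and is not covered by this formula.

```python
def peak_fp32_tflops(cuda_cores: int, boost_clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes each CUDA core retires one fused multiply-add (FMA) per clock
    cycle and counts every FMA as 2 floating point operations.
    """
    flops_per_clock = cuda_cores * 2  # 1 FMA = 1 multiply + 1 add
    flops_per_second = flops_per_clock * boost_clock_ghz * 1e9
    return flops_per_second / 1e12


if __name__ == "__main__":
    # RTX 4090 figures quoted above: 16384 CUDA cores, ~2.5 GHz boost clock.
    print(f"RTX 4090 peak FP32: {peak_fp32_tflops(16384, 2.5):.2f} TFLOPS")
```

With the figures above this works out to roughly 82 TFLOPS of FP32. Real workloads reach only a fraction of this theoretical peak because of memory bandwidth, occupancy and instruction mix.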

2.2 Jetson Orin Nano

In a surprise move, Jensen announced a smaller version of Jetson Orin called Jetson Orin Nano. Unlike consumer graphics cards, the Jetson series has a long-term roadmap that is publicly shared with customers. Until recently, the next product in the Jetson family was supposed to be Jetson Orin NX, but the Orin Nano appears to be stepping into the shoes of the Xavier NX.

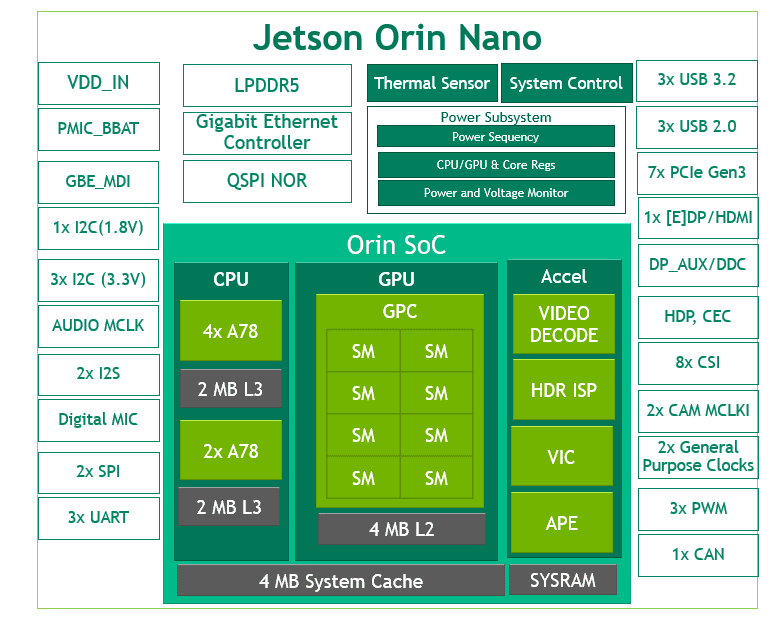

The Orin Nano’s GPU is based on the Ampere architecture. The full SoC has 6 CPU cores, and its GPU has 8 SMs, 1024 CUDA cores and 32 third-generation tensor cores. If concepts like SMs and tensor cores are unfamiliar, please refer to our earlier posts here and here, which explain them in detail.

There are 2 variants of the Orin Nano, with 4 GB and 8 GB of RAM; the 8 GB variant is set to exceed the performance of the previous-generation Xavier NX module. An NVIDIA representative confirmed to us that, at this point, there are no plans to sell Orin Nano developer kits to hobbyists, as was done for the Jetson Nano and Xavier NX. For now, NVIDIA only plans to sell the modules themselves (pictured above) for integration into commercial products. You can find out more about the Orin Nano here.

2.3 NVIDIA IGX

When robots move things around a factory, the people working near them need to be sure that the robots are operating safely. Hardware malfunctions are a very real possibility and could be fatal on factory floors and in warehouses. The same is true of medical devices, where a hardware malfunction could quickly lead to patient harm.

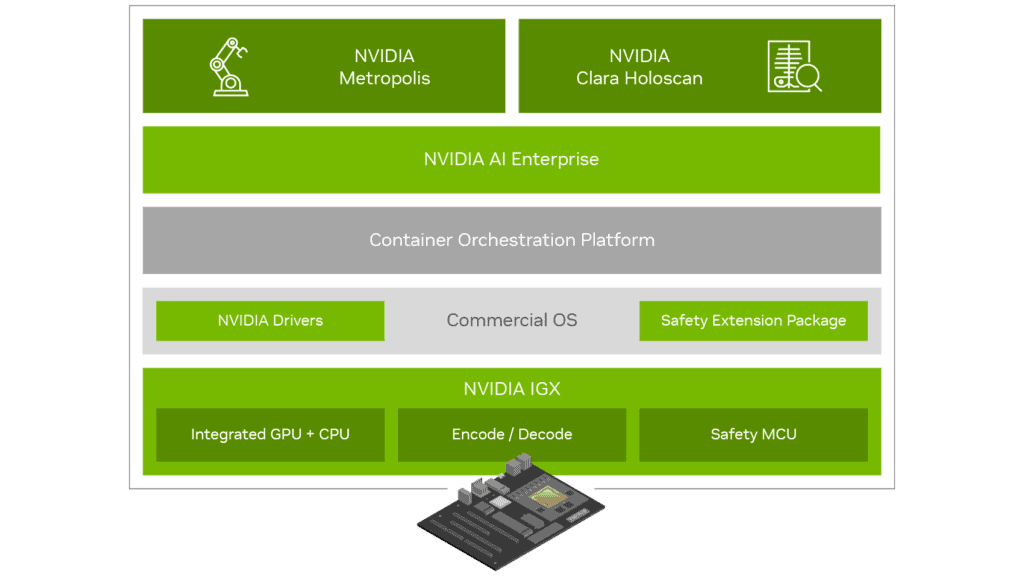

We have previously covered the safety aspects of developing products based on NVIDIA’s Jetson platform. NVIDIA IGX is essentially a professional version of the Jetson series that brings secure hardware to industrial robots and medical devices. Jensen mentioned that the key premise of IGX’s safety is redundancy: there are 2 copies of the same hardware running exactly the same software.
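The redundancy idea can be illustrated with a toy dual-channel comparator: the same computation runs on two channels, and any disagreement forces the system into a predefined safe state. This is a minimal Python sketch of the general pattern, not NVIDIA’s IGX implementation (real lockstep designs typically compare results in hardware). The control law, function names and tolerance below are hypothetical.

```python
from typing import Callable, Sequence, Tuple

Output = Tuple[float, ...]


def dual_channel(
    channel_a: Callable[[Sequence[float]], Sequence[float]],
    channel_b: Callable[[Sequence[float]], Sequence[float]],
    inputs: Sequence[float],
    safe_output: Sequence[float],
    tolerance: float = 1e-6,
) -> Tuple[bool, Output]:
    """Run the same computation on two channels and compare the results.

    Returns (True, output) if both channels agree element-wise within
    `tolerance`; otherwise returns (False, safe_output) so that the caller
    can drive the machine into a safe state.
    """
    out_a = tuple(channel_a(inputs))
    out_b = tuple(channel_b(inputs))
    agree = len(out_a) == len(out_b) and all(
        abs(a - b) <= tolerance for a, b in zip(out_a, out_b)
    )
    return (True, out_a) if agree else (False, tuple(safe_output))


def velocity_command(sensor: Sequence[float]) -> Sequence[float]:
    """Hypothetical control law: scale speed down as an obstacle gets closer."""
    distance_m = sensor[0]
    return (min(1.0, distance_m / 5.0),)


def faulty_velocity_command(sensor: Sequence[float]) -> Sequence[float]:
    """Same control law with a simulated random hardware fault on one channel."""
    return (velocity_command(sensor)[0] + 0.5,)


if __name__ == "__main__":
    STOP = (0.0,)
    print(dual_channel(velocity_command, velocity_command, [2.0], STOP))
    print(dual_channel(velocity_command, faulty_velocity_command, [2.0], STOP))
```

Note that redundancy with 2 channels can only detect a fault, not decide which channel is right, which is why the mismatch case falls back to a safe state instead of picking one output.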

In addition, IGX will feature safety extensions for Orin SoCs and a dedicated safety microcontroller unit (sMCU). This is typically a Cortex-R class microcontroller, which ensures that safety-related events can be handled within hard real-time constraints, regardless of the state of the scheduler or interrupt handlers of the Linux kernel running on the main SoC. Although details are scarce for now, IGX hardware and software will be certified for functional safety, and it is reasonable to assume that they will conform to the ISO 26262 or IEC 61508 standards. You can find out more about IGX here.
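One common job for a safety MCU is to act as an independent watchdog: the main SoC must send a heartbeat within a deadline, and if it misses one (for example because the Linux kernel has hung), the MCU latches a safe state on its own. The sketch below models that generic pattern in Python with explicit timestamps. The class, the 50 ms deadline and the latching behavior are illustrative assumptions, not details of IGX; real sMCU firmware would typically be written in C against a hardware timer.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class HeartbeatMonitor:
    """Toy model of a safety MCU watching heartbeats from the main SoC.

    Timestamps are passed in explicitly (in milliseconds) so the logic is
    deterministic; real firmware would read a hardware timer instead.
    """

    deadline_ms: float
    last_beat_ms: float = 0.0
    safe_state: bool = False
    events: List[str] = field(default_factory=list)

    def heartbeat(self, now_ms: float) -> None:
        """Record a heartbeat; a late beat cannot clear a latched safe state."""
        if not self.safe_state:
            self.last_beat_ms = now_ms

    def tick(self, now_ms: float) -> bool:
        """Periodic check, independent of the SoC; returns True while in safe state."""
        if not self.safe_state and now_ms - self.last_beat_ms > self.deadline_ms:
            # Latch the safe state (e.g. cut motor power) until a supervised reset.
            self.safe_state = True
            self.events.append(f"heartbeat deadline missed at {now_ms} ms")
        return self.safe_state


if __name__ == "__main__":
    monitor = HeartbeatMonitor(deadline_ms=50.0)
    for t in (0.0, 20.0, 40.0):  # healthy SoC: a heartbeat every 20 ms
        monitor.heartbeat(t)
        print(monitor.tick(t))   # False
    print(monitor.tick(80.0))    # 40 ms since last beat: still False
    print(monitor.tick(100.0))   # 60 ms since last beat: True
    print(monitor.events)
```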

2.4 Omniverse: Metaverse for Robots

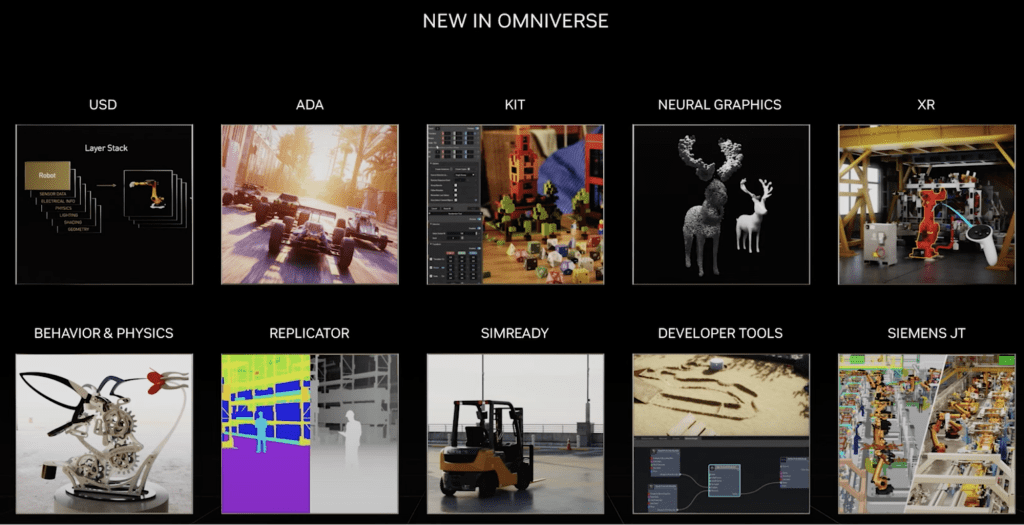

We explained the basics of Omniverse in our Spring GTC coverage. In short, Omniverse is a platform for building simulations of complex 3D environments with rigid multi-body dynamics and ray-traced rendering. One of its most common industrial use cases is generating synthetic datasets for training computer vision models. NVIDIA announced many updates to Omniverse:

- Support for Ada Lovelace GPUs.

- New rendering tools based on GANs and diffusion models.

- OmniGraph: a graph execution engine for procedurally controlling the behavior of objects in a simulation through visual programming.

- SimReady asset library which can be used for synthetic data generation and digital twins.

- Omniverse Replicator app for synthetic data generation (we covered SimReady and Replicator in our Day 1 Highlights coverage).
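The core idea behind a graph execution engine like OmniGraph, a graph of nodes where each node runs once its inputs are available, can be sketched as a tiny dataflow evaluator. The snippet below is a hypothetical illustration built on Python’s standard `graphlib` module (Python 3.9+); it does not use or mimic the actual OmniGraph API, and the door-opening nodes are made up for the example.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Tuple

# A node is a function plus the names of the nodes that feed its inputs.
Node = Tuple[Callable[..., float], List[str]]


def evaluate(graph: Dict[str, Node]) -> Dict[str, float]:
    """Evaluate every node once, after all of its inputs are computed."""
    dependencies = {name: set(inputs) for name, (_, inputs) in graph.items()}
    values: Dict[str, float] = {}
    # static_order() yields nodes so that predecessors always come first
    # (and raises graphlib.CycleError if the graph contains a cycle).
    for name in TopologicalSorter(dependencies).static_order():
        fn, inputs = graph[name]
        values[name] = fn(*(values[i] for i in inputs))
    return values


# Hypothetical per-frame graph: a door swings open at a fixed angular speed.
EXAMPLE_GRAPH: Dict[str, Node] = {
    "sim_time_s": (lambda: 1.5, []),
    "speed_deg_per_s": (lambda: 60.0, []),
    "door_angle_deg": (
        lambda t, w: min(t * w, 90.0),
        ["sim_time_s", "speed_deg_per_s"],
    ),
    "door_is_open": (lambda angle: float(angle >= 90.0), ["door_angle_deg"]),
}

if __name__ == "__main__":
    print(evaluate(EXAMPLE_GRAPH))
```

Because evaluation order comes from the dependency structure rather than from the order in which nodes were declared, nodes can be added or rewired freely, which is what makes this style of engine a good fit for visual programming.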

With these updates, NVIDIA is positioning Omniverse as the platform for the industrial metaverse, where you can create complex 3D simulations for any industry.

2.5 NVIDIA Drive Thor

Thor is the latest SoC announced by NVIDIA for self-driving vehicle applications. Here are the things you should know about Thor:

- It is based on the Hopper/Ada architecture, with fourth-generation tensor cores and a transformer engine.

- Thor delivers up to 2000 teraflops of 8-bit floating point (FP8) performance.

- The CPU in Thor will be the Grace CPU, also designed by NVIDIA (yes, NVIDIA is known for its GPUs, but in this case it designed the CPU too!).

- Thor can run multiple operating systems on the same SoC, so a self-driving car could run Linux for the driving modules and Android for in-car infotainment systems.
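To make "8-bit floating point" concrete: NVIDIA’s FP8-capable tensor cores support two FP8 formats, E4M3 and E5M2. The sketch below decodes an E4M3 byte (1 sign bit, 4 exponent bits with a bias of 7, 3 mantissa bits) following the convention described in the FP8 format proposal published by NVIDIA, Arm and Intel, in which E4M3 has no infinities and reserves the all-ones exponent and mantissa pattern for NaN. It illustrates the format only and is not code taken from any NVIDIA library.

```python
import math


def decode_e4m3(byte: int) -> float:
    """Decode one FP8 E4M3 value: 1 sign bit, 4 exponent bits (bias 7), 3 mantissa bits.

    Follows the E4M3 convention with no infinities, where exponent and
    mantissa bits all set to 1 encode NaN.
    """
    if not 0 <= byte <= 0xFF:
        raise ValueError("expected an 8-bit value")
    sign = -1.0 if byte & 0x80 else 1.0
    exponent = (byte >> 3) & 0x0F
    mantissa = byte & 0x07
    if exponent == 0x0F and mantissa == 0x07:
        return math.nan
    if exponent == 0:
        # Subnormal: no implicit leading 1, fixed exponent of 1 - bias = -6.
        return sign * (mantissa / 8) * 2.0 ** -6
    # Normal: implicit leading 1.
    return sign * (1 + mantissa / 8) * 2.0 ** (exponent - 7)


if __name__ == "__main__":
    for b in (0x38, 0x7E, 0x01, 0x7F):
        print(f"0x{b:02X} -> {decode_e4m3(b)}")
```

With this encoding, the largest finite E4M3 value is 448 and the smallest positive subnormal is 2^-9, a very narrow range compared with FP16 or FP32. This is why FP8 training relies on scaling factors to keep tensors within range.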

3. Bringing AI to functional safety [A41108]

In this talk, Riccardo Mariani gave a comprehensive overview of NVIDIA’s approach to functional safety, or FUSA. FUSA is a systems-level approach to the safety of autonomous machines. As industries prepare to deploy millions of robots across diverse applications, there are multiple sources of faults that a robot may encounter:

- Systematic faults in hardware or software, such as software bugs or hardware misbehavior in edge cases, or

- Random hardware faults caused by radiation, electromigration (the gradual displacement of metal atoms in a chip’s interconnects, typically caused by excessive current density) or interference from the external environment.

Systematic faults should be avoided through careful hardware and software design and by improving the design process itself. Random faults, on the other hand, cannot be avoided entirely, so the focus shifts to detecting them and remaining robust through safety mechanisms and redundancy. Riccardo explained the basics of the IEC 61508 and ISO 13849 standards, including their safety integrity levels (SIL) and performance levels (PL), which play a role similar to the ASIL levels of the automotive standard ISO 26262.
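As a simple example of a safety mechanism that detects (rather than prevents) random faults, data can be stored with a checksum that is verified before every use. The sketch below uses CRC-32 from Python’s `zlib` module, which is guaranteed to catch any single-bit error, and simulates a radiation-induced bit flip in a hypothetical calibration record. It only illustrates the detection principle; the talk did not prescribe this technique, and production hardware relies on mechanisms such as ECC memory and lockstep cores.

```python
import zlib
from typing import Tuple


def protect(block: bytes) -> Tuple[bytes, int]:
    """Store a CRC-32 alongside the data when it is written."""
    return block, zlib.crc32(block)


def is_intact(block: bytes, stored_crc: int) -> bool:
    """Safety mechanism: recompute the CRC before the data is used."""
    return zlib.crc32(block) == stored_crc


def flip_bit(block: bytes, bit_index: int) -> bytes:
    """Simulate a random hardware fault such as a radiation-induced bit flip."""
    data = bytearray(block)
    data[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(data)


if __name__ == "__main__":
    calib, crc = protect(b"wheel_radius=0.35;max_speed=2.0")
    print(is_intact(calib, crc))                # True
    print(is_intact(flip_bit(calib, 42), crc))  # False: fault detected
```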

The next few minutes were spent explaining ISO DTR 5469, which takes a completely new approach to FUSA: it seeks to use AI to enhance functional safety, and it also outlines approaches to FUSA for safety-critical products that use AI. Here, we want to emphasize that most AI models, particularly deep neural networks, cannot be rigorously safety certified. For example, consider the simple ResNet-50 image classification model. Because of the high dimensionality of its input space and parameter space, there is no way to rigorously establish which images will be classified correctly. Now imagine a self-driving car running an object detection network. The network can, in principle, produce any arbitrary output, such as detecting a wall in the middle of the road where none exists (provided the wall class exists in the training data 🙂). In the absence of external safety mechanisms, such false detections could easily jeopardize the safety of equipment or people.
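One way to safeguard a detector with an external non-AI mechanism is a plausibility gate: a detection is only acted upon if it persists over several frames and is confirmed by an independent sensor. The class below is a hypothetical sketch of that idea (the 3-frame window, 1 m tolerance and range sensor are assumptions), not a method prescribed by ISO DTR 5469 or used by NVIDIA.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Optional


@dataclass
class PlausibilityMonitor:
    """Non-AI gate placed after an object detector (illustrative only).

    A detection is passed on only if it (a) agrees with an independent range
    sensor to within `range_tolerance_m` and (b) has done so for
    `min_frames` consecutive frames.
    """

    min_frames: int = 3
    range_tolerance_m: float = 1.0
    history: Deque[bool] = field(init=False, repr=False, default_factory=deque)

    def __post_init__(self) -> None:
        # Only the most recent `min_frames` checks are kept.
        self.history = deque(maxlen=self.min_frames)

    def update(
        self,
        detected: bool,
        detected_range_m: Optional[float],
        sensor_range_m: Optional[float],
    ) -> bool:
        consistent = (
            detected
            and detected_range_m is not None
            and sensor_range_m is not None
            and abs(detected_range_m - sensor_range_m) <= self.range_tolerance_m
        )
        self.history.append(bool(consistent))
        return len(self.history) == self.min_frames and all(self.history)


if __name__ == "__main__":
    phantom = PlausibilityMonitor()
    # Detector reports a wall at 12 m, but the range sensor sees nothing there.
    print([phantom.update(True, 12.0, None) for _ in range(5)])
    real = PlausibilityMonitor()
    # Detector and range sensor agree on an obstacle at about 10 m.
    print([real.update(True, 10.0, 10.3) for _ in range(3)])
```

The phantom wall is never confirmed because the range sensor sees nothing there. The trade-off is latency: a real obstacle is only confirmed after `min_frames` frames, so the window has to be sized against the system’s reaction-time budget.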

In addition to safeguarding AI functions with external non-AI mechanisms, the DTR seeks to use AI to enhance safety as well. Riccardo gave the example of a factory-like environment, where the trajectory of a moving person can be extrapolated to predict whether the person will enter a robot’s safety zone. In this way, AI can enable predictive safety (anticipating machine failure using past performance data) or proactive safety (identifying safety concerns before they happen).
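Riccardo’s example can be reduced to a minimal worked sketch: extrapolate a tracked person’s position at constant velocity and report the first time the predicted position falls inside a circular safety zone around the robot. The constant-velocity model, the circular zone, the 2 s horizon and the 0.1 s step are simplifying assumptions for illustration; an AI-based predictor of the kind discussed in the talk would replace the straight-line extrapolation with a learned trajectory model.

```python
import math
from typing import Optional, Tuple

Point = Tuple[float, float]


def time_to_safety_zone(
    person_pos: Point,
    person_vel: Point,
    robot_pos: Point,
    zone_radius_m: float,
    horizon_s: float = 2.0,
    dt_s: float = 0.1,
) -> Optional[float]:
    """Extrapolate the person's track at constant velocity.

    Returns the first sampled time (in seconds) at which the predicted
    position lies inside the robot's circular safety zone, or None if it
    stays outside for the whole horizon.
    """
    steps = int(round(horizon_s / dt_s))
    for k in range(steps + 1):
        t = k * dt_s
        x = person_pos[0] + person_vel[0] * t
        y = person_pos[1] + person_vel[1] * t
        if math.hypot(x - robot_pos[0], y - robot_pos[1]) <= zone_radius_m:
            return t
    return None


if __name__ == "__main__":
    # Person 5 m away, walking straight at the robot at 2 m/s; 2.5 m zone.
    print(time_to_safety_zone((5.0, 0.0), (-2.0, 0.0), (0.0, 0.0), 2.5))
    # Person walking parallel to the robot: never enters the zone.
    print(time_to_safety_zone((5.0, 3.0), (0.0, 1.0), (0.0, 0.0), 2.5))
```

For the first case the function returns about 1.3 s, which gives the robot time to slow down or stop before the person actually arrives, rather than reacting only once the zone has been breached.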

The rest of the talk covered the safety features of NVIDIA Drive and the new IGX platform, which we have covered previously.

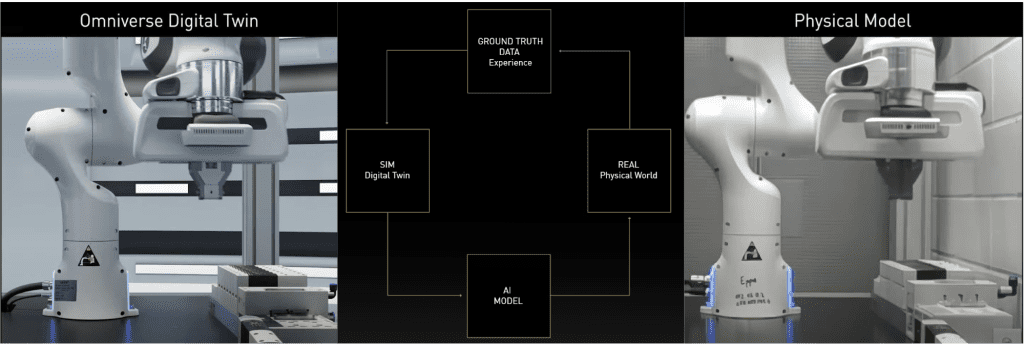

4. How to Build a Digital Twin [A41384]

A digital twin is a large-scale, physically accurate simulation of a real-world object or environment. As you can probably guess, NVIDIA’s preferred platform for creating digital twins is Omniverse. The basic steps for building a digital twin are:

- Planning the scope and scale of your simulation.

- Establishing a workflow to create and ingest USD data (please refer to our coverage of Day 1 Highlights for an explanation of USD).

- Cleaning, optimizing and extending the USD data pipeline (including with custom 3D tools).

- Simulating and rendering visuals in full fidelity using ray tracing.

- Adding physics and behavior (you may also use the SimReady library here).

- Adding sensors, collecting synthetic data and training AI models so that the robot can behave autonomously.

- Connecting the simulation to the real world by streaming data from real sensors into the virtual world.
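A common way to make the synthetic data from such a pipeline useful is domain randomization: every rendered frame uses randomly sampled lighting, camera placement, object poses and textures, so that a model trained on the data generalizes better to the real world. The sketch below only generates the randomized scene parameters; the parameter names and ranges are hypothetical, and applying them to a scene would be done through the simulator’s own API (for example Omniverse Replicator), which is not shown here.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SceneParams:
    """One randomized scene configuration (hypothetical parameters)."""

    light_intensity: float  # arbitrary units
    camera_height_m: float
    object_yaw_deg: float
    texture_id: int


def sample_scenes(n: int, seed: int = 0) -> List[SceneParams]:
    """Draw n randomized scene configurations for domain randomization.

    A dedicated, seeded random generator keeps the dataset reproducible.
    """
    rng = random.Random(seed)
    return [
        SceneParams(
            light_intensity=rng.uniform(200.0, 2000.0),
            camera_height_m=rng.uniform(0.5, 2.5),
            object_yaw_deg=rng.uniform(0.0, 360.0),
            texture_id=rng.randrange(50),
        )
        for _ in range(n)
    ]


if __name__ == "__main__":
    for params in sample_scenes(3, seed=42):
        # In a real pipeline, each configuration would be applied to the
        # simulated scene, rendered, and saved together with its labels.
        print(params)
```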

The talk explained how NVIDIA tools like Isaac Sim and Omniverse work well with each other and with external standards like URDF, making it easy to import robot definitions and train AI models with synthetic data. If your industry has long iteration times between product updates, or if you want to enhance the autonomy and reliability of your systems, digital twinning with Omniverse could be really helpful for shortening iteration cycles and identifying failure cases.
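To give a sense of what a URDF file carries into a simulator, here is a small sketch that parses a hypothetical two-link arm with Python’s standard `xml.etree.ElementTree` and lists its links and joints, which together form the robot’s kinematic tree. It illustrates the format only; Isaac Sim ships its own URDF importer, which also handles meshes, inertia and joint drives that this snippet ignores.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

# Hypothetical two-link arm, written in standard URDF elements.
EXAMPLE_URDF = """<?xml version="1.0"?>
<robot name="two_link_arm">
  <link name="base_link"/>
  <link name="upper_arm"/>
  <link name="forearm"/>
  <joint name="shoulder" type="revolute">
    <parent link="base_link"/>
    <child link="upper_arm"/>
    <limit lower="-1.57" upper="1.57" effort="10" velocity="1.0"/>
  </joint>
  <joint name="elbow" type="revolute">
    <parent link="upper_arm"/>
    <child link="forearm"/>
    <limit lower="0.0" upper="2.6" effort="5" velocity="1.0"/>
  </joint>
</robot>
"""


def summarize_urdf(xml_text: str) -> Dict[str, object]:
    """List the links and joints (the kinematic tree) described by a URDF."""
    root = ET.fromstring(xml_text)
    links: List[str] = [link.get("name") for link in root.findall("link")]
    joints = []
    for joint in root.findall("joint"):
        limit = joint.find("limit")
        joints.append({
            "name": joint.get("name"),
            "type": joint.get("type"),
            "parent": joint.find("parent").get("link"),
            "child": joint.find("child").get("link"),
            # Revolute joint limits in URDF are given in radians.
            "limits_rad": (float(limit.get("lower")), float(limit.get("upper")))
            if limit is not None else None,
        })
    return {"robot": root.get("name"), "links": links, "joints": joints}


if __name__ == "__main__":
    summary = summarize_urdf(EXAMPLE_URDF)
    print(summary["robot"], summary["links"])
    for joint in summary["joints"]:
        print(joint)
```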

5. Honorable mentions

We have covered the major themes at this season’s GTC and summarized the talks most applicable across a broad range of industries. In the process, we skipped some talks whose content is similar to the ones covered here, but specialized for certain industries. Here we mention 12 such talks across 3 themes.

Omniverse

- Building extensions and apps for virtual worlds [A41167]

- How to build simulation ready USD 3D assets [A41339]

- Foundations of the Metaverse: The HTML for 3D virtual worlds [A41236]

- Transforming industries with digital twins [A4D9017]

- Next evolution of USD for building virtual worlds [A41330]

Industrial Robotics

- Deploying AI at the edge with fleet command [A4D9026]

- Optimizing warehouse design and planning with simulation [A4D9008]

- End to end smart factory AI application [A41137]

Computer Vision

- Implementing parallel pipelines with DeepStream [A41173]

- Essential Technologies for startups [A41098]

- Vision when data is expensive and constantly changing [A41174]

- Tracking objects across multiple cameras [A41369]

6. Summary

It has been another exciting GTC, with lots of new announcements and step-function improvements across hardware, software and industries. NVIDIA is fast becoming a major force pushing several industries toward autonomy, safety and reliability. Its efforts appear to follow a four-pronged approach:

- Advancing AI software and algorithms, like the Neural Reconstruction Engine.

- Advancing hardware design, like tensor cores and transformer engines.

- Adding safety mechanisms and FUSA for industrial applications.

- Omniverse for robotics training, digital twinning and open digital collaboration.

We have covered these major themes across our two blog posts on this GTC. We have also listed some more specialized talks that may interest you. If they do, we once again encourage you to register and watch the replays. Although you can no longer ask questions, the recordings will remain available after GTC has ended.