In this post, we will learn about a Deep Learning based object tracking algorithm called GOTURN. The original implementation of GOTURN is in Caffe, but it has been ported to the OpenCV Object Tracking API and we will use this API to demonstrate GOTURN in C++ and Python.

What is Object Tracking ?

The goal of object tracking is to keep track of an object in a video sequence. A tracking algorithm is initialized with a frame of a video sequence and a bounding box to indicate the location of the object we are interested in tracking. The tracking algorithm outputs a bounding box for all subsequent frames.

What is GOTURN?

GOTURN, short for Generic Object Tracking Using Regression Networks, is a Deep Learning based tracking algorithm. The video below explains GOTURN and shows a few results.

Most tracking algorithms are trained in an online manner. In other words, the tracking algorithm learns the appearance of the object it is tracking at runtime.

Therefore, many real-time trackers rely on online learning algorithms that are typically much faster than a Deep Learning based solution.

GOTURN changed the way we apply Deep Learning to the problem of tracking by learning the motion of an object in an offline manner. The GOTURN model is trained on thousands of video sequences and does not need to perform any learning at runtime.

How does GOTURN work?

GOTURN was introduced by David Held, Sebastian Thrun, Silvio Savarese in their paper titled “Learning to Track at 100 FPS with Deep Regression Networks”.

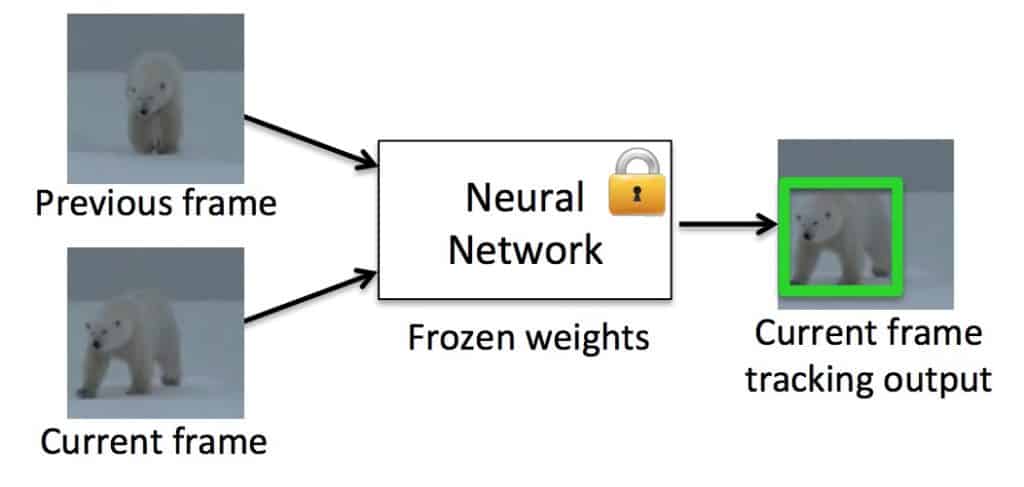

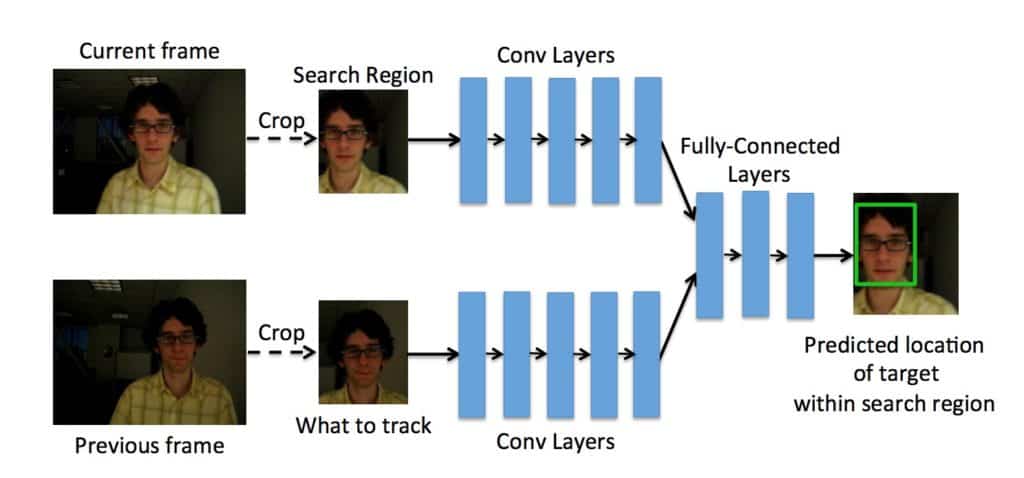

As shown in Figure 1, GOTURN is trained using a pair of cropped frames from thousands of videos.

In the first frame (also referred to as the previous frame), the location of the object is known, and the frame is cropped to two times the size of the bounding box around the object. The object in the first cropped frame is always centered.

The location of the object in the second frame (also referred to as the current frame) needs to be predicted. The bounding box used to crop the first frame is also used to crop the second frame. Because the object might have moved, the object is not centered in the second frame.

A Convolutional Neural Network (CNN) is trained to predict the location of the bounding box in the second frame.

If you are an absolute beginner, think of the CNN as a black box with many knobs that can be set to different values. When the settings on the knobs are right, the CNN produces the right bounding box. Initially, the settings of the knobs are random. At the time of training, we show the neural network pairs of frames for which we known the location of the object (i.e. bounding boxes). If the CNN makes a mistake, the knobs are changed in a principled way using an algorithm called back propagation so that it gradaully stops making as many mistakes. When changing the knob settings stops improving the results anymore, we say the model is trained.

GOTURN Architecture

In the previous section, we just showed the CNN as a black box. Now, let’s see what is inside the box.

Figure 2 shows the architecture of GOTURN. As mentioned before, it takes two cropped frame as input. Notice, the previous frame, shown at the bottom, is centered and our goal is the find the bounding box for the currrent frame shown on the top.

Both frames pass through a bank of convolutional layers. The layers are simply the first five convolutional layers of the CaffeNet architecture. The outputs of these convolutional layers (i.e. the pool5 features) are concatenated into a single vector of length 4096. This vector is input to 3 fully connected layers. The last fully connected layer is finally connected to the output layer containing 4 nodes representing the top and bottom points of the bounding box.

Whenever you see a bank of convolutional layers and are confused what it means, think of them as filters that change the original image such that important information for solving the problem at hand is retained and unimportant information in the image is thrown away.

The multi-dimensional image (tensor) obtained at the end of the convolutional filters is converted to a long vector of numbers by simply unrolling the tensor. This vector serves as input to a few fully connected layers and finally the output layer. The fully connected layers can be thought as the learning algorithm that is using the useful information extracted from the images by the convolutional layer to solve the classification or regression problem at hand.

How to use GOTURN in OpenCV

The authors have released a caffe model for GOTURN. You can try it using Caffe, but in this tutorial, we will use OpenCV’s tracking API. Here are the steps you need to follow.

Download GOTURN model files : You can download the GOTURN caffemodel and prototxt files located at this link. The model file is split into 4 files which will need to be merged before unzipping (see step 2).

Alternatively, you can use this dropbox link to download the model. Please keep in mind it may take a long time to download the file because it is about 370 MB! If you use this method, skip step 2.Merge zip files: The GOTURN model file shared via GitHub is split into a 4 different files because the model file is large. These files need to be combined before unzipping. OSX and Linux users can do so using the following commands.

# MAC and Linux Users

cat goturn.caffemodel.zip* > goturn.caffemodel.zip

On Windows, the files can be merged using 7-zip. Move model files to current directory: GOTURN implementation in OpenCV expects the model file to be present in the directory from where the executable is executed. So, move it to the current directory.

Assuming you have downloaded the code, let’s see how the tracker is used.

Create Tracker: First, we need to create an instance of the GOTURN tracker class. This can be done as

C++

// Create tracker

Ptr<Tracker> tracker = TrackerGOTURN::create();

Python

# Create tracker

tracker = cv2.TrackerGOTURN_create()

Read Video Frame: Next, we read a video frame

C++

// Read video

VideoCapture video("chaplin.mp4");

// Exit if video is not opened

if(!video.isOpened())

{

cout << "Could not read video file" << endl;

return EXIT_FAILURE;

}

// Read first frame

Mat frame;

if (!video.read(frame))

{

cout << "Cannot read video file" << endl;

return EXIT_FAILURE;

}

Python

# Read video

video = cv2.VideoCapture("chaplin.mp4")

# Exit if video not opened

if not video.isOpened():

print("Could not open video")

sys.exit()

# Read first frame

ok,frame = video.read()

if not ok:

print("Cannot read video file")

sys.exit()

Define bounding box: We need to select the object we want to track in the video. This is done by defining a bounding box or selecting an ROI. In our example, we have hardcoded the bounding box, but you can use cv2.selectROI to invoke a GUI for finding the region of interest.

C++

// Define initial boundibg box

Rect2d bbox(287, 23, 86, 320);

// Uncomment the line below to select a different bounding box

//bbox = selectROI(frame, false); [/cpp]

Python

# Define a bounding box

bbox = (276, 23, 86, 320)

# Uncomment the line below to select a different bounding box

#bbox = cv2.selectROI(frame, False) [/python]

Initialize tracker: The tracker takes as input the first frame and bounding box around the object we want to track.

C++

// Initialize tracker with first frame and bounding box

tracker->init(frame, bbox);

Python

# Initialize tracker with first frame and bounding box

ok = tracker.init(frame,bbox)

Predict the bounding box in a new frame: Finally, we loop over all frames in the video and find the bounding box for new frames using tracker.update. The rest of the code is simply for timing and displaying.

C++

while(video.read(frame))

{

// Start timer

double timer = (double)getTickCount();

// Update the tracking result

bool ok = tracker->update(frame, bbox);

// Calculate Frames per second (FPS)

float fps = getTickFrequency() / ((double)getTickCount() - timer);

if (ok)

{

// Tracking success : Draw the tracked object

rectangle(frame, bbox, Scalar( 255, 0, 0 ), 2, 1 );

}

else

{

// Tracking failure detected.

putText(frame, "Tracking failure detected", Point(100,80), FONT_HERSHEY_SIMPLEX, 0.75, Scalar(0,0,255),2);

}

// Display tracker type on frame

putText(frame, "GOTURN Tracker", Point(100,20), FONT_HERSHEY_SIMPLEX, 0.75, Scalar(50,170,50),2);

// Display FPS on frame

putText(frame, "FPS : " + SSTR(int(fps)), Point(100,50), FONT_HERSHEY_SIMPLEX, 0.75, Scalar(50,170,50), 2);

// Display frame.

imshow("Tracking", frame);

// Exit if ESC pressed.

if(waitKey(1) == 27) break;

}

Note: When the tracker fails, tracker.update returns 0 (false). This information can be used if we are using the tracker along with a detector. When tracker fails, the detector can be used to detect the object and reinitialize the tracker.

Python

while True:

# Read a new frame

ok, frame = video.read()

if not ok:

break

# Start timer

timer = cv2.getTickCount()

# Update tracker

ok, bbox = tracker.update(frame)

# Calculate Frames per second (FPS)

fps = cv2.getTickFrequency() / (cv2.getTickCount() - timer);

# Draw bounding box

if ok:

# Tracking success

p1 = (int(bbox[0]), int(bbox[1]))

p2 = (int(bbox[0] + bbox[2]), int(bbox[1] + bbox[3]))

cv2.rectangle(frame, p1, p2, (255,0,0), 2, 1)

else :

# Tracking failure

cv2.putText(frame, "Tracking failure detected", (100,80), cv2.FONT_HERSHEY_SIMPLEX, 0.75,(0,0,255),2)

# Display tracker type on frame

cv2.putText(frame, "GOTURN Tracker", (100,20), cv2.FONT_HERSHEY_SIMPLEX, 0.75, (50,170,50),2);

# Display FPS on frame

cv2.putText(frame, "FPS : " + str(int(fps)), (100,50), cv2.FONT_HERSHEY_SIMPLEX, 0.75, (50,170,50), 2);

# Display result

cv2.imshow("Tracking", frame)

# Exit if ESC pressed

k = cv2.waitKey(1) & 0xff

if k == 27:

break

Strengths and Limitations of GOTURN

Compared to other Deep Learning based trackers, GOTURN is fast. It runs at 100FPS on a GPU in Caffe and at about 20FPS in OpenCV CPU. Even though the tracker is generic, one can, in theory, achieve superior results on specific objects (say pedestrians) by biasing the traning set with the specific kind of object.

I have identified some of the weaknesses in GOTURN. Please keep in mind, these observations are based on limited tests and one should take them with a grain of salt. Also, note that the OpenCV version of GOTURN uses a different model than the Caffe version. The following observations are for the OpenCV version which was created without any guidance from the authors.

- Tracking objects that are not in the training set in the presence of objects that are in the training set: I was tracking the palm of my hand and as I moved it over my face, the tracker latched on to the face and never recovered. I tried covering my face with my palm just to see if I could get the tracker off my face, but it did not.

Then, I tried tracking my face and occluded it with my hands, but the tracker was able to track the face through the occlusion.

My guess is that there were many more faces in the training set than palms and so it has a problem tracking a hand when a face is in the neighborhood.This may be a more general problem when there are multiple objects in the scene interacting. The tracker may latch onto objects in the scene which are in the training set when they come close to the tracked objects that are not in the training set.

- Tracking part of an object: It also appears that the tracker would have a hard time tracking a part of an object compared to the entire object. For example, when I tried to use it to track the tip of my finger, it ended up tracking the hand. This is probably because it is not trained on parts of objects, but entire objects.

- Lack of Motion information: Since motion information is not incorporated in the two frame model, if we are tracking an object ( say a face ) moving in one direction, and it gets partially occluded by a similar object ( say another face ) moving in the other direction, there is a chance the tracker will latch onto the wrong face. This problem can be fixed by using the first frame as the previous frame.

Addendum

Recently, more advanced state of the art trackers have come into light. One of them is DeepSORT, which uses an advanced association matrix than it’s predecessor, SORT. It uses YOLO network as the object detection model, and can save the person identities for upto 30 frames (default, can be changed). FairMOT uses joint detection and re-ID approach to give real time results. It can use YOLOv5 or DLA-34 as the backbone, and is superior in the re-identification task.

Acknowledgment

Many thanks to Prof. David Held for permitting us to use images from the paper in this post and for answering questions.