Gemma 3 is the latest addition to Google’s family of open models, built from the same research and technology used to create the Gemini models. It is designed to be lightweight yet powerful, enabling deployment across various devices, from smartphones to workstations. Building upon the strengths and insights gained from its predecessor (Gemma 2), this new series empowers developers, researchers, and enterprises with an efficient and versatile suite of models capable of handling complex, multimodal tasks.

In this blog post, we explore the Gemma 3 models in detail, examining their architecture and their pre- and post-training recipes, and compare them with the earlier Gemma 2 series to gauge how much has changed.

- Gemma 3

- Gemma 3 Models Hardware Requirements

- Architectural Components of Gemma 3

- Key Features of Gemma 3

- Gemma 3 Training Methodology

- Ablation Study for Gemma 3

- Memorization or Regurgitation Rate

- Conclusion

- References

Gemma 3

Google’s Gemma 3 is its third-generation open model series, part of its broader initiative to democratize responsible AI. It offers powerful, efficient, and safe models with multimodal, multilingual, and function-calling capabilities. These models are optimized for both cloud-scale deployments and on-device performance, making them ideal for a wide range of real-world applications.

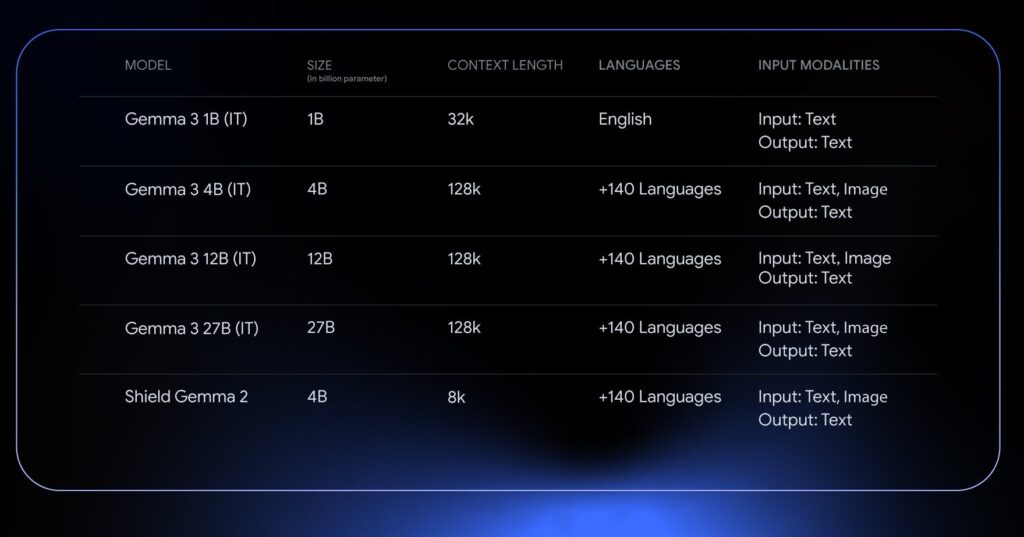

Available in four parameter sizes (1B, 4B, 12B, and 27B), Gemma 3 is built on the research and technology behind Google's Gemini 2.0 models. Unlike its predecessor, Gemma 3 adds vision capabilities, a much longer context window, and structured output.

Gemma 3 Models Hardware Requirements

| Model | CPU Cores | GPU VRAM | Recommended Hardware |
|---|---|---|---|
| 1B | 16 | 2.3 GB (Text-to-Text) | GTX 1650 4 GB |
| 4B | 16-32 | 9.2 GB (Text-to-Text), 10.4 GB (Image-to-Text) | RTX 3060 12 GB |
| 12B | 32 | 27.6 GB (Text-to-Text), 31.2 GB (Image-to-Text) | RTX 5090 32 GB |
| 27B | 32-64 | 62.1 GB (Text-to-Text), 70.2 GB (Image-to-Text) | RTX 4090 24 GB (x3) |

Architectural Components of Gemma 3

Gemma 3 retains the decoder-only Transformer architecture found in previous iterations but introduces several improvements to scale its performance:

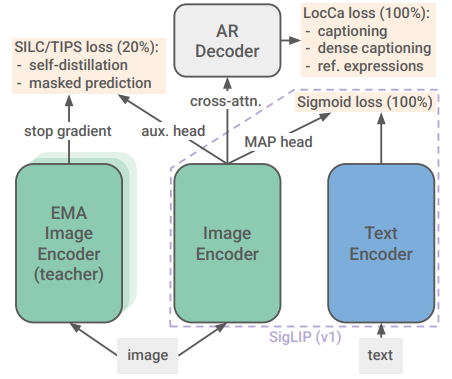

SigLIP Vision Encoder

Integrates visual inputs by converting images into token representations. Enables multimodal capabilities such as visual Q&A and captioning.
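To make "converting images into token representations" concrete, the sketch below follows the shape bookkeeping: an 896 × 896 image is cut into patches, each patch is embedded, and neighbouring embeddings are average-pooled into a fixed set of 256 visual tokens. The 896-pixel input and 256-token output match our reading of the Gemma 3 technical report; the 14-pixel patch size, the 4 × 4 pooling, and the random projection standing in for the trained SigLIP weights are all assumptions for illustration.

```python
import numpy as np

def image_to_soft_tokens(image, patch=14, pool=4, dim=64, seed=0):
    """Turn an H x W x C image into a short sequence of visual tokens.

    Illustrative only: a random linear projection replaces the real SigLIP
    encoder, and pool x pool average pooling compresses the patch grid.
    """
    h, w, c = image.shape
    assert h % patch == 0 and w % patch == 0, "image must tile evenly into patches"
    gh, gw = h // patch, w // patch
    # Cut the image into non-overlapping patch x patch squares and flatten each one.
    patches = image.reshape(gh, patch, gw, patch, c).transpose(0, 2, 1, 3, 4)
    patches = patches.reshape(gh, gw, patch * patch * c)
    # Hypothetical patch embedding: random weights instead of a trained encoder.
    rng = np.random.default_rng(seed)
    proj = rng.standard_normal((patch * patch * c, dim)) / np.sqrt(patch * patch * c)
    emb = patches @ proj                                   # (gh, gw, dim)
    # Average-pool each pool x pool block of patch embeddings into one token.
    assert gh % pool == 0 and gw % pool == 0, "patch grid must tile evenly into pools"
    emb = emb.reshape(gh // pool, pool, gw // pool, pool, dim).mean(axis=(1, 3))
    return emb.reshape(-1, dim)                            # (num_tokens, dim)

img = np.random.default_rng(1).random((896, 896, 3))
tokens = image_to_soft_tokens(img)
print(tokens.shape)  # (256, 64): 64 x 64 patches pooled down to 16 x 16 tokens
```

Pooling to a fixed token count keeps the cost of an image in the language model's context predictable, regardless of how much detail the encoder sees.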

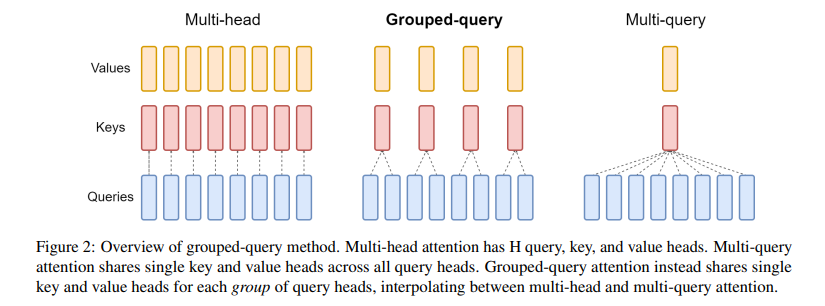

Grouped-Query Attention (GQA)

Each group of query heads shares a single key/value head, which shrinks the KV cache and reduces memory and compute overhead. This helps the model scale efficiently across parameter sizes.
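The idea behind GQA fits in a few lines of NumPy. In this sketch, 8 query heads share 2 key/value heads (hypothetical head counts, not Gemma 3's actual configuration), so only 2 KV heads per layer would need to be cached instead of 8. The causal mask and the scaled dot-product attention are standard; only the `np.repeat` step is specific to GQA.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def grouped_query_attention(q, k, v):
    """Causal GQA. q: (n_q_heads, T, d); k, v: (n_kv_heads, T, d)."""
    n_q, t, d = q.shape
    n_kv = k.shape[0]
    assert n_q % n_kv == 0, "query heads must divide evenly into KV groups"
    group = n_q // n_kv
    # Each KV head serves `group` consecutive query heads.
    k_rep = np.repeat(k, group, axis=0)
    v_rep = np.repeat(v, group, axis=0)
    scores = q @ k_rep.transpose(0, 2, 1) / np.sqrt(d)   # (n_q, T, T)
    future = np.triu(np.ones((t, t), dtype=bool), k=1)   # True where key is after query
    scores = np.where(future, -np.inf, scores)
    return softmax(scores) @ v_rep                       # (n_q, T, d)

rng = np.random.default_rng(0)
q = rng.standard_normal((8, 5, 16))   # 8 query heads
k = rng.standard_normal((2, 5, 16))   # only 2 KV heads are stored
v = rng.standard_normal((2, 5, 16))
out = grouped_query_attention(q, k, v)
print(out.shape)  # (8, 5, 16)
```

With 2 KV heads instead of 8, the keys and values held in the KV cache are four times smaller, while every query head still produces its own output.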

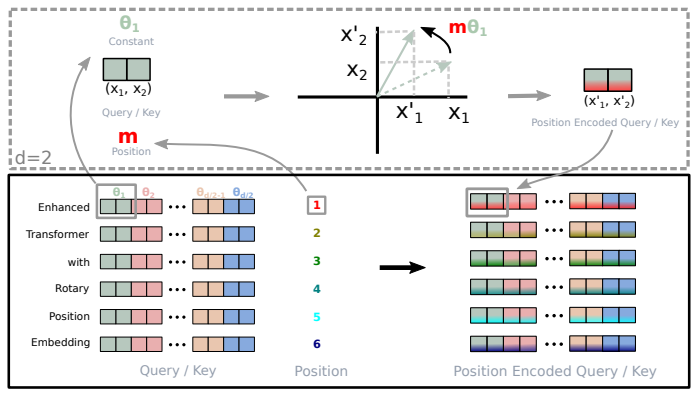

Rotary Positional Embeddings (RoPE)

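Encodes token positions by rotating query and key vectors through position-dependent angles, so that attention scores depend on the relative distance between tokens. This improves the model's ability to generalize across variable-length sequences.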
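A minimal RoPE sketch shows why the rotation encodes relative position: after rotating a query at position m and a key at position n, their dot product depends only on m - n. This version pairs adjacent dimensions (0 with 1, 2 with 3, and so on); some implementations pair the first and second halves of the vector instead. The base of 10,000 is the common default and is used here as an assumption.

```python
import numpy as np

def rope(x, pos, base=10000.0):
    """Rotate each (even, odd) dimension pair of x by a position-dependent angle."""
    d = x.shape[-1]
    assert d % 2 == 0, "RoPE needs an even number of dimensions"
    inv_freq = base ** (-np.arange(0, d, 2) / d)   # one frequency per dimension pair
    angles = pos * inv_freq
    cos, sin = np.cos(angles), np.sin(angles)
    x1, x2 = x[..., 0::2], x[..., 1::2]
    out = np.empty_like(x, dtype=float)
    out[..., 0::2] = x1 * cos - x2 * sin           # 2-D rotation of each pair
    out[..., 1::2] = x1 * sin + x2 * cos
    return out

rng = np.random.default_rng(0)
q, k = rng.standard_normal(64), rng.standard_normal(64)
# Same relative distance (3 tokens) at two different absolute positions:
print(rope(q, 5) @ rope(k, 2), rope(q, 105) @ rope(k, 102))  # the two scores match
```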

Extended Context Window Handling

The 4B, 12B, and 27B models support up to 128K tokens of context, which lets them understand and reason over long-form content such as documents or conversations within a single prompt. The text-only 1B variant supports a 32K-token context.

Optimized Local-Global Attention Ratio

Balances fine-grained (local) and global context understanding, improving efficiency without sacrificing comprehension. Compared with Gemma 2, Gemma 3 raises the ratio of local to global attention layers and shortens the span of local attention, so the longer context does not bring excessive computational or memory overhead.

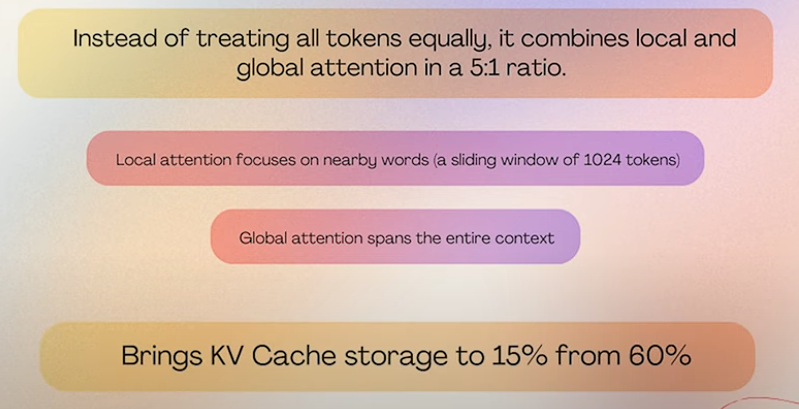

Gemma 3 uses a standard Transformer architecture with attention, but with one key difference: not every layer uses full global attention, in which a token attends to all previous tokens.

Instead, in five out of every six layers, each token only looks back at roughly the previous thousand tokens. This is called sliding window attention. The layers follow a repeating pattern: five local sliding-window layers, then one layer with full global attention, then five more local layers, then another global layer, and so on.
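The interleaving can be sketched with two small helpers: one assigns each layer a type, and one builds the boolean attention mask for that type. The Gemma 3 technical report describes five local layers per global layer and a 1,024-token window; both are parameters here so other ratios can be tried. Counting the current token as part of the window is our convention, not necessarily Gemma's.

```python
import numpy as np

def layer_types(num_layers, local_per_global=5):
    """Repeat `local_per_global` local layers followed by one global layer."""
    period = local_per_global + 1
    return ["global" if (i + 1) % period == 0 else "local" for i in range(num_layers)]

def attention_mask(seq_len, kind, window=1024):
    """Boolean (seq_len, seq_len) mask; True where query i may attend to key j."""
    i = np.arange(seq_len)[:, None]
    j = np.arange(seq_len)[None, :]
    causal = j <= i                      # never attend to future tokens
    if kind == "global":
        return causal
    # Local layers also drop keys more than window - 1 positions back.
    return causal & (i - j < window)

print(layer_types(12))
print(attention_mask(6, "local", window=3).astype(int))
```

A global layer's mask grows with the full sequence length, while a local layer's mask never has more than `window` True entries per row; that cap is what keeps long contexts affordable.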

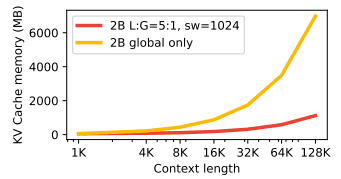

This cuts the amount of attention computation by roughly a factor of five and shrinks the KV cache by a similar factor. According to the ablations, the KV cache drops from about 60% of inference memory to under 15%. That is why Gemma 3 can handle long-context prompts and queries without running out of cache space or VRAM.
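The "factor of about five" can be checked with back-of-the-envelope arithmetic. The sketch counts how many token positions each layer must keep in the KV cache: a global layer caches the whole context, a local layer at most `window` tokens. The model shape used below (48 layers, 8 KV heads, head dimension 128, 2 bytes per value) is hypothetical and not Gemma 3's published configuration; the five-local-to-one-global pattern and 1,024-token window follow our reading of the technical report.

```python
def kv_cache_tokens(num_layers, context_len, local_per_global=None, window=1024):
    """Total cached token positions summed over all layers."""
    if local_per_global is None:          # every layer uses global attention
        return num_layers * context_len
    period = local_per_global + 1
    n_global = num_layers // period
    n_local = num_layers - n_global
    return n_global * context_len + n_local * min(window, context_len)

def kv_cache_bytes(num_layers, context_len, num_kv_heads, head_dim,
                   bytes_per_value=2, **kwargs):
    """KV cache size in bytes; the factor 2 accounts for keys and values."""
    slots = kv_cache_tokens(num_layers, context_len, **kwargs)
    return 2 * slots * num_kv_heads * head_dim * bytes_per_value

ctx = 32_768
full = kv_cache_tokens(48, ctx)
mixed = kv_cache_tokens(48, ctx, local_per_global=5)
print(f"{full / mixed:.1f}")  # 5.2
print(kv_cache_bytes(48, ctx, num_kv_heads=8, head_dim=128) / 2**30, "GiB (global only)")
```

The saving grows with context length: at 32K tokens the global layers dominate what remains, so the reduction approaches the ratio of total layers to global layers.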

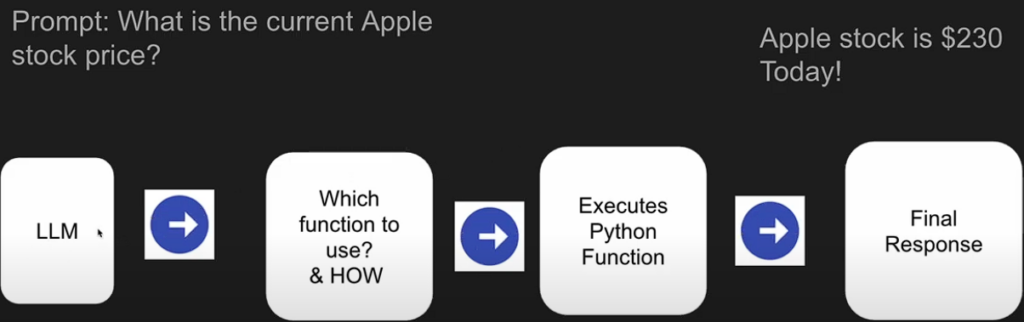

Function-Calling Head

Enables structured output generation for API calls and dynamic task execution. This is key for building autonomous agent-based systems and AI-driven workflows.

Key Features of Gemma 3

Multimodal Capabilities

The Gemma 2 series was primarily designed for text-based tasks, while specialized variants such as PaliGemma and CodeGemma extended the family to vision-language and code-related tasks, respectively. Gemma 3 introduces native vision support by integrating a 400M-parameter variant of the SigLIP vision encoder, allowing it to process both text and image inputs.

To handle high-resolution images, the model implements a “Pan & Scan” approach where images are divided into patches, encoded separately, and then aggregated. This allows the model to maintain detail while processing large images efficiently. This multimodal functionality makes it suitable for image captioning, visual Q&A, and other vision-language tasks.
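A rough sketch of the Pan & Scan idea: split a wide or tall image into equal, non-overlapping crops along its long side, then resize every crop to the encoder's 896 × 896 input. The crop-count rule (one crop per short-side length, capped at `max_crops`), keeping a resized copy of the full image, and the nearest-neighbour resize are all assumptions made for illustration, not the exact algorithm Google uses.

```python
import numpy as np

def resize_nearest(img, size):
    """Nearest-neighbour resize of an H x W x C array to size x size."""
    h, w = img.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return img[rows][:, cols]

def pan_and_scan(img, target=896, max_crops=4):
    """Return a list of target x target views: the full image plus equal crops."""
    h, w = img.shape[:2]
    # Hypothetical rule: about one crop per short-side length along the long side.
    n = min(max_crops, max(1, round(max(h, w) / min(h, w))))
    if w >= h:
        edges = np.linspace(0, w, n + 1).astype(int)
        crops = [img[:, a:b] for a, b in zip(edges[:-1], edges[1:])]
    else:
        edges = np.linspace(0, h, n + 1).astype(int)
        crops = [img[a:b] for a, b in zip(edges[:-1], edges[1:])]
    views = [img] + crops if n > 1 else [img]
    return [resize_nearest(v, target) for v in views]

wide = np.zeros((896, 2688, 3))
print([v.shape for v in pan_and_scan(wide)])  # full image + 3 crops, each 896 x 896
```

Without cropping, a 896 × 2688 image squeezed into one 896 × 896 view would lose two-thirds of its horizontal resolution, which is exactly what hurts small-text reading.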

Extended Context Length

The 4B, 12B, and 27B models support up to 128K tokens of context, comparable to the LLaMA 3.1 models, which significantly improves their capacity to understand and reason over long-form content. The 1B variant, which has no vision encoder (the three larger models share the same SigLIP encoder), supports up to 32K tokens. This capability is enabled by the local/global attention mechanism described earlier.

Multilingual Coverage

Building upon the multilingual capabilities of Gemma 2, which supported over 35 languages, Gemma 3 expands its support to over 140 languages. This broadens the model’s applicability across diverse linguistic contexts, making it more versatile for global applications.

Function Calling and Structured Output

Gemma 3 supports advanced function calling, enabling structured outputs and easy automation of downstream tasks such as API calls and data extraction.
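One common way to use this capability is to describe the available tools in the prompt and ask the model to answer with a JSON object naming the function and its arguments; application code then parses that object and runs the function. The sketch assumes that convention. The `get_weather` tool, the `name`/`arguments` keys, and the sample model replies are hypothetical and not part of any official Gemma format.

```python
import json

# Hypothetical tool exposed to the model by the application.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

TOOLS = {"get_weather": get_weather}

def extract_json_object(text: str) -> dict:
    """Pull the outermost {...} span out of a model reply and parse it."""
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    return json.loads(text[start:end + 1])

def dispatch(model_output: str):
    """Run the tool named in the model's structured output."""
    call = extract_json_object(model_output)
    name, args = call["name"], call.get("arguments", {})
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**args)

print(dispatch('Sure! {"name": "get_weather", "arguments": {"city": "Paris"}}'))
# Sunny in Paris
```

Rejecting unknown tool names before calling anything keeps a malformed or adversarial model reply from triggering arbitrary code.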

Efficiency and Deployment Flexibility

The 27B model is optimized to run on a single GPU, and quantized versions of all model sizes are available for resource-constrained environments. These can be deployed using Google Cloud (Vertex AI, Cloud Run), locally, or even on mobile devices (especially the 1B variant).

Gemma 3 Training Methodology

The Gemma 3 models were developed using an advanced training strategy that builds on established methodologies while incorporating several novel innovations.

Pretraining

The models were trained on TPUs; Google tends to train on its own hardware rather than on NVIDIA GPUs. The team used TPUv5e and TPUv5p chips, even though the latest generation is TPUv6e. The "p" variants are generally the performance-oriented line and the "e" variants the efficiency-oriented line, so v5e likely offers somewhat lower performance and throughput than v5p. The 12B model was trained on TPUv4, possibly because of TPU availability or for comparative purposes.

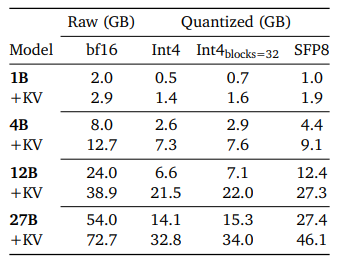

The Gemma 3 models are released in BF16 (Brain Float 16) format. BF16 is not natively supported on older GPUs such as the T4 offered in free Colab, so there you have to run the models in FP16. Releasing models in 16-bit precision is common, although DeepSeek has recently trained and released its models in 8-bit precision. Gemma 3 sticks with the established approach of training in 16 bits, but Google also releases quantized 4-bit versions.
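The practical difference between BF16 and FP16 is how the 16 bits are split. BF16 keeps float32's 8 exponent bits (same range, only 7 mantissa bits), while FP16 has 5 exponent bits and 10 mantissa bits (more precision, but a maximum of about 65,504). The sketch emulates BF16 by truncating the low 16 bits of a float32, which rounds toward zero; real hardware typically rounds to nearest even, so treat this as an approximation.

```python
import numpy as np

def to_bfloat16(x):
    """Emulate BF16 by keeping the top 16 bits of each float32 (round toward zero)."""
    x32 = np.atleast_1d(np.asarray(x, dtype=np.float32))
    bits = x32.view(np.uint32) & np.uint32(0xFFFF0000)
    return bits.view(np.float32)

values = np.array([1.001, 1e5], dtype=np.float32)
print("bf16:", to_bfloat16(values))        # 1e5 stays finite, but 1.001 collapses to 1.0
print("fp16:", values.astype(np.float16))  # 1.001 is kept more precisely, 1e5 overflows to inf
```

This is why BF16 checkpoints can misbehave when forced into FP16: large activations that fit comfortably in BF16 may overflow.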

Google has also released 8-bit versions of the Gemma 3 models, which likely retain very good quality while halving VRAM requirements. In the image above, the 27B model needs 54 GB just to load the weights in BF16, and 72.7 GB in total once the KV cache is included. Quantizing down to 8 bits brings these figures to 27.4 GB and 46.1 GB, respectively. The KV cache figures would have been much larger had the model used full global attention in every layer.
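The weight-memory figures follow from simple arithmetic: parameters × bits per parameter ÷ 8 gives bytes. The sketch computes that for 27B parameters and adds a plain symmetric per-tensor int8 ("absmax") quantizer to show how 8-bit storage works. The absmax scheme is a generic technique chosen for illustration, not necessarily the 8-bit format Google used, and the small gap to the reported 27.4 GB is not modeled here.

```python
import numpy as np

def weight_memory_gb(num_params, bits):
    """Memory for the weights alone (no KV cache, no activations), in GB (1e9 bytes)."""
    return num_params * bits / 8 / 1e9

def quantize_int8(w):
    """Symmetric per-tensor absmax quantization to int8."""
    scale = np.abs(w).max() / 127.0              # largest |w| maps to 127
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    return q.astype(np.float32) * scale

for bits in (16, 8, 4):
    print(bits, "bits:", weight_memory_gb(27e9, bits), "GB")
# 16 bits: 54.0 GB / 8 bits: 27.0 GB / 4 bits: 13.5 GB

w = np.random.default_rng(0).standard_normal(1000).astype(np.float32)
q, s = quantize_int8(w)
print("max abs error:", np.max(np.abs(dequantize(q, s) - w)))  # at most about s / 2
```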

Pretraining is the stage that consumes by far the most tokens: 14 trillion for Gemma 3 27B, 12 trillion for 12B, 4 trillion for 4B, and 2 trillion for 1B. The tokenizer is also very large, with a vocabulary of about 262K entries, roughly twice the 128K vocabulary of the LLaMA 3 series, which improves support for multilingual text.

Knowledge Distillation

A notable part of Gemma 3’s training process is Knowledge Distillation. After initial pretraining, the models were refined using knowledge transferred from larger, more capable systems—helping boost performance while keeping the models efficient.

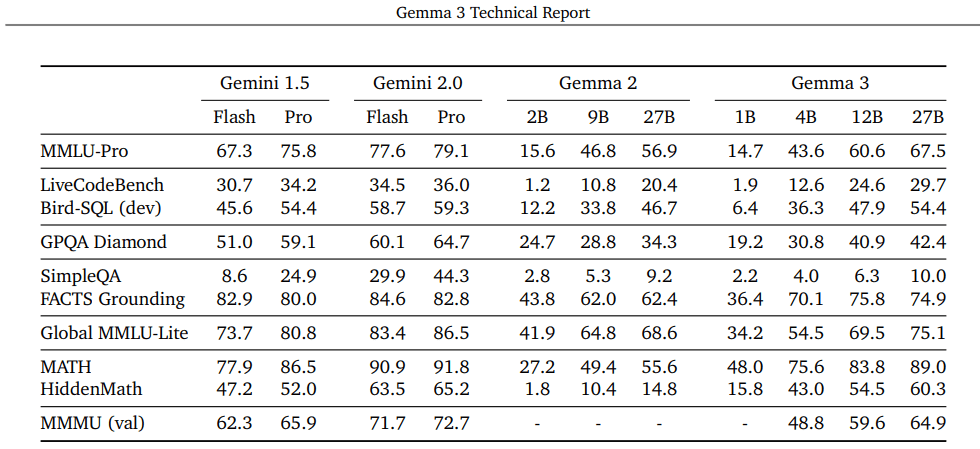

While the exact teacher model used for distillation hasn't been disclosed, it is likely one of the Gemini 2.0 models, such as Gemini 2.0 Flash or Gemini 2.0 Pro. Given that Gemma 3 models already outperform Gemini 1.5 Pro on some benchmarks and even approach Gemini 2.0 Flash on others, it's reasonable to assume that the distillation came from a stronger, second-generation Gemini model.
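At its core, knowledge distillation trains the student to match the teacher's full next-token distribution rather than only the single correct token. The sketch below implements that objective as the cross-entropy between the teacher's (optionally temperature-softened) probabilities and the student's log-probabilities. The Gemma 3 report describes a variant that samples a subset of teacher logits per token; this sketch uses the full distribution for simplicity, and the random logits stand in for real model outputs.

```python
import numpy as np

def log_softmax(logits):
    z = logits - logits.max(axis=-1, keepdims=True)        # numerical stability
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def distillation_loss(student_logits, teacher_logits, temperature=1.0):
    """Cross-entropy of the student against the teacher's softened distribution,
    averaged over token positions."""
    t = temperature
    p_teacher = np.exp(log_softmax(teacher_logits / t))
    log_p_student = log_softmax(student_logits / t)
    return float(-(p_teacher * log_p_student).sum(axis=-1).mean())

rng = np.random.default_rng(0)
teacher = rng.standard_normal((4, 10))   # 4 positions, vocabulary of 10 (toy sizes)
student = rng.standard_normal((4, 10))
print(distillation_loss(student, teacher))
print(distillation_loss(teacher, teacher))  # the minimum: the teacher's own entropy
```

The loss is smallest when the student reproduces the teacher exactly, so gradients push the student toward the teacher's relative preferences among all tokens, not just the top one.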

Instruction Tuning

Instruction tuning aligns the model with user instructions and improves performance. Reinforcement learning was also applied using several types of feedback: human feedback data, code-execution feedback, and ground-truth rewards for solving math problems, where the model is rewarded for reaching the correct answer.
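A ground-truth reward for math can be as simple as checking whether the model's final answer matches the reference. The sketch below is a hypothetical reward function of that kind: it takes the last number in the response and returns 1.0 on a match and 0.0 otherwise. The answer-extraction rule is our assumption; the actual reward functions used for Gemma 3 have not been published in this form.

```python
import re

def extract_final_number(text):
    """Return the last number in the text (commas ignored), or None."""
    matches = re.findall(r"-?\d+(?:\.\d+)?", text.replace(",", ""))
    return float(matches[-1]) if matches else None

def math_reward(response, ground_truth, tol=1e-6):
    """Binary reward: 1.0 if the final number matches the reference answer."""
    answer = extract_final_number(response)
    if answer is None:
        return 0.0
    return 1.0 if abs(answer - float(ground_truth)) <= tol else 0.0

print(math_reward("Adding the two totals gives 1,250.", "1250"))  # 1.0
print(math_reward("So x = 12", "13"))                               # 0.0
```

Because the reward is computed automatically from a known answer, it scales to many problems without human labelers, which is what makes it attractive for reinforcement learning.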

Ablation Study for Gemma 3

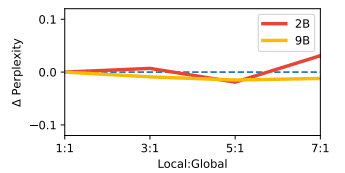

Local:Global Ratio

This ablation varies how often global attention is used: in every layer, or once every three, five, or seven layers. The attached image below shows that the choice makes little difference. Global attention can be used as rarely as once every seven layers without noticeable degradation in performance or perplexity.

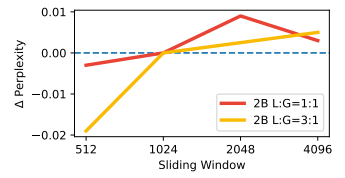

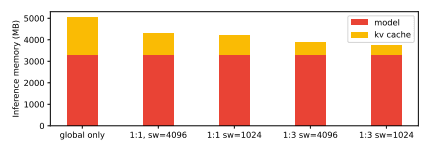

Sliding Window Size

Interestingly, perplexity remains largely stable even with a sliding window as small as 1,000 tokens. However, for the 2B parameter model, performance slightly degrades when using global attention once every three layers with a window size reduced to 512. A related graph attached below shows that the memory usage drops significantly, especially when global attention is applied only every five layers, compared to every single layer.

KV Cache Memory

This approach results in roughly a 5× reduction in KV cache usage, enabling the model to handle significantly more or longer sequences within the same GPU memory budget. During inference with a 32K token context, the choice of attention strategy significantly affects memory usage. The “global-only” setup, commonly used in dense models, leads to a 60% memory overhead. In contrast, configurations like 1:3 global-to-local attention with a sliding window of 1024 reduce this overhead to less than 15%, offering substantial savings.

Long Context

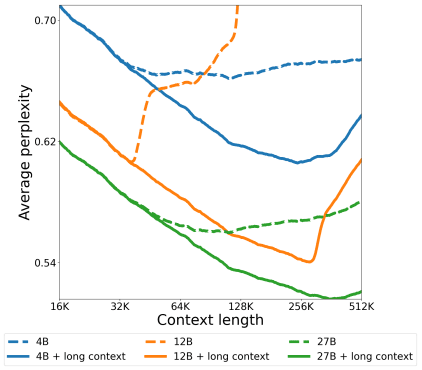

Gemma 3 models undergo a two-phase training process to support extended context lengths. Initially, models are pretrained on sequences up to 32K tokens. In the second phase, context length is further extended, which is reflected in perplexity metrics across different model sizes, 4B (blue), 12B (orange), and 27B (green). Graphs show that models trained with context extension (solid lines) consistently achieve lower perplexity than those without (dashed lines), confirming the effectiveness of this staged approach.
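A common way to extend context in the second phase is linear position interpolation for RoPE: positions are divided by a scale factor, so a long sequence is mapped back into the range of rotation angles the model saw during shorter-context training. As we read the technical report, Gemma 3 rescales RoPE in its global layers by a factor of 8; the sketch below illustrates the general technique and is not Gemma's exact implementation. The base of 10,000 and the 64-dimensional heads are assumptions.

```python
import numpy as np

def rope_angles(pos, dim, base=10000.0, scale=1.0):
    """Rotation angle for each dimension pair at position `pos`.

    With linear position interpolation, positions are divided by `scale`
    before the angles are computed.
    """
    inv_freq = base ** (-np.arange(0, dim, 2) / dim)
    return (pos / scale) * inv_freq

# With scale=8, position 131,072 gets the same angles as position 16,384 without scaling,
# which lies inside a 32K-token training range.
print(np.allclose(rope_angles(131_072, 64, scale=8.0), rope_angles(16_384, 64)))  # True
```

The trade-off is that nearby positions become harder to distinguish after scaling, which is why a short phase of training at the longer length usually follows the rescaling.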

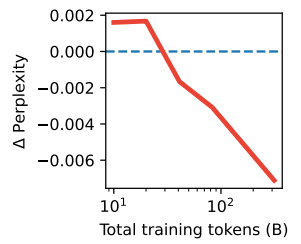

Although we know that distillation follows pretraining, the exact number of tokens used during distillation remains undisclosed. Pretraining datasets range from 14T tokens down to 2T, depending on model size, but distillation typically involves just 1–3% of the total training tokens. This compact distillation phase transfers knowledge from stronger teacher models while reinforcing the models’ ability to manage extended sequences, even with minimal additional data.

Small versus Large Teacher

Ablation studies suggest that the size of the teacher model used during distillation significantly affects the performance of the student model, depending on the amount of data used. When fewer distillation tokens are available, using a smaller teacher tends to produce better results—likely due to a regularizing effect that prevents overfitting. On the other hand, with larger token budgets, performance improves when distilling from a stronger, larger teacher model.

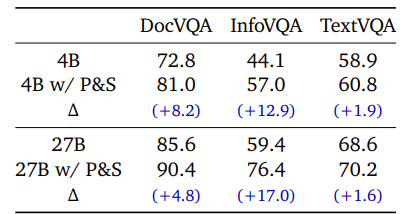

Vision Encoder

Although the encoder is frozen during training (i.e., not updated), input resolution significantly impacts performance. Higher-resolution images, especially those at 896px, consistently outperform lower-resolution ones across benchmarks like DocVQA, InfoVQA, and TextVQA, due to richer visual detail being preserved even after downsampling.

The Pan & Scan technique helps the model better interpret images with varied aspect ratios and resolutions. P&S enables images to retain their native structure more effectively, which boosts tasks requiring fine-grained text reading, making it a valuable enhancement for visual-language models.

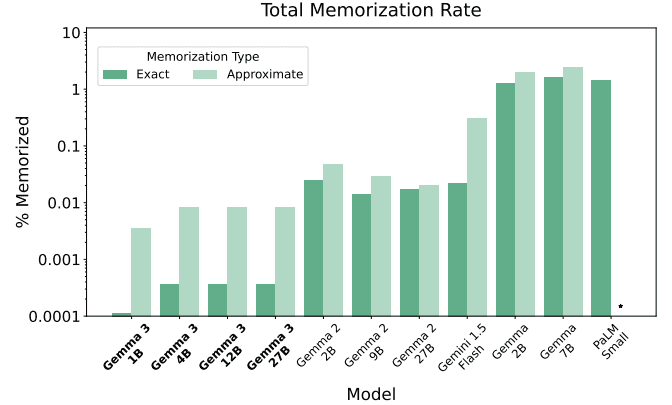

Memorization or Regurgitation Rate

Memorization refers to the unintended tendency of LLMs to reproduce substantial portions of their training data verbatim. With Gemma 2, the exact regurgitation rate was about 0.01%, whereas for Gemma 3 it is below 0.001%. This is possibly due to additional post-training steps such as reinforcement learning, which may have shifted the model's output distribution away from patterns found in the original pretraining data. The more training is applied beyond the initial pretraining phase, the less likely the model is to regurgitate memorized content, improving generalization.
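Exact memorization is typically measured by feeding the model a prefix taken from a training document and checking whether its continuation matches the true next tokens word for word. The sketch below computes that rate for any `generate(prefix, n)` callable, which is a hypothetical stand-in for a real model. The default 50-token prefix and 50-token suffix reflect our understanding of the setup in the Gemma reports and should be treated as an assumption.

```python
from typing import Callable, Sequence

def exact_memorization_rate(documents: Sequence[Sequence[int]],
                            generate: Callable[[Sequence[int], int], Sequence[int]],
                            prefix_len: int = 50, suffix_len: int = 50) -> float:
    """Fraction of documents whose true continuation the model reproduces exactly."""
    hits = total = 0
    for doc in documents:
        if len(doc) < prefix_len + suffix_len:
            continue  # too short to test
        prefix = doc[:prefix_len]
        target = list(doc[prefix_len:prefix_len + suffix_len])
        total += 1
        hits += list(generate(prefix, suffix_len)) == target  # True counts as 1
    return hits / total if total else 0.0

# Toy "model" that always continues by counting upward from the last token.
def counting_model(prefix, n):
    return list(range(prefix[-1] + 1, prefix[-1] + 1 + n))

docs = [list(range(10)), [5] * 10]
print(exact_memorization_rate(docs, counting_model, prefix_len=5, suffix_len=5))  # 0.5
```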

Conclusion

Gemma 3 marks a major leap forward in Vision-Language Models, offering powerful multimodal capabilities and extended context handling. With its efficient design and flexible architecture, Gemma 3 is not only scalable for cloud deployments but also optimized for local execution, with even the 27B model capable of running on a single GPU. This scalability ensures that Gemma 3 can be utilized across a variety of applications, from large-scale enterprise solutions to more localized edge deployments. As AI continues to evolve, Gemma 3 sets a strong foundation for building intelligent, scalable, and highly adaptable systems.
