In today’s post, we are covering the topic of Gaze Estimation and Tracking.

I was invited to give a talk on the subject at a workshop on Eye Tracking for AR and VR organized by Facebook Research at the International Conference on Computer Vision (2019).

The slides are from that talk.

This post is for absolute beginners. We explain the problem of gaze estimation, current methods for collecting ground truth data, public datasets, and current methods for solving the gaze estimation/tracking problem. All the information is contained in the video. The text below simply links to some of the material presented above.

1. What is Gaze Estimation?

Simply put, in gaze estimation / tracking, we want to find where a person is looking.

There are two common setups.

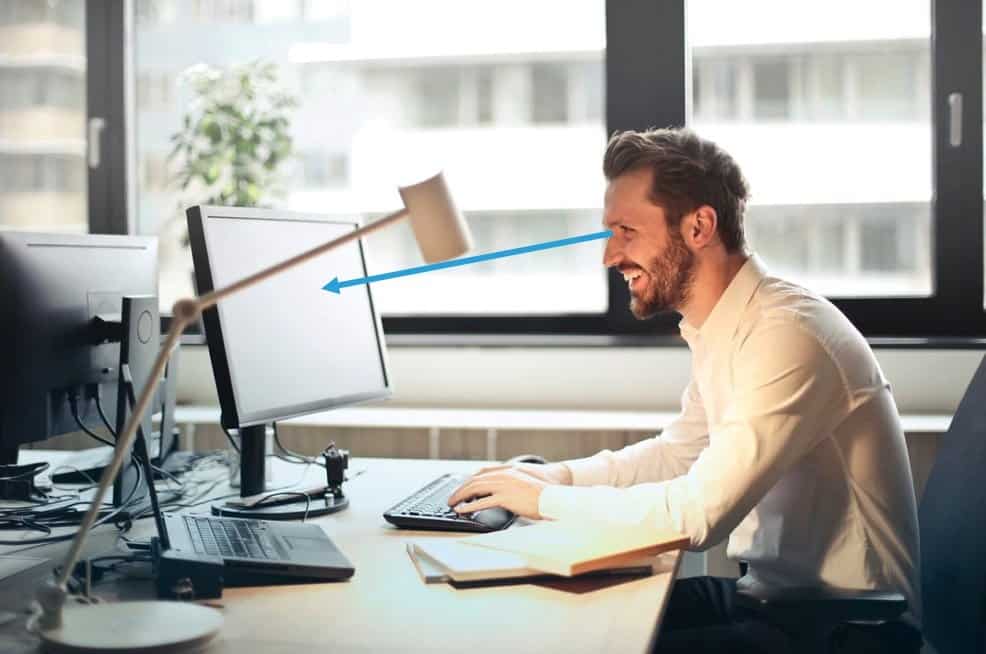

1.1 Screen-Based (or Remote) Gaze Tracking

In this setup, the person is looking at a screen (monitor), and we want to find the x and y coordinates of the location on the screen where they are looking.
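To make the output of screen-based tracking concrete, here is a minimal sketch of how an estimate could be turned into screen coordinates. It assumes, purely for illustration, that some gaze estimator returns a normalized point in the range 0 to 1 along each axis; the function name `gaze_to_pixels` and its parameters are hypothetical and do not come from any particular library.

```python
def gaze_to_pixels(norm_x, norm_y, screen_width, screen_height):
    """Map a normalized gaze point to integer pixel coordinates.

    Assumption: (norm_x, norm_y) lies roughly in [0, 1] x [0, 1], with
    (0, 0) at the top-left corner of the screen.
    """
    # Clamp so that slightly off-screen estimates still map to a valid pixel.
    nx = min(max(norm_x, 0.0), 1.0)
    ny = min(max(norm_y, 0.0), 1.0)

    # Scale to the last valid pixel index along each axis.
    px = int(round(nx * (screen_width - 1)))
    py = int(round(ny * (screen_height - 1)))
    return px, py


if __name__ == "__main__":
    # Hypothetical estimate on a 1920 x 1080 monitor.
    print(gaze_to_pixels(0.25, 0.75, 1920, 1080))
```

Real systems usually need a per-user calibration step before their estimates are accurate enough to be used this way.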

1.2 Gaze Tracking in Wearable Devices

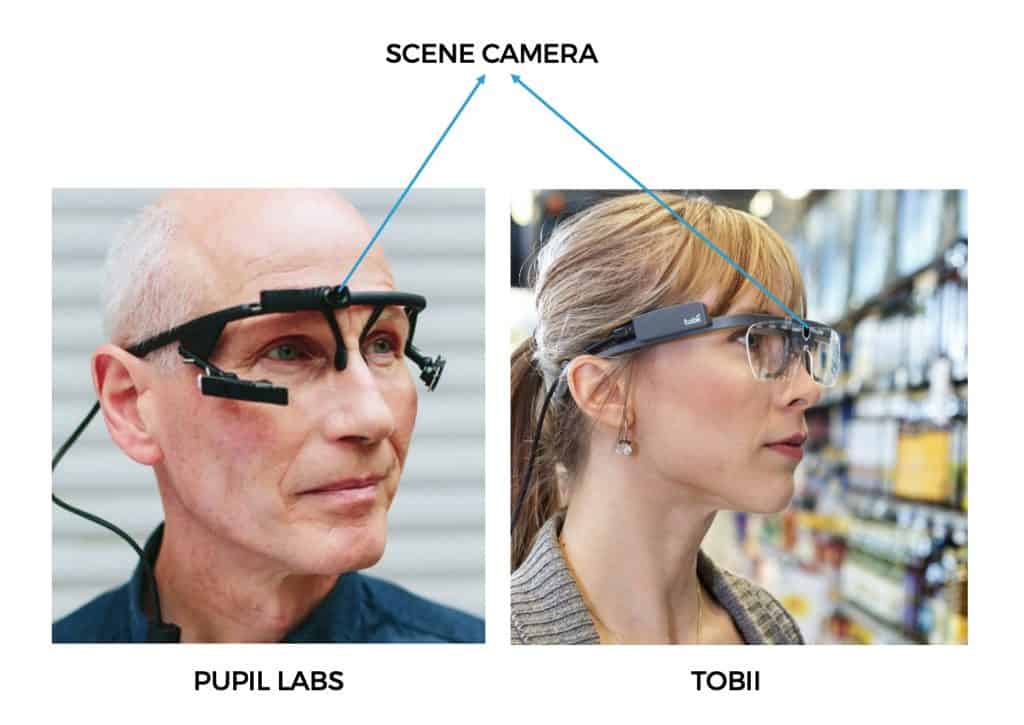

In this setup, the person whose gaze we are interested in tracking wears a device (glasses). This device contains a camera, called the scene camera, that records what the person is looking at.

The goal is to find the x and y coordinates of the person’s gaze in the scene camera.
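As a rough illustration of what "coordinates in the scene camera" means, the sketch below projects a 3D gaze point into the scene image using the standard pinhole camera model. It assumes the gaze point is already expressed in the scene camera's coordinate frame and that the camera intrinsics (`fx`, `fy`, `cx`, `cy`) are known from calibration; lens distortion is ignored. The function name and the example numbers are hypothetical.

```python
def project_gaze_point(point_xyz, fx, fy, cx, cy):
    """Project a 3D point (scene camera frame) to pixel coordinates.

    Assumptions: pinhole model, no lens distortion, z axis pointing
    forward from the camera, focal lengths and principal point in pixels.
    """
    x, y, z = point_xyz
    if z <= 0:
        raise ValueError("The gaze point must lie in front of the camera (z > 0).")

    # Perspective division followed by the intrinsic scaling and offset.
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v


if __name__ == "__main__":
    # Hypothetical intrinsics for a 640 x 480 scene camera.
    print(project_gaze_point((0.2, -0.1, 2.0), fx=500.0, fy=500.0, cx=320.0, cy=240.0))
```

In practice, a wearable tracker also has to account for the offset between the eye and the scene camera, which this sketch leaves out.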

2. Gaze Tracking Resources

In this section, we will link to resources useful for gaze tracking research and development.

2.1 Research Papers and Algorithms

The literature on gaze tracking is vast. If you are interested in getting started, we refer you to the recent survey paper, which does a very good job of reviewing existing techniques.

The above survey paper was published in 2017. Since then, additional papers have appeared, of which the following are noteworthy:

Deep Multitask Gaze Estimation with a Constrained Landmark-Gaze Model

Recurrent CNN for 3D Gaze Estimation using Appearance and Shape Cues

Interestingly, there was yet another workshop on gaze tracking at ICCV 2019, organized by Microsoft. The papers from that workshop are worth reviewing.

2.2 Gaze Tracking Products

Tobii sells many commercial wearable and screen trackers. You can find the complete product listing here.

https://www.tobiipro.com/product-listing/

There is also an open source option sold by Pupil Labs.

2.3 Datasets

The biggest challenge in eye gaze estimation research is the limited availability of good public datasets.

Fortunately, in recent years, both real and synthetic datasets have been released, and they are crucial for pushing research forward.

2.3.1 Synthetic Datasets for Gaze Tracking

SYNTHESEYES (2015) by University of Cambridge

UNITYEYES (2016) by University of Cambridge

NVGaze (2019) by NVIDIA

2.3.2 Real Datasets for Gaze Tracking

UTMULTIVIEW (2013) by The University of Tokyo

EYEDIAP (2014) by Idiap Research Institute

MPIIGAZE (2015) by MPI Informatik

GAZECAPTURE (2016) by University of Georgia, Massachusetts Institute of Technology, and MPI Informatik

RT-GENE (2018) by Imperial College London

OpenEDS: Open Eye Dataset by Facebook
