Object detection has advanced rapidly, with models like YOLOv12, YOLOv11, and Darknet-Based YOLOv7 leading the way in real-time detection. While these models perform exceptionally well on general object detection datasets, fine-tuning them on HRSC2016-MS (High-Resolution Ship Collections) presents unique challenges.

This article provides a detailed end-to-end pipeline for fine-tuning YOLOv12, YOLOv11, and Darknet-Based YOLOv7 on HRSC2016-MS. It covers dataset preprocessing (basic shuffling and splitting the dataset into train, test, and val sets), training, evaluation, and a comparative analysis of their performance. The key focus areas include:

- Handling dataset biases to improve small-object detection.

- Shuffling the dataset to ensure proper generalization.

- Comparing YOLOv12, YOLOv11, and Darknet-Based YOLOv7 on validation/test sets.

- Visualizing detections to compare model performance.

Additionally, this article highlights the importance of understanding dataset structure and biases. Researchers invest significant time in dataset preparation and proper train-validation-test splits to enhance model generalization and avoid misleading results. By addressing these challenges in HRSC2016-MS, we demonstrate how proper data preparation and structuring contribute directly to improved model accuracy.

- Understanding the HRSC2016-MS Dataset

- Dataset Conversion & Preprocessing (Shuffling)

- Fine-Tuning YOLOv12 on the HRSC2016-MS Dataset

- Fine-Tuning YOLOv11 on the HRSC2016-MS Dataset

- Fine-Tuning Darknet-Based YOLOv7 on HRSC2016-MS

- Setting Up DarkNet

- Downloading Pretrained Darknet-Based YOLOv7 Weights

- Text files for Darknet

- Preparing Custom Darknet-Based YOLOv7 Class Names File

- Preparing Custom Darknet-Based YOLOv7 Training Data File

- Preparing Custom Darknet-Based YOLOv7 Testing Data File

- Preparing Custom Darknet-Based YOLOv7 Configuration File

- Training Darknet-Based YOLOv7

- Evaluation Metrics: Darknet-Based YOLOv7

- Comparison of mAP Scores for YOLOv12, YOLOv11, and Darknet-Based YOLOv7

- Inference Results on Few Images

- Key Takeaways

- Conclusion

- References

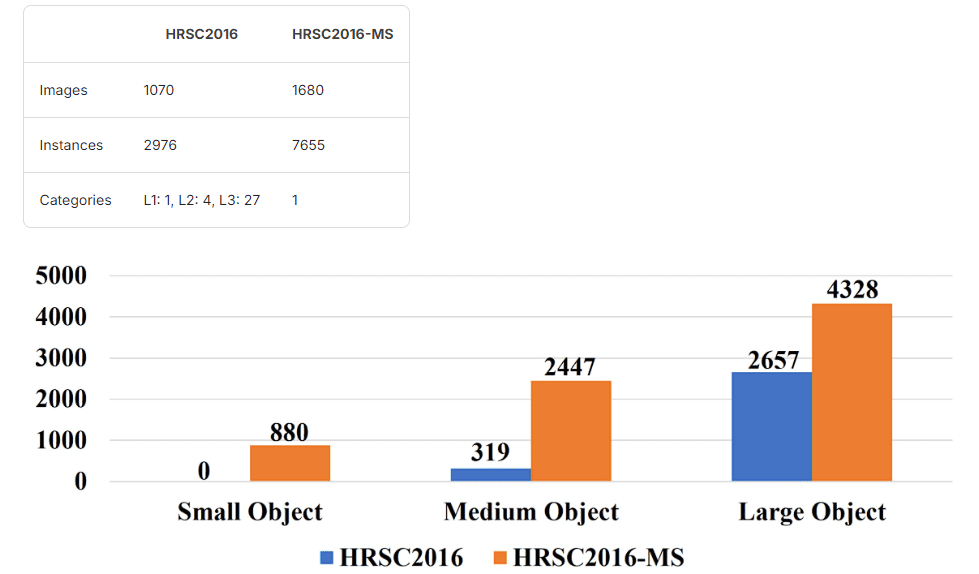

Understanding the HRSC2016-MS Dataset

HRSC2016-MS is a maritime object detection dataset consisting of aerial images of ships. It has a single class, ship, and covers a wide range of ship sizes, densities, and orientations, which makes it challenging for object detection models.

Why Was HRSC2016-MS Introduced?

Before HRSC2016-MS, the HRSC2016 dataset already existed, containing ship images for detection tasks. However, the authors of HRSC2016-MS introduced this modified dataset to address several key limitations of the original dataset:

Enhanced Multi-Scale Representation

- The original HRSC2016 dataset had ships of relatively similar scales and did not sufficiently represent multi-scale variations.

- HRSC2016-MS was introduced to include a broader range of ship sizes, ensuring better generalization across real-world applications.

Improved Dataset Diversity

- The original HRSC2016 dataset lacked enough variance in ship clutter and scale transitions.

- HRSC2016-MS introduced more densely packed ships, improving the dataset’s utility for small-object detection.

More Challenging Object Distributions

- The original dataset had less variation in ship orientations and density per image.

- HRSC2016-MS aimed to increase dataset complexity, making it a better benchmark for advanced object detection models.

Original Dataset Structure (Downloaded from Kaggle)

When first downloaded, the dataset had the following structure:

HRSC2016-MS/

├── AllImages/ # Contains all images (no separation into train/val/test)

├── Annotations/ # VOC XML annotations for each image

└── ImageSets/

    ├── train.txt # List of image filenames for training

    ├── val.txt # List of image filenames for validation

    ├── test.txt # List of image filenames for testing

    └── trainval.txt # List of combined training and validation image filenames

Issues with the Original Dataset

Non-YOLO-Compatible Annotations

- The dataset used VOC-style XML annotations, which needed conversion to YOLO format.

Non-YOLO-Compatible Directory Structure

- All images were stored in AllImages/ instead of being split into train/, val/, and test/.

- The dataset included text files (train.txt, val.txt, test.txt, trainval.txt) containing only image filenames, making it incompatible with YOLO and DarkNet.

Biased Train-Test Split

- The dataset comprises 1680 images, so a representative test set should draw filenames from across the full 1-1680 range. However, on analyzing the label file names in the original HRSC2016-MS dataset, we found that the test set consisted of sequential images with filenames in the range of approximately 1-650. This indicates that images were not distributed evenly across the train, validation, and test sets, which can introduce bias and affect model performance.

- The test set contained smaller, cluttered ships compared to the train and val sets.

- This led to poor generalization, with the model struggling to detect small ships.
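The sequential-filename check described above can be sketched in a few lines. This is only an illustration: the helper name `id_range` and the sample filenames are hypothetical, and it assumes each filename contains a numeric ID.

```python
import re


def id_range(filenames):
    """Return (min_id, max_id) of the first number found in each filename."""
    ids = []
    for name in filenames:
        match = re.search(r"\d+", name)
        if match:
            ids.append(int(match.group()))
    return (min(ids), max(ids)) if ids else None


# Hypothetical filename lists standing in for the contents of ImageSets/*.txt.
example_splits = {
    "train": ["700.jpg", "1200.jpg", "1680.jpg"],
    "test": ["1.jpg", "320.jpg", "650.jpg"],
}

for split_name, names in example_splits.items():
    # A split whose IDs cover only a narrow slice of the full range
    # suggests the split was made sequentially rather than at random.
    print(split_name, id_range(names))
```

A test split whose ID range stops far short of the dataset's maximum ID is a hint that the images were assigned in order rather than sampled at random.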

Dataset Conversion & Preprocessing (Shuffling)

To address the issues with the original dataset, we manually converted and restructured the dataset into YOLO and DarkNet-compatible formats. All of the dataset conversion and preprocessing code can be directly downloaded.

Convert annotations from XML to YOLO (.txt) Format

- Extracted bounding box coordinates (xmin, ymin, xmax, ymax) from XML files. Converted them into YOLO (.txt) format: <class_id> <x_center> <y_center> <width> <height>

- We did not use the rotated bounding box annotations, to keep the evaluation process simpler.
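The conversion described above can be sketched as follows. This is a minimal illustration rather than the exact script used for this article: it assumes the standard Pascal VOC tag layout (`size/width`, `size/height`, `object/bndbox`), and the function names and sample XML are hypothetical.

```python
import xml.etree.ElementTree as ET


def voc_box_to_yolo(xmin, ymin, xmax, ymax, img_w, img_h):
    """Convert pixel corner coordinates to normalized (x_center, y_center, width, height)."""
    x_center = (xmin + xmax) / 2.0 / img_w
    y_center = (ymin + ymax) / 2.0 / img_h
    width = (xmax - xmin) / img_w
    height = (ymax - ymin) / img_h
    return x_center, y_center, width, height


def voc_xml_to_yolo_lines(xml_text, class_id=0):
    """Return one YOLO label line per <object> in a VOC-style XML string."""
    root = ET.fromstring(xml_text)
    img_w = float(root.findtext("size/width"))
    img_h = float(root.findtext("size/height"))
    lines = []
    for obj in root.iter("object"):
        box = obj.find("bndbox")
        coords = [float(box.findtext(tag)) for tag in ("xmin", "ymin", "xmax", "ymax")]
        xc, yc, w, h = voc_box_to_yolo(*coords, img_w, img_h)
        # Single-class dataset, so every object gets the same class_id.
        lines.append(f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}")
    return lines


# Tiny hypothetical annotation, used only to demonstrate the call.
SAMPLE_XML = """<annotation>
  <size><width>1000</width><height>500</height></size>
  <object><name>ship</name>
    <bndbox><xmin>100</xmin><ymin>50</ymin><xmax>300</xmax><ymax>150</ymax></bndbox>
  </object>
</annotation>"""

print(voc_xml_to_yolo_lines(SAMPLE_XML))
```

Each returned line would be written to a .txt file with the same base name as the image.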

Shuffling the Entire Dataset for Generalization

- Since the test set contained mostly small, dense ships, training on the original dataset led to poor generalization.

- We merged, shuffled, and re-split the dataset to ensure the following:

  - A balanced distribution of small and large ships across the train, val, and test sets.

  - Prevention of overfitting to specific object sizes.

- The results presented here serve as a baseline. Given the inherent randomness in dataset splitting, other researchers may observe slightly varying outcomes; some may achieve better results, while others might obtain marginally poorer results. To ensure transparency and reproducibility, we will also be providing the exact dataset split used in our experiments, which will be available for reference in a zip file.
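A seeded merge, shuffle, and re-split along these lines might look like the sketch below. The 70/15/15 ratios and the seed value are assumptions chosen for illustration; the article does not state the ratios used, and the exact split is the one shipped in the zip file.

```python
import random


def shuffle_and_split(image_ids, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle all image IDs with a fixed seed and split them into train/val/test."""
    ids = sorted(image_ids)  # sort first so the result does not depend on input order
    random.Random(seed).shuffle(ids)
    n_train = round(len(ids) * train_frac)
    n_val = round(len(ids) * val_frac)
    return {
        "train": ids[:n_train],
        "val": ids[n_train:n_train + n_val],
        "test": ids[n_train + n_val:],  # whatever remains goes to the test set
    }


# The dataset has 1680 images; integer IDs stand in for the real filenames here.
splits = shuffle_and_split(range(1, 1681))
print({name: len(ids) for name, ids in splits.items()})
```

Fixing the seed makes the split repeatable on one machine, but sharing the resulting file lists remains the more reliable way to reproduce results.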

Restructuring the Dataset for YOLO

After processing, the dataset was restructured into the YOLO-specific layout below:

HRSC-YOLO/

├── train/

│ ├── images/ # Training images

│ ├── labels/ # Training labels in YOLO format

├── val/

│ ├── images/ # Validation images

│ ├── labels/ # Validation labels in YOLO format

├── test/

│ ├── images/ # Test images

│ ├── labels/ # Test labels in YOLO format
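Ultralytics models read the dataset location from a YAML file. A minimal sketch of generating one for the layout above is shown here; the helper name `build_dataset_yaml` and the output filename in the comment are hypothetical, and the root path should be adjusted to wherever HRSC-YOLO/ actually lives.

```python
from pathlib import Path


def build_dataset_yaml(dataset_root):
    """Return the text of an Ultralytics-style dataset YAML for a single 'ship' class."""
    root = Path(dataset_root)
    lines = [
        f"path: {root.as_posix()}",  # dataset root directory
        "train: train/images",       # image folders, relative to 'path'
        "val: val/images",
        "test: test/images",
        "names:",
        "  0: ship",
    ]
    return "\n".join(lines) + "\n"


yaml_text = build_dataset_yaml("HRSC-YOLO")
print(yaml_text)
# To save it (the filename is an assumption):
# Path("hrsc2016_ms.yaml").write_text(yaml_text)
```

The label folders are not listed because Ultralytics locates them by swapping `images` for `labels` in each image path.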

Restructuring the Dataset for DarkNet

DarkNet requires a different structure, where each image and its corresponding label file are stored together. The format is as follows –

darknet_dataset/

├── train/

│ ├── image1.JPG

│ ├── image1.txt

├── valid/

│ ├── image2.JPG

│ ├── image2.txt

├── test/

│ ├── image3.JPG

│ ├── image3.txt
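One way to produce this flat layout from the YOLO-style folders is to copy each image next to its label file. The sketch below is an assumption about how this could be done, not the notebook's actual code; `flatten_split` is a hypothetical helper, and it skips images that have no label file.

```python
import shutil
from pathlib import Path


def flatten_split(images_dir, labels_dir, out_dir):
    """Copy each image and its matching .txt label into one flat directory."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    copied = 0
    for image_path in sorted(Path(images_dir).iterdir()):
        label_path = Path(labels_dir) / (image_path.stem + ".txt")
        if not label_path.exists():
            continue  # design choice: ignore images without annotations
        shutil.copy2(image_path, out / image_path.name)
        shutil.copy2(label_path, out / label_path.name)
        copied += 1
    return copied
```

For example, `flatten_split("HRSC-YOLO/train/images", "HRSC-YOLO/train/labels", "darknet_dataset/train")` would populate the train/ folder shown above, and the same call can be repeated for the validation and test splits.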

Fine-Tuning YOLOv12 on the HRSC2016-MS Dataset

Model Configuration

- Used YOLOv12 (Ultralytics implementation).

- Set image size = 640×640 for optimal performance.

Training Pipeline

- Set batch size = 8 and number of epochs = 100.

- Mosaic Augmentation was used to expose the model to small objects at different scales.

- Adaptive Learning Rate Scheduling helped prevent overfitting.

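from ultralytics import YOLO

# yolov12_model_path (pretrained checkpoint) and data_yaml (dataset YAML path) must be defined before calling this.
def train_yolov12(epochs=100, batch_size=8):
    # Load the pretrained YOLOv12 checkpoint.
    model = YOLO(yolov12_model_path)
    # Fine-tune at 640x640 and save a checkpoint every 10 epochs.
    model.train(data=data_yaml, epochs=epochs, batch=batch_size, imgsz=640, device='cuda', workers=4, save=True, save_period=10)
    # Evaluate the fine-tuned model on the validation split.
    model.val()
    print(model)
    print("YOLOv12 training completed on HRSC2016-MS.")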

Evaluation Metrics

- mAP@50, mAP@75, and mAP@[50:95] were tracked.

- Performance was compared before and after dataset preprocessing; the results are shown below.

| YOLOv12 trained on | mAP@50 | mAP@75 | mAP@[50:95] |
| --- | --- | --- | --- |
| Original dataset | 74.9% | 61.5% | 54.5% |
| Shuffled dataset | 85.2% | 75.1% | 66.3% |

![yolov12_original_shuffled – LearnOpenCV Two bar charts comparing YOLOv12’s mAP scores on the validation and test sets before and after dataset shuffling. The shuffled dataset shows improved mAP@[50:95], indicating better generalization and small-object detection.](https://learnopencv.com/wp-content/uploads/2025/03/yolov12_original_shuffled-1024x435.png)

Fine-Tuning YOLOv11 on the HRSC2016-MS Dataset

- The same training pipeline as for YOLOv12 was followed.

- Compared YOLOv11’s performance on the original dataset vs. the preprocessed dataset.

- mAP scores are compared using bar plots; the results are shown below:

| YOLOv11 trained on | mAP@50 | mAP@75 | mAP@[50:95] |
| --- | --- | --- | --- |
| Original dataset | 75.3% | 61.6% | 55.0% |
| Shuffled dataset | 86.2% | 75.8% | 67.0% |

![yolov11_original_shuffled – LearnOpenCV Two bar charts comparing YOLOv11’s mAP scores on validation and test sets before and after dataset shuffling. The shuffled dataset improves mAP@[50:95], indicating enhanced small-object detection and better generalization.](https://learnopencv.com/wp-content/uploads/2025/03/yolov11_original_shuffled-1024x435.png)

Fine-Tuning Darknet-Based YOLOv7 on HRSC2016-MS

DarkNet is the original framework for developing the YOLO (You Only Look Once) family of object detection models. Unlike Ultralytics’s implementation of YOLO models, Darknet-Based YOLOv7 requires a specific directory structure, configuration files, and manually defined dataset paths. This section provides a detailed breakdown of how we set up, trained, and evaluated Darknet-Based YOLOv7 on the HRSC2016-MS dataset.

We provide a Jupyter notebook that runs in the VS Code editor. It walks through data preparation, preprocessing, and generation of the configuration and text files that DarkNet needs before training. Launching the actual DarkNet training from the code editor is not recommended, however: training produces a very large amount of output, which may crash the editor window.

For the training and evaluation sections, run the commands in a terminal instead. The notebook contains the detailed instructions, and following them carefully is advised.

Setting Up DarkNet

- Cloned the DarkNet repository.

- Compiled DarkNet with CUDA & OpenCV support.

- For a step-by-step guide to building Darknet, see our article on pothole detection using YOLOv4 and Darknet: https://learnopencv.com/pothole-detection-using-yolov4-and-darknet/

Downloading Pretrained Darknet-Based YOLOv7 Weights

To fine-tune Darknet-Based YOLOv7 on HRSC2016-MS, we use pretrained weights as a starting point. Download the YOLOv7 DarkNet weights using:

%cd /present/working/directory/darknet/

!wget https://github.com/AlexeyAB/darknet/releases/download/yolov4/yolov7x.conv.147

!wget https://github.com/AlexeyAB/darknet/releases/download/yolov4/yolov7x.weights

Save the weights inside the darknet/ directory. From now on, every command must be executed from the darknet subdirectory inside the cloned Darknet directory.

Text files Needed by Darknet

DarkNet requires explicit text files specifying the paths of images.

- train.txt → Contains absolute paths of images used for training.

- valid.txt → Contains absolute paths of images used for validation.

- test.txt → Contains absolute paths of images used for evaluation.

To generate these text files, we write and then run the following Python script:

%%writefile prepare_darknet_image_txt_paths.py
import os

# Each directory holds the images and their .txt label files side by side.
DATA_ROOT_TRAIN = os.path.join('/directory/path/just/outside/the/built/darknet', 'train2')
DATA_ROOT_VALID = os.path.join('/directory/path/just/outside/the/built/darknet', 'val2')
DATA_ROOT_TEST = os.path.join('/directory/path/just/outside/the/built/darknet', 'test2')


def write_image_paths(data_root, output_file):
    """Write the path of every non-label file in data_root to output_file, one per line."""
    with open(output_file, 'w') as f:
        for file_name in sorted(os.listdir(data_root)):
            # Skip the YOLO label files; everything else is treated as an image.
            if not file_name.endswith('.txt'):
                f.write(os.path.join(data_root, file_name) + '\n')


write_image_paths(DATA_ROOT_TRAIN, 'train.txt')
write_image_paths(DATA_ROOT_VALID, 'valid.txt')
write_image_paths(DATA_ROOT_TEST, 'test.txt')

After creating the script inside the darknet directory, run it with the command below.

!python prepare_darknet_image_txt_paths.py

DarkNet also requires three custom configuration files:

- obj.names (ship.names) → Class names file

- obj.data (hrsc2016-ms-yolov7.data) → Training data file

- Custom .cfg file (yolov7-darknet-hrsc2016-ms.cfg) → Used to define YOLOv7-based Darknet architecture for the particular dataset

Preparing Custom Darknet-Based YOLOv7 Class Names File

%%writefile build/darknet/x64/data/ship.names

ship

Preparing Custom Darknet-Based YOLOv7 Training Data File

We need to make sure that we have already created a directory named backup_2000 before we start the training process.

%%writefile build/darknet/x64/data/hrsc2016-ms-yolov7.data

classes = 1

train = train.txt

valid = valid.txt

names = build/darknet/x64/data/ship.names

backup = backup_2000

Preparing Custom Darknet-Based YOLOv7 Testing Data File

Create the backup_test_2000 directory before starting inference and testing.

%%writefile build/darknet/x64/data/ship_test.data

classes = 1

train = train.txt

valid = test.txt

names = build/darknet/x64/data/ship.names

backup = backup_test_2000
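Both .data files name a backup directory that should exist before DarkNet is launched. A small sketch for creating it from the file contents is given below; `ensure_backup_dir` is a hypothetical helper, not part of DarkNet.

```python
from pathlib import Path


def ensure_backup_dir(data_file_text, base_dir="."):
    """Create the directory named by the 'backup' key of a Darknet .data file."""
    for line in data_file_text.splitlines():
        key, _, value = line.partition("=")
        if key.strip() == "backup" and value.strip():
            backup_dir = Path(base_dir) / value.strip()
            backup_dir.mkdir(parents=True, exist_ok=True)
            return backup_dir
    return None  # no 'backup' entry found
```

Calling `ensure_backup_dir(Path("build/darknet/x64/data/hrsc2016-ms-yolov7.data").read_text())` from the darknet directory would create backup_2000 if it is missing; the same call on ship_test.data would create backup_test_2000.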

Preparing Custom Darknet-Based YOLOv7 Configuration File

We create a custom config file that is almost identical to the default yolov7x.cfg (we use the large variant because we are comparing large YOLO models), changing only the few parameters that depend on our dataset.

In the new configuration file, we keep batch at 64 even though YOLOv12 and YOLOv11 were trained with a batch size of 8, because the nominal batch size (NBS) for YOLO models is 64. We also advise keeping subdivisions at 64: a smaller subdivisions value loads more images per forward pass and may cause out-of-memory errors while training Darknet-Based YOLOv7 and calculating mAP scores.

%%writefile cfg/yolov7-darknet-hrsc2016-ms.cfg

[net]

# Testing

#batch=64

#subdivisions=64

# Training

batch=64

subdivisions=64

width=640

height=640

channels=3

momentum=0.9

decay=0.0005

angle=0

saturation = 1.5

exposure = 1.5

hue=.1

learning_rate=0.00261

burn_in=1000

max_batches = 2000

policy=steps

steps=1600,1800

scales=.1,.1

...

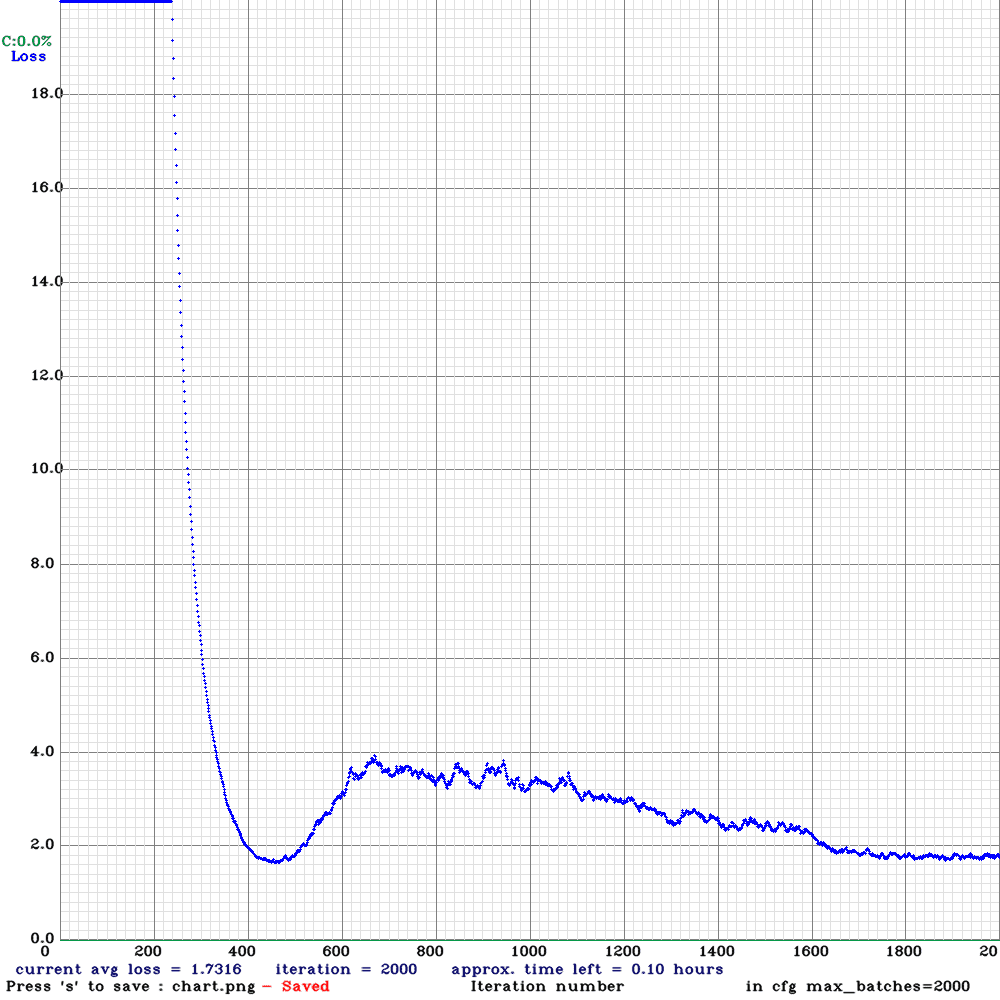

As a rule of thumb, max_batches is set to 2000 * num_classes. Matching the 100 epochs used for the Ultralytics YOLO models would have required a max_batches value of around 1000, but in our experiments the model had not yet converged at that point. We therefore trained for 2000 iterations (max_batches = 2000), with the learning rate scheduled to drop at steps 1600 and 1800.
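The relation between epochs and DarkNet iterations can be made explicit with a short calculation. One DarkNet iteration processes `batch` images, so the iteration count for a given number of epochs follows directly. The training-set size of 640 used below is a hypothetical value chosen so that the arithmetic reproduces the "around 1000" figure; the article does not state the actual split sizes.

```python
import math


def darknet_iterations(epochs, num_train_images, batch=64):
    """Iterations (max_batches) needed to see the training set `epochs` times."""
    return math.ceil(epochs * num_train_images / batch)


# Hypothetical training-set size, for illustration only.
print(darknet_iterations(epochs=100, num_train_images=640))  # 1000
```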

[convolutional]

size=1

stride=1

pad=1

filters=18

#activation=linear

activation=logistic

[yolo]

mask = 6,7,8

anchors = 12,16, 19,36, 40,28, 36,75, 76,55, 72,146, 142,110, 192,243, 459,401

classes=1

...

Next, modify the number of filters and classes. The configuration file contains three [yolo] layers; change classes in each of them from 80 to 1, as we have only one class. The [convolutional] layer immediately before each [yolo] layer contains a filters parameter; set it to (num_classes+5)*3, which is 18 in our case.

The parameters to be modified are summarized as follows –

- batch=64

- subdivisions=64

- max_batches=2000

- steps=1600,1800 (80% and 90% of max_batches respectively)

- classes=1 in [yolo] layers

- filters=18
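The dataset-dependent values in this list follow simple formulas, sketched below. The helper name `darknet_cfg_values` is hypothetical; the formulas are the ones stated in this section, plus the fact that DarkNet loads batch / subdivisions images per forward pass.

```python
def darknet_cfg_values(num_classes, max_batches, batch=64, subdivisions=64,
                       anchors_per_scale=3):
    """Derive the dataset-dependent values for a Darknet YOLO .cfg file."""
    return {
        # Each anchor predicts 4 box coordinates + 1 objectness score + class scores.
        "filters": (num_classes + 5) * anchors_per_scale,
        # Learning-rate drops at 80% and 90% of max_batches (integer arithmetic).
        "steps": (max_batches * 8 // 10, max_batches * 9 // 10),
        # Images actually loaded on the GPU per forward pass.
        "images_per_forward_pass": batch // subdivisions,
    }


print(darknet_cfg_values(num_classes=1, max_batches=2000))
```

With batch=64 and subdivisions=64, only one image is processed per forward pass, which is why this setting keeps memory use low.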

After running all of the above scripts, the darknet/ directory will look like this:

darknet/

├── train.txt # List of paths to the training images

├── valid.txt # List of paths to the validation images

├── test.txt # List of paths to the test images

├── cfg/

│ ├── yolov7-darknet-hrsc2016-ms.cfg

├── build/darknet/x64/data/

│ ├── ship.names

│ ├── hrsc2016-ms-yolov7.data

│ ├── ship_test.data

...

Training Darknet-Based YOLOv7

Reminder: Run the following commands in the terminal only.

The terminal command below starts the training process of the Darknet-Based YOLOv7 model and calculates the mAP@50 score after the training ends.

./darknet detector train build/darknet/x64/data/hrsc2016-ms-yolov7.data cfg/yolov7-darknet-hrsc2016-ms.cfg yolov7x.conv.147 -map -dont_show

Evaluation Metrics: Darknet-Based YOLOv7

The following terminal command computes the mAP score at an IoU threshold of 0.75. Change the -iou_thresh value to evaluate at a different IoU threshold.

./darknet detector map build/darknet/x64/data/ship_test.data cfg/yolov7-darknet-hrsc2016-ms.cfg backup_2000/yolov7-darknet-hrsc2016-ms_final.weights -iou_thresh 0.75

The split being evaluated is set by the ‘valid’ entry of the .data file passed to the command. ship_test.data points ‘valid’ at ‘test.txt’, so the command above reports scores on the test set; to score the validation set instead, pass hrsc2016-ms-yolov7.data, whose ‘valid’ entry is ‘valid.txt’.
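DarkNet reports mAP at one IoU threshold per run. A COCO-style mAP@[50:95] is the mean of the scores at thresholds 0.50, 0.55, ..., 0.95, so one way to obtain it is to repeat the map command for each threshold and average the results. The sketch below only builds the commands and averages values you supply; the article does not say how its Darknet mAP@[50:95] was computed, so treat this as an assumption, and the helper names as hypothetical.

```python
def iou_thresholds():
    """The ten COCO-style IoU thresholds: 0.50, 0.55, ..., 0.95."""
    return [round(0.50 + 0.05 * i, 2) for i in range(10)]


def map_commands(data_file, cfg_file, weights_file):
    """Build one 'darknet detector map' command (as an argument list) per threshold."""
    return [
        ["./darknet", "detector", "map", data_file, cfg_file, weights_file,
         "-iou_thresh", f"{threshold:.2f}"]
        for threshold in iou_thresholds()
    ]


def mean_ap_50_95(map_per_threshold):
    """Average ten per-threshold mAP values into a single mAP@[50:95] score."""
    if len(map_per_threshold) != 10:
        raise ValueError("expected one mAP value for each of the 10 thresholds")
    return sum(map_per_threshold) / 10


commands = map_commands(
    "build/darknet/x64/data/ship_test.data",
    "cfg/yolov7-darknet-hrsc2016-ms.cfg",
    "backup_2000/yolov7-darknet-hrsc2016-ms_final.weights",
)
print(" ".join(commands[0]))
```

Each command can be run in the terminal (or via `subprocess.run`), and the ten reported mAP values passed to `mean_ap_50_95`.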

Use the following command to generate detections from the trained model on a list of images. Make sure the image paths fed to the command (here, the contents of test.txt) are correct. The output contains the bounding-box locations predicted for each image.

./darknet detector test build/darknet/x64/data/hrsc2016-ms-yolov7.data cfg/yolov7-darknet-hrsc2016-ms.cfg backup_2000/yolov7-darknet-hrsc2016-ms_final.weights -dont_show -ext_output < test.txt > results_darknet_test.txt

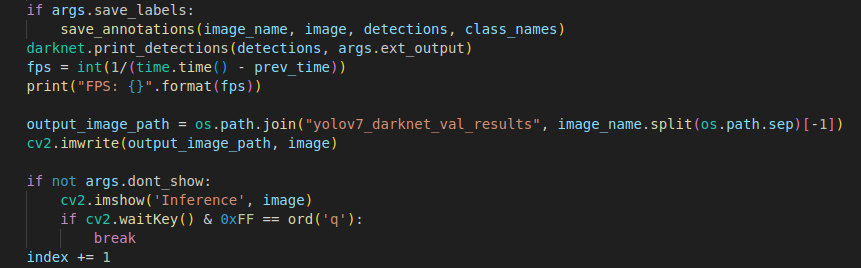

To visualize the detections on the images, however, we have to modify a Python script (darknet_images.py) that ships with the cloned darknet repository. Two extra lines are needed so that the annotated images are saved to a directory. Create the yolov7_darknet_test_results directory beforehand, since cv2.imwrite does not create missing directories. Then open darknet_images.py in the VS Code editor and add the following two lines:

output_image_path = os.path.join("yolov7_darknet_test_results", image_name.split(os.path.sep)[-1])

cv2.imwrite(output_image_path, image)

The changes made are shown below for reference.

[Figure: darknet_images.py modified to save Darknet-Based YOLOv7 detections]

To run the darknet_images.py script with all of the required arguments, use the following command in the terminal.

python darknet_images.py --data_file build/darknet/x64/data/ship_test.data --input /path/to/test/images --config_file cfg/yolov7-darknet-hrsc2016-ms.cfg --weights backup_2000/yolov7-darknet-hrsc2016-ms_final.weights --thresh 0.25 --ext_output --save_labels --dont_show

We are now done with the experiments. It’s time to compare the performances of the fine-tuned models now.

Comparing mAP Scores of YOLOv12, YOLOv11, and Darknet-Based YOLOv7

mAP scores on the preprocessed dataset were significantly higher than on the original dataset, as has been shown earlier in this article. The final comparison on the preprocessed dataset based on the evaluation metrics mAP@50, mAP@75, and mAP@[50:95] is shown below.

On the validation set:

| Model | mAP@50 | mAP@75 | mAP@[50:95] |
| --- | --- | --- | --- |
| YOLOv7 (DarkNet) | 85.6% | 71.9% | 61.56% |
| YOLOv11 | 83.7% | 72.2% | 63.8% |
| YOLOv12 | 83% | 71.8% | 63.5% |

![all_models_valid – LearnOpenCV A bar chart comparing mAP@50, mAP@75, and mAP@[50:95] for Darknet-Based YOLOv7, YOLOv11, and YOLOv12 on the validation set. YOLOv7-based DarkNet achieves the highest mAP@50, while YOLOv11 outperforms in higher IoU thresholds.](https://learnopencv.com/wp-content/uploads/2025/03/all_models_valid.png)

On the test set:

| Model | mAP@50 | mAP@75 | mAP@[50:95] |
| --- | --- | --- | --- |
| YOLOv7 (DarkNet) | 88.2% | 75.6% | 6.5% |
| YOLOv11 | 86.2% | 75.8% | 67% |
| YOLOv12 | 85.2% | 75.1% | 66.3% |

![all_models_test – LearnOpenCV A bar chart comparing mAP@50, mAP@75, and mAP@[50:95] for Darknet-Based YOLOv7, YOLOv11, and YOLOv12 on the test set. YOLOv7-based DarkNet achieves the highest mAP@50, while YOLOv11 outperforms at stricter IoU thresholds.](https://learnopencv.com/wp-content/uploads/2025/03/all_models_test-1.png)

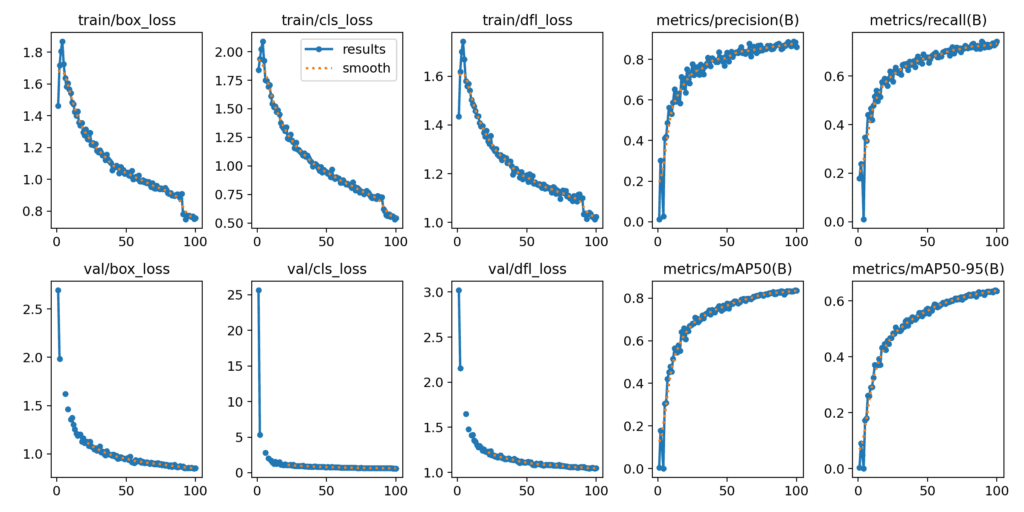

Visualization of Training and Loss Graphs

YOLOv12

![YOLOv12 results graphs – LearnOpenCV Graphs showing the training and validation losses for fine-tuned YOLOv12, including box loss, class loss, and objectness (dfl) loss. The metrics for precision, recall, mAP@50, and mAP@[50:95] are also plotted, highlighting the model's performance and improvement during training.](https://learnopencv.com/wp-content/uploads/2025/03/YOLOv12-results-graphs-1024x512.png)

YOLOv11

Darknet-Based YOLOv7

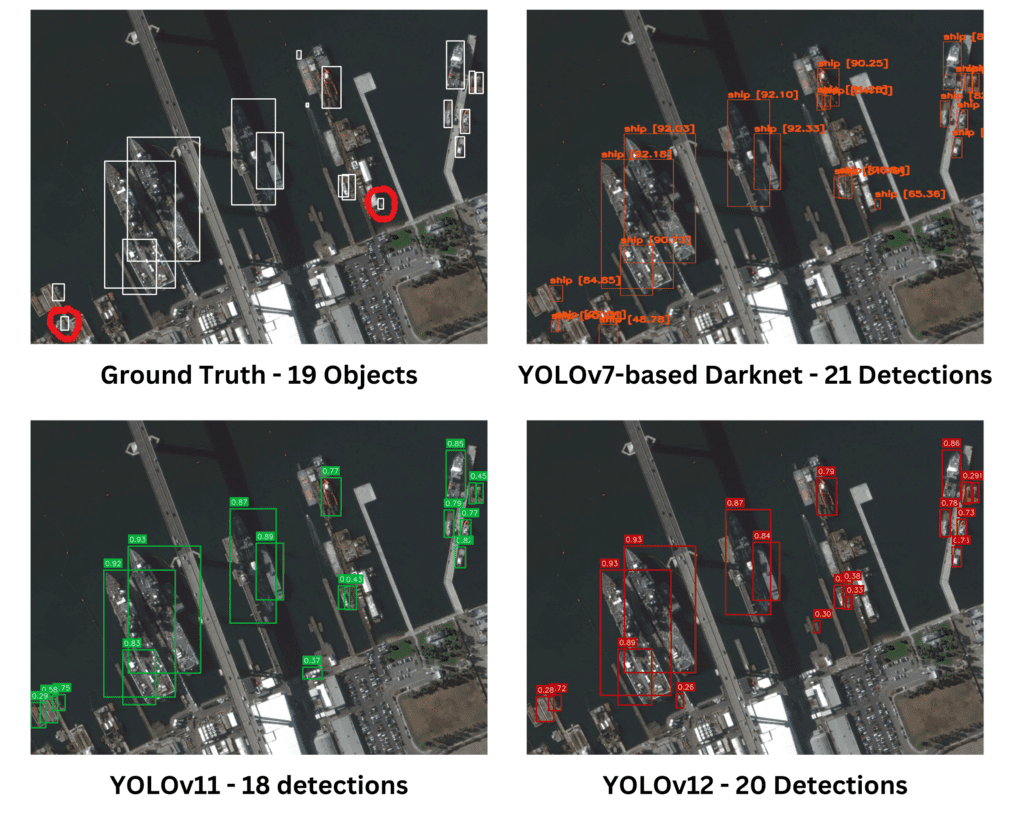

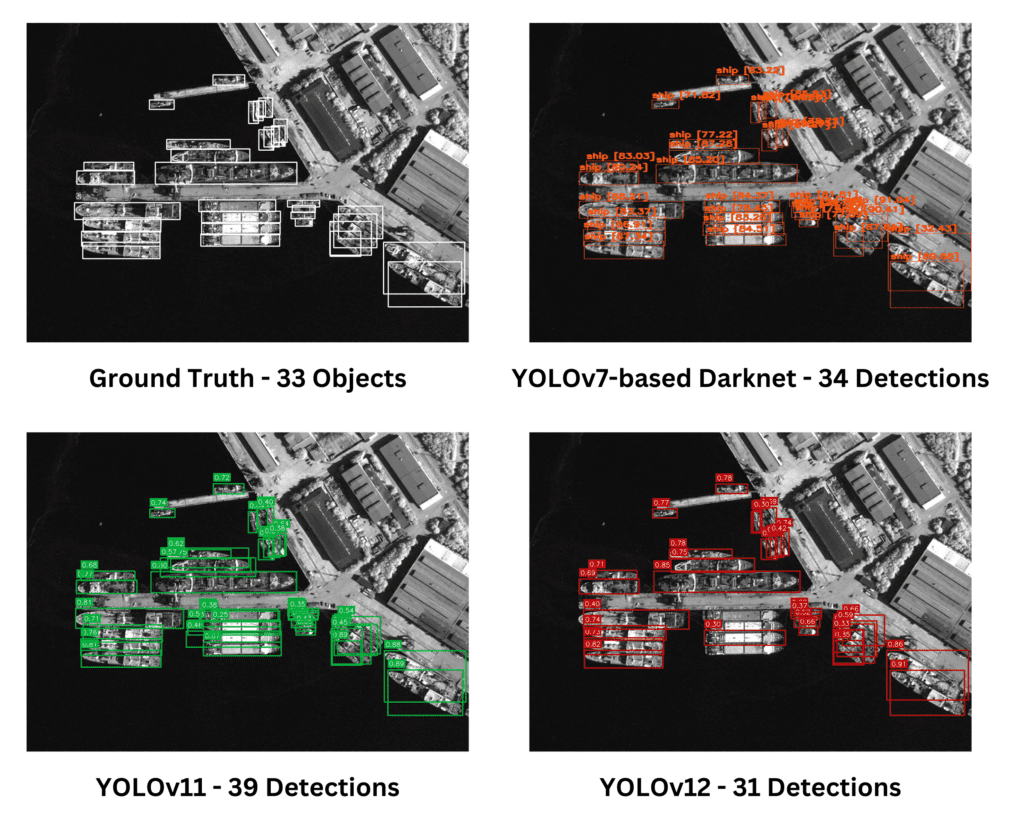

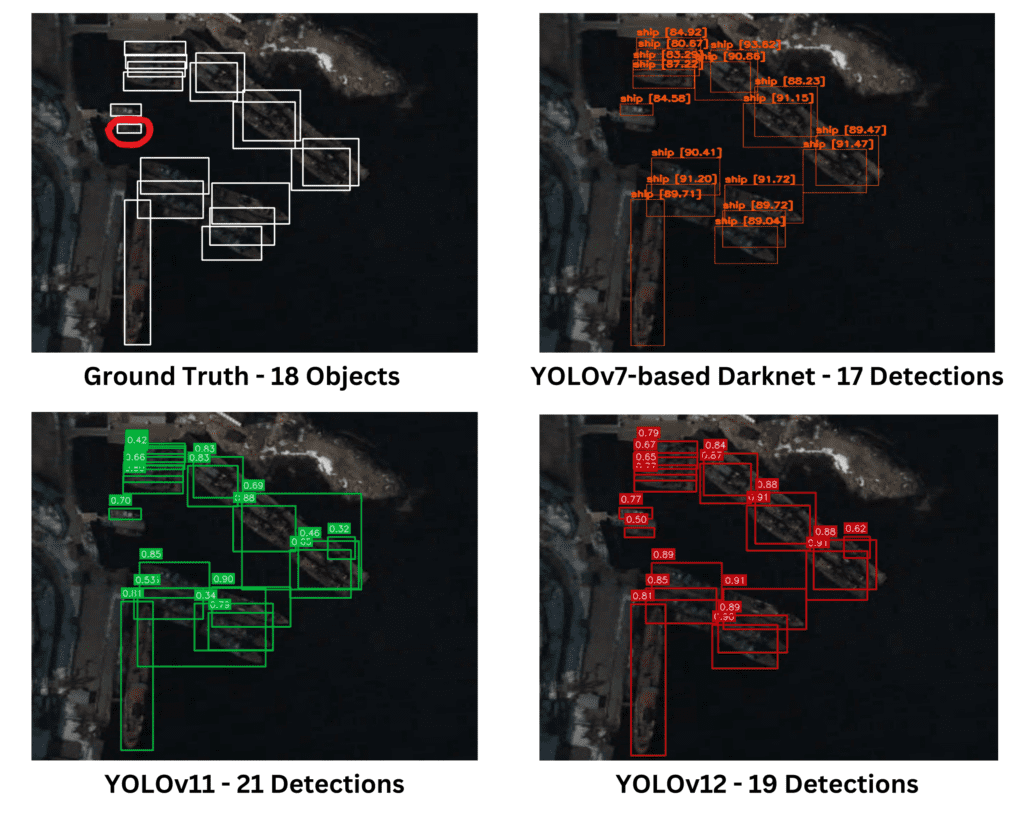

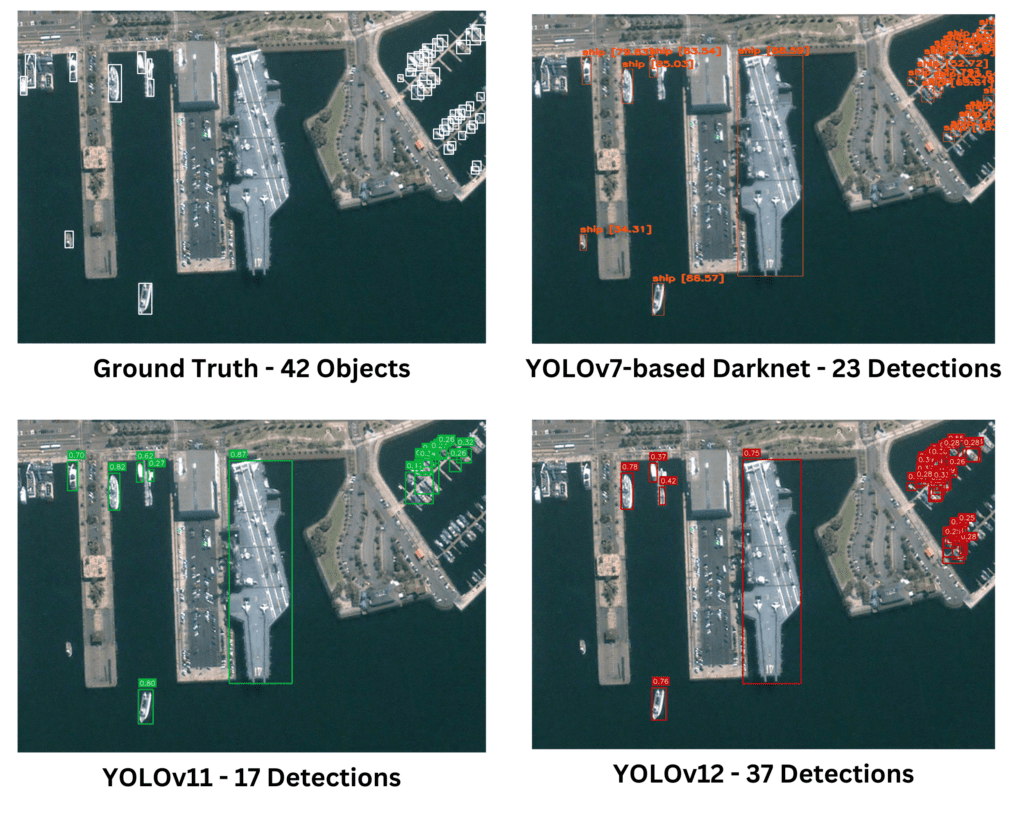

Inference Results on Few Images

All three models demonstrated similar performance on the given Ground Truth image. Darknet-Based YOLOv7 was more accurate in identifying the marked small objects that were missed or less accurately detected by the other models.

All evaluated models demonstrated strong performance in detecting dense and small objects. However, YOLOv11 exhibited overlapping detections, which may indicate redundant or less precise predictions. Overall, the models effectively handled the detection task with varying degrees of overlap and precision.

YOLOv12 was the only model able to detect the marked object in the Ground Truth image. Overall, all models exhibited similar performance in object detection. However, YOLOv11 continued to produce overlapping detections, indicating potential redundancy or reduced precision.

The given image serves as an ideal test case for evaluating object detection models, particularly in dense and small object scenarios. A superficial comparison may suggest that YOLOv12 outperforms other models based on the higher number of detections. However, a detailed analysis reveals that many YOLOv12 detections are overlapping, redundant, or false positives. Darknet-Based YOLOv7, despite detecting fewer objects, demonstrated higher accuracy with minimal false positives and overlaps. A robust evaluation must consider precision, recall, and false detection rates, rather than relying solely on detection count.
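To make the point about detection count concrete, the sketch below computes precision and recall for one image by greedily matching predictions to ground-truth boxes at an IoU threshold. It is a simplified illustration with hypothetical boxes, not the evaluation code used for the tables in this article.

```python
def iou(a, b):
    """IoU of two boxes given as (xmin, ymin, xmax, ymax)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, iou_thresh=0.5):
    """predictions: list of (confidence, box); ground_truth: list of boxes."""
    matched = set()
    true_positives = 0
    # Visit predictions from most to least confident.
    for _, box in sorted(predictions, key=lambda p: p[0], reverse=True):
        best_iou, best_idx = 0.0, None
        for idx, gt_box in enumerate(ground_truth):
            if idx in matched:
                continue  # each ground-truth box can be matched only once
            score = iou(box, gt_box)
            if score > best_iou:
                best_iou, best_idx = score, idx
        if best_idx is not None and best_iou >= iou_thresh:
            matched.add(best_idx)
            true_positives += 1
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall


# Hypothetical example: three detections, one of them a duplicate of the first.
gt_boxes = [(0, 0, 10, 10), (20, 20, 30, 30)]
detections = [(0.9, (0, 0, 10, 10)), (0.8, (1, 1, 11, 11)), (0.7, (50, 50, 60, 60))]
print(precision_recall(detections, gt_boxes))
```

Here three detections yield only one true positive: the overlapping duplicate and the stray box both count as false positives, so a higher detection count lowers precision without improving recall.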

Key Takeaways

- mAP@50 Scores Indicate Strong Object Detection in Darknet-Based YOLOv7. This suggests that DarkNet is highly effective at basic object localization (detecting ships) but may struggle with tighter localization constraints.

- YOLOv11 Outperforms at High IoU Thresholds (mAP@75 & mAP@[50:95]). Its lead appears at the stricter IoU thresholds, which suggests that YOLOv11 localizes objects more precisely and produces tighter bounding boxes than the other models.

- YOLOv12 Never Led in mAP but Had Stronger Visual Inference Quality. YOLOv12’s ability to infer images more effectively may be due to better feature extraction for ships in dense environments.

- The HRSC2016-MS Dataset Benefited from Preprocessing, Chiefly Shuffling. Shuffling and restructuring the dataset improved generalization, allowing all models to detect smaller ships more effectively.

- Model Performance is Dataset-Dependent. Even though YOLOv12 is the latest model, it didn’t dominate in any mAP score evaluation metric. Darknet-Based YOLOv7 excelled in mAP@50, showing that older architectures can still be competitive in specific datasets.

Conclusion

This study provided an in-depth analysis of fine-tuning YOLOv12, YOLOv11, and Darknet-Based YOLOv7 on the HRSC2016-MS dataset, focusing on improving small-object detection in aerial ship imagery. Shuffling and restructuring the original dataset played a crucial role in improving model performance, as the initial train-test split was biased and hurt generalization.

YOLOv11 outperformed all models at stricter IoU thresholds (mAP@75 and mAP@[50:95]), making it the best for precise bounding box predictions. Despite YOLOv12 not achieving the highest mAP scores, it demonstrated strong real-world inference performance, suggesting that qualitative detection effectiveness extends beyond numerical mAP values.
