Object detection has traditionally been a closed-set problem: you train on a fixed list of classes and cannot recognize new ones. Grounding DINO removes this limitation by weaving language understanding directly into a transformer-based detector, turning it into an open-set, language-conditioned model. It can localize any object you name in natural language, zero-shot, even concepts it has never encountered as explicit labels.

In this comprehensive tutorial, we explore how Grounding DINO achieves open-set detection, delve into its architectural innovations, review its rigorous experimental validation, and then roll up our sleeves to fine-tune it on a practical face-mask detection problem.

- Grounding DINO: An Overview

- Core Architectural Components of Grounding DINO

- Implementation Details of Grounding DINO

- Experimental Validation and Ablation Insights

- Fine-Tuning Grounding DINO on the Face Mask Detection Dataset

- Fine-Tuning Grounding DINO Results and Insights

- Guided Image Editing: Combining Grounding DINO’s Detection with Stable Diffusion’s Generation

- Conclusion

- References

Grounding DINO: An Overview

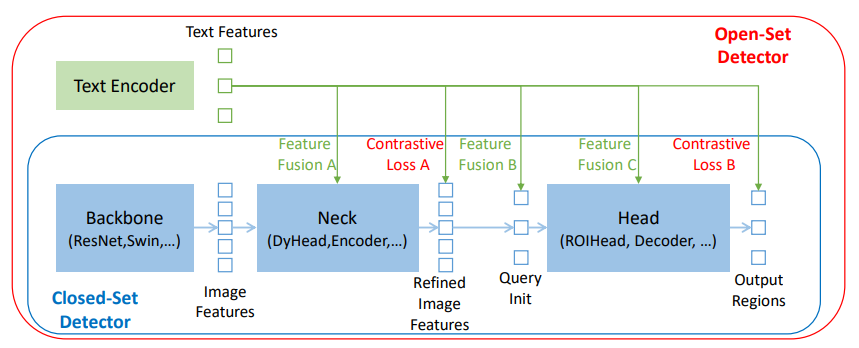

At its core, Grounding DINO builds upon the DETR-style transformer detector DINO, enhancing it in four fundamental ways.

- Feature Enhancer (Early modality fusion) – The Feature Enhancer jointly refines the vision and language streams via deformable self-attention on image tokens and reciprocal cross-attention with text tokens. This ensures that both modalities share a common, grounded feature space before any region proposals are formed.

- Language-Guided Queries (Dynamically select relevant regions) – Rather than using a fixed set of learned object queries, Language-Guided Query Selection scores each enhanced image feature against all text tokens, picks the highest-scoring ones, and initializes the detector’s queries from those embeddings.

- Cross-Modality Decoder (Interleave vision- and text-attention) – The decoder alternates self-attention among queries with image cross-attention and text cross-attention, refining each query’s spatial and semantic understanding in lockstep.

- Sub-Sentence Grounding (Encode each phrase independently) – By encoding each object category or referring expression as an independent sub-sentence with block-diagonal attention masks, Grounding DINO keeps multi-word concepts intact while preventing cross-phrase interference.

This architecture transforms a closed-set DETR into an open-set system: you pass in any text prompt, be it a COCO class, a rare object name, or a natural language expression, and the model returns bounding boxes with grounding scores.

Core Architectural Components of Grounding DINO

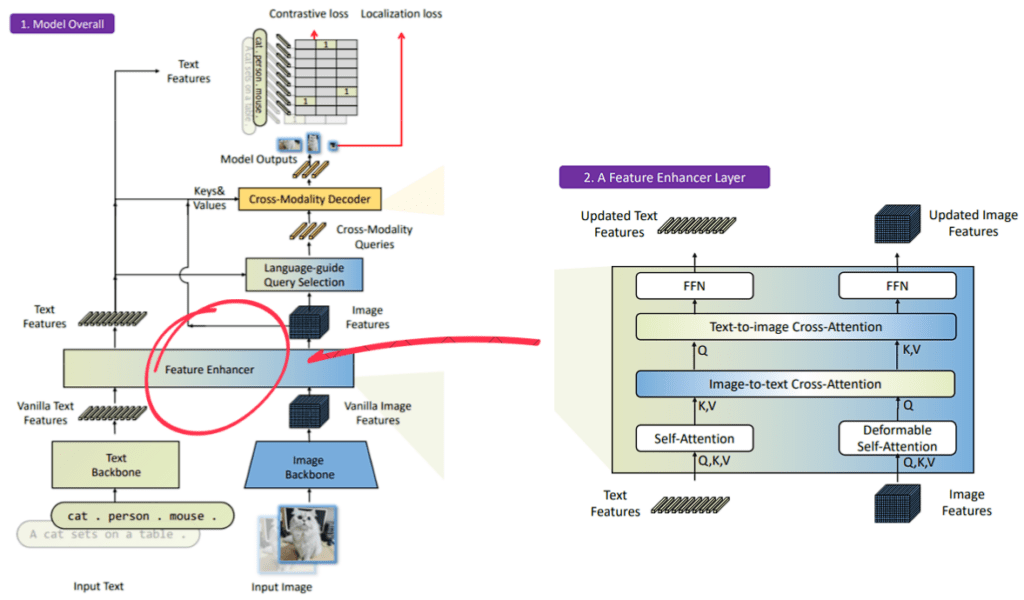

Feature Enhancer: Early Multi-Modal Fusion

The Feature Enhancer sits between the raw backbone outputs and the detector head. On the vision side, it applies deformable self-attention to efficiently capture long-range context across high-resolution feature maps. Concurrently, text tokens, obtained from a BERT-style transformer, undergo standard self-attention. Crucially, each enhancer layer then executes image-to-text cross-attention, enabling language tokens to “read” visual cues, followed by text-to-image cross-attention so that image tokens are biased toward the textual prompt.

Interspersed feed-forward networks refine both modalities after each attention block. By the time features emerge from this stage, they are richly intertwined: every patch embedding carries semantic hints from the language, and every word embedding is tuned to its visual context. This deep, bidirectional grounding is far more powerful than simply concatenating vision and text features at the end.

In a nutshell, before any detection head:

- Deformable Self-Attention on image tokens for multi-scale context.

- Image→Text Cross-Attention to ground visual features in language.

- Text→Image Cross-Attention to bias image tokens toward your prompt.

- Feed-Forward Networks refine each modality.

This early, deep fusion ensures both image and text features speak a common language.
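To make the bidirectional fusion concrete, here is a minimal pure-Python sketch of one simplified fusion step. It is an illustration under stated assumptions, not the model's actual layer: the learned projections, multi-head structure, deformable attention, and feed-forward networks are all omitted, and the names `cross_attend` and `fuse` are hypothetical.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attend(queries, keys_values):
    """Each query becomes a softmax-weighted average of `keys_values`.

    Simplification: the same vectors act as keys and values, and no learned
    projection matrices are applied.
    """
    dim = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim)
                  for k in keys_values]
        weights = softmax(scores)
        out.append([sum(w * kv[d] for w, kv in zip(weights, keys_values))
                    for d in range(dim)])
    return out

def fuse(image_tokens, text_tokens):
    """One fusion step: each modality attends to the other, with a residual add."""
    img_ctx = cross_attend(image_tokens, text_tokens)  # image tokens query the text
    txt_ctx = cross_attend(text_tokens, image_tokens)  # text tokens query the image
    new_img = [[x + c for x, c in zip(tok, ctx)]
               for tok, ctx in zip(image_tokens, img_ctx)]
    new_txt = [[x + c for x, c in zip(tok, ctx)]
               for tok, ctx in zip(text_tokens, txt_ctx)]
    return new_img, new_txt

# Toy example: two image tokens and one text token, all 2-dimensional
image_tokens = [[1.0, 0.0], [0.0, 1.0]]
text_tokens = [[0.5, 0.5]]
fused_img, fused_txt = fuse(image_tokens, text_tokens)
print(fused_img)
print(fused_txt)
```

After this step every image token carries a contribution from the text and vice versa, which is the property the enhancer stacks over several layers.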

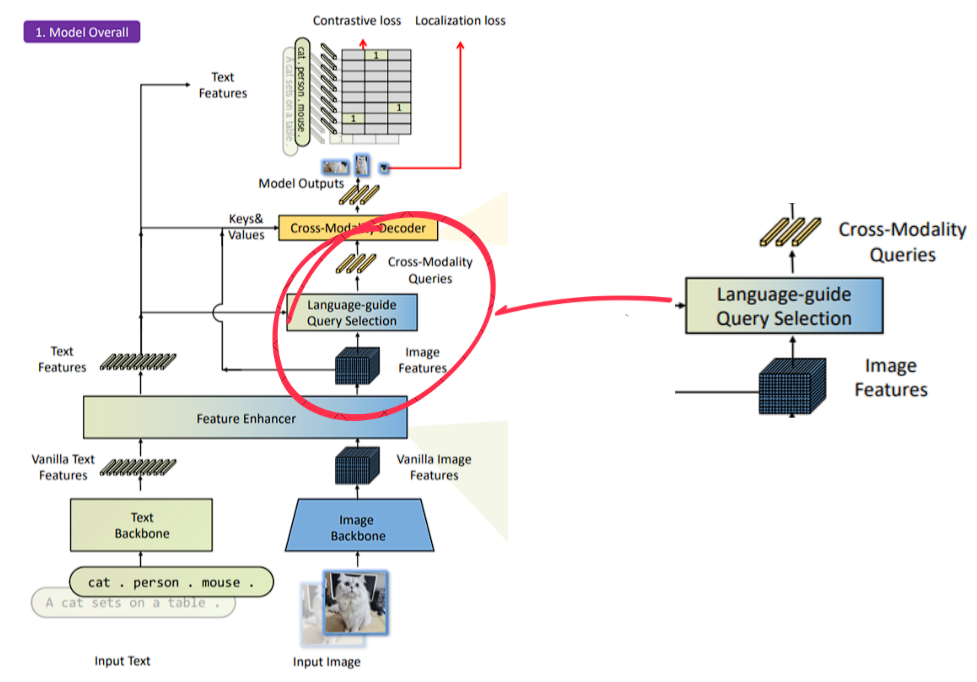

Language-Guided Query Selection: Steering the Detector

Instead of relying on a fixed set of learned DETR queries, Grounding DINO initializes its queries from dynamically selected image features. After the Feature Enhancer, each image token’s embedding is compared to every text token via dot-product similarity; the maximum similarity per token represents how well that spatial feature matches any part of the prompt.

The top-Nq scoring tokens, commonly 900 to match DINO’s default, are chosen as query initializers. These embeddings, already fused with language cues, are then paired with learnable “content” queries and positional queries derived from their spatial coordinates. This mechanism ensures that, for a given phrase, the detector’s attention is immediately focused on the most relevant regions, accelerating convergence and boosting zero-shot accuracy.

In summary, Language-Guided Query Selection:

- Computes per-token similarity between every image feature and every text token.

- Takes the max similarity per image token, ranks them, and picks the top-Nq (e.g., 900).

- Uses those selected embeddings (plus learned “content” queries) to initialize the decoder.

This steers the detector’s attention to regions most relevant to your text.
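The selection rule described above can be sketched in a few lines of plain Python. This is a toy illustration with made-up 2-dimensional features; the function name `select_queries` is hypothetical, and the real model operates on batched tensors with far more tokens.

```python
def select_queries(image_feats, text_feats, num_queries):
    """Return indices of the image tokens whose best text match scores highest."""
    scores = []
    for img in image_feats:
        # Dot-product similarity of this image token against every text token
        sims = [sum(a * b for a, b in zip(img, txt)) for txt in text_feats]
        # Keep only the best match: how well the token fits any part of the prompt
        scores.append(max(sims))
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:num_queries]

# Four image tokens, two text tokens, keep the top 2 (the article uses 900)
image_feats = [[0.1, 0.0], [0.9, 0.2], [0.3, 0.8], [0.0, 0.05]]
text_feats = [[1.0, 0.0], [0.0, 1.0]]
print(select_queries(image_feats, text_feats, num_queries=2))  # prints [1, 2]
```

Tokens 1 and 2 win because each aligns strongly with one of the two text tokens, even though neither matches both.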

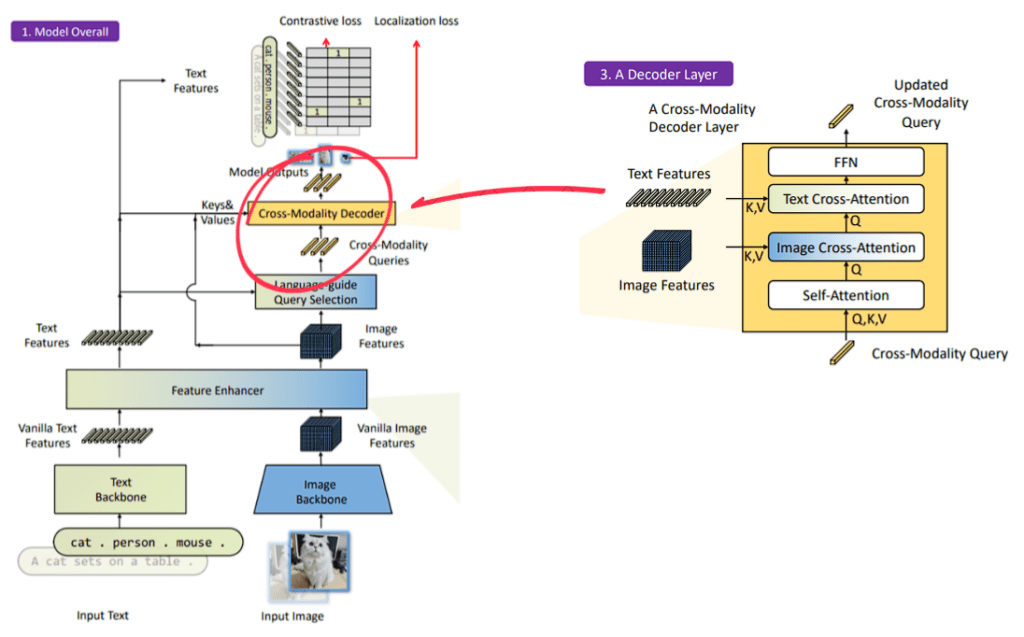

Cross-Modality Decoder: Iterative Refinement

Once the dynamic queries are set, the Cross-Modality Decoder drives them through a stack of transformer layers. Each layer comprises four sequential sublayers: self-attention among queries fosters inter-object reasoning (for instance, implicitly handling non-maximum suppression), followed by image cross-attention where queries read from the enhanced image tokens, then text cross-attention where queries re-align with the phrase embeddings, and finally a feed-forward network.

By interleaving vision and language attention, each query gradually refines both its spatial box prediction and its semantic match to the prompt. At the final layer, a box head regresses coordinates and a grounding head scores each query against every sub-sentence embedding, yielding a set of (box, phrase) pairs.

Summarizing the cross-modality approach, each transformer decoder layer applies, in sequence:

- Self-Attention among queries

- Image Cross-Attention (queries → enhanced image features)

- Text Cross-Attention (queries → enhanced text features)

- Feed-Forward Network

By interleaving vision and language attention, queries iteratively refine both where and what they detect.

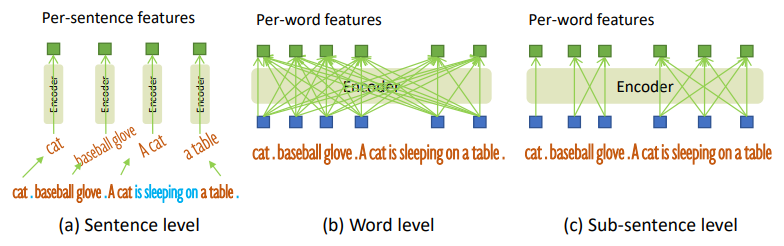

Sub-Sentence Grounding: Precision Without Noise

A pivotal design choice is the representation of textual inputs. Encoding an entire caption or concatenation of class names into one sentence leads to “bleed” between concepts. Conversely, encoding each word separately fractures multi-word entities. Grounding DINO’s sub-sentence approach segments the prompt into coherent phrases, be they single category names (“mask_weared_incorrect”) or full referring expressions, and encodes each independently.

To prevent cross-phrase attention, block-diagonal masks are applied so tokens only attend within their phrase group. This ensures that a region’s contrastive loss only pulls it toward its own phrase embedding, resulting in sharp, unambiguous grounding that scales to complex, attribute-rich queries.

In summary, to avoid “cross-talk” among class names or phrases, Grounding DINO:

- Splits the input prompt into sub-sentences (one embedding per class or referring expression).

- Applies block-diagonal attention masks so tokens attend only within their phrase.

- Contrastively trains each region solely against its own phrase embedding.

This preserves multi-word semantics and yields unambiguous grounding.
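As a rough illustration of the masking idea, the sketch below builds a block-diagonal boolean mask from the number of tokens in each phrase. The token counts are hypothetical, and the handling of special tokens and separators in the real tokenizer is not modeled here.

```python
def block_diagonal_mask(phrase_lengths):
    """Build a square mask where mask[i][j] is True only if tokens i and j
    belong to the same phrase."""
    total = sum(phrase_lengths)
    mask = [[False] * total for _ in range(total)]
    start = 0
    for n in phrase_lengths:
        for i in range(start, start + n):
            for j in range(start, start + n):
                mask[i][j] = True
        start += n
    return mask

# Three phrases tokenized into 2, 1, and 3 tokens (hypothetical counts)
mask = block_diagonal_mask([2, 1, 3])
for row in mask:
    print("".join("1" if allowed else "0" for allowed in row))
```

Each printed row shows which tokens a given token may attend to; the ones form square blocks along the diagonal, one block per phrase.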

Implementation Details of Grounding DINO

Backbones & Hyperparameters

Grounding DINO ships in two main variants. The “Tiny” model uses Swin-Tiny as the vision backbone and processes text via BERT-base with a 256-token limit; the “Large” model upgrades to Swin-Large. Both employ six enhancer layers and six decoder layers, maintain 900 queries, and leverage deformable attention for efficiency.

| Variant | Image Backbone | Text Encoder | Queries | Decoder Layers | Enhancer Layers |
|---|---|---|---|---|---|
| Grounding DINO-T | Swin-Tiny | BERT-base (256 tok) | 900 | 6 | 6 |
| Grounding DINO (Large) | Swin-Large | BERT-base | 900 | 6 | 6 |

Loss Functions & Matching

The training objective combines standard DETR localization losses (L1 and GIoU, weighted 5.0 and 2.0) with a focal contrastive loss on query–text dot products. Hungarian matching pairs predictions with ground truths based on combined localization and grounding costs, and auxiliary heads at every decoder layer (and on the encoder output) stabilize gradients and accelerate convergence.

- Localization: L1 + GIoU (weights 5.0 & 2.0)

- Contrastive Grounding: Focal loss on query–text dot products

- Matching: Hungarian assignment on combined localization + grounding cost

- Auxiliary Heads: Losses applied after every decoder layer and encoder output
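To show how the localization terms combine, here is a small sketch that computes the weighted L1 + GIoU loss for a single predicted box and its matched ground truth. It assumes boxes are given as normalized (cx, cy, w, h) with positive area, which is the usual DETR convention; the function names are illustrative and the focal grounding loss and Hungarian matching are not included.

```python
def cxcywh_to_xyxy(box):
    """Convert (center x, center y, width, height) to corner coordinates."""
    cx, cy, w, h = box
    return (cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2)

def giou(a, b):
    """Generalized IoU of two (x1, y1, x2, y2) boxes with positive area."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    iou = inter / union
    # Smallest axis-aligned box enclosing both inputs
    hull = (max(ax2, bx2) - min(ax1, bx1)) * (max(ay2, by2) - min(ay1, by1))
    return iou - (hull - union) / hull

def localization_loss(pred, target, l1_weight=5.0, giou_weight=2.0):
    """Weighted sum of the L1 distance and (1 - GIoU) for one matched pair."""
    l1 = sum(abs(p - t) for p, t in zip(pred, target))
    g = giou(cxcywh_to_xyxy(pred), cxcywh_to_xyxy(target))
    return l1_weight * l1 + giou_weight * (1.0 - g)

# A perfect prediction costs nothing; a disjoint one is penalized by both terms
print(localization_loss((0.5, 0.5, 0.2, 0.2), (0.5, 0.5, 0.2, 0.2)))
print(localization_loss((0.25, 0.25, 0.5, 0.5), (0.75, 0.75, 0.5, 0.5)))
```

The GIoU term keeps providing a useful signal even when the boxes do not overlap, which plain IoU cannot do.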

Training & Efficiency

Training on detection-style and image-caption corpora across hundreds of millions of examples imbues the model with robust, open-world reasoning while preserving closed-set accuracy.

- Training GPUs: Tiny on 16×V100 (batch 32); Large on 64×A100 (batch 64)

- Inference Overhead: Only modest latency increase vs. vanilla DINO thanks to deformable attention and lean fusion modules

Experimental Validation and Ablation Insights

Grounding DINO’s performance is compelling on both familiar and unseen categories. When fine-tuned on COCO, it achieves over 50 AP, rivaling or surpassing specialized closed-set detectors. Yet in zero-shot mode, never training on COCO data, it attains 52.5 AP on COCO minival, 28.7 mAP on LVIS long-tail, and 26.1 AP on the open-domain ODinW benchmark, far eclipsing prior methods. It even excels at referring-expression comprehension, scoring above 70 percent accuracy on RefCOCO+.

| Setting | Metric | Grounding DINO | Prior SOTA |
|---|---|---|---|
| COCO Fine-Tuned | AP | 50.2 | 49.7 |
| COCO Zero-Shot (minival) | AP | 52.5 | 35–45 |
| LVIS Zero-Shot | AP (mAP) | 28.7 | 18–24 |
| ODinW Zero-Shot | AP (mean) | 26.1 | 15–20 |
| RefCOCO+ Referring Detection | Acc. | 70.4% | 60–65% |

Ablation studies reveal that removing any core component erodes open-set prowess. Stripping the enhancer’s deep fusion slashes zero-shot AP by over 12 points. Reverting to static queries or omitting text-cross attention costs 4–6 points. Encoding whole-sentence prompts instead of sub-sentences lops off a few additional points. Notably, these modifications have minimal effect on closed-set fine-tuning, underscoring that Grounding DINO’s innovations principally empower zero-shot and open-world generalization.

| Component | COCO ZS AP ↓ | LVIS AP ↓ | COCO FT AP ↓ |
|---|---|---|---|
| No Neck Fusion | –12.8 | –10.2 | –1.0 |
| Static Queries (no text) | –6.3 | –3.0 | –0.2 |
| No Text Cross-Attention | –4.5 | –1.8 | –0.1 |
| Whole-Sentence Prompts | –3.2 | –0.5 | ~0 |

Each ingredient, multi-phase fusion, language-guided queries, text attention, and sub-sentence grounding, contributes significantly to open-set performance while having minimal effect on closed-set fine-tuning.

Fine-Tuning Grounding DINO on the Face Mask Detection Dataset

Dataset Overview and Annotation Conversion

Face Mask Detection Dataset Description

The Face Mask Detection dataset is a curated collection designed to help researchers and practitioners build models that can distinguish between three critical real-world scenarios: people wearing masks correctly, people not wearing masks, and people wearing masks improperly. As the world grapples with respiratory illnesses and public health concerns, automated mask detection remains an important tool for surveillance, access control, and epidemiological research.

The annotations directory holds one XML file per image, detailing the image dimensions and, for each bounding box, its coordinates and class label (with_mask, without_mask, or mask_weared_incorrect).

Because Grounding DINO’s training pipeline expects a single CSV file rather than individual XML files, we need to perform two main preprocessing steps:

- Randomly split the images into a training set of 600 images and a test set containing the remaining 253 images.

- Convert the corresponding XML annotations for each set into a unified CSV (one row per bounding box, with label and absolute coordinates).

Original XML Annotation Format

Each XML annotation file (e.g., maksssskksss97.xml) follows PASCAL VOC conventions. A typical file looks like this:

<annotation>
    <folder>images</folder>
    <filename>maksssskksss97.png</filename>
    <size>
        <width>301</width>
        <height>400</height>
        <depth>3</depth>
    </size>
    <object>
        <name>with_mask</name>
        <pose>Unspecified</pose>
        <truncated>0</truncated>
        <occluded>0</occluded>
        <difficult>0</difficult>
        <bndbox>
            <xmin>187</xmin>
            <ymin>83</ymin>
            <xmax>212</xmax>
            <ymax>109</ymax>
        </bndbox>
    </object>
    <!-- If the image contained more faces, each would appear here as another <object> block -->
</annotation>

Desired CSV Annotation Format

For Grounding DINO’s fine-tuning pipeline, we need two separate CSVs, one for the training set and one for the testing set, each with the following column order:

label_name,bbox_x1,bbox_y1,bbox_x2,bbox_y2,image_name,image_width,image_height

Converting XMLs to CSV (Train Set)

import csv
import os
import random
import shutil
import xml.etree.ElementTree as ET

# === CONFIG ===
ORIG_IMG_DIR = 'images'
ORIG_ANN_DIR = 'annotations'
TRAIN_DIR = 'train'
TEST_DIR = 'test'
NUM_TRAIN_IMAGES = 600
CSV_OUTPUT_FILENAME = 'annotations.csv'  # will be placed under TRAIN_DIR

# === UTILITIES ===
def xmls_to_csv(annotations_dir: str, output_csv: str):
    """Parse all Pascal VOC-style XMLs in `annotations_dir` into a single CSV."""
    with open(output_csv, 'w', newline='') as csvfile:
        writer = csv.writer(csvfile)
        writer.writerow([
            'label_name',
            'bbox_x1', 'bbox_y1', 'bbox_x2', 'bbox_y2',
            'image_name',
            'image_width', 'image_height'
        ])
        for fname in os.listdir(annotations_dir):
            if not fname.lower().endswith('.xml'):
                continue
            xml_path = os.path.join(annotations_dir, fname)
            tree = ET.parse(xml_path)
            root = tree.getroot()

            image_name = root.findtext('filename')
            size = root.find('size')
            width = size.findtext('width')
            height = size.findtext('height')

            # One CSV row per <object> (i.e., per bounding box)
            for obj in root.findall('object'):
                label = obj.findtext('name')
                b = obj.find('bndbox')
                xmin = b.findtext('xmin')
                ymin = b.findtext('ymin')
                xmax = b.findtext('xmax')
                ymax = b.findtext('ymax')
                writer.writerow([
                    label,
                    xmin, ymin, xmax, ymax,
                    image_name,
                    width, height
                ])
    print(f"[+] Wrote CSV annotations to {output_csv}")

def mkdirs_safe(path):
    os.makedirs(path, exist_ok=True)

# === MAIN ===
def main():
    # 1) Create train/ and test/ sub-dirs
    for split in (TRAIN_DIR, TEST_DIR):
        mkdirs_safe(os.path.join(split, 'images'))
        mkdirs_safe(os.path.join(split, 'labels'))

    # 2) Collect all image filenames (.png, .jpg, or .jpeg)
    all_images = [f for f in os.listdir(ORIG_IMG_DIR)
                  if f.lower().endswith(('.png', '.jpg', '.jpeg'))]
    random.shuffle(all_images)

    # 3) Split into train/test
    train_imgs = set(all_images[:NUM_TRAIN_IMAGES])
    test_imgs = set(all_images[NUM_TRAIN_IMAGES:])

    # 4) Copy files
    for img_set, dest_split in [(train_imgs, TRAIN_DIR), (test_imgs, TEST_DIR)]:
        for img_name in img_set:
            # copy image
            src_img = os.path.join(ORIG_IMG_DIR, img_name)
            dst_img = os.path.join(dest_split, 'images', img_name)
            shutil.copy2(src_img, dst_img)

            # copy corresponding XML
            xml_name = os.path.splitext(img_name)[0] + '.xml'
            src_xml = os.path.join(ORIG_ANN_DIR, xml_name)
            dst_xml = os.path.join(dest_split, 'labels', xml_name)
            if os.path.exists(src_xml):
                shutil.copy2(src_xml, dst_xml)
            else:
                print(f"[!] Warning: annotation not found for {img_name}")

    print(f"[+] Copied {len(train_imgs)} images → {TRAIN_DIR}/images")
    print(f"[+] Copied {len(test_imgs)} images → {TEST_DIR}/images")

    # 5) Generate CSV from train/labels
    train_labels_dir = os.path.join(TRAIN_DIR, 'labels')
    csv_outpath = os.path.join(TRAIN_DIR, CSV_OUTPUT_FILENAME)
    xmls_to_csv(train_labels_dir, csv_outpath)

if __name__ == '__main__':
    main()

CSV generation runs only over train/labels/, producing train/annotations.csv with the exact schema required by Grounding DINO.
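Before training, it can help to sanity-check the generated CSV. The sketch below is one possible check, not part of the original pipeline: the helper name `check_annotations` is hypothetical, and the bounds test assumes every box lies inside its image with positive width and height, which a real dataset may occasionally violate. The sample row is taken from the XML example shown earlier.

```python
import csv
import io
from collections import Counter

EXPECTED_COLUMNS = [
    "label_name", "bbox_x1", "bbox_y1", "bbox_x2", "bbox_y2",
    "image_name", "image_width", "image_height",
]

def check_annotations(fileobj):
    """Validate the header and every box; return the number of boxes per label."""
    reader = csv.DictReader(fileobj)
    if reader.fieldnames != EXPECTED_COLUMNS:
        raise ValueError(f"unexpected header: {reader.fieldnames}")
    counts = Counter()
    # Data rows start on line 2, right after the header
    for line_no, row in enumerate(reader, start=2):
        x1, y1, x2, y2 = (int(row[k]) for k in
                          ("bbox_x1", "bbox_y1", "bbox_x2", "bbox_y2"))
        w, h = int(row["image_width"]), int(row["image_height"])
        if not (0 <= x1 < x2 <= w and 0 <= y1 < y2 <= h):
            raise ValueError(f"line {line_no}: box is degenerate or outside the image")
        counts[row["label_name"]] += 1
    return counts

# In-memory sample; for a real run, pass open("train/annotations.csv", newline="")
sample = (
    "label_name,bbox_x1,bbox_y1,bbox_x2,bbox_y2,image_name,image_width,image_height\n"
    "with_mask,187,83,212,109,maksssskksss97.png,301,400\n"
)
print(dict(check_annotations(io.StringIO(sample))))  # prints {'with_mask': 1}
```

The per-label counts also give a quick view of class balance across with_mask, without_mask, and mask_weared_incorrect.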

Grounding DINO Installation Steps

- Clone the GroundingDINO repository from GitHub.

git clone https://github.com/IDEA-Research/GroundingDINO.git

- Change the current directory to the GroundingDINO folder.

cd GroundingDINO/

- Install the required dependencies in the current directory.

pip install -e .

- Download pre-trained model weights.

mkdir weights

cd weights

wget -q https://github.com/IDEA-Research/GroundingDINO/releases/download/v0.1.0-alpha/groundingdino_swint_ogc.pth

cd ..

Fine-Tuning Grounding DINO Codebase

Here is a walkthrough of the fine-tuning code.

Load Preconfigured Grounding DINO Model and Prepare Data Paths

import os
from groundingdino.util.inference import load_model

# Model
model = load_model("groundingdino/config/GroundingDINO_SwinT_OGC.py", "weights/groundingdino_swint_ogc.pth")

# Dataset paths
images_files = sorted(os.listdir("multimodal-data/images"))
ann_file = "multimodal-data/annotation/annotation.csv"

The above code loads a preconfigured Grounding DINO model (using a specified config file and pretrained weights) and sets up the paths to the image directory (sorted list of all files) and to the annotation CSV file for downstream data loading.

Draw a Labeled Bounding Box on an Image

import cv2

def draw_box_with_label(image, output_path, coordinates, label, color=(0, 0, 255), thickness=2, font_scale=0.5):
    # Draw the rectangle; coordinates are integer pixels (x1, y1, x2, y2)
    cv2.rectangle(image, (coordinates[0], coordinates[1]), (coordinates[2], coordinates[3]), color, thickness)

    # Define a position for the label (just above the top-left corner of the rectangle)
    label_position = (coordinates[0], coordinates[1] - 10)

    # Draw the label
    cv2.putText(image, label, label_position, cv2.FONT_HERSHEY_SIMPLEX, font_scale, color, thickness, cv2.LINE_AA)

    # Save the modified image
    cv2.imwrite(output_path, image)

This function uses OpenCV to draw a colored rectangle and accompanying text label at the specified coordinates on a NumPy image, then saves the result to the given output path.

Read and Organize Dataset from CSV

import csv
import os
from collections import defaultdict

def read_dataset(ann_file):
    # Nested dict: image path -> {'boxes': [...], 'captions': [...]}
    ann_Dict = defaultdict(lambda: defaultdict(list))
    with open(ann_file) as file_obj:
        ann_reader = csv.DictReader(file_obj)
        # Iterate over each row in the CSV file
        for row in ann_reader:
            img_n = os.path.join("multimodal-data/images", row['image_name'])
            x1 = int(row['bbox_x1'])
            y1 = int(row['bbox_y1'])
            x2 = int(row['bbox_x2'])
            y2 = int(row['bbox_y2'])
            label = row['label_name']
            ann_Dict[img_n]['boxes'].append([x1, y1, x2, y2])
            ann_Dict[img_n]['captions'].append(label)
    return ann_Dict

This function parses the annotation CSV row by row, builds a nested dictionary keyed by image path, and collects each image’s bounding boxes (read directly as absolute x1, y1, x2, y2 corner coordinates) and the associated label captions.

Training Loop for Fine-Tuning

import torch
import torch.optim as optim
from groundingdino.util.inference import load_image

# NOTE: `train_image` is not defined in this article. It is assumed to be provided
# by the fine-tuning codebase and to return a scalar loss tensor for one image.

def train(model, ann_file, epochs=1, save_path='weights/model_weights', save_epoch=50):
    # Read the dataset
    ann_Dict = read_dataset(ann_file)

    # Add optimizer
    optimizer = optim.Adam(model.parameters(), lr=1e-5)

    # Ensure the model is in training mode
    model.train()

    for epoch in range(epochs):
        total_loss = 0  # Track the total loss for this epoch
        for idx, (IMAGE_PATH, vals) in enumerate(ann_Dict.items()):
            image_source, image = load_image(IMAGE_PATH)
            bxs = vals['boxes']
            captions = vals['captions']

            # Zero the gradients
            optimizer.zero_grad()

            # Compute the loss for this image and its annotations
            loss = train_image(
                model=model,
                image_source=image_source,
                image=image,
                caption_objects=captions,
                box_target=bxs,
            )

            # Backpropagate and optimize
            loss.backward()
            optimizer.step()

            total_loss += loss.item()  # Accumulate the loss
            print(f"Processed image {idx+1}/{len(ann_Dict)}, Loss: {loss.item()}")

        # Print the average loss for the epoch
        print(f"Epoch {epoch+1}/{epochs}, Average Loss: {total_loss / len(ann_Dict)}")

        # Save a checkpoint every `save_epoch` epochs. Epochs are numbered from 1,
        # so a 1000-epoch run ends with model_weights1000.pth.
        if (epoch + 1) % save_epoch == 0:
            torch.save(model.state_dict(), f"{save_path}{epoch + 1}.pth")
            print(f"Model weights saved to {save_path}{epoch + 1}.pth")

if __name__ == "__main__":
    train(model=model, ann_file=ann_file, epochs=1000, save_path='weights/model_weights')

The above code snippet defines a train function that loads annotations, initializes an Adam optimizer, and iterates over each epoch and image. For every image, it zeroes gradients, calls train_image to compute the loss given image tensors, bounding-box targets, and captions, backpropagates, steps the optimizer, accumulates and logs the loss, and periodically saves the model weights.

Testing and Inference with the Fine-Tuned Model

Apply Phrase-Wise Non-Maximum Suppression (NMS)

import torch
from torchvision import ops
from torchvision.ops import box_convert

def apply_nms_per_phrase(image_source, boxes, logits, phrases, threshold=0.3):
    # Nothing to filter if the model returned no detections
    if len(phrases) == 0:
        return boxes, logits, phrases

    # Scale normalized (cx, cy, w, h) boxes to pixels, then convert to (x1, y1, x2, y2)
    h, w, _ = image_source.shape
    scaled_boxes = boxes * torch.Tensor([w, h, w, h])
    scaled_boxes = box_convert(boxes=scaled_boxes, in_fmt="cxcywh", out_fmt="xyxy")

    nms_boxes_list, nms_logits_list, nms_phrases_list = [], [], []
    print(f"The unique detected phrases are {set(phrases)}")

    for unique_phrase in set(phrases):
        # Run NMS only among boxes that share the same phrase
        indices = [i for i, phrase in enumerate(phrases) if phrase == unique_phrase]
        phrase_scaled_boxes = scaled_boxes[indices]
        phrase_boxes = boxes[indices]
        phrase_logits = logits[indices]

        keep_indices = ops.nms(phrase_scaled_boxes, phrase_logits, threshold)

        # Return the surviving boxes in their original normalized format
        nms_boxes_list.extend(phrase_boxes[keep_indices])
        nms_logits_list.extend(phrase_logits[keep_indices])
        nms_phrases_list.extend([unique_phrase] * len(keep_indices))

    return torch.stack(nms_boxes_list), torch.stack(nms_logits_list), nms_phrases_list

This function groups detected boxes and scores by each unique phrase, rescales normalized box coordinates to pixel values, performs NMS separately for each phrase with a specified IoU threshold (default 0.3), and returns the filtered boxes, corresponding logits, and their associated phrases.
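For readers who want to see what the NMS step does internally, here is a dependency-free sketch of greedy NMS on pixel-space (x1, y1, x2, y2) boxes. It mirrors the usual behavior of suppressing any box whose IoU with an already kept, higher-scoring box exceeds the threshold; the function names are illustrative, and this is not the torchvision implementation itself.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def greedy_nms(boxes, scores, iou_threshold=0.3):
    """Return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep the box only if it does not overlap too much with any kept box
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep

boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(greedy_nms(boxes, scores))  # prints [0, 2]
```

The second box overlaps the first with an IoU of about 0.68, so it is suppressed, while the distant third box survives. Running this per phrase, as the function above does, prevents a with_mask box from suppressing an overlapping without_mask box.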

import cv2
from groundingdino.util.inference import load_model, load_image, predict, annotate

def process_image(
    model_config="groundingdino/config/GroundingDINO_SwinT_OGC.py",
    model_weights="weights/groundingdino_swint_ogc.pth",
    image_path="test_pepper.jpg",
    text_prompt="peduncle.fruit.",
    box_threshold=0.8,
    text_threshold=0.40
):
    model = load_model(model_config, model_weights)
    #model.load_state_dict(torch.load(state_dict_path))

    image_source, image = load_image(image_path)

    boxes, logits, phrases = predict(
        model=model,
        image=image,
        caption=text_prompt,
        box_threshold=box_threshold,
        text_threshold=text_threshold
    )
    print(f"Original boxes size {boxes.shape}")

    # Remove duplicate detections separately for each phrase
    boxes, logits, phrases = apply_nms_per_phrase(image_source, boxes, logits, phrases)
    print(f"NMS boxes size {boxes.shape}")

    annotated_frame = annotate(image_source=image_source, boxes=boxes, logits=logits, phrases=phrases)
    cv2.imwrite("result.jpg", annotated_frame)

if __name__ == "__main__":
    #model_weights="weights/groundingdino_swint_ogc.pth"
    model_weights = "weights/model_weights1000.pth"
    process_image(model_weights=model_weights)

This script loads a fine-tuned Grounding DINO model and an input image, runs predict with a specified text prompt and score thresholds, applies phrase-wise NMS, annotates the image with the final bounding boxes and labels, and writes the resulting frame to result.jpg.
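The default image and prompt in the listing above are placeholders, so for the face-mask task you would pass your own. As a small sketch, the helper below builds a period-separated prompt from the three dataset labels; the name `build_prompt` is hypothetical, and the " . " separator follows the convention commonly used for Grounding DINO category prompts.

```python
def build_prompt(class_names):
    """Join class names into a single period-separated Grounding DINO prompt."""
    cleaned = [name.strip().lower() for name in class_names if name.strip()]
    return " . ".join(cleaned) + " ."

mask_classes = ["with_mask", "without_mask", "mask_weared_incorrect"]
prompt = build_prompt(mask_classes)
print(prompt)  # prints: with_mask . without_mask . mask_weared_incorrect .
```

The resulting string can be passed as text_prompt to process_image, together with an image_path that points to one of the test images.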

Fine-Tuning Grounding DINO Results and Insights

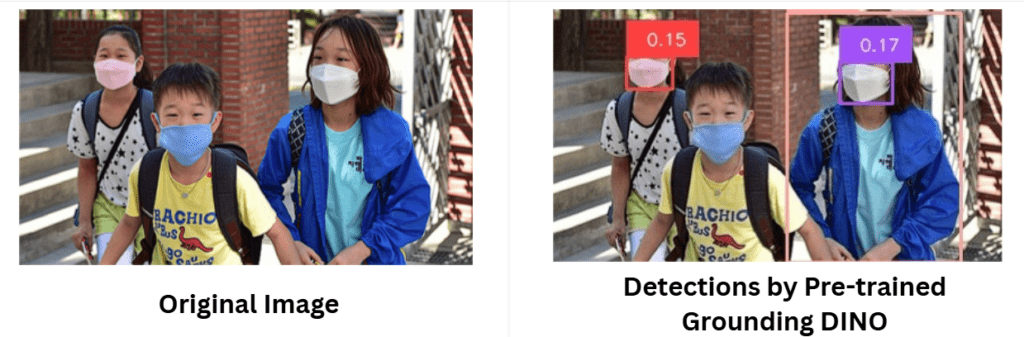

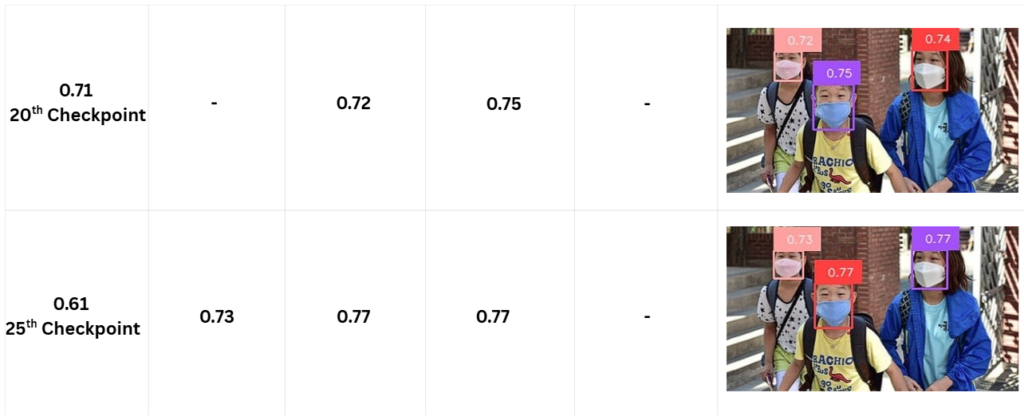

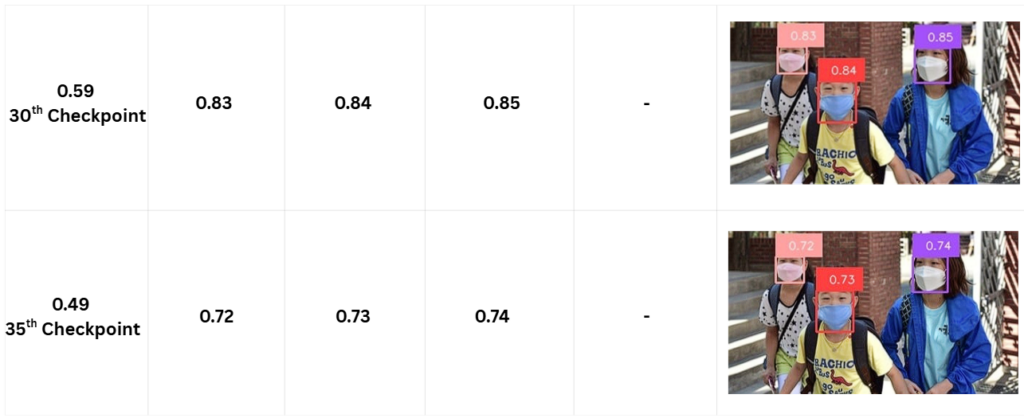

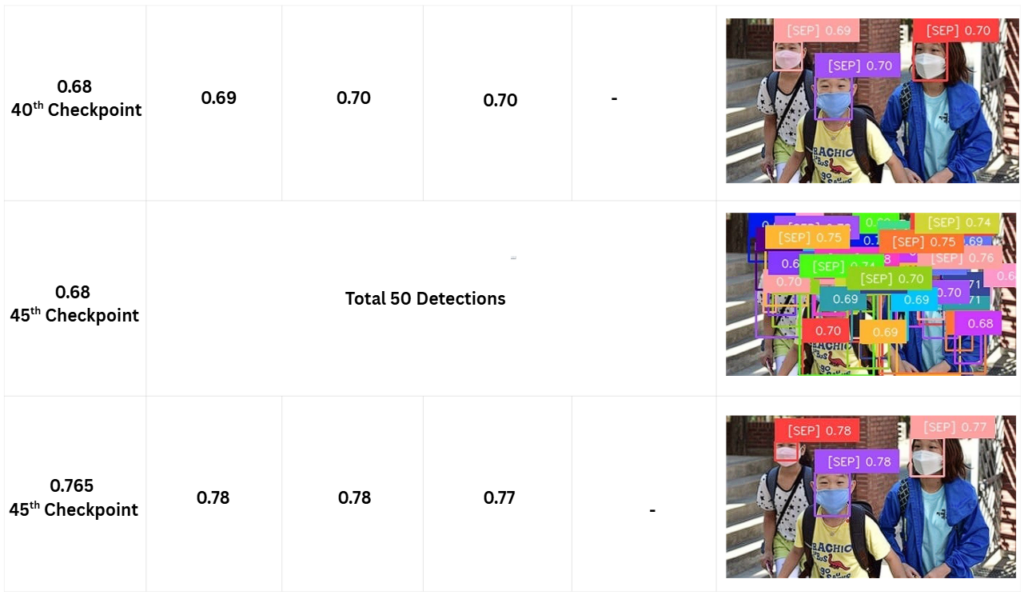

The original pretrained Grounding DINO model detects some masks correctly, but struggles with false positives and missed detections. The bounding boxes shown have low confidence scores (around 0.15-0.17), reflecting uncertainty in this domain-specific task since the model was never fine-tuned on face masks. This highlights the necessity of fine-tuning to adapt the model from generic open-set detection to precise mask detection.

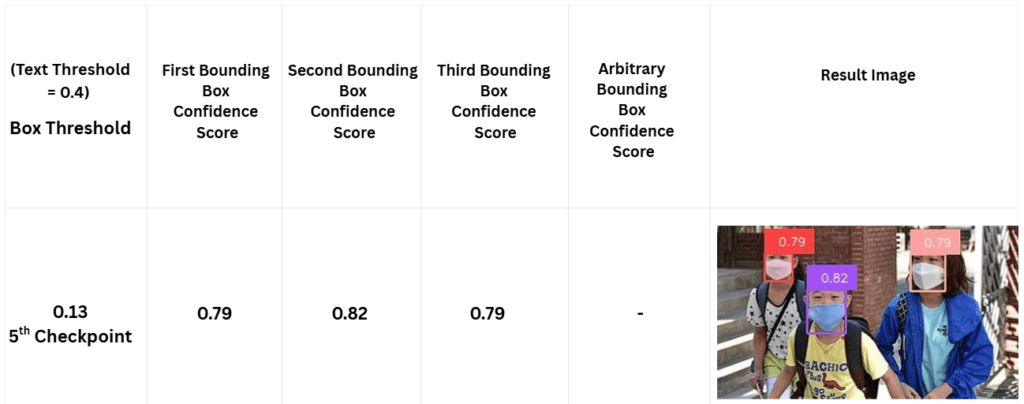

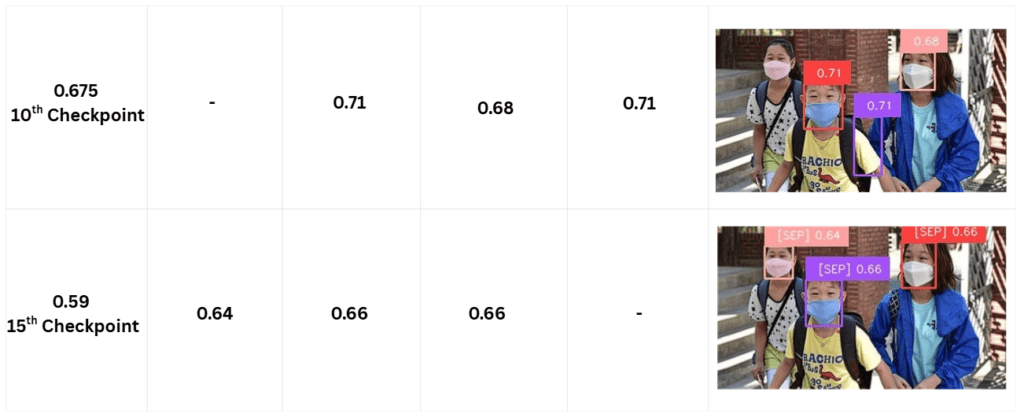

The model shows a steep improvement in detection confidence within the first 5 epochs of training. Confidence scores on key bounding boxes climb rapidly, often reaching values above 0.7, indicating that Grounding DINO quickly adapts to the face mask classes from its open-set pretraining. This fast convergence encourages practical fine-tuning with limited data.

However, as training progresses beyond 10 epochs, the model’s confidence temporarily dips, particularly between epochs 15 and 20, and again between epochs 30 and 35. These intermediate “minima points” likely correspond to moments when the model navigates complex trade-offs between localizing objects accurately and aligning them to the textual mask classes. Such fluctuations are common in fine-tuning and hint at nuanced internal recalibrations of feature representations and decision boundaries.

At around epoch 45, the model produces substantially more bounding boxes than the ground-truth count for the same images, often exceeding three detections where only three faces exist. This over-detection indicates potential overfitting or loss of precision in the bounding-box confidence calibration. The model may become overly sensitive, triggering multiple detections on the same object or hallucinating boxes on background regions. This phenomenon could arise from:

- The model memorizes training examples and fails to generalize suppression of duplicates.

- The training objective overemphasizes recall at the expense of precision.

- Insufficient or suboptimal non-maximum suppression (NMS) parameters failing to prune redundant boxes.

- A lack of regularization or early stopping allows the model to diverge from the optimal detection boundary.

Generalization vs. Overfitting Tradeoff

Across other testing samples, a similar trend emerges: Grounding DINO exhibits solid generalization and accurate predictions early on (within 5–7 epochs), but as training continues, false positives and mispredictions become more frequent. This suggests that while the architecture is inherently robust, careful tuning of training duration and regularization is critical to avoid degrading performance through overfitting.

Practical Implications for Fine-Tuning Grounding DINO

- For practical fine-tuning on modest datasets like face-mask detection, early stopping or checkpoint selection based on validation performance around epoch 5–10 may yield the best tradeoff between accuracy and precision.

- Regular evaluation during training is vital to detect when over-detection or false positives start to emerge.

- Adjusting post-processing parameters (NMS threshold, confidence thresholds) or incorporating stronger regularization (weight decay, dropout) may help alleviate late-stage false positive inflation.
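One way to act on these recommendations is to track a validation metric per epoch and keep the checkpoint from the best epoch, stopping once the metric has not improved for a while. The sketch below assumes you already compute some validation score after each epoch (higher is better); the function name, the patience value, and the scores are all hypothetical.

```python
def pick_checkpoint(val_scores, patience=5, min_delta=0.0):
    """Return (best_epoch, best_score), stopping after `patience` epochs
    without improvement. Epochs are numbered from 1."""
    best_epoch, best_score = None, float("-inf")
    for epoch, score in enumerate(val_scores, start=1):
        if score > best_score + min_delta:
            best_epoch, best_score = epoch, score
        elif epoch - best_epoch >= patience:
            break  # no improvement for `patience` epochs: stop training here
    return best_epoch, best_score

# Hypothetical validation scores that peak early and then degrade
scores = [0.40, 0.55, 0.62, 0.61, 0.60, 0.58, 0.57, 0.59, 0.70]
print(pick_checkpoint(scores, patience=3))  # prints (3, 0.62)
```

With a patience of 3, training stops at epoch 6 and the epoch-3 checkpoint is kept. The late rebound at epoch 9 is never reached, which is the usual trade-off when choosing a small patience.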

In summary, the fine-tuning process reveals Grounding DINO’s strengths in rapid adaptation and highlights the importance of vigilant monitoring and hyperparameter tuning to prevent overfitting and preserve detection quality over longer training regimes.

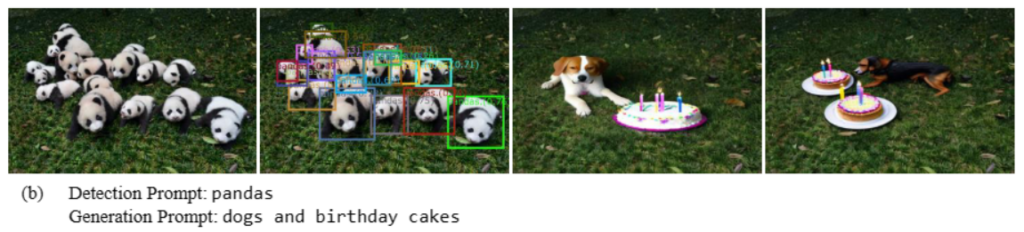

Guided Image Editing: Combining Grounding DINO’s Detection with Stable Diffusion’s Generation

One of the exciting practical applications of Grounding DINO is its ability to precisely localize arbitrary objects or regions within an image based on natural language prompts. When combined with powerful generative models like Stable Diffusion, this capability enables sophisticated image editing workflows that go beyond traditional segmentation or manual masking.

By first using Grounding DINO to identify and delineate regions corresponding to user-specified concepts or phrases, these localized masks can then guide Stable Diffusion’s generative inpainting or object replacement mechanisms. This fusion allows for context-aware edits, such as replacing an object, modifying specific attributes, or removing unwanted elements, while maintaining photorealistic coherence in the final image.

For practitioners and researchers interested in experimenting with this pipeline, the official Grounding DINO GitHub repository includes source code and notebooks demonstrating how to integrate it with Stable Diffusion for interactive image editing (see “Grounding DINO + Stable Diffusion Integration”).

This marriage of detection and generation opens a rich frontier in vision-language applications, unlocking new creative and practical possibilities.

Conclusion

Grounding DINO’s fine-tuning journey underscores its unique position as a fast-learning, flexible open-set detector that excels at grounding novel objects with minimal labeled data. The observed learning dynamics, fast initial gains, intermediate minima, and eventual over-detection, emphasize the importance of carefully managing training duration and validation. With strategic early stopping and post-processing calibration, Grounding DINO can reliably detect domain-specific categories such as face masks with high precision and confidence. This adaptability confirms its value as a robust foundation for deploying open-world object detectors across diverse real-world scenarios.
