- Introduction

- CUDA programming model

2.1 What is CUDA?

2.2 Introduction to some important CUDA concepts - Implementing a dense layer in CUDA

- Summary

1. Introduction

A few months ago, we covered the launch of NVIDIA’s latest Hopper H100 GPU for data centres. The Hopper architecture is packed with features to accelerate various machine learning algorithms. It continues a now-established trend of NVIDIA adding more AI-specific functionality to their GPUs. However, we noticed that most deep learning practitioners and engineers do not understand the specifics of each architecture and what benefits they bring to the table. Thus, we decided to write a two-part series of blog posts to fill in the gap. What you learn in this series of posts will help you to:

- Understand how a GPU accelerates AI workloads.

- Understand most CUDA concepts and implement dense layers in CUDA.

- Understand the features of many generations of NVIDIA GPUs.

- Choose the right GPU for your training or inference workload.

- Learn how to profile your code and maximise GPU utilisation.

We will begin by introducing the CUDA programming model and go through the most important concepts of CUDA in detail. This will help you to understand how GPUs work. Then, we will use this understanding to implement matrix multiplication in C++ with CUDA. Matrix multiplication forms the bedrock of most deep learning computations and most commonly used layers such as dense, convolutional and attention layers can be represented as matrix multiplies.

Note: Although we will only cover CUDA in this post, other GPU chip makers like AMD and Intel also have similar software stack (though not as mature as CUDA) and a lot of the concepts discussed here will carry over to ROCm from AMD or One API from Intel.

2. CUDA programming model

2.1 What is CUDA?

You have no doubt heard about CUDA, and know that it has something to do with NVIDIA GPUs. You may not know what CUDA exactly is. For example,

- Is CUDA a library that talks to your GPU? If so, is it a C++ or python library?

- Is it a compiler for the GPU?

- Is it a driver for the GPU to let the operating system talk to the GPU? If so, do gamers need CUDA to run games (the original use case for GPUs)

Back in the early 2000s, much before the widespread use of GPUs for machine learning, CPUs used to be the most important hardware for computing. GPUs were primarily developed for graphics and were very difficult to use for scientific computing. Unsurprisingly, very few programmers could write efficient code for using GPUs for non-graphics related computing. NVIDIA realized that programmers needed to see GPUs as an essential part of computing and not just as fancy super specialized pieces of hardware (much like FPGAs are perceived to this day). Thus, they introduced a new way of thinking about programming, commonly called a programming model. In this new programming model, different computations could be performed on different devices most suited to that task. For example, since CPUs excel at sequential computations while GPUs, by design, excel at parallel computations, the programming model introduced ways for CPUs and GPUs to exchange data and synchronize their operations. This unified model simplified heterogenous programming and NVIDIA called it Compute Unified Device Architecture or CUDA. So, returning back to the question, what is CUDA? It is a unified programming model or architecture for heterogenous computing.

The CUDA programming model has a programming interface in C/C++ which allows programmers to write code for both CPU and GPU computations. This C/C++ interface is most commonly referred to when people say they are ‘programming in CUDA’. Bindings also exist for almost all other major languages like Python, Java, MATLAB and even Fortran. Deep learning frameworks such as TensorFlow or PyTorch use the C/C++ CUDA interface to implement operations like matrix multiplications, which forms the backbone of dense, convolutional, recurrent and attention layers to name a few. CUDA has been wildly successful in popularizing GPU programming and no other heterogenous computing model has the same reach and popularity among developers as CUDA.

Since CUDA abstracts away most of the inner workings of GPUs, you can learn to write a simple GPU program in a few minutes. However, CUDA also exposes relevant functionality for advanced programmers to truly extract all possible performance from the GPU. Thus, you as a programmer can continue improving your skills and your programs over months and years as you become more comfortable with CUDA computing.

2.2 Introduction to some important CUDA concepts

GPU programming is a vast topic and it is not possible to explain all CUDA concepts within one blog post. However, in true LearnOpenCV fashion, we will give you a flavor of some technical aspects in far greater detail than is expected from a deep learning practitioner while keeping the explanation easy and digestible. Specifically, we will explain how GPU hardware is organized from a CUDA programmer’s perspective. A GPU contains the following hardware blocks

- CUDA cores

At the heart of the GPU hardware is a hardware unit called a ‘CUDA core’ which executes a ‘thread’ in software terms. A CUDA core can execute instructions for multiplying, dividing or calculating special functions, for example, activation functions. Although there are many differences between them, it can help to think of a CUDA core as the GPU equivalent of a CPU core. Although a CUDA core is weaker than a CPU core, GPUs have thousands of them. For example, even the consumer grade RTX 3090 GPU has over 10,000 CUDA cores! However, there are limitations around the instructions CUDA cores can execute, which we explain next.

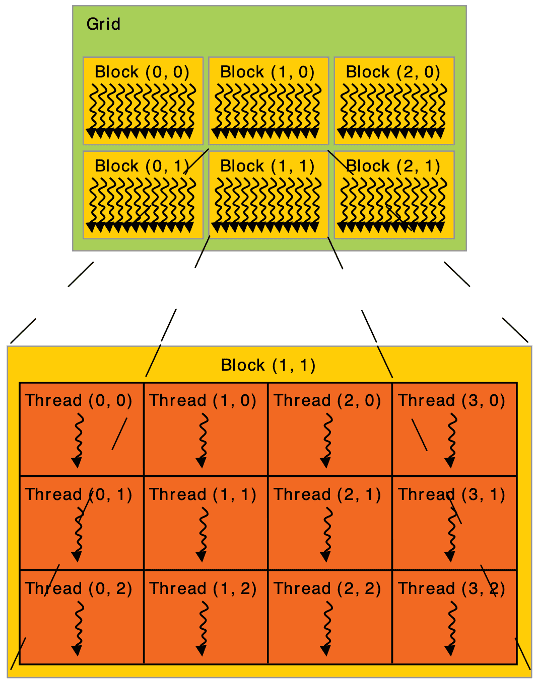

Figure 1. Hierarchy of CUDA threads, blocks and grids

(Source: NVIDIA CUDA C++ Programming Guide)

- CUDA blocks and grids

CUDA threads are grouped together into so called ‘blocks’. All threads within a block execute the same instructions and all of them run on the same SM (explained later). The programmer should divide the computation into blocks and threads. Blocks are further grouped into entities called CUDA grids. This will all make sense when we look at a CUDA C++ program at the end of this section.

- CUDA kernels

Suppose you want to run matrix multiplication. If you were just using a CPU, you could write matrix multiplication with for loops that go through all entries in the matrices and perform the required work. Thus, the same CPU thread will produce all the entries of the output matrix. However, on a GPU each CUDA thread will work to produce only one entry of the output matrix. We need a way to specify the computation that each CUDA thread should perform. This is done via special functions known as ‘CUDA kernels’. A kernel is written in such a way that different threads do the same computation but on different data. This computing paradigm is called Single Instruction Multiple Thread or SIMT. In CUDA terminology, you perform computations on a GPU by ‘launching’ CUDA kernels.

Figure 2. A schematic of Streaming Multiprocessor (SM) in the latest H100 GPU

(Source: NVIDIA H100 whitepaper)

- Streaming multiprocessors (SMs)

We have been building our way up the hardware hierarchy. We started with the smallest unit of computing hardware called the CUDA core. We saw how threads are grouped into blocks and blocks into grids. Further, we saw that the compute instructions for the grid are specified in C++ functions called CUDA kernels. Streaming Multiprocessors (SMs) are the second highest layer in the hardware hierarchy. An SM is a sophisticated processor within the GPU which contains hardware and software for orchestrating the execution of hundreds of CUDA threads. Modern GPUs contains several dozens of SMs. For instance, the RTX 3090 has 82 SMs. For the purposes of execution, the SM divides blocks of threads into ‘warps’ which are groups of size 32. SMs which are physically located close to each other are further grouped into entities called Graphics Processing Clusters (GPCs).

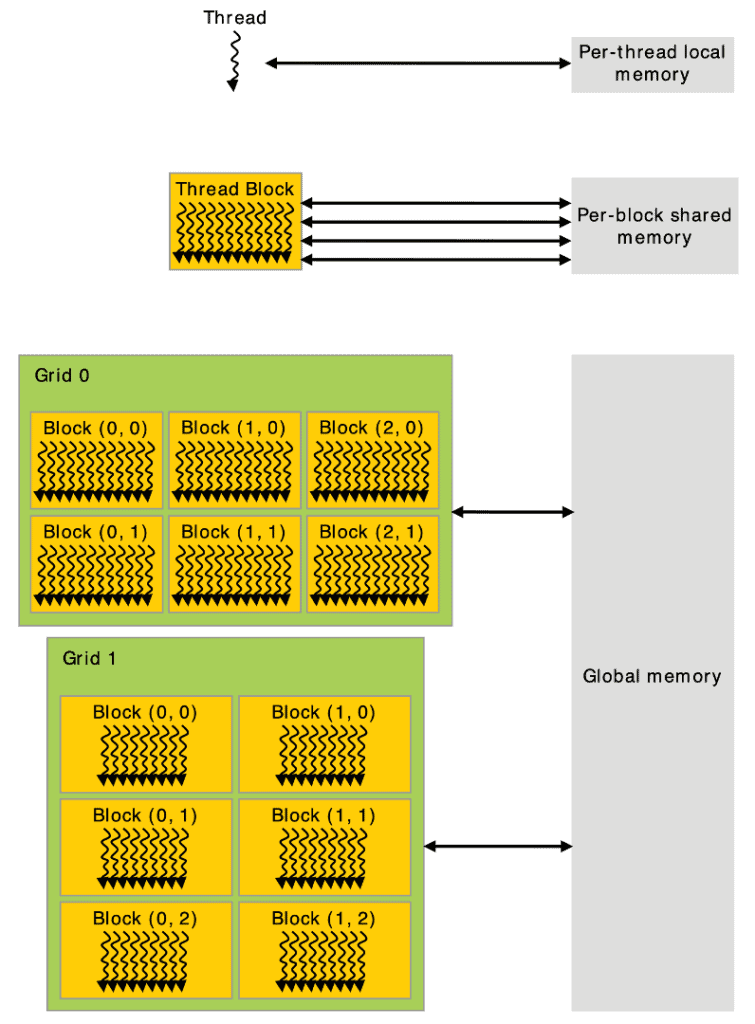

Figure 3. CUDA memory hierarchy. Global memory is visible to all threads and blocks but it is slow, Shared memory is visible to all threads within a block and is ~10 times faster than global memory. FInally, each thread has its own local memory which is even faster.

(Source: CUDA C++ Programming Guide)

- Global memory or VRAM

So far we have discussed the computational units of a GPU, but the memory units are equally and arguably more important. Most of the power consumption and latency of computation occurs due to memory transfers rather than computation. Therefore, understanding and optimising memory access latency can speed up your workloads by orders of magnitude. The highest level of memory hierarchy in a GPU is the global memory or VRAM as it is called in consumer GPUs. This is the specification quoted in marketing materials and what most users understand by GPU memory. We have all encountered CUDA Out of Memory errors in TensorFlow or PyTorch at some point. OOM errors occur when the model or cannot fit within the global memory of the GPU. This is why large memory allows us to train larger models with large batch sizes, but CUDA offers programmers control over a much richer memory hierarchy, explained next.

- Shared memory

Shared memory is roughly a GPU equivalent of the cache in a CPU. While writing software to run on CPUs, the programmer has no control over the cache. CUDA, on the other hand, provides a way for the programmer to control what data should be present in the cache and which threads should have access to which data. For the uninitiated, cache in any computing system is a small patch of memory which is located physically close to the actual transistors that are doing the computation.

In CUDA terms, shared memory is located physically close to the CUDA cores and fetching data from shared memory is at least 10 times faster than from global memory. This can be extremely helpful in many deep learning workflows. For instance, when applying the well known gaussian blur filter to an image, every pixel position of the input image is required by many CUDA threads. In this case, CUDA allows threads working on nearby pixels to access the data they need as quickly as possible using shared memory. Shared memory is visible to threads in the same block.

- Read-only cache/Texture memory

As the name suggests, this is a read-only cache located physically close to CUDA cores and is shared within a warp. Here, the term read only implies that the data stored in this memory does not change during the course of kernel execution. Common image processing workloads such as scaling 2D or 3D arrays (like image resizing) greatly benefit from such memory, which is why this is also called texture memory.

- Registers

So far all the memory types we have discussed are shared between threads of a warp, block or SM. In contrast, registers are small memory banks dedicated to each thread. We have discussed how threads in a block all execute the same instructions. However, the numerical values of the results of intermediate calculations are different for every thread. Registers allow threads to store local copies of variable which are visible to only that one thread.

Although a CUDA programmer does not need to know or care about registers to write functional CUDA code, it definitely helps to keep in mind that each SM has a fixed, limited number of registers. A CUDA kernel which declares a lot of unnecessary local variables can perform a lot slower than one which uses registers as efficiently as possible. Typically, register allocation is handled by the compiler (nvcc) and the only thing a programmer can control is the number and size of local variables.

- Unified memory (UM)

It can be quite laborious for a programmer to keep track of which variables belong to which processor. UM is a software functionality in CUDA which allows the programmer to forget the distinction between CPU and GPU memory and see all available memory in the system as one large unified whole. In programming terms this means that you declare a variable, allocate memory for it once and use it on both CPU and GPU.

Like all things CUDA, using UM is quite easy but the more you know how hardware and compilers work, the better performance you get extract out of your GPU. In the case of UM, the best performance is achieved by using initialisation kernels on the GPU whenever necessary, optimising page faults and asynchronously prefetching data using `cudaMemPrefetchAsync()`.

3. Implementing a dense layer in CUDA

We have discussed the software and hardware features of CUDA GPUs in great detail. Now it is time to get into some code. We will build upon the concepts learnt so far to implement matrix multiplication using CUDA. Matrix multiplication forms the basis for dense, convolutional, recurrent and attention layers, so this is a really fundamental workflow used all the time. Although most deep learning practitioners don’t program in CUDA directly, we would still advise you to at least read through the code that follows and get a rough understanding of how it works. The code is heavily commented to make this easy.

The process of writing matrix multiplication is the following:

- First, we declare 4 matrices A, B, C and D using unified memory feature in CUDA. This allows us to forget all distinction between CPU and GPU memory and seamlessly access the matrices wherever necessary.

- Then, we define a CUDA kernel called

matmul_kernel. As explained earlier, a CUDA kernel is a function which is executed by all threads. - We will initialise the matrices A and B by writing some values into them.

- Next, we declare how we will split up the computation across blocks and threads. This is done using two variables

blocks_per_gridandthreads_per_block. - After declaring the splits, we launch the kernel and use CUDA events feature to accurately measure the time it takes to perform the computation.

- Finally, we will do the same computation on the CPU, measure the time taken and print the time taken to the terminal.

#include <stdio.h>

#include <stdlib.h>

#include <iostream>

#include <math.h>

#include <time.h>

//#define VERIFY

//uncomment above to print difference between CPU and GPU calculations

__global__ void matmul_kernel(

const float* M1,

const float* M2,

float* M3,

const int m,

const int n,

const int p

)

{

/*

CUDA kernel for matrix multiplication M3 = M1 * M2

This function will be executed by every CUDA thread

The instructions are the same, but each thread will work

on a separate chunk of the data, as specified by the array indices.

Note that the kernel definition is preceded by the __global__

qualifier. Further, the kernel function returns nothing (void)

Thus, we must modify the output matrix M3 within this function.

The changes made to M3 (or M1 and M2) will all be visible outside

the kernel to CPU and GPU memory after the kernel has executed.

*/

//Get the x and y indices of output entry for this thread

int i = blockIdx.y * blockDim.y + threadIdx.y;

int j = blockIdx.x * blockDim.x + threadIdx.x;

/*

Wait! what are blockDim, blockIdx and threadIdx??

These are structs provided by CUDA, which tells the thread

how many blocks have been launched, what block number does

the current thread reside in and finally, what is the x and y

index of the current thread within the block.

These variables allow each thread to choose which sub-section

of the A, B and C matrices it should work on and we use them next.

*/

if ((i>=m)||(j>=p))

{

return;

//this just means that dont process anything outside the

//bounds of the output matrix size

}

float cout=0.0;

//this is a local variable we have defined within the thread

//so, this variable will reside in register memory as explained earlier

for (int k=0; k<n; k++)

{

cout += M1[i*n + k]*M2[k*p + j];

//loop through elements of one row of M1 and

//one column of M2, multiply corresponding elements

//and add them up. We are just doing standard matrix

//multiplication.

}

M3[i*p+j] = cout;

//here we modify M3

}

int main(int argc, char* argv[])

{

/*

In this demo, we will create matrices of size

A: M x N

B: N x P

C: M x P <-- for GPU

D: M x P <-- for CPU

We will initialize A, B, C, D and perform matrix multiplications:

C = A*B (on GPU)

D = A*B (on CPU)

*/

if (argc != 4)

{

printf("Matrix multiplication example for A[MxN] and B[NxP]\nUsage: cu_mm.out M N P\n");

exit(1);

}

int M=atoi(argv[1]); //2049;

int N=atoi(argv[2]); //257;

int P=atoi(argv[3]); //512;

float *A, *B, *C, *D;

/*

Let's use unified memory

cudaMallocManaged allows us to allocate memory

once and use it across both CPU and GPU.

*/

cudaMallocManaged(&A, M*N*sizeof(float));//input Mat1

cudaMallocManaged(&B, N*P*sizeof(float));//input Mat2

cudaMallocManaged(&C, M*P*sizeof(float));//output Mat for GPU

cudaMallocManaged(&D, M*P*sizeof(float));//output Mat for CPU

//we will do matmul in both CPU and GPU and compare the execution times

for (int i=0; i<M*N; i++)

{

A[i]=sin((float)i/100);

//init with sine of index, just as an example

}

for (int i=0; i<N*P; i++)

{

B[i]=cos((float)i/100);

//init with sine of index, just as an example

}

//C and D can be left uninitialized

float elapsed_time_gpu=0.0;

double elapsed_time_cpu=0.0;

cudaEvent_t gpu_start, gpu_stop;

struct timespec cpu_start, cpu_stop;

//BEGIN GPU MATMUL

dim3 blocks_per_grid(ceil(M/32),ceil(P/32));

dim3 threads_per_block(32, 32);

/*

We use CUDA events to accurately measure the time taken by matmul op

Refer to page 16 of CUDA C++ Best Practices Guide:

https://docs.nvidia.com/cuda/pdf/CUDA_C_Best_Practices_Guide.pdf

*/

cudaEventCreate(&gpu_start);

cudaEventCreate(&gpu_stop);

cudaEventRecord(gpu_start, 0);

matmul_kernel<<<blocks_per_grid, threads_per_block>>>(A, B, C, M, N, P);

cudaEventRecord(gpu_stop, 0);

cudaEventSynchronize(gpu_stop);

//END GPU MATMUL

timespec_get(&cpu_start, TIME_UTC);

//BEGIN CPU MATMUL

for (int i=0; i<M; i++)

{

for (int j=0; j< P; j++)

{

float cout=0.0;

for(int k=0; k<N; k++)

{

cout+=A[i*N+k]*B[k*P+j];

}

D[i*P+j]=cout;

}

}

//END CPU MATMUL

timespec_get(&cpu_stop, TIME_UTC);

//Measure elapsed times

cudaEventElapsedTime(&elapsed_time_gpu, gpu_start, gpu_stop);

elapsed_time_cpu = ((double)(cpu_stop.tv_sec - cpu_start.tv_sec)) * 1000000 + ((double)(cpu_stop.tv_nsec - cpu_start.tv_nsec)) / 1000;

//tv_nsec is in nanoseconds

/*

Define VERIFY above to print diffs for the

first 100 entries

you will get all values very close to zero

*/

#ifdef VERIFY

for (int i=0; i<100; i++)

{

float diff=C[i]-D[i];

printf("%f, ", diff);

}

printf("\n");

#endif

//convert microseconds to milliseconds

printf("Elapsed time (CPU)= %f milliseconds\n", elapsed_time_cpu/1000);

printf("Elapsed time (GPU)= %f milliseconds\n", elapsed_time_gpu);

//cudaEventElapsedTime reports time in milliseconds

cudaFree(A);

cudaFree(B);

cudaFree(C);

cudaFree(D);

}

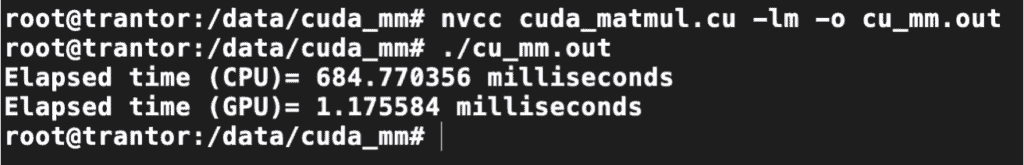

To keep things simple, we have defined everything in one file. You can compile the code using nvcc compiler on any system with CUDA installed.

nvcc cuda_matmul.cu -lm -o cu_mm.out

./cu_mm.out 2048 256 512

We tested this code on a computer equipped with an AMD Ryzen 5800X CPU and an RTX 3090 GPU, with 32 GB RAM running Ubuntu 20.04.

Figure 4. GPU is ~650x faster than a CPU.

The results were as follows:

- CPU computation time: 681.51 milliseconds

- GPU computation time: 1.047 milliseconds

Thus, the GPU was ~650 times faster than the CPU!! If the CPU code was to be written to use CPU parallel processing (the CPU has 16 cores and we assume perfect scaling), then the GPU would be ~40x faster than the CPU.

4. Summary

In this blog post, we have ventured into the technical depths of the hardware and software stack which forms the foundation of deep learning as we know it.

We started by understanding what exactly is CUDA and went through the most important CUDA concepts such as

- kernels,

- threads,

- blocks,

- SMs and

- various levels of the memory hierarchy.

Armed with this understanding, we implemented the forward pass of a dense layer purely in CUDA. We found that the with relatively little effort, a GPU can accelerate matrix multiplication by hundreds of times compared to a CPU.

Since the backward pass of a dense layer also requires a matrix multiply, the same basic idea can be used to implement backward pass and all other major types of layers.

We are just getting started with this journey. In the second blog post of this series, we will dive deep into hardware features for AI acceleration in recent NVIDIA GPUs. After this we return back to software and take a look at the cuDNN library. Finally, we will share some practical tips to profile your deep learning code and maximize GPU utilization.

100K+ Learners

Join Free OpenCV Bootcamp3 Hours of Learning