It gives me immense pleasure to announce the best project award for our online course “Computer Vision for Faces” (cv4faces). These awards are given out twice a year to the top three projects submitted by students enrolled in cv4faces. You can check out the winners of last year’s best project award here.

We launched cv4faces less than a year ago, and almost 700 students from more than 50 countries have enrolled in the course so far. The course, which spans 12 weeks, covers the basics of Image Processing in the first couple of weeks, followed by some applications of Computer Vision such as designing filters like those in Instagram and Snapchat. Next, we introduce advanced Computer Vision concepts like Object Recognition, Object Detection, and Tracking. We share a ton of applications of facial landmark detection like face swapping, face morphing and face averaging. Finally, we conclude the course with Deep Learning-based approaches to Face Recognition and Emotion Recognition using Google’s TensorFlow library. Students learn many important Machine Learning techniques like Support Vector Machines, Gradient Boosting, PCA, LDA, MIL, MeanShift etc. You can take a look at the detailed syllabus on the course page.

If you are a beginner in computer vision, machine learning or AI, cv4faces is an excellent starting course. We start with the very basics, and the only prerequisite is an intermediate level of knowledge in Python or C++.

Rules of the Competition

Students enrolled in cv4faces form groups of up to 3 students to compete in this competition, although they can compete alone as well. The students propose the topic of their final project and suggest a solution. We review the proposal and help students refine it if necessary. Students then have a month to submit the final project which includes working code, a project report and a video demo. Usually, a group submits their project multiple times and we review and provide feedback on how to improve the results. This interactive feedback helps them learn the nuances of solving complex AI problems.

The submissions are judged on four criteria:

- Creativity: Students had full freedom to choose a problem and the solution. Therefore, creativity in both defining the problem and finding the final solution was an important criterion in determining the winners.

- Quality: The quality of the final solution and the quality of the presentation were crucial in the final judgment.

- Difficulty: Some people chose a harder problem to solve, so while judging the quality of the final result, we also considered how hard the problem was.

- Effort: We expect a baseline level of effort from all participants. While effort put into a project was not a deciding factor in the final award, it is a deciding factor in whether the final project is approved.

Prizes

We award a total of $1,000 in prizes. The first, second and third prizes are $500, $300, and $200 in cash respectively.

Of course, the learning opportunity and the bragging rights are priceless!

The Winners

Students, most of whom are complete beginners in the field, went through an intense 12-week journey. Learning quickly in this short period of time and producing high-quality results is indeed commendable. Unfortunately, we have just three prizes to award! Students who narrowly missed a prize can improve their results and resubmit their project to be considered a second time. Without further ado, let’s meet the winners.

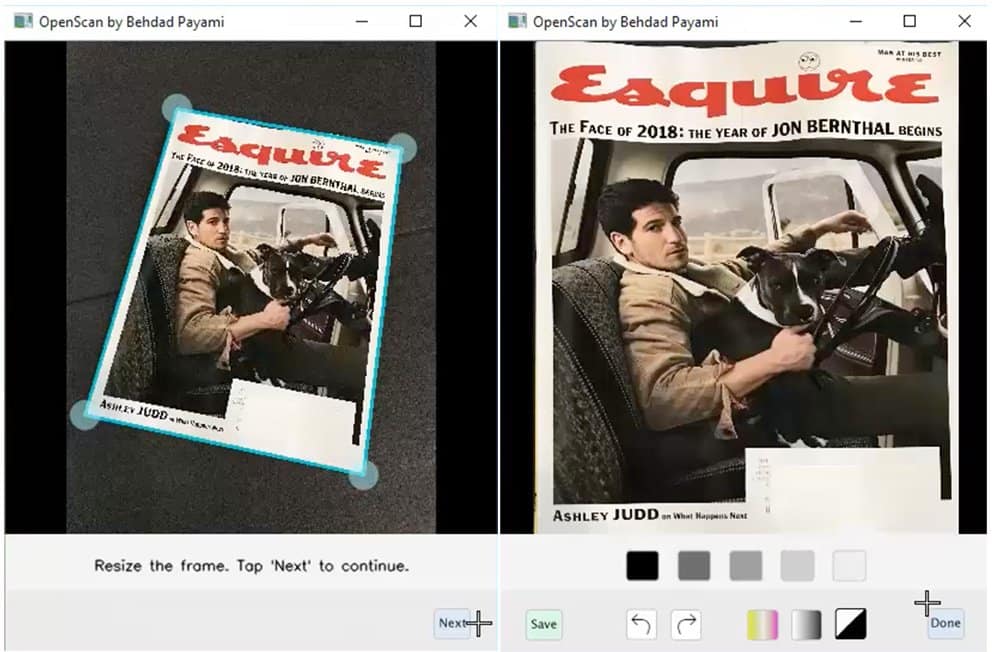

Third Prize: OpenScan by Behdad Payami

Using the skills developed in the course and inspired by this post on PyImageSearch, Behdad Payami built a document scanner in both C++ and Python with an elegant GUI.

The scanner automatically creates a top view of a document photographed on a flat surface. When the scan button is pressed, the program segments the document from the background and shows the location of the four corner points of the document. The GUI allows the user to manipulate the corner points in case the algorithm made a mistake. Using this information, the document scanner produces a top view of the document. The scanner also implements a few other image manipulation functions.
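The "top view" step is a perspective transform: once the four corners are known, a 3x3 homography maps them onto the corners of an upright rectangle. Here is a minimal pure-Python sketch of that idea. It is not Behdad's code; the function names and the corner coordinates are hypothetical, and in practice you would likely reach for OpenCV's `cv2.getPerspectiveTransform` and `cv2.warpPerspective` instead.

```python
def solve_linear(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def find_homography(src, dst):
    """Return the 9 row-major entries of H so that H maps src[i] to dst[i]."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), rearranged to be linear in h
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        # v = (h3*x + h4*y + h5) / (h6*x + h7*y + 1)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    return solve_linear(a, b) + [1.0]  # h8 is fixed to 1


def apply_homography(h, point):
    """Map one (x, y) point through the homography."""
    x, y = point
    w = h[6] * x + h[7] * y + h[8]
    return ((h[0] * x + h[1] * y + h[2]) / w,
            (h[3] * x + h[4] * y + h[5]) / w)


# Hypothetical corners (top-left, top-right, bottom-right, bottom-left)
# as a user might adjust them in the GUI, and the target page rectangle.
corners = [(120, 80), (520, 110), (560, 640), (90, 600)]
page = [(0, 0), (400, 0), (400, 560), (0, 560)]
H = find_homography(corners, page)
```

Warping the full image then amounts to sampling the photo at the inverse-mapped location of every output pixel, which is the job `cv2.warpPerspective` does for you.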

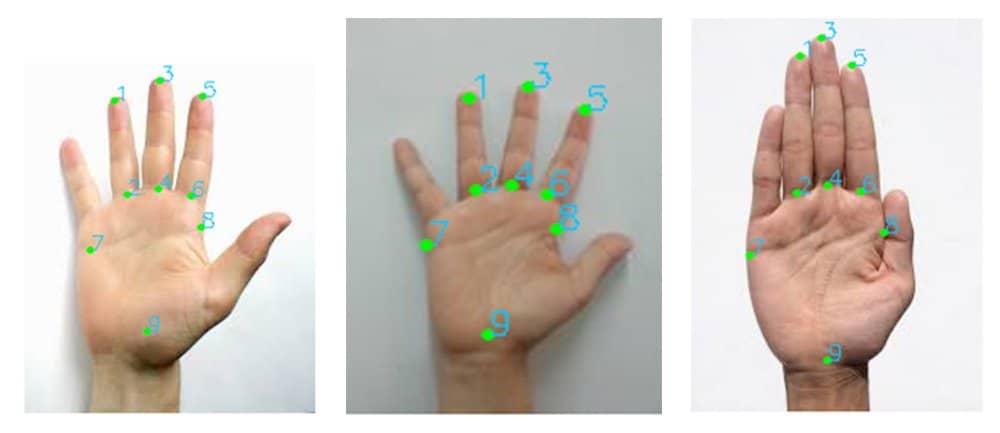

Second Prize: Hand Landmark Detection by Mehmet Ali Atici

The goal of the project was to detect 9 landmark points on the hand, as shown in the image on the left. To accomplish this, Mehmet borrowed the ideas he learned in the Facial Landmark Detector module and applied them to his problem. A lot of hard work goes into making things work in machine learning. Mehmet used Dlib’s imglab tool to prepare the datasets, and the preparation alone took him about 40 hours!

He worked in two steps:

- Hand Detector: The first step was to train a hand detector to detect a bounding box around the hand. He used publicly available hand datasets and annotated bounding boxes around the hands in 3012 images belonging to 250 individuals. 2051 of these images were used for training and the remaining 961 for testing. He used this data to train a Histogram of Oriented Gradients (HOG) + Support Vector Machine (SVM) based object detector. Some results are shared below.

- Hand Landmark Detector: For the hand landmark detector, he annotated 22 landmarks on 931 images. Out of these, 691 images were used for training and 240 for testing. Even though he annotated 22 landmark points, he used only 9 of them to train a landmark detector based on an ensemble of regression trees implemented in Dlib.
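To give a feel for the HOG feature behind the first step, here is a simplified sketch of the gradient-orientation histogram for a single cell. This is an illustration only, not Mehmet's code: the function name and the toy cell are hypothetical, and real detectors add interpolation between bins and block normalization, which are left out here.

```python
import math


def cell_histogram(cell, bins=9):
    """Unsigned gradient-orientation histogram for one grayscale cell.

    `cell` is a list of rows of intensities. Border pixels are skipped so
    that central differences stay inside the cell.
    """
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for y in range(1, len(cell) - 1):
        for x in range(1, len(cell[0]) - 1):
            gx = cell[y][x + 1] - cell[y][x - 1]  # horizontal gradient
            gy = cell[y + 1][x] - cell[y - 1][x]  # vertical gradient
            magnitude = math.hypot(gx, gy)
            # Fold the direction into [0, 180) so opposite gradients share a bin.
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % bins] += magnitude
    return hist


# Toy 8x8 cell containing a vertical edge: dark left half, bright right half.
vertical_edge = [[0, 0, 0, 0, 255, 255, 255, 255] for _ in range(8)]
histogram = cell_histogram(vertical_edge)
```

For the vertical edge, all of the gradient energy lands in the first bin (orientations near 0 degrees). Histograms like this, concatenated over a sliding window, form the feature vector that the SVM classifies as hand or not hand.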

Mehmet has generously shared the code and trained model.

First Prize: AutoRetouch by Danil Stepin

Drumroll! The first prize goes to Danil Stepin for his project AutoRetouch that promises to make you look beautiful!

In this project, Danil implemented several algorithms for face beautification. The course fee was paid by his company on his behalf and his final project was an internal research project for his company. (Psst … ask your company to sponsor the course for you!)

Because this project will become part of a commercial product, he is unable to share the details of the algorithm or the code. Here are the main ideas he implemented.

- Lipstick application: This algorithm automatically created a lip mask based on the facial landmarks detected using Dlib’s model. Naively blending color on top of the lips does not produce good results, so he manipulated the chroma components of the color while keeping luminance constant.

- Teeth whitening: In this feature, he first created a mask for the mouth region. He then manipulated luminance non-linearly using a lookup table (LUT) so that only the white parts of the image inside the mask were changed and the non-white portions were left alone.

- Glare removal: Danil built this feature on top of the facial skin segmentation taught in the course. Once a skin region was segmented, he used the algorithm presented in this paper to remove glare and specular highlights.

- Face shape changer: After reading about deformation-based filters in module 5.5, Danil became interested in fast and accurate face shape changing without deforming the mouth, nose and eye regions. He was interested in realistically deforming the area around the jawline. He used ideas from the Finite Element Method to warp the jawline to the desired shape. Then he used OpenCV’s remapping algorithm for jawline shifting and piecewise warping. This method was fast and worked well on large images.
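Since Danil's code is not public, the following is only a sketch of the two simplest ideas above under our own assumptions: tinting a pixel by blending chroma while keeping luminance fixed, and a luminance LUT that leaves dark values alone and lifts bright ones. The YCbCr constants are the standard JPEG ones; the function names, threshold, gamma and colors are all hypothetical.

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range (JPEG-style) RGB -> YCbCr, kept as floats."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    return y, 128 + (b - y) / 1.772, 128 + (r - y) / 1.402


def ycbcr_to_rgb(y, cb, cr):
    """Exact inverse of rgb_to_ycbcr (no clipping, no rounding)."""
    r = y + 1.402 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    g = (y - 0.299 * r - 0.114 * b) / 0.587
    return r, g, b


def tint_pixel(pixel, lipstick, strength=0.5):
    """Move the pixel's chroma toward the lipstick color; luminance is untouched."""
    y, cb, cr = rgb_to_ycbcr(*pixel)
    _, target_cb, target_cr = rgb_to_ycbcr(*lipstick)
    cb += strength * (target_cb - cb)
    cr += strength * (target_cr - cr)
    return ycbcr_to_rgb(y, cb, cr)


def whitening_lut(threshold=160, gamma=0.6):
    """256-entry luminance LUT: identity up to `threshold`, gamma lift above it."""
    lut = []
    for v in range(256):
        if v <= threshold:
            lut.append(v)
        else:
            t = (v - threshold) / (255 - threshold)  # 0..1 above the threshold
            lut.append(round(threshold + (255 - threshold) * t ** gamma))
    return lut


def whiten_pixel(pixel, lut):
    """Add the LUT's luminance boost to a pixel while keeping its chroma."""
    y, cb, cr = rgb_to_ycbcr(*pixel)
    index = min(255, max(0, round(y)))
    return ycbcr_to_rgb(y + (lut[index] - index), cb, cr)


# Hypothetical colors: a lip pixel, a lipstick shade, and a tooth pixel.
tinted_lip = tint_pixel((180, 120, 110), (200, 30, 60))
whiter_tooth = whiten_pixel((210, 205, 190), whitening_lut())
```

In a real pipeline these functions would run only on pixels inside the lip or mouth mask, and the results would be clipped to the 0 to 255 range before display. Clipping can shift luminance slightly for very saturated colors.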
