Transformer Neural Networks

Models with billions, or even trillions, of parameters are becoming the norm. These models can write essays, generate code, and create art. But they can still get stuck on

What if a radiologist facing a complex scan in the middle of the night could ask an AI assistant for a second opinion, right from their local workstation? This isn't

Ever watched an AI-generated video and wondered how it was made? Or perhaps dreamed of creating your own dynamic scenes, only to be overwhelmed by the complexity or the need

Object detection has come a long way, especially with the rise of transformer-based models. RF-DETR, developed by Roboflow, is one such model that offers both speed and accuracy. Using Roboflow’s

GPT-4o image generation is a game-changer! With native support in ChatGPT, you can now create stunning visuals from text prompts, refine them, and explore styles like Studio Ghibli or photorealism.

This article presents automatic speech recognition (ASR) with speaker diarization using OpenAI Whisper and the NVIDIA NeMo toolkit.

In the rapidly evolving field of deep learning, the challenge often lies not just in designing powerful models but also in making them accessible and efficient for practical use, especially

In this article, we fine-tune T5 for text summarization and build a Gradio app around the fine-tuned model.

In this article, we fine-tune the TrOCR Small Printed model on the SCUT-CTW1500 dataset to improve its performance on curved text.

In this article, we explore the TrOCR architecture, its model variants, and its training strategy, then run inference using Hugging Face.

In this article, we show how to implement Vision Transformer using the PyTorch deep learning library.

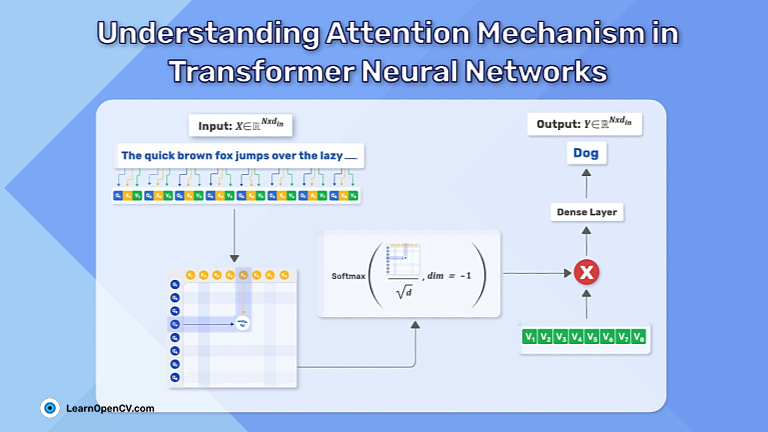

In this article, we cover the attention mechanism in neural networks in detail and implement it using PyTorch.