A camera, when used as a visual sensor, is an integral part of several domains like robotics, surveillance, space exploration, social media, industrial automation, and even the entertainment industry.

For many applications, it is essential to know the parameters of a camera to use it effectively as a visual sensor.

In this post, you will understand the steps involved in camera calibration and their significance.

We are also sharing code in C++ and Python, along with example images of a checkerboard pattern.

What is camera calibration?

The process of estimating the parameters of a camera is called camera calibration.

This means we have all the information (parameters or coefficients) about the camera required to determine an accurate relationship between a 3D point in the real world and its corresponding 2D projection (pixel) in the image captured by that calibrated camera.

Typically this means recovering two kinds of parameters:

- Internal parameters of the camera/lens system, e.g. focal length, optical center, and radial distortion coefficients of the lens.

- External parameters: the pose (rotation and translation) of the camera with respect to some world coordinate system.

In the image below, the parameters of the lens estimated using geometric calibration were used to un-distort the image.

Camera Calibration using OpenCV

To understand the process of calibration we first need to understand the geometry of image formation. Click on the link below for a detailed explanation

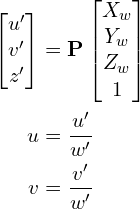

As explained in the post, to find the projection of a 3D point onto the image plane, we first need to transform the point from the world coordinate system to the camera coordinate system using the extrinsic parameters (rotation $\mathbf{R}$ and translation $\mathbf{t}$).

Next, using the intrinsic parameters of the camera, we project the point onto the image plane.

The equations that relate a 3D point $(X_w, Y_w, Z_w)$ in world coordinates to its projection $(u, v)$ in image coordinates are shown below:

$$
\begin{bmatrix} u' \\ v' \\ w' \end{bmatrix} = \mathbf{P} \begin{bmatrix} X_w \\ Y_w \\ Z_w \\ 1 \end{bmatrix}, \qquad u = \frac{u'}{w'}, \quad v = \frac{v'}{w'}
$$

where $\mathbf{P}$ is a 3×4 projection matrix consisting of two parts: the intrinsic matrix ($\mathbf{K}$), which contains the intrinsic parameters, and the extrinsic matrix ($[\mathbf{R} \mid \mathbf{t}]$), which is a combination of a 3×3 rotation matrix $\mathbf{R}$ and a 3×1 translation vector $\mathbf{t}$.

![\begin{align*} \mathbf{P} &= \overbrace{\mathbf{K}}^\text{Intrinsic Matrix} \times \overbrace{[\mathbf{R} \mid \mathbf{t}]}^\text{Extrinsic Matrix} \\[0.5em] \end{align*}](https://learnopencv.com/wp-content/ql-cache/quicklatex.com-035febc5cf4968ee25a10ae730c67e54_l3.png)
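As a rough numerical illustration of the projection described above, here is a minimal NumPy sketch. The focal length, optical center, pose, and test point are made-up values chosen only for the example, and `project` is a hypothetical helper name, not an OpenCV function.

```python
import numpy as np

# Hypothetical intrinsics: 800 px focal length, optical center at (320, 240).
K = np.array([[800.0,   0.0, 320.0],
              [  0.0, 800.0, 240.0],
              [  0.0,   0.0,   1.0]])

# Hypothetical extrinsics: no rotation, world origin 5 units in front of the camera.
R = np.eye(3)
t = np.array([[0.0], [0.0], [5.0]])

# P = K [R | t] is the 3x4 projection matrix.
P = K @ np.hstack([R, t])


def project(P, point_3d):
    """Project a 3D world point to pixel coordinates (u, v)."""
    X_h = np.append(point_3d, 1.0)   # homogeneous world point (X, Y, Z, 1)
    u_p, v_p, w_p = P @ X_h          # homogeneous image point (u', v', w')
    return u_p / w_p, v_p / w_p      # divide by w' to get pixel coordinates


u, v = project(P, np.array([1.0, 0.5, 0.0]))
print(u, v)  # 480.0 320.0
```

The division by the third homogeneous coordinate is what makes points farther from the camera land closer to the optical center.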

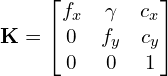

As mentioned in the previous post, the intrinsic matrix $\mathbf{K}$ is upper triangular:

$$
\mathbf{K} = \begin{bmatrix} f_x & \gamma & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}
$$

where,

- $f_x, f_y$ are the x and y focal lengths (yes, they are usually the same).

- $c_x, c_y$ are the x and y coordinates of the optical center in the image plane. Using the center of the image is usually a good enough approximation.

- $\gamma$ is the skew between the axes. It is usually 0.
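The structure of the intrinsic matrix can be sketched in a few lines of NumPy. The helper name `make_intrinsic_matrix` and the numbers below are assumptions made for illustration; the optical center is approximated by the image center, as suggested above.

```python
import numpy as np


def make_intrinsic_matrix(fx, fy, image_width, image_height, skew=0.0):
    """Build K, approximating the optical center by the image center."""
    cx = image_width / 2.0
    cy = image_height / 2.0
    return np.array([[fx,  skew, cx],
                     [0.0, fy,   cy],
                     [0.0, 0.0,  1.0]])


K = make_intrinsic_matrix(fx=800.0, fy=800.0, image_width=640, image_height=480)

# A point on the optical axis (X = Y = 0 in camera coordinates)
# projects onto the optical center, whatever its depth.
on_axis = K @ np.array([0.0, 0.0, 2.0])
u, v = on_axis[:2] / on_axis[2]

print(K)
print(u, v)  # 320.0 240.0
```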

The Goal of Camera Calibration

The goal of the calibration process is to find the 3×3 matrix $\mathbf{K}$, the 3×3 rotation matrix $\mathbf{R}$, and the 3×1 translation vector $\mathbf{t}$ using a set of known 3D points $(X_w, Y_w, Z_w)$ and their corresponding image coordinates $(u, v)$. When we get the values of the intrinsic and extrinsic parameters, the camera is said to be calibrated.

In summary, a camera calibration algorithm has the following inputs and outputs

- Inputs : A collection of images with points whose 2D image coordinates and 3D world coordinates are known.

- Outputs: The 3×3 camera intrinsic matrix, the lens distortion coefficients, and the rotation and translation for each image.

Note: In OpenCV the camera intrinsic matrix does not have the skew parameter, so the matrix is of the form

$$
\begin{bmatrix} f_x & 0 & c_x \\ 0 & f_y & c_y \\ 0 & 0 & 1 \end{bmatrix}
$$

Different types of camera calibration methods

Following are the major types of camera calibration methods:

- Calibration pattern: When we have complete control over the imaging process, the best way to perform calibration is to capture several images of an object or pattern of known dimensions from different viewpoints. The checkerboard-based method that we will learn in this post belongs to this category. We can also use circular patterns of known dimensions instead of a checkerboard pattern.

- Geometric clues: Sometimes we have other geometric clues in the scene like straight lines and vanishing points which can be used for calibration.

- Deep Learning based: When we have very little control over the imaging setup (e.g. we have a single image of the scene), it may still be possible to obtain calibration information of the camera using a Deep Learning based method.

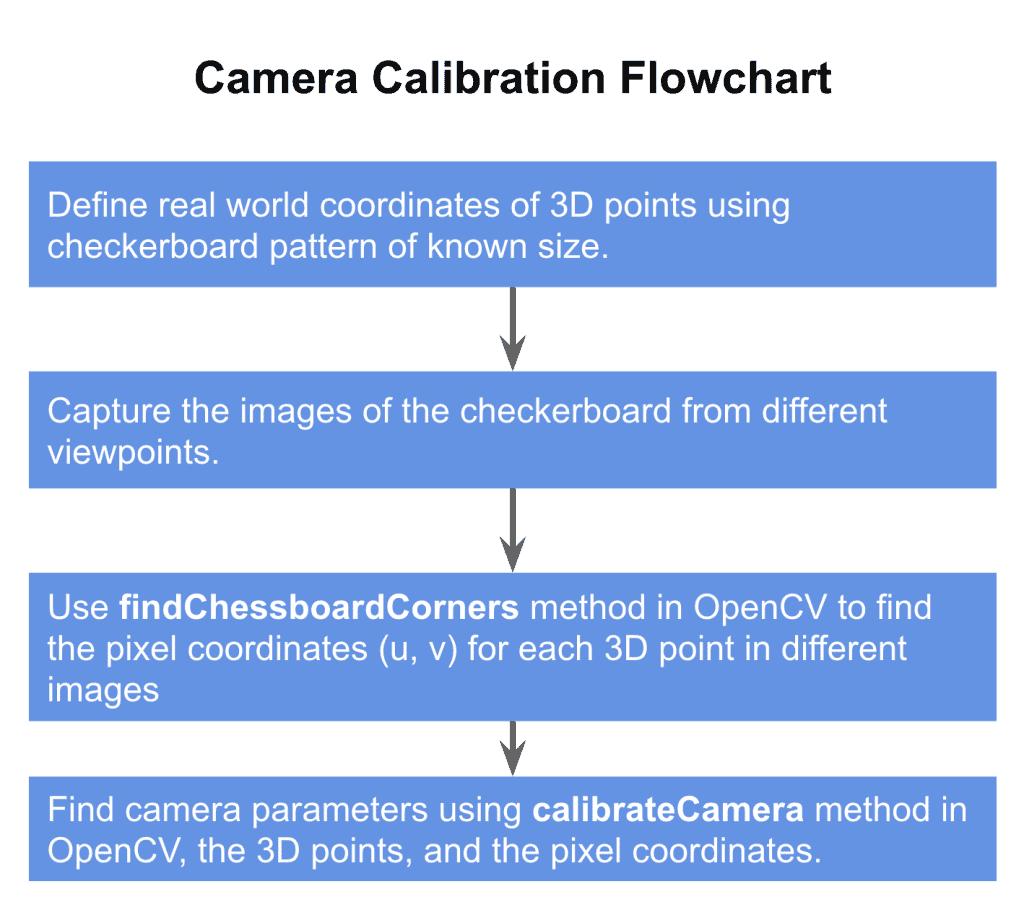

Camera Calibration Step by Step

The calibration process is explained by a flowchart given below.

Let’s go over these steps.

Step 1: Define real world coordinates with checkerboard pattern

In the process of calibration we calculate the camera parameters from a set of known 3D points $(X_w, Y_w, Z_w)$ and their corresponding pixel locations $(u, v)$ in the image.

For the 3D points we photograph a checkerboard pattern with known dimensions at many different orientations. The world coordinate system is attached to the checkerboard, and since all the corner points lie on a plane, we can arbitrarily choose $Z_w$ for every point to be 0. Since points are equally spaced in the checkerboard, the $(X_w, Y_w)$ coordinates of each 3D point are easily defined by taking one point as the reference (0, 0) and defining the remaining points with respect to that reference point.
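The construction of these world coordinates can be sketched as follows. The function name `make_object_points` and the 25 mm square size are assumptions for illustration. The grid layout mirrors the `np.mgrid` idiom used in the calibration script later in this post, which uses a square size of 1.

```python
import numpy as np


def make_object_points(cols, rows, square_size=1.0):
    """Return a (cols*rows, 3) float32 array of corner coordinates with Z = 0."""
    objp = np.zeros((cols * rows, 3), np.float32)
    # X varies fastest, then Y; the first corner is the reference point (0, 0).
    grid = np.mgrid[0:cols, 0:rows].T.reshape(-1, 2)
    objp[:, :2] = grid * square_size
    return objp


# 6 x 9 inner corners, squares assumed to be 25 mm wide.
objp = make_object_points(cols=6, rows=9, square_size=25.0)

print(objp.shape)  # (54, 3)
print(objp[:3])    # first three corners along the X direction
```

If a physical square size is used, the translations returned by calibration come out in the same unit. With unit squares they are expressed in multiples of the square size.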

Why is the checkerboard pattern so widely used in calibration?

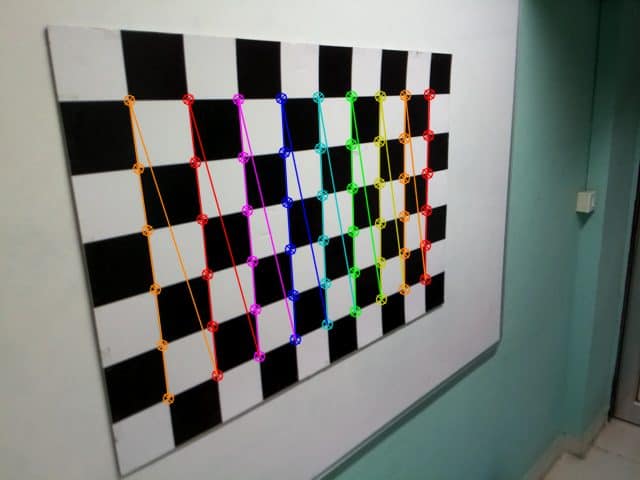

Checkerboard patterns are distinct and easy to detect in an image. Not only that, the corners of squares on the checkerboard are ideal for localizing them because they have sharp gradients in two directions. In addition, these corners are also related by the fact that they are at the intersection of checkerboard lines. All these facts are used to robustly locate the corners of the squares in a checkerboard pattern.
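To make "sharp gradients in two directions" concrete, the sketch below builds a tiny synthetic checkerboard and compares a corner, an edge, and a flat region. It uses the smaller eigenvalue of the summed gradient matrix, the idea behind classic corner detectors. This is only an illustration and is not a description of how findChessboardCorners works internally. The image size, window size, and function name are arbitrary choices.

```python
import numpy as np

# Synthetic 40x40 image: a 2x2 checkerboard made of 20-pixel squares.
rows, cols = np.indices((40, 40))
img = ((rows // 20 + cols // 20) % 2).astype(np.float64)

# Image gradients along y (axis 0) and x (axis 1).
gy, gx = np.gradient(img)


def min_gradient_eigenvalue(r, c, half=5):
    """Smaller eigenvalue of the gradient matrix summed over a window centred at (r, c)."""
    win = (slice(r - half, r + half + 1), slice(c - half, c + half + 1))
    gxx = np.sum(gx[win] ** 2)
    gyy = np.sum(gy[win] ** 2)
    gxy = np.sum(gx[win] * gy[win])
    M = np.array([[gxx, gxy],
                  [gxy, gyy]])
    return np.linalg.eigvalsh(M)[0]  # eigenvalues are returned in ascending order


corner = min_gradient_eigenvalue(20, 20)  # where four squares meet
edge = min_gradient_eigenvalue(10, 20)    # on the boundary between two squares
flat = min_gradient_eigenvalue(10, 10)    # inside a single square

print("corner:", corner, "edge:", edge, "flat:", flat)
```

Only the corner has a clearly non-zero smaller eigenvalue: an edge has strong gradients in one direction only, and a flat region has none, so neither pins down a unique location.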

Step 2 : Capture multiple images of the checkerboard from different viewpoints

Next, we keep the checkerboard static and take multiple images of the checkerboard by moving the camera.

Alternatively, we can keep the camera fixed and photograph the checkerboard pattern at different orientations. The two situations are similar mathematically, because only the relative pose between the camera and the checkerboard matters.
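A small NumPy sketch of why the two setups lead to the same geometry: in both cases the board points end up at the same coordinates in the camera frame. The rotation angle, translation, and helper name are arbitrary values chosen for the example.

```python
import numpy as np


def rotation_about_y(angle_rad):
    """3x3 rotation matrix for a rotation about the y axis."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c,   0.0, s],
                     [0.0, 1.0, 0.0],
                     [-s,  0.0, c]])


# Four board corners in the board's own coordinate system (Z = 0).
board = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [1, 1, 0]], dtype=float)

R = rotation_about_y(np.deg2rad(30))
t = np.array([0.1, -0.2, 5.0])

# Case 1: board fixed at the world origin, camera moved so its extrinsics are (R, t).
cam_coords_moving_camera = board @ R.T + t

# Case 2: camera fixed with identity extrinsics, board moved by the rigid motion (R, t).
moved_board = board @ R.T + t
cam_coords_moving_board = moved_board @ np.eye(3).T + np.zeros(3)

print(np.allclose(cam_coords_moving_camera, cam_coords_moving_board))  # True
```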

Step 3 : Find 2D coordinates of checkerboard

We now have multiple images of the checkerboard. We also know the 3D location of points on the checkerboard in world coordinates. The last thing we need is the 2D pixel locations of these checkerboard corners in the images.

3.1 Find checkerboard corners

OpenCV provides a built-in function called findChessboardCorners that looks for a checkerboard and returns the coordinates of the corners. Its usage is given by

C++

```cpp
bool findChessboardCorners(InputArray image, Size patternSize, OutputArray corners, int flags = CALIB_CB_ADAPTIVE_THRESH + CALIB_CB_NORMALIZE_IMAGE )
```

Python

```python
retval, corners = cv2.findChessboardCorners(image, patternSize, flags=flags)
```

In Python the third positional argument is the optional corners array, so pass flags by keyword (or pass None for corners first).

where,

| Parameter | Description |
| --- | --- |
| image | Source chessboard view. It must be an 8-bit grayscale or color image. |
| patternSize | Number of inner corners per chessboard row and column ( patternSize = cv::Size(points_per_row, points_per_column) = cv::Size(columns, rows) ). |
| corners | Output array of detected corners. |
| flags | Various operation flags. You have to worry about these only when things do not work well. Go with the default. |

The output is true or false depending on whether a pattern was detected or not.

3.2 Refine checkerboard corners

Good calibration is all about precision. To get good results it is important to obtain the location of corners with sub-pixel level of accuracy.

OpenCV’s function cornerSubPix takes in the original image and the location of corners, and looks for the best corner location inside a small neighborhood of the original location. The algorithm is iterative in nature, and therefore we need to specify the termination criteria (e.g. number of iterations and/or the accuracy).

C++

```cpp
void cornerSubPix(InputArray image, InputOutputArray corners, Size winSize, Size zeroZone, TermCriteria criteria)
```

Python

```python
corners = cv2.cornerSubPix(image, corners, winSize, zeroZone, criteria)
```

where,

| Parameter | Description |
| --- | --- |
| image | Input image. |
| corners | Initial coordinates of the input corners and refined coordinates provided for output. |
| winSize | Half of the side length of the search window. For example, winSize = (11, 11) gives a 23×23 search window. |
| zeroZone | Half of the size of the dead region in the middle of the search zone over which the summation is not done. It is sometimes used to avoid possible singularities of the autocorrelation matrix. The value of (-1,-1) indicates that there is no such size. |
| criteria | Criteria for termination of the iterative process of corner refinement. That is, the process of corner position refinement stops either after criteria.maxCount iterations or when the corner position moves by less than criteria.epsilon on some iteration. |

Step 4: Calibrate Camera

The final step of calibration is to pass the 3D points in world coordinates and their 2D locations in all images to OpenCV’s calibrateCamera method. The implementation is based on a paper by Zhengyou Zhang. The math is a bit involved and requires a background in linear algebra.

Let’s look at the syntax for calibrateCamera:

C++

```cpp
double calibrateCamera(InputArrayOfArrays objectPoints, InputArrayOfArrays imagePoints, Size imageSize, InputOutputArray cameraMatrix, InputOutputArray distCoeffs, OutputArrayOfArrays rvecs, OutputArrayOfArrays tvecs)
```

Python

```python
retval, cameraMatrix, distCoeffs, rvecs, tvecs = cv2.calibrateCamera(objectPoints, imagePoints, imageSize, cameraMatrix, distCoeffs)
```

In Python, cameraMatrix and distCoeffs are required arguments; pass None for both when there is no initial guess.

where,

| Parameter | Description |
| --- | --- |
| objectPoints | A vector of vectors of 3D points. The outer vector contains as many elements as the number of pattern views. |
| imagePoints | A vector of vectors of the 2D image points. |
| imageSize | Size of the image, given as (width, height). |
| cameraMatrix | Intrinsic camera matrix. |
| distCoeffs | Lens distortion coefficients. These coefficients will be explained in a future post. |
| rvecs | One rotation per view, each specified as a 3×1 vector. The direction of the vector specifies the axis of rotation and the magnitude of the vector specifies the angle of rotation. |
| tvecs | One 3×1 translation vector per view. |

Camera Calibration Code

The code for camera calibration using Python and C++ is shared below. However, it is much simpler to download all images and code using the link below.

Python Code for Camera Calibration

Please read through the code comments; they explain what each step does.

```python
#!/usr/bin/env python

import glob

import cv2
import numpy as np

# Number of inner corners per row and per column of the checkerboard
CHECKERBOARD = (6, 9)
criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 30, 0.001)

# List to store the 3D points for each checkerboard image
objpoints = []
# List to store the 2D points for each checkerboard image
imgpoints = []

# Defining the world coordinates for 3D points
objp = np.zeros((1, CHECKERBOARD[0] * CHECKERBOARD[1], 3), np.float32)
objp[0, :, :2] = np.mgrid[0:CHECKERBOARD[0], 0:CHECKERBOARD[1]].T.reshape(-1, 2)

# Extracting path of individual image stored in a given directory
images = glob.glob('./images/*.jpg')
for fname in images:
    img = cv2.imread(fname)
    gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

    # Find the chessboard corners.
    # If the desired number of corners is found in the image then ret = True.
    # flags is passed by keyword: the third positional argument is `corners`.
    ret, corners = cv2.findChessboardCorners(
        gray, CHECKERBOARD,
        flags=cv2.CALIB_CB_ADAPTIVE_THRESH + cv2.CALIB_CB_FAST_CHECK + cv2.CALIB_CB_NORMALIZE_IMAGE)

    # If the desired number of corners is detected, we refine the pixel
    # coordinates and display them on the image of the checkerboard.
    if ret:
        objpoints.append(objp)

        # Refining pixel coordinates for given 2D points
        corners2 = cv2.cornerSubPix(gray, corners, (11, 11), (-1, -1), criteria)
        imgpoints.append(corners2)

        # Draw the corners
        img = cv2.drawChessboardCorners(img, CHECKERBOARD, corners2, ret)

    cv2.imshow('img', img)
    cv2.waitKey(0)

cv2.destroyAllWindows()

# Performing camera calibration by passing the known 3D points (objpoints)
# and the corresponding pixel coordinates of the detected corners (imgpoints).
# gray.shape is (height, width); calibrateCamera expects (width, height).
ret, mtx, dist, rvecs, tvecs = cv2.calibrateCamera(objpoints, imgpoints, gray.shape[::-1], None, None)

print("Camera matrix : \n")
print(mtx)
print("dist : \n")
print(dist)
print("rvecs : \n")
print(rvecs)
print("tvecs : \n")
print(tvecs)
```
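The rotation vectors printed by the script use the axis-angle form described earlier: the direction is the rotation axis and the length is the angle. The sketch below converts such a vector to a 3×3 rotation matrix with Rodrigues' formula in plain NumPy. The function name is made up for this example. OpenCV's cv2.Rodrigues performs the same conversion.

```python
import numpy as np


def rotation_vector_to_matrix(rvec):
    """Convert an axis-angle rotation vector to a 3x3 rotation matrix."""
    rvec = np.asarray(rvec, dtype=float).ravel()
    theta = np.linalg.norm(rvec)            # rotation angle in radians
    if theta < 1e-12:
        return np.eye(3)                    # zero vector means no rotation
    kx, ky, kz = rvec / theta               # unit rotation axis
    axis_cross = np.array([[0.0, -kz, ky],
                           [kz, 0.0, -kx],
                           [-ky, kx, 0.0]])  # cross-product matrix of the axis
    # Rodrigues' formula: R = I + sin(theta) * A + (1 - cos(theta)) * A^2
    return (np.eye(3)
            + np.sin(theta) * axis_cross
            + (1.0 - np.cos(theta)) * (axis_cross @ axis_cross))


# A 90-degree rotation about the z axis maps the x axis onto the y axis.
R = rotation_vector_to_matrix([0.0, 0.0, np.pi / 2])
print(np.round(R @ np.array([1.0, 0.0, 0.0]), 6))
```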

C++ Code

Please read through the comments to understand each step.

```cpp
#include <opencv2/opencv.hpp>
#include <opencv2/calib3d/calib3d.hpp>
#include <opencv2/highgui/highgui.hpp>
#include <opencv2/imgproc/imgproc.hpp>
#include <iostream>
#include <string>
#include <vector>

// Number of inner corners per row and per column of the checkerboard
int CHECKERBOARD[2]{6,9};

int main()
{
  // Creating vector to store vectors of 3D points for each checkerboard image
  std::vector<std::vector<cv::Point3f> > objpoints;

  // Creating vector to store vectors of 2D points for each checkerboard image
  std::vector<std::vector<cv::Point2f> > imgpoints;

  // Defining the world coordinates for 3D points
  std::vector<cv::Point3f> objp;
  for(int i{0}; i<CHECKERBOARD[1]; i++)
  {
    for(int j{0}; j<CHECKERBOARD[0]; j++)
      objp.push_back(cv::Point3f(j,i,0));
  }

  // Extracting path of individual image stored in a given directory
  std::vector<cv::String> images;
  // Path of the folder containing checkerboard images
  std::string path = "./images/*.jpg";
  cv::glob(path, images);

  cv::Mat frame, gray;
  // vector to store the pixel coordinates of detected checkerboard corners
  std::vector<cv::Point2f> corner_pts;
  bool success;

  // Looping over all the images in the directory
  for(size_t i{0}; i<images.size(); i++)
  {
    frame = cv::imread(images[i]);
    cv::cvtColor(frame,gray,cv::COLOR_BGR2GRAY);

    // Finding checkerboard corners
    // If desired number of corners are found in the image then success = true
    success = cv::findChessboardCorners(gray, cv::Size(CHECKERBOARD[0], CHECKERBOARD[1]), corner_pts, cv::CALIB_CB_ADAPTIVE_THRESH | cv::CALIB_CB_FAST_CHECK | cv::CALIB_CB_NORMALIZE_IMAGE);

    /*
     * If desired number of corners are detected,
     * we refine the pixel coordinates and display
     * them on the images of the checkerboard
     */
    if(success)
    {
      cv::TermCriteria criteria(cv::TermCriteria::EPS | cv::TermCriteria::MAX_ITER, 30, 0.001);

      // refining pixel coordinates for given 2D points
      cv::cornerSubPix(gray,corner_pts,cv::Size(11,11), cv::Size(-1,-1),criteria);

      // Displaying the detected corner points on the checkerboard
      cv::drawChessboardCorners(frame, cv::Size(CHECKERBOARD[0], CHECKERBOARD[1]), corner_pts, success);

      objpoints.push_back(objp);
      imgpoints.push_back(corner_pts);
    }

    cv::imshow("Image",frame);
    cv::waitKey(0);
  }

  cv::destroyAllWindows();

  cv::Mat cameraMatrix,distCoeffs,R,T;

  /*
   * Performing camera calibration by
   * passing the value of known 3D points (objpoints)
   * and corresponding pixel coordinates of the
   * detected corners (imgpoints).
   * cv::Size takes (width, height), i.e. (cols, rows).
   */
  cv::calibrateCamera(objpoints, imgpoints, cv::Size(gray.cols,gray.rows), cameraMatrix, distCoeffs, R, T);

  std::cout << "cameraMatrix : " << cameraMatrix << std::endl;
  std::cout << "distCoeffs : " << distCoeffs << std::endl;
  std::cout << "Rotation vector : " << R << std::endl;
  std::cout << "Translation vector : " << T << std::endl;

  return 0;
}
```
