The landscape of Artificial Intelligence is rapidly evolving towards models that can seamlessly understand and generate information across multiple modalities, like text and images. Salesforce AI Research has introduced BLIP3-o, a family of fully open-source unified multimodal models, marking a significant step in this direction.

Available in two versions with 4 billion and 8 billion parameters, BLIP3-o offers scalable solutions for diverse needs. This blog post provides a technical introduction to BLIP3-o, exploring its key improvements, underlying architecture, and diverse applications.

- Unifying Image Understanding and Generation

- Key Architectural Innovations and Improvements

- How BLIP3-o Works

- Applications of BLIP3-o

- Trying BLIP3-o Yourself: Inference and Demo Interface

- Open Source and Future Directions

- Conclusion

- References

Unifying Image Understanding and Generation

A core challenge in multimodal AI has been to develop a single framework that excels at both interpreting and creating visual content. BLIP3-o tackles this by systematically investigating optimal architectures and training strategies. The research emphasizes the synergy between autoregressive models, known for their reasoning and instruction-following strengths, and diffusion models, which are powerful in generation tasks.

Key Architectural Innovations and Improvements

BLIP3-o introduces several notable improvements over previous models:

- CLIP Feature Diffusion: Instead of relying on conventional VAE-based representations that focus on pixel-level reconstruction, BLIP3-o employs a diffusion transformer to generate semantically rich CLIP (Contrastive Language-Image Pre-training) image features. This approach leverages the high-level semantic understanding of CLIP, leading to higher training efficiency and improved generative quality. By operating in the same semantic space, BLIP3-o effectively unifies image understanding and generation.

- Flow Matching: BLIP3-o uses flow matching as the training objective for its diffusion transformer. Compared with a traditional Mean Squared Error (MSE) loss, flow matching captures the underlying distribution of images more effectively, resulting in greater sample diversity and better visual quality in generated images. For more background, see our other blog post, which describes diffusion models and conditional flow models in detail.

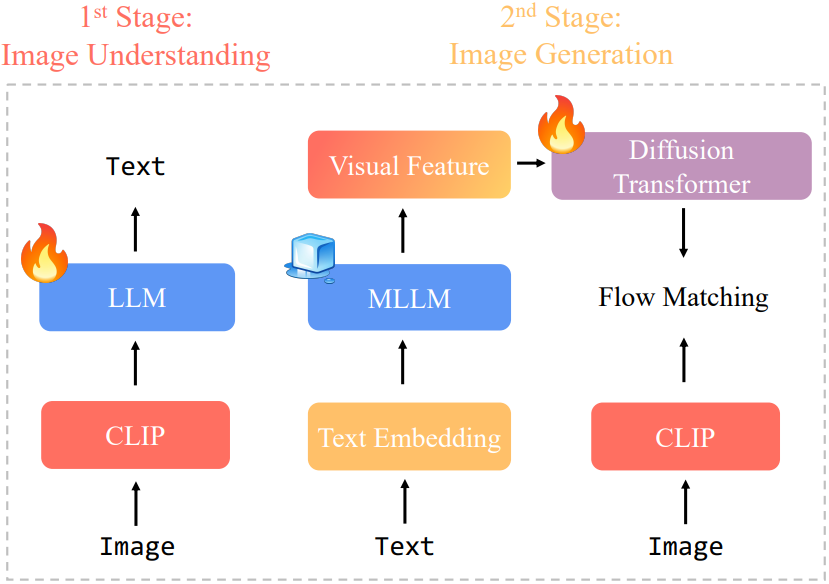

- Sequential Training Strategy: The model employs a sequential pretraining strategy. It is first trained on image understanding tasks, and subsequently, the autoregressive backbone is frozen while the diffusion transformer is trained for image generation. This “late fusion” approach preserves the model’s image understanding capabilities while developing strong image generation abilities, avoiding potential interference between the two tasks.

- Enhanced Datasets: BLIP3-o benefits from large-scale pretraining data and a specially curated high-quality instruction-tuning dataset called BLIP3o-60k. This dataset, generated by prompting GPT-4o with diverse captions covering various scenes, objects, and human gestures, significantly improves prompt alignment and visual aesthetics.
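To make the flow matching objective from the list above more concrete, here is a minimal pure-Python sketch. It is not BLIP3-o's training code: it assumes the common straight-line interpolation between noise and data, and `flow_matching_loss`, `euler_sample`, and `oracle` are hypothetical names introduced only for illustration. In BLIP3-o the data points would be CLIP image features and the velocity model would be the diffusion transformer conditioned on the autoregressive backbone's output.

```python
import random

def flow_matching_loss(velocity_model, data_batch, rng):
    """Average squared error between predicted and target velocities."""
    total, count = 0.0, 0
    for x1 in data_batch:
        x0 = [rng.gauss(0.0, 1.0) for _ in x1]              # noise endpoint
        t = rng.random()                                    # time in [0, 1)
        xt = [(1 - t) * a + t * b for a, b in zip(x0, x1)]  # point on the straight path
        target = [b - a for a, b in zip(x0, x1)]            # constant velocity along that path
        pred = velocity_model(xt, t)
        total += sum((p - v) ** 2 for p, v in zip(pred, target))
        count += len(x1)
    return total / count

def euler_sample(velocity_model, dim, steps, rng):
    """Integrate dx/dt = v(x, t) from noise (t=0) toward data (t=1)."""
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    dt = 1.0 / steps
    for i in range(steps):
        v = velocity_model(x, i * dt)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x

# Toy check (assumption): every data point equals `mu`, so the exact
# velocity field is known in closed form and can stand in for a trained model.
mu = [0.5, -1.0, 2.0]

def oracle(x, t):
    return [(m - xi) / (1.0 - t) for m, xi in zip(mu, x)]

rng = random.Random(0)
loss = flow_matching_loss(oracle, [mu] * 8, rng)
sample = euler_sample(oracle, dim=len(mu), steps=10, rng=rng)
```

Note that the squared error here is taken on velocities along a noise-to-data path, not on the image features themselves. Because sampling starts from fresh noise each time, a trained model can produce different outputs for the same condition, which is one plausible reading of the diversity benefit described above.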

How BLIP3-o Works

The core of BLIP3-o’s architecture involves an autoregressive model that produces continuous visual features. These features then condition a diffusion process.

The inference pipeline for image generation using the CLIP + Flow Matching approach involves two diffusion stages:

- The conditioning visual features from the autoregressive model guide an iterative denoising process to produce CLIP embeddings.

- These CLIP embeddings are then converted into actual images by a diffusion-based visual decoder. This two-stage process allows for stochastic sampling in the first stage, contributing to greater diversity in the generated images.
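The data flow of the two stages can be sketched as follows. Everything in this block is a stand-in: `generate_image`, its three callables, the toy components, and the Euler-style update loop are hypothetical placeholders introduced for illustration, not the actual BLIP3-o API.

```python
import random
from typing import Callable, List

Vector = List[float]

def generate_image(
    prompt: str,
    autoregressive_model: Callable[[str], Vector],
    clip_velocity: Callable[[Vector, Vector, float], Vector],
    visual_decoder: Callable[[Vector], str],
    steps: int = 20,
    seed: int = 0,
) -> str:
    # The autoregressive backbone turns the prompt into conditioning features.
    condition = autoregressive_model(prompt)

    # Stage 1: start from seeded noise and iteratively refine it into a
    # CLIP-like embedding, guided by the conditioning features.
    rng = random.Random(seed)
    clip_embedding = [rng.gauss(0.0, 1.0) for _ in condition]
    dt = 1.0 / steps
    for i in range(steps):
        velocity = clip_velocity(clip_embedding, condition, i * dt)
        clip_embedding = [x + dt * v for x, v in zip(clip_embedding, velocity)]

    # Stage 2: a separate decoder maps the embedding to an image.
    return visual_decoder(clip_embedding)

# Toy components (assumptions) so the sketch runs end to end.
def toy_backbone(prompt: str) -> Vector:
    return [float(len(word)) for word in prompt.split()]

def toy_velocity(x: Vector, cond: Vector, t: float) -> Vector:
    return [0.5 * (c - xi) for xi, c in zip(x, cond)]

def toy_decoder(embedding: Vector) -> str:
    return "image(" + ", ".join(f"{v:.3f}" for v in embedding) + ")"

image_a = generate_image("a blue jay", toy_backbone, toy_velocity, toy_decoder, seed=1)
image_b = generate_image("a blue jay", toy_backbone, toy_velocity, toy_decoder, seed=2)
```

With the same prompt, the two seeds start stage 1 from different noise and therefore end at different embeddings, which illustrates where the stochastic sampling enters the pipeline.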

The image understanding component leverages the CLIP encoder to transform images into rich semantic embeddings.

Applications of BLIP3-o

BLIP3-o’s unified architecture opens doors to a wide array of applications:

- Text-to-Image Generation: Creating high-quality images from textual descriptions. This is useful for content creation in advertising, social media, and artistic endeavors.

- Image Captioning and Description: Generating detailed textual descriptions for given images.

- Visual Question Answering (VQA): Answering questions based on the content of an image.

- Iterative Image Editing: The framework shows potential for tasks like modifying images based on instructions.

- Visual Dialogue: Enabling more interactive and conversational experiences involving images.

- Step-by-Step Visual Reasoning: Facilitating complex reasoning based on visual information.

- Document OCR and Chart Analysis: The model performs well in handling complex text-image tasks.

Prompts used in Fig 2:

• A blue BMW parked in front of a yellow brick wall.

• A woman twirling in a sunlit alley lined with colorful walls, her summer dress catching the light mid-spin.

• A group of friends having a picnic.

• A lush tropical waterfall, ‘Deep Learning’ on a reflective metal road sign.

• A blue jay standing on a large basket of rainbow macarons.

• A sea turtle swimming above a coral reef.

• A young woman with freckles wearing a straw hat, standing in a golden wheat field.

• Three people.

• A man talking animatedly on the phone, his mouth moving rapidly.

• A wildflower meadow at sunrise, ‘BLIP3o’ projected onto a misty surface.

• A rainbow-colored ice cavern, ‘Salesforce’ drawn in the wet sand.

Trying BLIP3-o Yourself: Inference and Demo Interface

For those looking to experiment with BLIP3-o directly, the GitHub repository provides the necessary tools to run inference and interact with a demo interface.

Setup and Execution:

To get started, you can set up a conda environment and install the required dependencies:

conda create -n blip3o python=3.11 -y

conda activate blip3o

pip install --upgrade pip setuptools

pip install -r requirements.txt

Next, download the model checkpoints. For example, to download the 8B parameter model:

python -c "from huggingface_hub import snapshot_download; print(snapshot_download(repo_id='BLIP3o/BLIP3o-Model-8B', repo_type='model'))"

Finally, launch the inference script, pointing it to the downloaded checkpoint path:

python inference.py /HF_model/checkpoint/path/

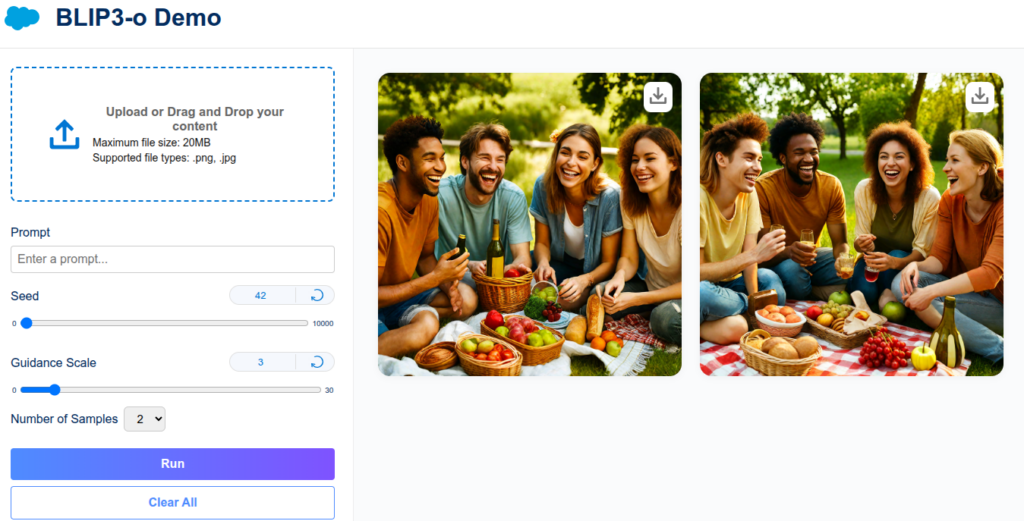

Running this script will launch a Gradio-based demo interface, allowing users to interact with the BLIP3-o model.

Understanding the Demo Interface:

The BLIP3-o demo interface provides a user-friendly way to test the model’s capabilities. The key components visible in the interface are:

- Image Upload Area: On the left, users can upload an image or drag and drop it into the designated box. The interface specifies a maximum file size (e.g., 20MB) and supported file types (e.g., .png, .jpg). This area is typically used for image-input tasks such as image captioning or visual question answering; the exact behavior may depend on the selected mode, if the demo supports more than one task.

- Prompt Input: A text field labeled “Prompt” allows users to enter textual instructions or questions related to the uploaded image or for text-to-image generation.

- Seed: This slider and input field (ranging from 0 to 10000 in the example) set the seed for the random number generator. Using the same seed with the same prompt and other parameters will generally produce the same output, which is useful for reproducibility.

- Guidance Scale: This slider and input field (ranging from 0 to 30 in the example) control how closely the generation process adheres to the prompt. Higher values typically mean stricter adherence, while lower values leave more room for variation.

- Number of Samples: A dropdown menu to select how many output samples (e.g., images) the model should generate for the given prompt.

- Control Buttons:

  - Run: This button initiates the model’s process based on the provided inputs (image, prompt, and parameters).

  - Clear All: This button resets the input fields and clears any displayed outputs.

- Output Display Area: On the right side of the interface, the model’s output, such as generated images or textual answers, will be displayed. The provided image shows two generated images as an example output for a text-to-image task.
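The Seed and Guidance Scale controls correspond to two ideas that are common across diffusion pipelines. The sketch below assumes the guidance slider implements classifier-free guidance, which the demo does not state explicitly; `guided_prediction` and `initial_noise` are hypothetical helpers written for illustration, not functions from the BLIP3-o repository.

```python
import random
from typing import List

def guided_prediction(cond: List[float], uncond: List[float], guidance_scale: float) -> List[float]:
    """Classifier-free guidance: move from the unconditional prediction
    toward (and past) the prompt-conditioned one."""
    return [u + guidance_scale * (c - u) for c, u in zip(cond, uncond)]

def initial_noise(seed: int, dim: int) -> List[float]:
    """Seeded starting noise: the same seed gives the same starting point."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]

cond = [1.0, 2.0]    # toy prediction with the prompt
uncond = [0.0, 1.0]  # toy prediction without the prompt

ignore_prompt = guided_prediction(cond, uncond, 0.0)  # equals the unconditional prediction
plain_prompt = guided_prediction(cond, uncond, 1.0)   # equals the conditional prediction
strong_prompt = guided_prediction(cond, uncond, 3.0)  # extrapolates beyond the conditional one

noise_a = initial_noise(seed=42, dim=4)
noise_b = initial_noise(seed=42, dim=4)
noise_c = initial_noise(seed=43, dim=4)
```

Under this assumption, a scale of 1 reproduces the plain conditional prediction and larger values push the output further in the direction the prompt suggests, which matches the "stricter adherence" behavior described for the slider. Reusing a seed reproduces the starting noise, which is why identical settings generally give identical outputs.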

This interactive demo allows learners and researchers to explore BLIP3-o’s functionalities, experiment with different prompts and parameters, and gain a practical understanding of its multimodal capabilities.

Open Source and Future Directions

Salesforce has fully open-sourced BLIP3-o, including model weights for both its 4B and 8B parameter versions, training code, pretraining and instruction-tuning datasets, and evaluation pipelines. This commitment to open science aims to foster further research and democratize access to advanced multimodal AI.

Future development is focused on enhancing capabilities such as iterative image editing, visual dialogue, and step-by-step visual reasoning. Researchers are also looking to close the loop between understanding and generation, enabling the model to reconstruct an image it has understood, creating a seamless bridge between perception and creation.

Conclusion

BLIP3-o represents a significant advance in unified multimodal AI. Its architecture, which combines CLIP feature diffusion and flow matching with a sequential training strategy and curated datasets, delivers state-of-the-art performance in both image understanding and generation. Its open-source release should further accelerate innovation in this domain.

References

- Research Paper: https://arxiv.org/abs/2505.09568

- GitHub Repo: https://github.com/JiuhaiChen/BLIP3o

- Blog Post by Salesforce: https://www.salesforce.com/blog/blip3/

- Hugging Face Paper Page: https://huggingface.co/papers/2505.09568
