BERT, short for Bidirectional Encoder Representations from Transformers, was one of the game-changing NLP models when it came out in 2018. BERT’s capabilities for sentiment classification, text summarization, and question answering made it look like a one-stop NLP model. Although newer and larger language models have since appeared, BERT is still relevant, and it is worthwhile to learn its architecture, approach, and capabilities.

This post explores BERT and the significant impact it has had on natural language processing and understanding. We will cover the foundational concepts, operational mechanisms, and pretraining strategies that make it a standout in the NLP community. We will also run inference with pretrained BERT models and fine-tune BERT to analyze movie reviews.

What is BERT?

BERT, which stands for Bidirectional Encoder Representations from Transformers, marked a major milestone in AI-based language understanding. Developed by researchers at Google in 2018, it is a general-purpose language representation model that learns the context of words from large amounts of unlabeled text. Google later applied it to Search to better understand the context of words in queries and improve the relevance of results.

Before BERT, NLP models had substantial limitations. They typically processed the words in a sentence in a single direction, either left-to-right or right-to-left, which restricted their understanding of context and language nuances. BERT takes a different approach. It employs a technique known as bidirectional training, which allows the model to analyze text from both directions at once and build a richer understanding of context and semantics than the unidirectional models that preceded it.

This advancement brought significant improvements in a range of language tasks such as sentence completion, sentiment analysis, and question answering, among others. In simpler terms, BERT captures more of the subtleties of language than earlier models, bringing it closer to the way a human reader uses context.

How Does BERT Work?

The following are the most important components of BERT which contribute to its high performance.

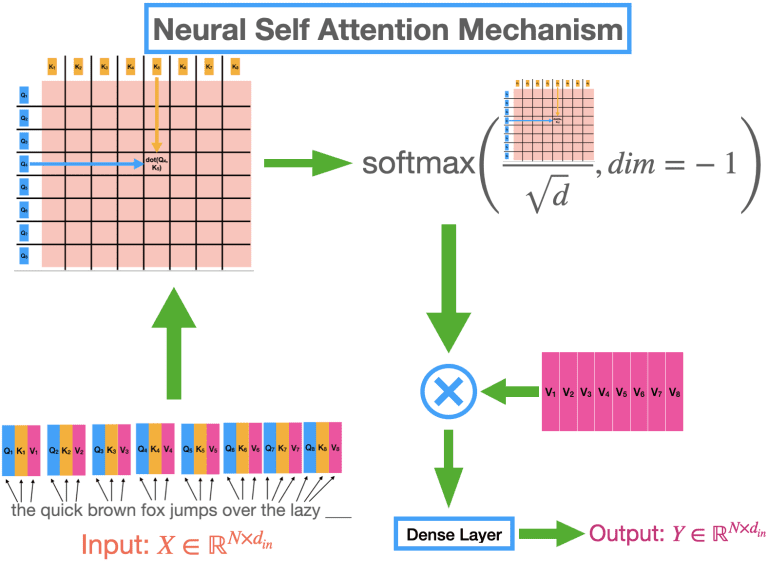

Transformers and Attention Mechanism

At its core, BERT is built upon ‘Transformers,’ a neural network architecture specifically designed to handle ordered sequences of data, such as natural language, making it perfect for NLP tasks. What makes Transformers unique is their ‘attention mechanism,’ which weighs the significance of different words in a sentence, allowing the model to focus more on what is relevant in the text.
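To make the idea of weighing words concrete, here is a minimal sketch of scaled dot-product attention in plain Python. The three token vectors are made-up toy values, and a real Transformer adds learned query, key, and value projections across multiple heads, which this sketch leaves out.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is the weighted average of the value vectors.
    output = [
        sum(w * value[i] for w, value in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return weights, output

# Toy 2-dimensional vectors standing in for three tokens (illustrative values only).
tokens = ["he", "went", "gym"]
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Let the first token attend over all three tokens.
weights, output = attention(vectors[0], vectors, vectors)
for token, weight in zip(tokens, weights):
    print(f"{token}: {weight:.3f}")
```

Tokens whose vectors align with the query receive larger weights, which is what "focusing on what is relevant" amounts to numerically.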

Bidirectional Contextual Understanding

BERT’s prime feature is its bidirectionality. Unlike traditional NLP models, BERT attends to context in both directions (left-to-right and right-to-left), which allows for a deeper understanding of context. This feature enables the model to make more informed predictions about a missing word in a sentence. For instance, in the sentence “He went to the ___,” a left-to-right model sees only the words before the blank and might predict “store,” “gym,” or “movie,” whereas BERT can use the rest of the sentence to predict a more contextually appropriate word.

Suppose the sentence is “He went to the ___ to workout”. Because BERT also analyzes the words after the blank, it can infer the word “gym” with more confidence.
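The difference in available context can be sketched in a few lines. The helper name visible_context is hypothetical, and the snippet only shows which words each kind of model can look at, not how a model turns them into a prediction.

```python
def visible_context(tokens, position, bidirectional):
    """Return the tokens a model may look at when predicting tokens[position]."""
    left = tokens[:position]
    right = tokens[position + 1:] if bidirectional else []
    return left + right

tokens = ["he", "went", "to", "the", "[MASK]", "to", "workout"]
blank = tokens.index("[MASK]")

# A left-to-right model only sees the words before the blank.
print(visible_context(tokens, blank, bidirectional=False))
# ['he', 'went', 'to', 'the']

# A bidirectional model also sees "to workout", which points towards "gym".
print(visible_context(tokens, blank, bidirectional=True))
# ['he', 'went', 'to', 'the', 'to', 'workout']
```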

Fine-Tuning for Specific Tasks

Although BERT is pre-trained on a large corpus of text, it is also designed to be fine-tuned for specific NLP tasks. These include:

- Question answering

- Sentiment analysis

Fine-tuning involves adding a final output layer to the BERT model, then training the model further on data specific to the task. This versatility and adaptability are what make BERT particularly powerful and widely applicable in various domains.
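As a rough illustration of what a final output layer means, the sketch below applies a single linear layer and a softmax to a sentence vector. The 4-dimensional vector and the weights are made-up values; BERT base produces 768-dimensional vectors, and the weights would be learned during fine-tuning.

```python
import math

def classification_head(pooled, weights, bias):
    """Apply one linear layer followed by softmax to a pooled sentence vector."""
    logits = [
        sum(w * x for w, x in zip(row, pooled)) + b
        for row, b in zip(weights, bias)
    ]
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Toy 4-dimensional sentence vector (illustrative values only).
pooled = [0.5, -1.0, 0.25, 2.0]
# One row of weights per class (2 classes); values are made up for illustration.
weights = [[0.1, 0.4, -0.2, -0.3], [-0.1, -0.4, 0.2, 0.3]]
bias = [0.0, 0.0]

probabilities = classification_head(pooled, weights, bias)
print(probabilities)
```

Only this small layer is new; everything underneath it starts from the pretrained weights, which is why fine-tuning needs comparatively little task data.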

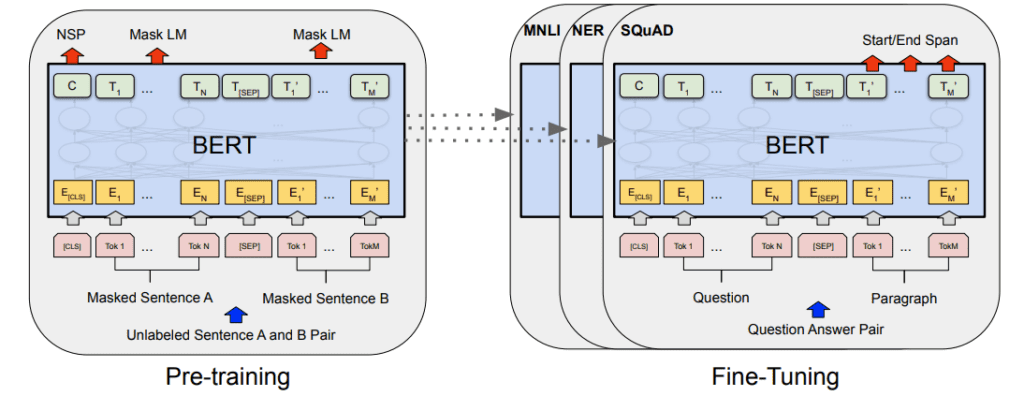

BERT’s Pretraining Strategy

BERT is pretrained on two tasks: Masked Language Modeling (MLM) and Next Sentence Prediction (NSP).

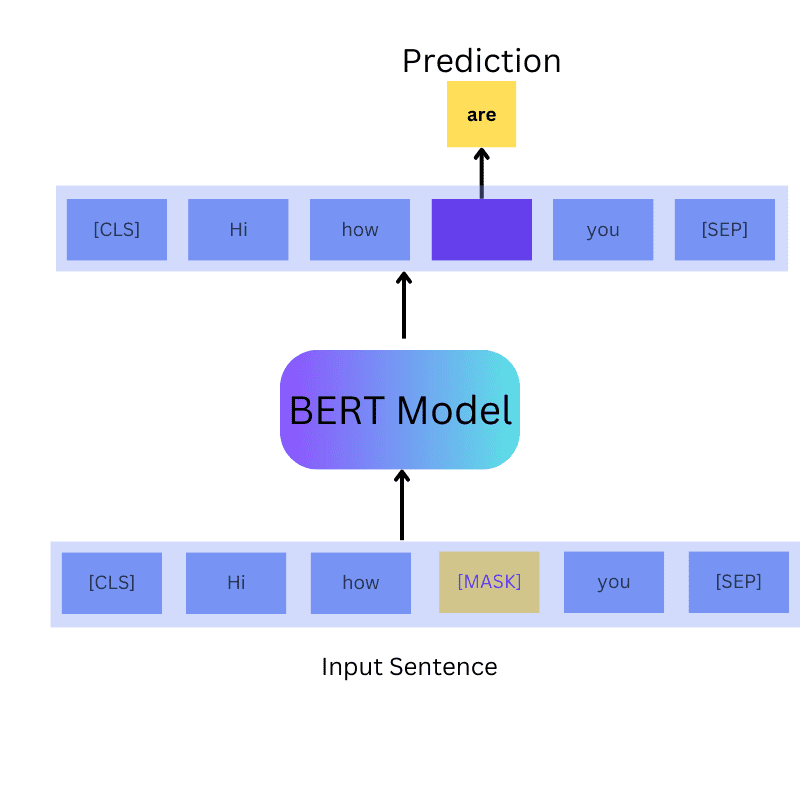

Masked Language Model (MLM)

Masked Language Model (MLM) is a key component of pretraining BERT. During this phase, BERT randomly masks a percentage of the tokens in the input (15% in the original paper) and then attempts to predict those masked tokens based on the context provided by the non-masked ones. This process helps the model learn a rich understanding of language context.
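The masking step can be sketched as follows. The 15% selection rate and the 80/10/10 replacement rule come from the original BERT paper rather than from this post, and the function name mask_tokens and the toy vocabulary are assumptions made for illustration.

```python
import random

MASK_TOKEN = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (masked_tokens, labels); labels are None where nothing is predicted."""
    rng = random.Random(seed)
    masked, labels = [], []
    for token in tokens:
        if rng.random() < mask_prob:
            labels.append(token)  # the model must recover the original token
            roll = rng.random()
            if roll < 0.8:
                masked.append(MASK_TOKEN)         # 80%: replace with [MASK]
            elif roll < 0.9:
                masked.append(rng.choice(vocab))  # 10%: replace with a random token
            else:
                masked.append(token)              # 10%: keep the token unchanged
        else:
            masked.append(token)
            labels.append(None)  # this position is ignored by the loss
    return masked, labels

tokens = "the quick brown fox jumps over the lazy dog".split()
masked, labels = mask_tokens(tokens, vocab=tokens, mask_prob=0.15, seed=0)
print(masked)
print(labels)
```

The labels list keeps the original token only at the selected positions, so the training loss is computed on those positions alone.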

Next Sentence Prediction (NSP)

Alongside MLM, BERT is also pretrained using Next Sentence Prediction. In this process, the model is fed pairs of sentences and must predict if the second sentence in the pair is the subsequent sentence to the first. This pretraining task enables BERT to understand the relationships between sentences, a crucial skill for many NLP tasks, such as summarization or question-answering.
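A simplified sketch of how such sentence pairs could be built is shown below. The function name make_nsp_pairs is hypothetical, and drawing negatives from the same short document is a simplification; the original setup samples the unrelated sentence from elsewhere in the corpus.

```python
import random

def make_nsp_pairs(sentences, seed=0):
    """Build (sentence_a, sentence_b, is_next) examples from an ordered document."""
    rng = random.Random(seed)
    pairs = []
    for i in range(len(sentences) - 1):
        if rng.random() < 0.5:
            # Positive example: the true next sentence.
            pairs.append((sentences[i], sentences[i + 1], True))
        else:
            # Negative example: a random sentence that is not the true next one.
            candidates = [s for j, s in enumerate(sentences) if j not in (i, i + 1)]
            pairs.append((sentences[i], rng.choice(candidates), False))
    return pairs

document = [
    "He went to the gym.",
    "He lifted weights for an hour.",
    "Then he drove home.",
    "Dinner was already on the table.",
]

for sentence_a, sentence_b, is_next in make_nsp_pairs(document):
    print(is_next, "|", sentence_a, "->", sentence_b)
```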

Interestingly, BERT is trained on both tasks simultaneously during the pretraining stage.

Inference using Pretrained BERT Models

Hugging Face already provides pretrained models for several tasks. These include the original pretrained BERT for Masked Language Modeling and variations of BERT for sentiment analysis.

Let’s take a look at the Hugging Face Transformers Pipeline and how to leverage these pretrained models.

Before moving forward, make sure that you have installed the Hugging Face transformers library using the following command.

pip install -U transformers

Masked Language Modeling Inference

We will start with the Masked Language Modeling Task. For most of the tasks, the Transformers library provides a pipeline module that we can easily use. First, you have to import the module.

from transformers import pipeline

Next, we need to initialize the pipeline for the Masked Language Modeling Task.

unmasker = pipeline(task='fill-mask', model='bert-base-uncased')

In the above code block, pipeline accepts two arguments.

- task: Here, we need to provide the task that we want to carry out. For our use case, it is 'fill-mask'. However, it supports several other tasks such as text classification, text generation, and image classification, to name a few. You may take a look at the official docs to know more about them.

- model: This argument accepts the name of the model that we want to use in string format. Hugging Face already has the BERT pretrained model for Masked Language Modeling and that’s what we are using here.

Now, we can access the pipeline using the unmasker variable. All we need to do is provide a sentence with the [MASK] token. Here is an example.

unmasker('This is a [MASK] car.')

The model will try to replace the [MASK] token with the most appropriate word. By default it outputs five possibilities. The following block shows the output for the above sentence.

[{'score': 0.07382672280073166,

'token': 2047,

'token_str': 'new',

'sequence': 'this is a new car.'},

{'score': 0.05252403765916824,

'token': 3835,

'token_str': 'nice',

'sequence': 'this is a nice car.'},

{'score': 0.04461159184575081,

'token': 4438,

'token_str': 'classic',

'sequence': 'this is a classic car.'},

{'score': 0.037506841123104095,

'token': 2998,

'token_str': 'sports',

'sequence': 'this is a sports car.'},

{'score': 0.034782662987709045,

'token': 4145,

'token_str': 'concept',

'sequence': 'this is a concept car.'}]

The pipeline outputs them in decreasing order of score. As we can see, all the words fit the sentence, and the model considers the word new the most likely.
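Since the result is a plain list of dictionaries, it is easy to post-process. The sketch below works on two entries copied from the output above; the helper names best_prediction and format_predictions are made up for this example.

```python
# Two entries copied from the output above, trimmed to the fields used here.
predictions = [
    {"score": 0.07382672280073166, "token_str": "new", "sequence": "this is a new car."},
    {"score": 0.05252403765916824, "token_str": "nice", "sequence": "this is a nice car."},
]

def best_prediction(predictions):
    """Return the candidate with the highest score, regardless of list order."""
    return max(predictions, key=lambda item: item["score"])

def format_predictions(predictions):
    """Render each candidate word with its score as a percentage."""
    return [f"{item['token_str']}: {item['score']:.1%}" for item in predictions]

print(best_prediction(predictions)["sequence"])  # this is a new car.
print(format_predictions(predictions))           # ['new: 7.4%', 'nice: 5.3%']
```

Note that even the top candidate scores only about 7%, so the model spreads its probability over many plausible words for this sentence.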

This was a simple example showcasing the working of the pipeline module from transformers for MLM using BERT.

Sentiment Analysis Inference using Pretrained BERT Model

Hugging Face also provides several variations of BERT. A common example is sentiment analysis where we use a BERT model to categorize a sentence as positive or negative.

One such model is the DistilBERT model, which has been fine-tuned on the SST-2 (Stanford Sentiment Treebank) dataset.

DistilBERT is a smaller version of BERT, designed and trained for the purpose of distilling the knowledge of BERT into a more compact and faster model. It retains most of BERT’s performance capabilities while being smaller and faster. This makes it more accessible for limited computational resources.

The approach for sentiment analysis is going to be fairly similar. First, we need to initialize the pipeline.

sentiment_analysis = pipeline(task='sentiment-analysis')

This time, however, we initialize it with the 'sentiment-analysis' task. You may notice that we have not provided a model name here. By default, the sentiment analysis task uses a predefined model. It is the DistilBERT model fine-tuned on the SST-2 dataset. It is also possible to provide any other model from the Hugging Face model library. However, for now, let’s move forward with the default model.

Let’s define two sentences, one with a positive sentiment and one with a negative sentiment.

sentences = [

'The movie was great.',

'It was a bad experience at the amusement park.'

]

The sentiment_analysis pipeline can accept lists containing multiple sentences. Let’s invoke the pipeline by passing the above list to it.

sentiment_analysis(sentences)

The following is the output.

[{'label': 'POSITIVE', 'score': 0.9998748302459717},

{'label': 'NEGATIVE', 'score': 0.9997939467430115}]

The model outputs a label, either POSITIVE or NEGATIVE, along with a score for each sentence. It predicts both sentences correctly with very high confidence.

The above examples give us an overview of how simple, yet powerful, the Hugging Face transformers library is. For our next objective, we will take a step further and fine-tune the BERT model on a simple movie review classification dataset.

Training BERT To Analyze IMDB Movie Reviews

We have already discussed the basics of BERT and how to use the pretrained BERT models for MLM and sentiment analysis. We got firsthand experience of how simple it is with the Transformers library. The utility of the Transformers library extends beyond just inference. In fact, we have access to thousands of datasets that we can load, and we can fine-tune any BERT model on them with just a few lines of code. In the following section, we will explore this aspect in detail.

To get a taste of how simple it is to train models using the Transformers library, we will fine-tune the BERT model on the IMDB Movie review dataset. This is meant to familiarize ourselves with the pipeline, rather than for obtaining an in-depth knowledge of the library.

The IMDB movie review dataset contains 25,000 training and 25,000 test samples split across two classes: Positive and Negative. Each review belongs to exactly one of the two classes. We will use the test split for validation during training.

Let’s get started with the process.

Installing the Dependencies

A few more dependencies are required when performing training with the Transformers library. The following code cell installs the necessary libraries.

!pip install -U transformers datasets evaluate accelerate

!pip install scikit-learn

- datasets: Also known as the huggingface_datasets library, it provides a lightweight and extensible library to easily share and access datasets and evaluation metrics for machine learning tasks. We will use it to load the IMDB dataset.

- evaluate: This package is often used for evaluation purposes in machine learning workflows, to assess the performance of models against certain benchmarks or datasets.

- accelerate: Hugging Face’s accelerate library simplifies the process of running machine learning scripts on multiple devices, like multi-GPU setups or TPUs. It’s used to speed up model training by efficiently utilizing hardware.

Furthermore, scikit-learn is needed internally by the evaluate library to compute metrics such as accuracy.

Imports

Next, we import all the necessary packages and modules.

from datasets import load_dataset

from transformers import (

AutoTokenizer,

DataCollatorWithPadding,

AutoModelForSequenceClassification,

TrainingArguments,

Trainer,

pipeline

)

import evaluate

import numpy as np

There’s no need to delve deeply into each package at the moment. We’ll certainly explore this in a future article where we’ll provide an in-depth guide to training language models. For now, we import all the necessary modules from the transformers library that we need to train BERT.

Hyperparameters

While training the BERT model, we will need to define a few hyperparameters for the dataset and model preparation. The following code block defines all of them.

BATCH_SIZE = 32

NUM_PROCS = 32

LR = 0.00005

EPOCHS = 2

We will use a batch size of 32 along with 32 parallel processes for preprocessing the dataset. If your machine has fewer CPU cores, lower NUM_PROCS accordingly. The learning rate is going to be 0.00005 and we will train the BERT model for just two epochs.
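These values also determine how many optimizer steps the training run takes. The short calculation below is a sketch that assumes the 25,000 training samples mentioned earlier, a single device, and no gradient accumulation; with several GPUs the per-device batch size applies to each one, so the step count would be lower.

```python
import math

TRAIN_SAMPLES = 25000  # size of the IMDB training split
BATCH_SIZE = 32
EPOCHS = 2

# Assumes a single device and no gradient accumulation.
# The last, smaller batch still counts as a step, hence the ceiling.
steps_per_epoch = math.ceil(TRAIN_SAMPLES / BATCH_SIZE)
total_steps = steps_per_epoch * EPOCHS

print(steps_per_epoch)  # 782
print(total_steps)      # 1564
```

Under those assumptions, the final training step is 1564, which is the number that would appear in the name of the last saved checkpoint.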

Download the IMDB Dataset

We can use the load_dataset function to load the IMDB dataset. It will be downloaded the first time if not already present.

train_dataset = load_dataset("imdb", split='train')

test_dataset = load_dataset("imdb", split='test')

The load_dataset function takes a string name for the dataset to load and the split. For easier management and processing of the dataset, we load the train and test sets separately.

Printing a dataset shows its feature names and the number of samples it contains.

print(train_dataset)

print(test_dataset)

Following is the expected output.

Dataset({

features: ['text', 'label'],

num_rows: 25000

})

Dataset({

features: ['text', 'label'],

num_rows: 25000

})

Each set contains a text column and a label column, holding the review text and the integer class label respectively.

To get a better understanding, we can print a sample from the training set.

# Visualize a sample.

train_dataset[0]

Here is the truncated output.

{'text': 'I rented I AM CURIOUS-YELLOW from my video store because of all the controversy that surrounded it when it was first released in 1967. I also heard that at first it was seized by ... of Swedish cinema. But really, this film doesn\'t have much of a plot.',

'label': 0}

As expected, the text key holds the movie review and the label key holds the class label. For the IMDB dataset, the 0 label defines the negative class and the 1 label defines the positive class. The above sample has a label of 0, indicating that it is a negative review.

Let’s define the dataset information for the class labels.

id2label = {

0: "Negative",

1: "Positive",

}

label2id = {

"Negative": 0,

"Positive": 1,

}

The above two dictionaries will be used during the training and inference process.
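As a small illustration of the role these dictionaries play at inference time, the sketch below turns class probabilities into a readable label. The function decode_prediction and the probability values are hypothetical and not real model output.

```python
id2label = {0: "Negative", 1: "Positive"}
# The reverse mapping can be derived instead of written out by hand.
label2id = {label: idx for idx, label in id2label.items()}

def decode_prediction(probabilities):
    """Map the highest-probability class index to its readable label."""
    best_id = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return {"label": id2label[best_id], "score": probabilities[best_id]}

# Hypothetical class probabilities for one review (not real model output).
print(decode_prediction([0.02, 0.98]))  # {'label': 'Positive', 'score': 0.98}
```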

Tokenize the Dataset

NLP models cannot process raw text directly. The text needs to be converted to numerical values. Splitting the text into tokens is called tokenization, and mapping those tokens to integer IDs is called numericalization.

For our current purpose, let’s load the BERT tokenizer and tokenize the training and test sets.

tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")

# Helper function for preprocessing.

def preprocess_function(examples):
    return tokenizer(examples["text"], truncation=True)

tokenized_train = train_dataset.map(

preprocess_function,

batched=True,

batch_size=BATCH_SIZE,

num_proc=NUM_PROCS

)

tokenized_test = test_dataset.map(

preprocess_function,

batched=True,

batch_size=BATCH_SIZE,

num_proc=NUM_PROCS

)

# Initialize data collator.

data_collator = DataCollatorWithPadding(tokenizer=tokenizer)

- tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased"): This line initializes a tokenizer from the Hugging Face transformers library that corresponds to the bert-base-uncased model. This tokenizer knows how to convert a text into tokens that the BERT model can understand, including how to split words into subwords (if necessary) and convert them into unique IDs. The bert-base-uncased model doesn’t take into account the upper and lower cases of the text.

- preprocess_function: This is a helper function that takes batches of sentences and encodes them into the format expected by BERT. The tokenizer is called on the text, ensuring that the text is prepared correctly. The truncation=True argument means that the function will cut off the sequence at the maximum length that BERT can handle.

- Next, we prepare the tokenized_train and tokenized_test sets. The datasets that we get from here have been tokenized and numericalized.
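To see what subword splitting, truncation, and ID conversion look like, here is a toy sketch of greedy longest-match-first splitting in the style of WordPiece. The nine-entry vocabulary, the max_length of 8, and the function names are assumptions for illustration; the real tokenizer uses a vocabulary of roughly 30,000 entries and handles punctuation and other details that are skipped here.

```python
def wordpiece(word, vocab, unk_token="[UNK]"):
    """Greedy longest-match-first subword split, in the style of WordPiece."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        match = None
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # marks a continuation piece
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk_token]  # no piece fits, so the whole word is unknown
        pieces.append(match)
        start = end
    return pieces

def encode(text, vocab, max_length=8):
    """Lowercase, split into subwords, truncate, add special tokens, map to IDs."""
    tokens = []
    for word in text.lower().split():
        tokens.extend(wordpiece(word, vocab))
    tokens = tokens[: max_length - 2]  # leave room for [CLS] and [SEP]
    tokens = ["[CLS]"] + tokens + ["[SEP]"]
    return tokens, [vocab[token] for token in tokens]

# Tiny hypothetical vocabulary mapping each token to an integer ID.
vocab = {t: i for i, t in enumerate(
    ["[UNK]", "[CLS]", "[SEP]", "the", "movie", "was", "un", "##watch", "##able"]
)}

tokens, ids = encode("The movie was unwatchable", vocab)
print(tokens)  # ['[CLS]', 'the', 'movie', 'was', 'un', '##watch', '##able', '[SEP]']
print(ids)     # [1, 3, 4, 5, 6, 7, 8, 2]
```

The word "unwatchable" is not in the vocabulary, yet it is still represented through three known pieces instead of being mapped to an unknown token.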

Evaluation Metrics

We will monitor the accuracy metric while training the BERT model.

accuracy = evaluate.load('accuracy')

def compute_metrics(eval_pred):
    predictions, labels = eval_pred
    # Pick the class with the highest logit for each sample.
    predictions = np.argmax(predictions, axis=1)
    return accuracy.compute(predictions=predictions, references=labels)

The above code uses the evaluate library to load the accuracy metric. The compute_metrics function acts as a callback that we will pass to the trainer, which will calculate the accuracy on the validation set after each epoch.
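For intuition, the same computation can be written out in plain Python. This is only a sketch of what the argmax and accuracy steps amount to; the logits below are made-up values rather than real model output.

```python
def argmax(row):
    """Index of the largest value in a list of class scores."""
    return max(range(len(row)), key=lambda i: row[i])

def accuracy_from_logits(logits, labels):
    """Fraction of examples whose highest-scoring class matches the label."""
    predictions = [argmax(row) for row in logits]
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)

# Hypothetical logits for four reviews; columns are (Negative, Positive).
logits = [[2.1, -1.3], [-0.4, 0.9], [0.3, 0.2], [-1.5, 1.7]]
labels = [0, 1, 1, 1]

# The third review is predicted as Negative but labelled Positive.
print(accuracy_from_logits(logits, labels))  # 0.75
```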

Preparing the BERT Model

Preparing models in Hugging Face is straightforward. We just need to call the from_pretrained method along with the appropriate arguments.

model = AutoModelForSequenceClassification.from_pretrained(

"bert-base-uncased",

num_labels=2,

id2label=id2label,

label2id=label2id

)

It is necessary to ensure that the tokenizer name and the model name match. This ensures that the correct tokenization has been applied according to the model being used for training. As there are 2 classes in the dataset, num_labels is 2. We also pass the optional id2label and label2id arguments while preparing the model. These will be most helpful when running inference after training.

This builds a pretrained BERT base model with 109.5 million parameters.
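The 109.5 million figure can be reproduced with a little arithmetic. The sketch below adds up the parameters of the standard BERT base configuration plus the 2-class output layer. The configuration values (a vocabulary of 30,522 tokens, hidden size 768, 12 layers, feed-forward size 3,072, 512 positions, 2 segment types) are not stated in this post; they are the published bert-base-uncased settings.

```python
# Configuration values of bert-base-uncased, plus the 2 labels used here.
VOCAB, HIDDEN, LAYERS, FFN, MAX_POS, SEGMENTS, NUM_LABELS = 30522, 768, 12, 3072, 512, 2, 2

def linear(n_in, n_out):
    """Parameters of a dense layer: weight matrix plus bias."""
    return n_in * n_out + n_out

layer_norm = 2 * HIDDEN  # scale and shift vectors

# Token, position, and segment embeddings followed by a layer norm.
embeddings = (VOCAB + MAX_POS + SEGMENTS) * HIDDEN + layer_norm

encoder_layer = (
    4 * linear(HIDDEN, HIDDEN)  # query, key, value, and attention output projections
    + layer_norm
    + linear(HIDDEN, FFN)       # feed-forward expansion
    + linear(FFN, HIDDEN)       # feed-forward projection back
    + layer_norm
)
pooler = linear(HIDDEN, HIDDEN)
classifier = linear(HIDDEN, NUM_LABELS)

total = embeddings + LAYERS * encoder_layer + pooler + classifier
print(f"{total:,}")  # 109,483,778
```

Most of the parameters sit in the 12 encoder layers, while the newly added classification layer contributes only 1,538 of them.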

Training Arguments

Before we can start the training, we need to define the necessary parameters using the TrainingArguments class.

training_args = TrainingArguments(

output_dir="imdb_classification",

learning_rate=LR,

per_device_train_batch_size=BATCH_SIZE,

per_device_eval_batch_size=BATCH_SIZE,

num_train_epochs=EPOCHS,

weight_decay=0.01,

evaluation_strategy="epoch",

save_strategy="epoch",

load_best_model_at_end=True,

)

- All the outputs will be stored inside the imdb_classification folder which will be created during training.

- We also pass down the learning rate, batch size for training and validation, number of training epochs, weight decay, and evaluation strategy.

The evaluation will take place after every epoch because evaluation_strategy is set to epoch. Note that recent releases of the Transformers library rename this argument to eval_strategy, so use that name if evaluation_strategy is rejected.

Training the BERT Model

To train the model, we need to initialize the Trainer class first.

trainer = Trainer(

model=model,

args=training_args,

train_dataset=tokenized_train,

eval_dataset=tokenized_test,

tokenizer=tokenizer,

data_collator=data_collator,

compute_metrics=compute_metrics,

)

Here we pass the model, the training arguments defined above, the tokenized datasets, the tokenizer, the data collator, and the metrics function as arguments.

The trainer object contains a train method that we can call to start the training.

history = trainer.train()

All the resulting values will be stored in the history object. The summary of the training process is as below.

| Epoch | Training Loss | Validation Loss | Accuracy |
| --- | --- | --- | --- |
| 1 | 0.272400 | 0.212047 | 0.924360 |
| 2 | 0.097800 | 0.188838 | 0.943120 |

By the end of the second epoch, the model has already reached 94% validation accuracy. This shows the efficacy of the Transformer architecture and the BERT pretrained model.

Running Inference

For running inference, let’s load the latest checkpoint first. The history object holds a global_step attribute that stores the final training step, which is also the step at which the last checkpoint was saved. We can use it to load the latest model from the imdb_classification directory.

model = AutoModelForSequenceClassification.from_pretrained(f"imdb_classification/checkpoint-{history.global_step}")

Next, just like in the earlier inference sections, we use the pipeline to load the task and model. In this case, this is a text classification task.

classify = pipeline(task='text-classification', model=model, tokenizer=tokenizer)

The following is a real-world review stored in a text file.

A grim, gritty, and gripping super-noir, The Batman ranks among the Dark Knight's bleakest -- and most thrillingly ambitious -- live-action outings.

This is quite challenging because at first glance it may seem like a negative review, while in reality it is a positive one.

Let’s load the file and run the inference.

# Read the review; the with block closes the file automatically.

with open('inference_data/text1.txt') as file:
    content = file.read()

classify(content)

Following is the result that the model outputs.

[{'label': 'Positive', 'score': 0.9977442026138306}]

Conclusion

Concluding our introduction to BERT, we began with an overview of the BERT model while discussing the architecture and pretraining strategy. We proceeded to examine the process of inference with pretrained BERT models. Lastly, we also trained a BERT model on the simple IMDB dataset to familiarize ourselves with the training pipeline.

The world of Transformers is huge and a lot more interesting articles are on the anvil featuring intricate and engaging datasets. Stay tuned for what’s to come!

Let us know in the comments what kind of articles you would like to know more about in NLP and Transformers.

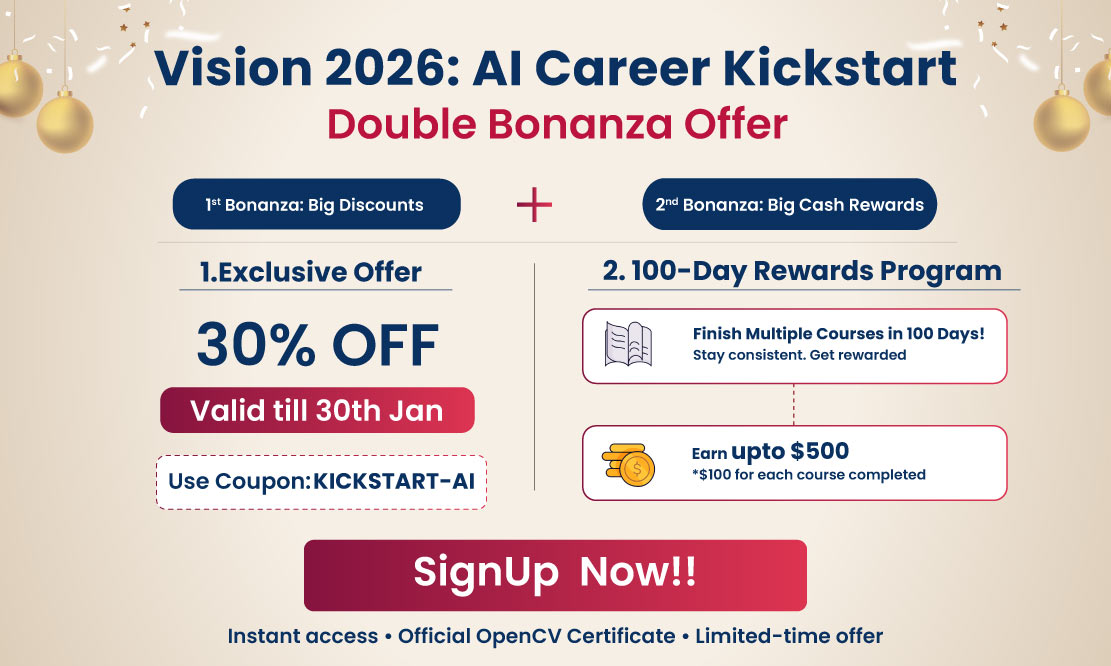

100K+ Learners

Join Free OpenCV Bootcamp3 Hours of Learning