In deep learning, Batch Normalization (BatchNorm) and Dropout are two widely used techniques for improving model performance: BatchNorm stabilizes training and speeds up convergence, while Dropout helps prevent overfitting. While both have their individual advantages, combining them in a single neural network has been the subject of much debate. Experimentation is key, and by understanding their roles and interactions, we can tune our models for better performance.

This blog post aims to provide a comprehensive overview of BatchNorm and Dropout, emphasizing their interplay and offering practical advice for utilizing them effectively in deep learning models. We’ll also provide insights into best practices for applying these techniques, backed by experimental results.

- The Role of Batch Normalization and Dropout

- Challenges of Combining BatchNorm and Dropout

- When Combining BatchNorm and Dropout Can Work

- Best Practices for Using BatchNorm and Dropout Together

- Visualizing the Experimental Results

- Conclusion

The Role of Batch Normalization and Dropout

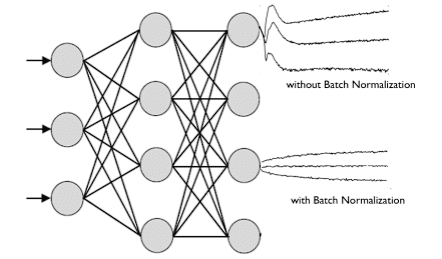

Batch Normalization (BatchNorm) is designed to stabilize and speed up training by normalizing each feature’s activations over the mini-batch. It was introduced to mitigate internal covariate shift, and in practice it makes training more efficient and allows for higher learning rates. The technique normalizes the activations of each layer using the mean and variance of the mini-batch, then applies learned scale and shift parameters to refine the outputs.
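As a rough illustration of the normalization step described above, the sketch below computes training-mode batch normalization by hand and compares it with PyTorch’s `nn.BatchNorm1d`. The helper name `batch_norm_train` and the tensor sizes are made up for this example, and the sketch leaves out the running-statistics update that the real layer performs for use at inference.

```python
import torch
import torch.nn as nn

def batch_norm_train(x, gamma, beta, eps=1e-5):
    """Hypothetical helper: training-mode batch norm over the batch dimension."""
    mean = x.mean(dim=0)                    # per-feature mean of the mini-batch
    var = x.var(dim=0, unbiased=False)      # per-feature (biased) variance
    x_hat = (x - mean) / torch.sqrt(var + eps)
    return gamma * x_hat + beta             # learned scale and shift

torch.manual_seed(0)
x = torch.randn(32, 4) * 3.0 + 5.0          # 32 samples, 4 features (arbitrary sizes)

bn = nn.BatchNorm1d(4)                      # scale starts at 1, shift at 0
bn.train()
manual = batch_norm_train(x, bn.weight.detach(), bn.bias.detach(), eps=bn.eps)
reference = bn(x).detach()

max_diff = (manual - reference).abs().max().item()
out_mean = manual.mean(dim=0)               # close to 0 for every feature
out_var = manual.var(dim=0, unbiased=False) # close to 1 for every feature
print(max_diff, out_mean, out_var)
```

Whatever the scale and offset of the input, each feature leaves the layer with roughly zero mean and unit variance before the learned scale and shift are applied.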

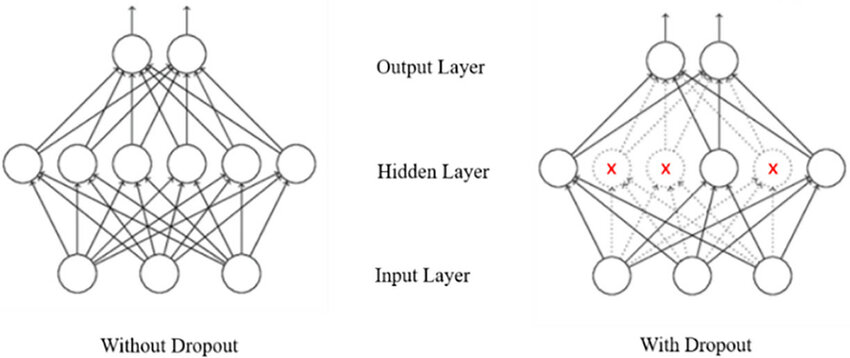

On the other hand, Dropout is a regularization technique that helps prevent overfitting by randomly setting a fraction of activations to zero during training. This forces the network to learn redundant representations and increases its robustness to noise, making it less likely to memorize specific features from the training data.
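To make the behaviour concrete, here is a minimal sketch using PyTorch’s `nn.Dropout`. The dropout rate of 0.5 and the all-ones input are arbitrary choices for illustration. In training mode the layer zeros elements at random and rescales the survivors by `1 / (1 - p)`; in evaluation mode it passes the input through unchanged.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
drop = nn.Dropout(p=0.5)
x = torch.ones(10000)

drop.train()
y_train = drop(x)
zero_fraction = (y_train == 0).float().mean().item()  # roughly p
kept_values = y_train[y_train != 0].unique()          # survivors scaled to 1 / (1 - p)

drop.eval()
y_eval = drop(x)                                      # identity at inference

print(zero_fraction, kept_values, torch.equal(y_eval, x))
```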

Both techniques have their merits individually, but the question arises: What happens when you combine them in the same model?

Challenges of Combining BatchNorm and Dropout

While both BatchNorm and Dropout are effective, their interaction can sometimes cause issues. The primary challenge lies in how Dropout changes the distribution of the activations that BatchNorm has to normalize.

BatchNorm normalizes the activations using the mean and variance computed across the mini-batch during training, and it keeps running estimates of these statistics for use at inference. Dropout randomly zeros out some activations during training but is switched off at inference, so a BatchNorm layer that sits after Dropout sees activations with a different variance at inference than the one its running statistics were estimated on. This mismatch can lead to unstable training and worse results at inference.
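One way to make this disturbance concrete is to measure the variance of a Dropout layer’s output in training mode versus evaluation mode. The sketch below assumes standard-normal inputs and a dropout rate of 0.5, both chosen only for illustration. A BatchNorm layer placed after this Dropout would estimate its statistics on the training-mode output but receive the evaluation-mode output at inference.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
p = 0.5                                # illustrative dropout rate
drop = nn.Dropout(p=p)
x = torch.randn(1_000_000)             # zero mean, unit variance

drop.train()
var_train = drop(x).var().item()       # about 1 / (1 - p) because survivors are rescaled

drop.eval()
var_eval = drop(x).var().item()        # about 1: dropout is a no-op at inference

print(var_train, var_eval)
```

For zero-mean inputs, the training-mode variance is inflated by a factor of `1 / (1 - p)`, so the gap grows as the dropout rate increases.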

Furthermore, BatchNorm already acts as a regularizer to some extent, because each example is normalized with noisy mini-batch statistics. The extra randomness that Dropout introduces may therefore be redundant, or even counterproductive, in scenarios where BatchNorm already provides sufficient regularization.

When Combining BatchNorm and Dropout Can Work

Despite the potential challenges, there are situations where combining BatchNorm and Dropout can still be beneficial. The size of the model, the complexity of the task, and the dataset being used are crucial factors in determining whether these techniques will complement each other.

For larger, more complex models with many layers, Dropout can still play a vital role in improving generalization, especially when combined with BatchNorm. In such cases, BatchNorm ensures stable learning by normalizing activations, while Dropout helps reduce overfitting by introducing randomness during training.

The effectiveness of combining BatchNorm and Dropout often depends on hyperparameter tuning and experimentation. Finding the right dropout rate, batch size, and layer placements can significantly impact the performance of the model. For example, testing different configurations of these techniques in convolutional layers and fully connected layers can yield useful insights into how they interact.

Best Practices for Using BatchNorm and Dropout Together

While BatchNorm and Dropout can complement each other in some cases, there are a few best practices that should be kept in mind to avoid training instability:

- Layer Placement:

When using both techniques, it’s generally recommended to apply BatchNorm before Dropout in the network architecture. Alternatively, Dropout can be applied only to layers that do not use BatchNorm (e.g., fully connected layers following convolutional layers with BatchNorm).

- Minimize Redundancy:

BatchNorm itself acts as a form of regularization, so adding Dropout to layers where BatchNorm is already applied may be redundant. If you do need Dropout, apply it selectively, in places where it provides additional regularization without interfering with BatchNorm.

- Experimentation:

As the effectiveness of these techniques can vary across different tasks and datasets, experimenting with different configurations (e.g., adjusting dropout rates, batch sizes, and layer types) is crucial. Use cross-validation to determine the optimal configuration for your specific model.
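The layer-placement advice above can be sketched in PyTorch as follows. This is a hypothetical architecture for 28x28 grayscale inputs such as FashionMNIST, not the model used in the experiments. The class name `ConvBlock`, the channel counts, and the dropout rates are all assumptions made for illustration.

```python
import torch
import torch.nn as nn

class ConvBlock(nn.Module):
    """Hypothetical block: Conv -> BatchNorm -> ReLU -> Dropout2d."""
    def __init__(self, in_ch, out_ch, p_drop=0.1):
        super().__init__()
        self.block = nn.Sequential(
            # bias is omitted because BatchNorm adds its own learned shift
            nn.Conv2d(in_ch, out_ch, kernel_size=3, padding=1, bias=False),
            nn.BatchNorm2d(out_ch),   # BatchNorm comes before Dropout
            nn.ReLU(inplace=True),
            nn.Dropout2d(p_drop),
        )

    def forward(self, x):
        return self.block(x)

model = nn.Sequential(
    ConvBlock(1, 16),
    nn.MaxPool2d(2),                  # 28x28 -> 14x14
    ConvBlock(16, 32),
    nn.MaxPool2d(2),                  # 14x14 -> 7x7
    nn.Flatten(),
    nn.Linear(32 * 7 * 7, 64),
    nn.ReLU(inplace=True),
    nn.Dropout(0.5),                  # classifier head uses Dropout only, no BatchNorm
    nn.Linear(64, 10),
)

model.eval()                          # BatchNorm uses running stats, Dropout is disabled
with torch.no_grad():
    logits = model(torch.randn(8, 1, 28, 28))
print(logits.shape)
```

Note that the second block’s BatchNorm still sits downstream of the first block’s Dropout2d, which is one reason to keep the convolutional dropout rate small or to restrict Dropout to the classifier head.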

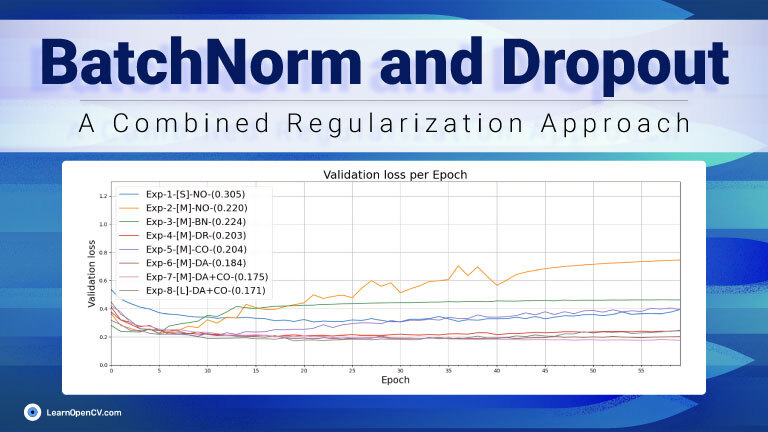

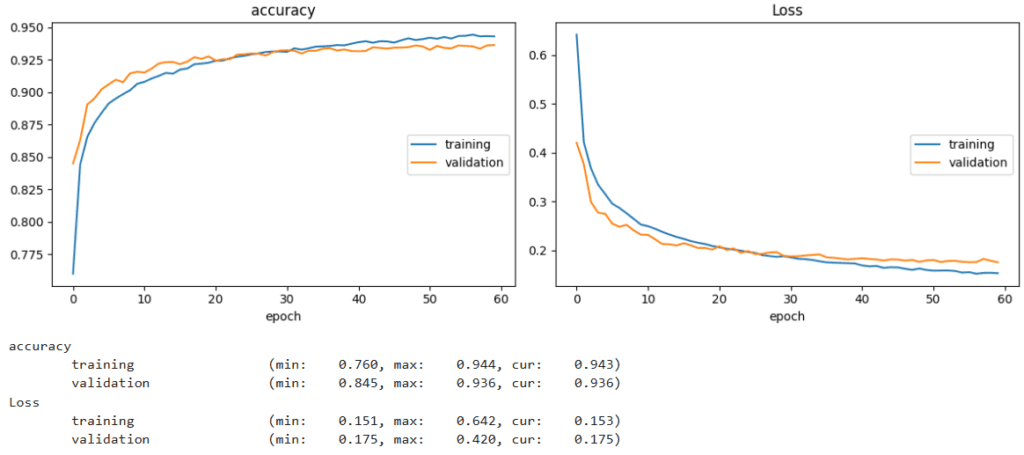

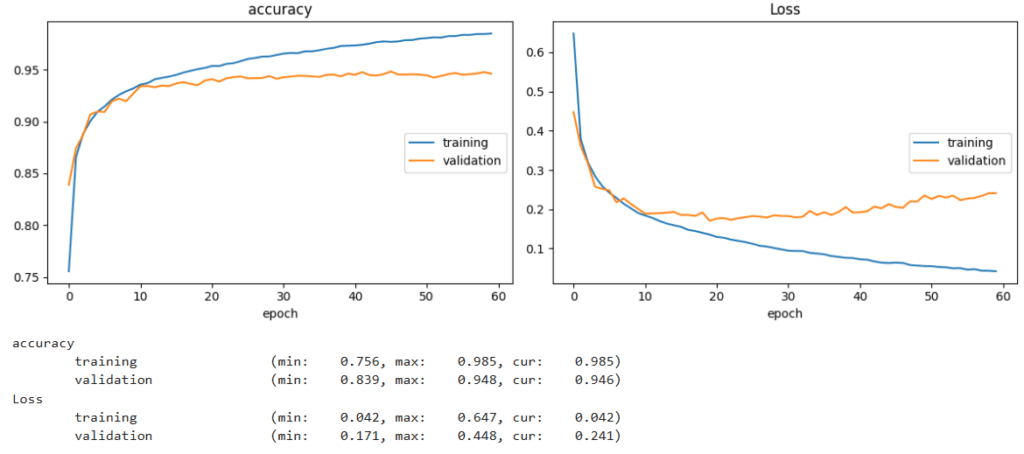

Visualizing the Experimental Results

To better understand the effects of different configurations, we’ve included accuracy and loss plots from models trained on the FashionMNIST dataset with Dropout and BatchNorm, both combined and on their own, keeping the training configuration the same across runs. These plots show how each configuration affects model performance and can help inform your own experiments.

The experimental results follow.

- Experiment 1 – Using a Small Model

We start with a model smaller than the LeNet network to see how much it can learn.

The model has reached its learning capacity, and if we train the model any further, the “overfitting gap” (train and validation loss diverging) will continue to increase.

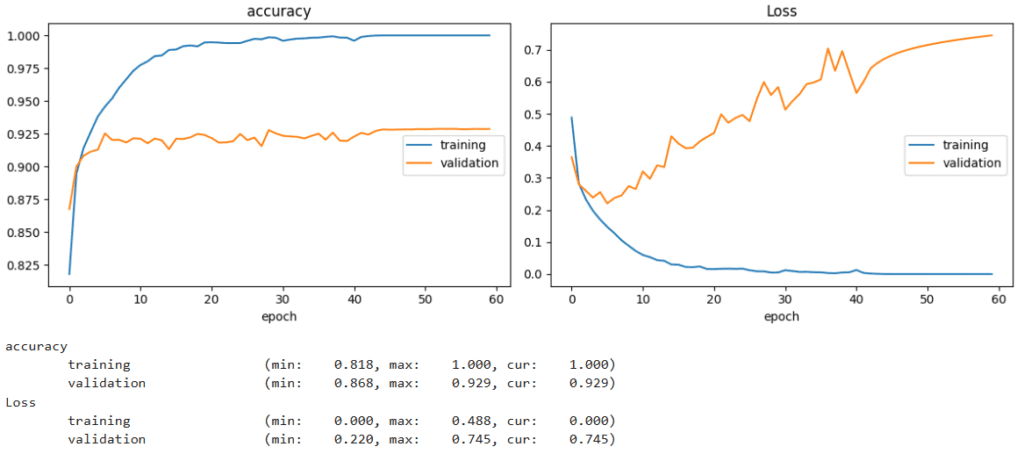

- Experiment 2 – Using a Medium Model with No Regularization:

Next, we use a new medium model, which has more parameters than the previous one. We will train the MediumModel without regularization (no Dropout, BatchNorm, data augmentation, LR scheduler, or L2 penalty).

As we can see from the accuracy plot, the model quickly overfits. Still, its validation accuracy and loss are better than the small model’s.

- Experiment 3 – Using a Medium Model with only Data Augmentation:

We will train the model with data augmentation only, without BatchNorm or Dropout. This run does better because augmentation effectively increases the amount of training data, which helps the model generalize.

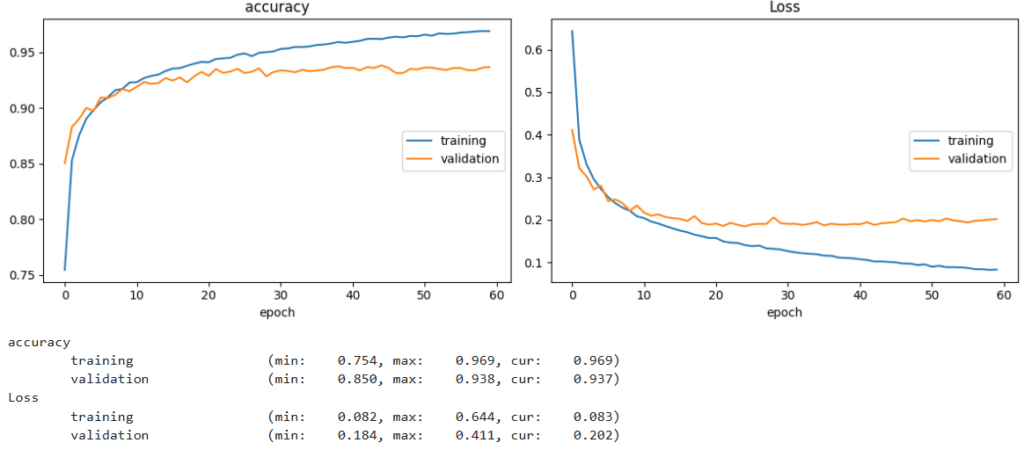

- Experiment 4 – Using a Medium Model with only Batch Normalization:

In this experiment, we have added BatchNorm2d layers to the feature extractor part of the model.

Even if accuracy does not improve and only the loss decreases, the model is still more robust, because its misclassifications are made with lower confidence. With an LR scheduler and batch normalization, the model still overfits, but there’s a significant improvement in the validation accuracy and loss.
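The point about loss and accuracy can be checked with a small worked example. The probabilities below are made-up values for two hypothetical classifiers, not outputs from the experiments. Both misclassify the same example, so their accuracy is identical, but the one that is less confident in its mistake has a lower cross-entropy loss.

```python
import math

def cross_entropy(probs, labels):
    """Mean negative log-probability assigned to the true class."""
    return -sum(math.log(p[y]) for p, y in zip(probs, labels)) / len(labels)

def accuracy(probs, labels):
    """Fraction of examples whose highest-probability class is the true class."""
    preds = [max(range(len(p)), key=p.__getitem__) for p in probs]
    return sum(int(a == b) for a, b in zip(preds, labels)) / len(labels)

labels = [0, 1, 0]
# Both models get the third example wrong (true class 0, predicted class 1).
overconfident = [[0.9, 0.1], [0.1, 0.9], [0.01, 0.99]]
less_confident = [[0.9, 0.1], [0.1, 0.9], [0.40, 0.60]]

acc_a = accuracy(overconfident, labels)
acc_b = accuracy(less_confident, labels)
loss_a = cross_entropy(overconfident, labels)
loss_b = cross_entropy(less_confident, labels)
print(acc_a, acc_b, loss_a, loss_b)
```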

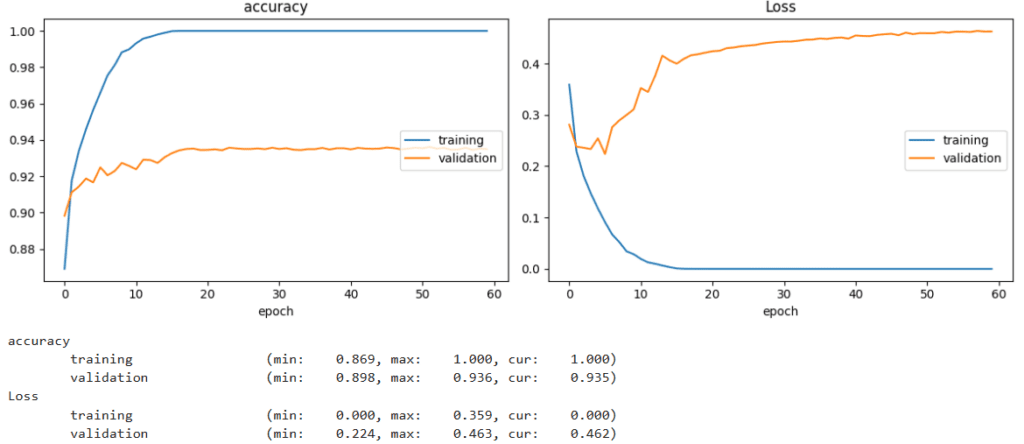

- Experiment 5 – Using a Medium Model with only Dropout Regularization:

Instead of batch norm layers, we will use Dropout2d for convolutional layers and Dropout for linear layers.
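The difference between the two layers is easiest to see on a toy input. In the sketch below (arbitrary tensor sizes and dropout rate, chosen for illustration), `nn.Dropout2d` drops entire feature maps rather than individual pixels, so every channel of every sample comes out either all zeros or uniformly rescaled.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
x = torch.ones(4, 8, 5, 5)            # (batch, channels, height, width)

drop2d = nn.Dropout2d(p=0.5).train()
y = drop2d(x)

# Each (sample, channel) feature map is constant: all 0 or all 1 / (1 - p).
per_map_min = y.amin(dim=(2, 3))
per_map_max = y.amax(dim=(2, 3))
whole_maps_only = torch.equal(per_map_min, per_map_max)
values = set(y.unique().tolist())

print(whole_maps_only, values)
```

Dropping whole channels is usually preferred after convolutions because neighbouring pixels in a feature map are strongly correlated, so zeroing isolated pixels removes little information.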

With the chosen dropout rates, the model still overfits, but the overfitting sets in more slowly and is more controlled than with batch normalization layers alone. With the dropout layers, the validation accuracy remains almost the same, but the loss improves.

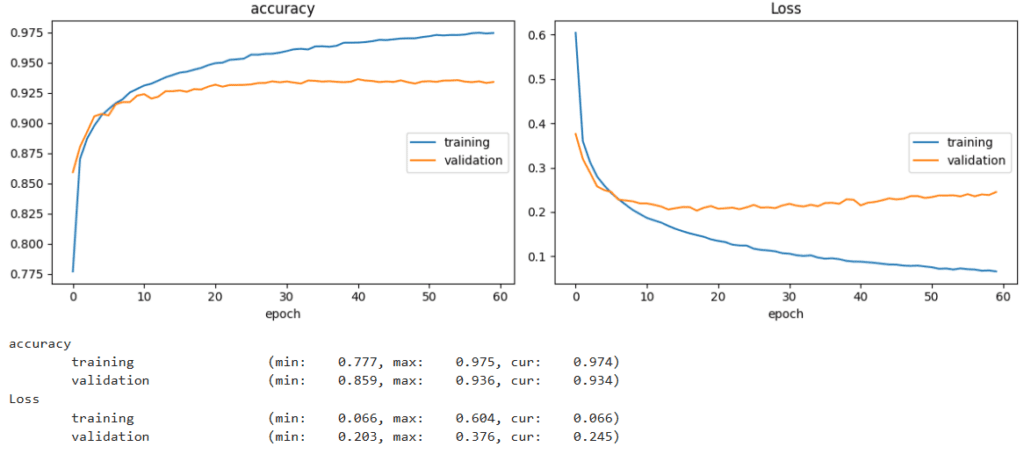

- Experiment 6 – Using a Medium Model with Dropout and Batch Norm Combined:

Let’s see the effect on training when we combine both batch-norm and dropout in a single model.

When batch normalization and dropout are combined, the model overfits again. The validation accuracy improves only marginally (+0.001), but the validation loss improves significantly.

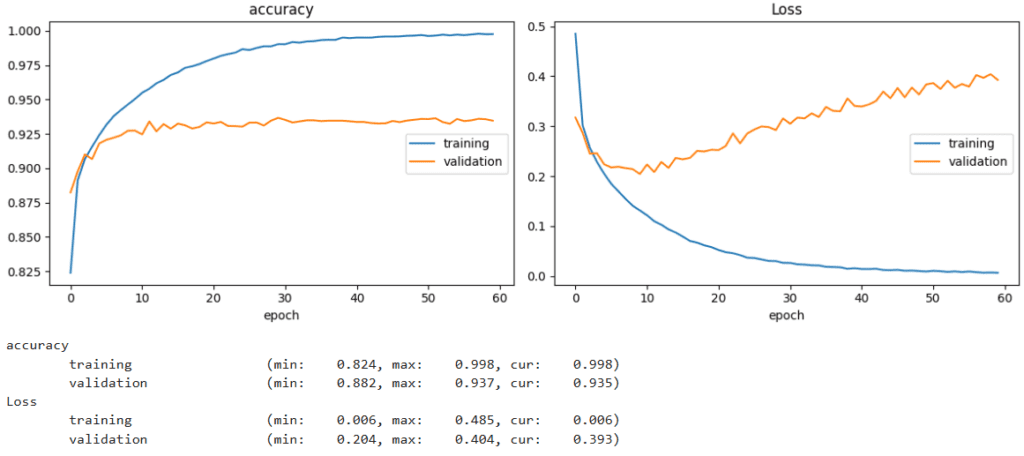

- Experiment 7 – Using a Medium Model with all three techniques:

Now we use both BatchNorm and Dropout together with data augmentation. This configuration works better than all of the above.

The train and validation losses both keep decreasing until the last epoch, and the gap between them is very small. With the combined regularization effect, the learning curves are the best so far, and this model generalizes best among all the experiments above. Although the training loss and accuracy are still better than on the validation set, the difference is minimal, so the model can be expected to perform well on unseen data.

- Experiment 8 – Using a Large Model with all three techniques:

Now that we have reduced overfitting, let’s try to improve the score. To do this, we build a larger, more complex model by increasing the number of filters and layers, and apply all three regularization techniques as above.

With a larger model, we achieve the best accuracy (0.948) of all the experiments so far.

Conclusion

Combining BatchNorm and Dropout in a neural network is not a one-size-fits-all solution. While BatchNorm provides stability and regularization, Dropout can improve generalization by preventing overfitting. However, the interaction between these two techniques can lead to instability in training if not configured properly.

Batch normalization improves accuracy with only a small penalty in training time, so it should be the first technique to try when improving CNNs. Dropout helps accuracy, but not in all cases, so apply it carefully rather than assuming it will always improve the results. Used together, Dropout and Batch Normalization significantly increase training time.

By understanding how these layers work, experimenting with different configurations, and following best practices for layer placement and hyperparameter tuning, you can get the most out of both techniques in your models. In cases where the combination works effectively, it can lead to more robust and well-generalized models.
