The ongoing pandemic has adversely affected many businesses around the world. The impact was particularly devastating during the second wave in India earlier this year, with an exponential rise in cases and deaths and a crippling effect on the economy, notably on smaller businesses. Bigger, established companies could cope with the challenge by dipping into their deep pockets. Unfortunately, local businesses and shops are still struggling to survive.

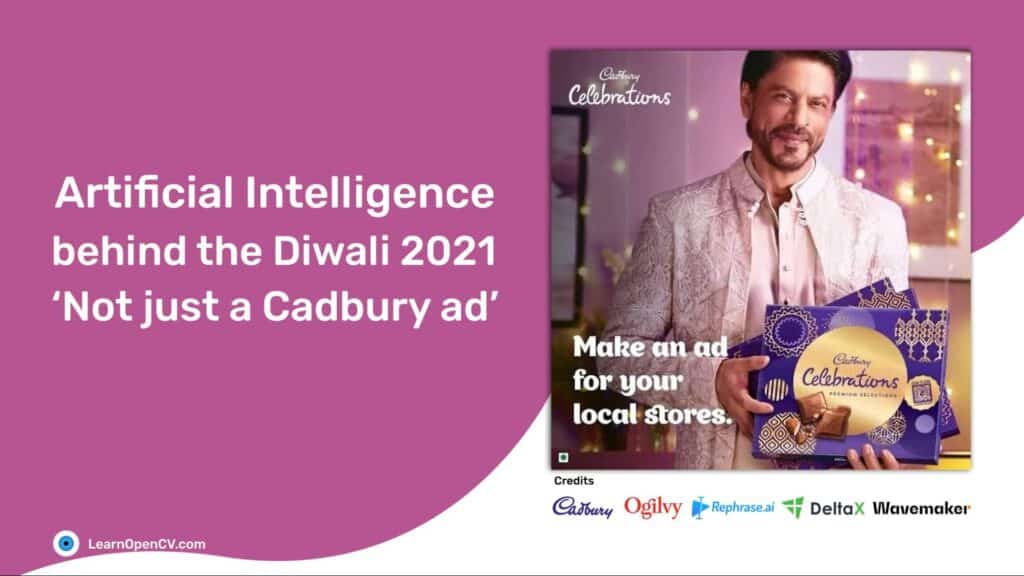

Cadbury, its creative partner Ogilvy, and Rephrase.ai have come up with a unique initiative to help local businesses during this festive Diwali season in India. They have launched an innovative, hyper-personalized ad-tech campaign featuring Bollywood star Shah Rukh Khan, powered by Generative AI technology.

Shah Rukh Khan is to Bollywood what Tom Cruise is to Hollywood – a superstar.

For small business owners, having a celebrity like Shah Rukh Khan promote their local business in an ad is a dream come true.

Combining the allure of celebrity with the power of AI to level the playing field for the little guy is pure marketing genius.

Rephrase.ai, the technology provider of this campaign, utilized the power of machine learning to synthesize a video for each business owner with the voice of Shah Rukh Khan. His lips moving in sync with his voice make the video incredibly realistic.

For AI enthusiasts like us, it is interesting to understand what it takes technically to achieve something like this.

There are two parts to the solution:

- Audio Generation (Text To Speech)

- Lip Sync (Sync Audio with Video)

Let’s understand them in some detail.

Audio Generation

As part of the ad generation process, the local business owner provides their business name as part of a text field in an online form.

In the video generated by the AI, Shah Rukh Khan utters the name of the business.

So we first need to convert the business name from text to speech. Algorithms in Natural Language Processing (NLP) take the input text and identify the phones and diphones it contains.

The system then uses pre-recorded clippings of those phones and diphones recorded by a voice actor (Shah Rukh Khan in this case) and stitches them together to make whole words.

The larger the collection of audio samples available to stitch together, the better the audio output. It is also helpful to include domain-specific audio samples. For example, if we expect the word “Shop” or “Store,” it’s better to have a recorded sample of the entire word than to try to synthesize it.
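The stitching idea described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the campaign's actual system: the `UNITS` inventory, the `LEXICON`, the sample values, and the function names are all hypothetical, and real systems work on recorded waveforms rather than tiny made-up lists.

```python
from typing import Dict, List

# Hypothetical unit inventory: each key is a diphone or a whole word, each
# value is a short list of audio samples. The numbers are made up so that
# the sketch stays runnable without any audio files.
UNITS: Dict[str, List[float]] = {
    "shop": [0.1, 0.2, 0.3, 0.2, 0.1, 0.0],  # whole-word recording
    "r-a": [0.5, 0.4, 0.3, 0.2],
    "a-j": [0.2, 0.1, 0.0, -0.1],
}

# Hypothetical pronunciation lexicon: word -> sequence of diphones.
LEXICON: Dict[str, List[str]] = {"raj": ["r-a", "a-j"]}


def units_for(word: str) -> List[str]:
    """Prefer a whole-word recording; otherwise fall back to diphones."""
    word = word.lower()
    if word in UNITS:
        return [word]
    if word in LEXICON:
        return LEXICON[word]
    raise KeyError(f"no recording or pronunciation for {word!r}")


def crossfade(a: List[float], b: List[float], overlap: int) -> List[float]:
    """Join two clips, linearly blending the last `overlap` samples of `a`
    with the first `overlap` samples of `b` to soften the seam."""
    overlap = min(overlap, len(a), len(b))
    if overlap == 0:
        return a + b
    blended = []
    for i in range(overlap):
        w = (i + 1) / (overlap + 1)  # weight of clip b rises across the seam
        blended.append((1 - w) * a[len(a) - overlap + i] + w * b[i])
    return a[:len(a) - overlap] + blended + b[overlap:]


def synthesize(text: str, overlap: int = 2) -> List[float]:
    """Stitch the units for every word in `text` into one waveform."""
    audio: List[float] = []
    for word in text.split():
        for unit in units_for(word):
            clip = UNITS[unit]
            audio = crossfade(audio, clip, overlap) if audio else list(clip)
    return audio
```

Calling `synthesize("Raj Shop")` builds “Raj” from two diphones and uses the whole-word clip for “Shop”, which mirrors the preference for recorded whole words over synthesized ones.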

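You can use several open-source tools for the text-to-speech step, such as Tacotron, Real-Time-Voice-Cloning, and FastSpeech 2. These are neural models that generate speech directly from text rather than stitching recorded clips together.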

Lip Sync

Once we have the desired speech audio, the next challenge is to synchronize the lip movements in the original video with the speech audio.
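One practical piece of this synchronization is deciding which slice of audio belongs to each video frame. The sketch below assumes the audio has already been converted into a sequence of feature steps (for example, mel-spectrogram columns) at 80 steps per second, and that each frame is paired with a fixed window of 16 steps. Those numbers and the function name `audio_windows` are illustrative assumptions, not details published by the campaign.

```python
from typing import List, Tuple


def audio_windows(num_feature_steps: int, fps: float,
                  steps_per_second: float = 80.0,
                  window: int = 16) -> List[Tuple[int, int]]:
    """Return one (start, end) slice of audio-feature steps per video frame.

    Frame i starts at the feature step at or just before its timestamp
    i / fps. The final window is shifted back so it never runs past the
    end of the audio.
    """
    if num_feature_steps < window:
        raise ValueError("audio is shorter than one window")
    windows = []
    i = 0
    while True:
        start = int(i * steps_per_second / fps)
        if start + window > num_feature_steps:
            # Final frame: align the window with the end of the audio.
            windows.append((num_feature_steps - window, num_feature_steps))
            break
        windows.append((start, start + window))
        i += 1
    return windows
```

With 25 fps video, consecutive windows start about 3.2 feature steps apart, so neighbouring frames see heavily overlapping audio. That overlap helps keep the generated mouth shapes smooth from one frame to the next.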

This is a well-researched area in computer vision. Several methods use GANs (Generative Adversarial Networks) to generate a video in which the speaker’s lips are in sync with the audio. You can learn more about GANs here. One such state-of-the-art method is Wav2Lip.

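Wav2Lip generates accurate lip sync by training its generator against a pre-trained lip-sync expert, a discriminator that penalizes lip shapes that are out of sync with the audio. The authors also add a Visual Quality Discriminator to improve the quality of the generated results. Refer to the Wav2Lip paper, “A Lip Sync Expert Is All You Need for Speech to Lip Generation In the Wild,” for more details.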

The model generates a talking face video frame-by-frame. The input at each time-step is the current face crop from the source frame (i.e., Shah Rukh Khan’s video in this case), concatenated with the same current face crop with the lower-half masked to be used as a pose prior. The corresponding speech audio also serves as input to the speech sub-network. The network then generates the face crop with the mouth region morphed to sync the mouth or lips with the audio.
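To make the visual input concrete, here is a minimal sketch of how the masked and unmasked face crops could be combined into a single 6-channel input. It uses plain nested lists so it runs without extra libraries. The function names and the channel order are assumptions for illustration, not the Wav2Lip code itself.

```python
from typing import List

Image = List[List[List[float]]]  # height x width x channels


def mask_lower_half(face: Image) -> Image:
    """Copy the face crop and zero out every pixel in its lower half."""
    height = len(face)
    masked = []
    for y, row in enumerate(face):
        if y >= height // 2:
            masked.append([[0.0] * len(pixel) for pixel in row])
        else:
            masked.append([list(pixel) for pixel in row])
    return masked


def build_generator_input(face: Image) -> Image:
    """Concatenate the masked crop and the unmasked crop along the channel
    axis, so a 3-channel crop becomes a 6-channel input.

    The channel order (masked first) is an assumption of this sketch.
    """
    masked = mask_lower_half(face)
    return [
        [m_px + f_px for m_px, f_px in zip(m_row, f_row)]
        for m_row, f_row in zip(masked, face)
    ]
```

The masked copy hides the original mouth so the network has to infer it from the audio, while the unmasked copy still carries the head pose and identity.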

Incredible, isn’t it?

Check it out in action applied to Tony Stark.

These are constructive and ethical uses of AI technology that empower and help people. Sadly, AI technology also has inappropriate uses. Related technology has been in the news for entirely the wrong reasons. One example is a video in which Jordan Peele became the voice of Obama, making him appear to say things he never said.

The Obama footage was taken from a public event, and the audio was replaced along with some facial manipulations.

Similar technology was also shamefully used by a Reddit user named ‘deepfakes’ to create videos with highly inappropriate and explicit content.

Conclusion

In this post, we explored how ‘text to speech’ and ‘lip sync’ systems can be combined to generate different personalized videos from a single source video.

Numerous applications can be built with this approach, such as making video lectures available in lip-synced regional languages and improving lip sync in dubbed movies and for video game characters. The opportunities are truly limitless!

The Cadbury campaign demonstrates only the tip of the iceberg in terms of the endless possibilities of personalized branding and marketing that can be realized with the ethical use of AI.

Wishing everyone a very happy festive season and a happy Diwali!

References

- Not Just A Cadbury Ad website

- Ogilvy website

- Rephrase.ai Blog
