AI is moving beyond passive algorithms toward autonomous agents that can perceive, reason, and act with increasing intelligence. These agents are designed to navigate uncertainty, adapt to changing conditions, and exhibit a degree of common sense, a significant step towards more general machine intelligence.

“By 2024, AI will power 60% of personal device interactions, with Gen Z adopting AI agents as their preferred method of interaction.”

— Sundar Pichai, CEO of Google

The rapid evolution of AI has ushered in an era where machines can perform complex tasks with minimal human intervention. One such advancement is Agentic AI, an emerging paradigm that endows AI systems with a degree of autonomy, self-direction, and goal-driven behavior beyond that of traditional models. This article covers the foundational principles, critical components, and building blocks of Agentic AI, walks through real-world application development using CrewAI and Phidata, and discusses the future potential of Agentic AI along with the frameworks and tools that enable its development.

- Agentic AI

- Agentic AI vs. Generative AI

- When (and When Not) to Use Agents

- Common Production Patterns for Agentic AI Systems

- Critical Characteristics of Agentic AI

- Unified Building Blocks of Agentic AI

- Frameworks for Building Agentic AI

- AI Agents Implementation

- Conclusion

- References

Agentic AI

Agentic AI, when specifically driven by large language models (LLMs), refers to autonomous systems that leverage LLMs’ capabilities to perceive, interpret, and act on dynamic inputs. These systems utilize the language models’ deep understanding of context, tasks, and language patterns to make decisions, adjust strategies, and perform complex actions without explicit human intervention.

Agentic AI, in general, refers to autonomous systems capable of perceiving their environment, making decisions, and taking actions to achieve specific goals. Such systems draw on techniques such as reinforcement learning, self-supervised learning, and multi-agent coordination to adapt dynamically to changing contexts. Unlike traditional AI, which relies on predefined rules and static datasets, Agentic AI can learn from user interactions, behavioral patterns, and real-time data, refining its decision-making over time.

Agentic AI vs. Generative AI

As Agentic AI gains prominence, it is often confused with Generative AI (Gen AI) because both rely on advanced machine learning technologies, particularly LLMs. However, they serve fundamentally different purposes.

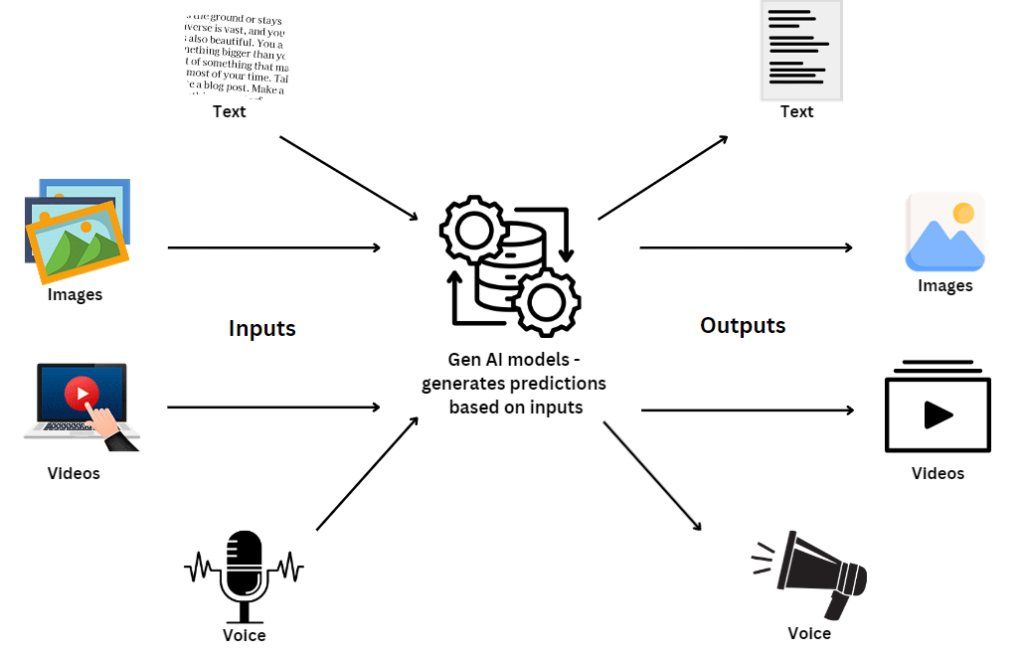

- Generative AI focuses on content creation, producing text, images, videos, or code based on learned patterns. Examples include GPT for text generation and Stable Diffusion for image synthesis.

- Agentic AI, on the other hand, is action-oriented, designed to make decisions, plan tasks, and execute them autonomously in dynamic environments. It does not just generate responses but actively interacts with its surroundings, making strategic choices based on goals and real-time feedback.

When (and When Not) to Use Agents

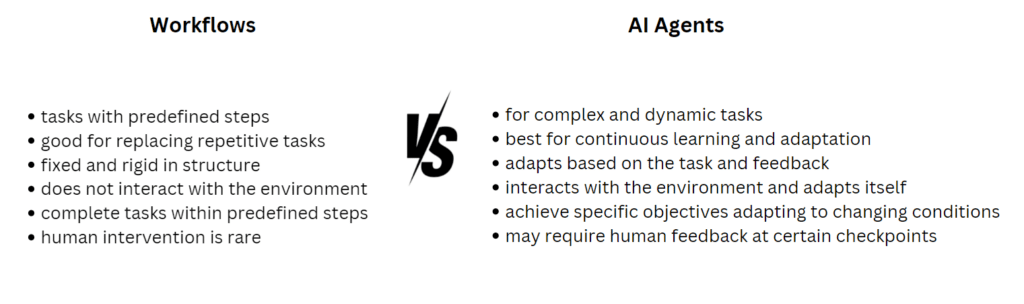

While agentic systems are powerful tools, it is essential to know when applying them actually pays off. For many simple tasks, agents are overkill and introduce unnecessary complexity: they trade latency and cost for better performance on complex tasks. When simplicity matters, a predefined workflow or even a single, well-optimized LLM call is often sufficient.

Workflows are best suited for tasks with clear, predefined steps that don’t require flexibility. They follow set paths and automate repetitive processes efficiently, which makes them ideal for straightforward, predictable applications. AI agents, in contrast, are designed for more complex and dynamic tasks: they can make decisions at run time, adapt their strategies, and interact with their environment autonomously. Agents are well suited to situations where tasks evolve or require ongoing problem-solving, offering a level of adaptability that workflows can’t.

In short, use workflows for well-defined, repeatable tasks, and use AI agents when you need flexibility and adaptive decision-making to handle dynamic challenges. Anthropic groups both of these variations, workflows and agents, under the umbrella term ‘agentic systems’.

Common Production Patterns for Agentic AI Systems

The foundational element of agentic systems is an augmented large language model (LLM), enhanced with features like retrieval, tools, and memory. These additions enable the LLM to generate queries, choose tools, and decide what information to retain, allowing for more dynamic, context-driven tasks.
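To make the idea concrete, here is a minimal Python sketch of an augmented LLM, written under stated assumptions: `scripted_llm` is a hypothetical stand-in for a real model API, retrieval is naive keyword overlap rather than vector search, and the `TOOL <name> <arg>` reply convention is invented for illustration. None of these names come from a specific library.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class AugmentedLLM:
    llm: Callable[[str], str]                  # any text-in/text-out model call
    documents: List[str]                        # corpus available for retrieval
    tools: Dict[str, Callable[[str], str]]      # tool name -> tool function
    memory: List[str] = field(default_factory=list)

    def retrieve(self, query: str, k: int = 1) -> List[str]:
        # Naive keyword-overlap ranking, standing in for vector search.
        words = set(query.lower().split())
        ranked = sorted(self.documents,
                        key=lambda d: len(words & set(d.lower().split())),
                        reverse=True)
        return ranked[:k]

    def run(self, query: str) -> str:
        context = self.retrieve(query)
        prompt = (f"Memory: {self.memory}\nContext: {context}\n"
                  f"Tools: {list(self.tools)}\nQuestion: {query}")
        reply = self.llm(prompt)
        # Assumed convention: the model requests a tool with "TOOL <name> <argument>".
        if reply.startswith("TOOL "):
            _, name, arg = reply.split(" ", 2)
            reply = self.tools[name](arg)
        self.memory.append(f"Q: {query} -> A: {reply}")  # retain the exchange
        return reply


def scripted_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model: delegates arithmetic to a tool,
    # otherwise answers from the retrieved context.
    question = prompt.rsplit("Question: ", 1)[1]
    if question.startswith("compute "):
        return "TOOL calculator " + question[len("compute "):]
    return "Answer drawn from: " + prompt.split("Context: ", 1)[1].split("\n", 1)[0]


def add_numbers(expr: str) -> str:
    # Toy calculator tool: only handles sums such as "2+3".
    return str(sum(int(x) for x in expr.split("+")))


agent = AugmentedLLM(
    llm=scripted_llm,
    documents=["CrewAI orchestrates role-based agents",
               "Phidata builds agents with knowledge bases"],
    tools={"calculator": add_numbers},
)
print(agent.run("compute 2+3"))          # 5
print(agent.run("what does CrewAI do"))  # answer grounded in the CrewAI document
print(len(agent.memory))                 # 2
```

In a real system, `scripted_llm` would be replaced by a call to Gemini or GPT, and the model itself would decide when to emit a tool request.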

Workflows -> Building on the augmented LLM, workflows combine LLM calls in a few common patterns:

- Prompt chaining divides a task into a sequence of smaller, manageable subtasks, where each LLM call processes the output of the previous one.

- Routing classifies an input and directs it to a specialized follow-up task, ensuring that each type of input gets the appropriate processing path.

- Parallelization runs multiple predefined subtasks simultaneously and aggregates their results into a comprehensive output.

- Orchestrator-workers uses a central orchestrator LLM to break a task down at run time, delegate the pieces to worker LLMs, and synthesize their results; unlike parallelization, the subtasks are not predefined.

- Evaluator-optimizer sets up a feedback loop in which one model generates an output and another model evaluates it, and the output is refined until it meets the evaluator’s criteria.
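The five workflow patterns can each be sketched in a few lines. This is an illustrative sketch, not a reference implementation: `llm` is a hypothetical, scripted stand-in for a model call so the example runs offline, and the orchestrator’s planning step is faked by splitting the task on " and ".

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def llm(instruction: str, text: str) -> str:
    """Hypothetical stand-in for a model call, scripted so the sketch runs offline."""
    if instruction == "classify":
        return "code" if "def " in text or "error" in text.lower() else "general"
    if instruction == "grade":
        return "PASS" if len(text) <= 40 else "FAIL: too long"
    if instruction == "shorten":
        return text[:40]
    return f"{instruction}({text})"


# 1. Prompt chaining: each step consumes the previous step's output.
def chain(text: str, steps: List[str]) -> str:
    for step in steps:
        text = llm(step, text)
    return text


# 2. Routing: classify the input, then dispatch it to a specialised handler.
HANDLERS: Dict[str, Callable[[str], str]] = {
    "code": lambda t: llm("debug", t),
    "general": lambda t: llm("answer", t),
}


def route(text: str) -> str:
    return HANDLERS[llm("classify", text)](text)


# 3. Parallelization: predefined subtasks run concurrently; results keep their order.
def parallel(text: str, aspects: List[str]) -> List[str]:
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda aspect: llm(aspect, text), aspects))


# 4. Orchestrator-workers: subtasks are decided at run time, then synthesized.
def orchestrate(task: str) -> str:
    subtasks = [part.strip() for part in task.split(" and ")]  # stand-in for an LLM planner
    results = [llm("work", sub) for sub in subtasks]
    return llm("synthesize", "; ".join(results))


# 5. Evaluator-optimizer: generate, grade, revise until the grader is satisfied.
def evaluate_optimize(draft: str, max_rounds: int = 3) -> str:
    for _ in range(max_rounds):
        if llm("grade", draft) == "PASS":
            return draft
        draft = llm("shorten", draft)  # revision guided by the evaluator's feedback
    return draft


print(chain("notes", ["outline", "draft"]))        # draft(outline(notes))
print(route("TypeError in def main()"))            # debug(TypeError in def main())
print(parallel("essay", ["grammar", "facts"]))     # ['grammar(essay)', 'facts(essay)']
print(orchestrate("edit intro and fix refs"))      # synthesize(work(edit intro); work(fix refs))
print(len(evaluate_optimize("x" * 60)))            # 40
```

In a real system, each `llm(...)` call would be a request to a model such as Gemini or GPT, and the router, grader, and planner would themselves be prompted LLM calls.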

Agents -> Built on augmented LLMs, agents handle complex tasks that begin with a command from, or a discussion with, a human user. Once the task is clear, they plan and execute actions autonomously, though they may ask the user for clarification or judgment when necessary. Throughout the process, agents gather “ground truth” from their environment, such as tool results or code execution outputs, to assess their progress. They can pause for human feedback at checkpoints or when they encounter blockers, and they typically stop when the task is complete or a stopping condition (such as a maximum number of iterations) is reached.
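The agent loop described above (plan, act, observe ground truth, optionally check in with a human, stop on completion or a step limit) can be sketched as follows. As an assumption for illustration, a scripted `policy` function plays the role of the LLM, and two toy arithmetic tools stand in for the environment; the agent’s goal is to reach a target number.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Toy "environment": two tools that change a number. Their results are the
# ground truth the agent observes after every action.
TOOLS: Dict[str, Callable[[int], int]] = {
    "add": lambda x: x + 3,
    "subtract": lambda x: x - 1,
}


def policy(goal: int, state: int) -> str:
    # Hypothetical stand-in for an LLM choosing the next action from the goal
    # and the latest observation.
    if state == goal:
        return "finish"
    return "add" if state < goal else "subtract"


def run_agent(goal: int, max_steps: int = 10,
              approve: Optional[Callable[[str], bool]] = None) -> List[Tuple[str, int]]:
    state = 0
    history: List[Tuple[str, int]] = []
    for step in range(max_steps):            # stopping condition: step budget
        action = policy(goal, state)          # plan the next action
        if action == "finish":                # stopping condition: task complete
            break
        # Human checkpoint: a reviewer can veto the action before it runs.
        if approve is not None and not approve(f"step {step}: {action}"):
            break
        state = TOOLS[action](state)          # act on the environment
        history.append((action, state))       # record the observed result
    return history


print(run_agent(goal=5))
# [('add', 3), ('add', 6), ('subtract', 5)]
```

The overshoot to 6 followed by a correction is the point of the sketch: the agent only knows it went too far because it observes the tool’s actual result.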

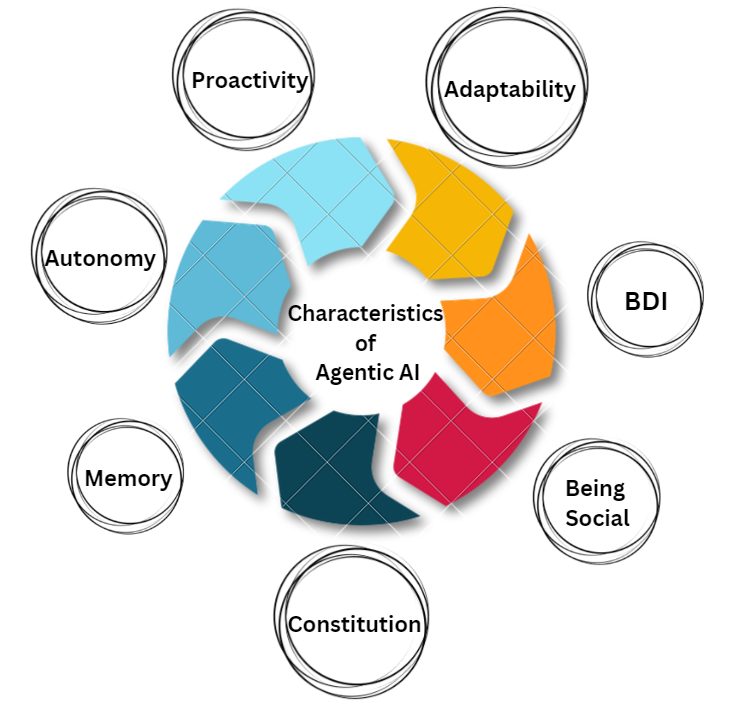

Critical Characteristics of Agentic AI

At its core, Agentic AI embodies a few critical characteristics that define its capabilities:

- Autonomy: The ability to perform tasks independently without requiring explicit instructions for every step. This enables agents to execute actions and adjust strategies based on their understanding of the environment.

- Proactivity: The ability to anticipate future scenarios and act ahead of time to achieve set goals, rather than only reacting to events. This is essential for enabling agents to adapt and respond effectively in dynamic and unpredictable environments.

- Adaptability: The capability to learn and evolve from interactions, optimizing performance over time. Adaptability ensures agents remain relevant and effective even as conditions or goals change.

- Beliefs, Desires, and Intentions (BDI): A common model used in agent-oriented programming (AOP), in which an agent is characterized by its beliefs (information about the world), desires (goals or objectives), and intentions (the plans of action it commits to).

- Being Social: Agentic AI systems are designed to interact and collaborate with other agents and humans. Their social aspect enables them to engage in meaningful communication, negotiate, and cooperate with both digital and human entities to achieve common objectives.

- Constitution: Agentic AI operates within a defined set of guidelines or rules that govern its decision-making process. These principles, often derived from ethical considerations or organizational policies, help ensure the agent upholds ethical standards and aligns with the goals of its operators.

- Memory: Long-term memory is essential for Agentic AI to store and retrieve previous experiences, interactions, and learnings. This memory allows agents to improve their decision-making over time, recall past decisions or actions, and learn from past mistakes.
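Several of these characteristics can be seen together in a small sketch. The following is a toy BDI-style agent for a hypothetical cleaning robot: beliefs are numeric observations, desires are named goals, intentions are the actions it commits to, the constitution is a list of rule functions every action must pass, and memory is a log of past actions. The names and thresholds are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Rule = Callable[[str, Dict[str, float]], bool]   # returns True if the action is allowed


@dataclass
class BDIAgent:
    desires: List[str]                                          # goals the agent would like to achieve
    constitution: List[Rule] = field(default_factory=list)      # rules every action must satisfy
    beliefs: Dict[str, float] = field(default_factory=dict)     # the agent's picture of the world
    memory: List[str] = field(default_factory=list)             # record of past actions

    def perceive(self, observation: Dict[str, float]) -> None:
        self.beliefs.update(observation)                         # revise beliefs from new evidence

    def deliberate(self) -> List[str]:
        # Turn desires into intentions (concrete actions) that fit the current beliefs.
        options = {
            "recharge": "go_to_dock" if self.beliefs.get("battery", 1.0) < 0.2 else None,
            "clean": "vacuum" if self.beliefs.get("dirt", 0.0) > 0.5 else None,
        }
        intentions = [options.get(goal) for goal in self.desires]
        return [a for a in intentions
                if a and all(rule(a, self.beliefs) for rule in self.constitution)]

    def act(self) -> List[str]:
        intentions = self.deliberate()
        self.memory.extend(intentions)                           # remember what was done
        return intentions


def quiet_at_night(action: str, beliefs: Dict[str, float]) -> bool:
    # Constitution rule: never vacuum while it is night.
    return not (action == "vacuum" and beliefs.get("night", 0.0) > 0.5)


robot = BDIAgent(desires=["recharge", "clean"], constitution=[quiet_at_night])
robot.perceive({"battery": 0.1, "dirt": 0.8, "night": 0.0})
print(robot.act())   # ['go_to_dock', 'vacuum']
robot.perceive({"battery": 0.9, "night": 1.0})
print(robot.act())   # [] because cleaning is desired but forbidden at night
```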

These principles are universally applicable to both physical agents, such as robots, and virtual agents, such as software-based assistants, making them foundational across all domains of Agentic AI.

In this fast-evolving era of Artificial Intelligence, many researchers and developers argue that truly advanced and efficient Agentic AI applications are nearly impossible without LLMs. The ability of AI agents to understand natural language, reason contextually, and interact dynamically with humans has redefined what autonomy and intelligence mean in the digital age. Without LLMs, the argument goes, AI agents would remain confined to rigid rule-based systems, incapable of adaptive, real-world decision-making that closely resembles human cognition.

LLMs have become the cornerstone of AI agents in the modern era, providing the critical foundation for reasoning, contextual understanding, and intelligent communication. In many ways, LLMs can be considered the soul of AI agents, giving them the ability to communicate, interpret, and act in a manner that feels humanlike. This is why many of today’s leading AI applications, from chatbots and autonomous research agents to real-time problem solvers, are built on LLM-driven architectures.

However, while LLMs have revolutionized AI agents, it would be a mistake to claim that agentic AI is impossible without them.

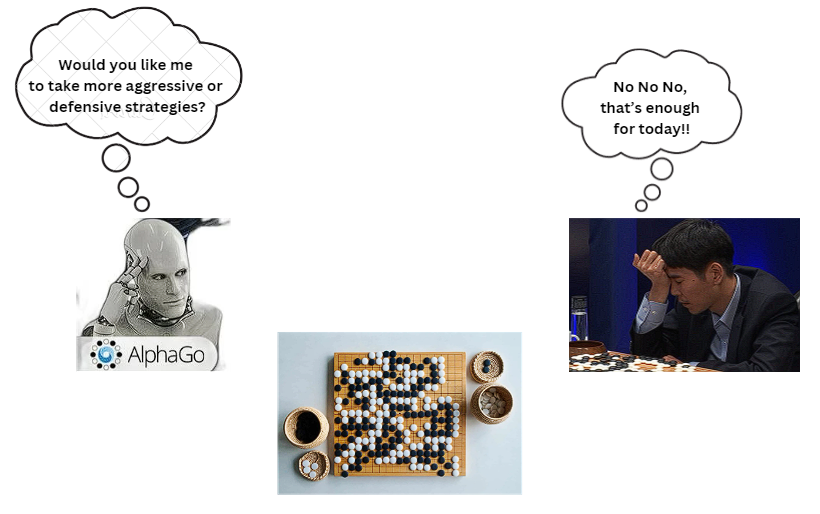

- AlphaGo achieved superhuman play in Go by combining deep neural networks, reinforcement learning, and Monte Carlo Tree Search (MCTS), mastering strategies without any textual training or linguistic reasoning.

- MuZero went a step further, learning to play multiple games without even being given their rules, constructing an internal understanding of game dynamics purely through self-play and trial-and-error learning.

Conversely, had AlphaGo and MuZero been equipped with an LLM, we could have communicated with them, heard a narration of their ‘thought process,’ and followed how they evolve, narrowing the gap between pure computation and human interpretability.

Imagine AlphaGo telling you, ‘I chose this move because it maximizes long-term board control based on my training history,’ which explains its reasoning process.

Now imagine AlphaGo asking you, ‘Would you like me to play more aggressively or more defensively?’, showcasing its ability to adapt based on human interaction.

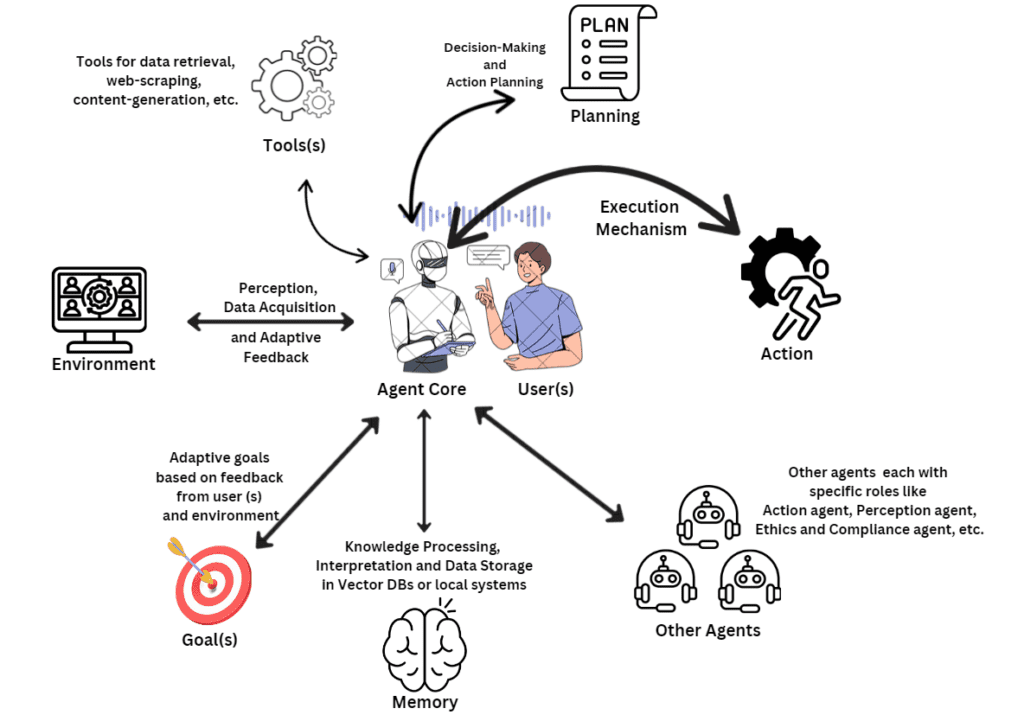

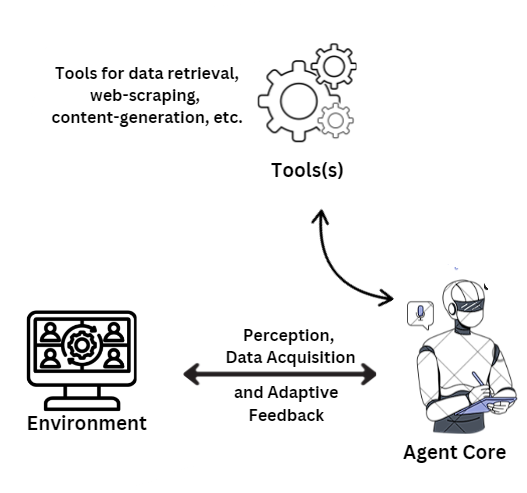

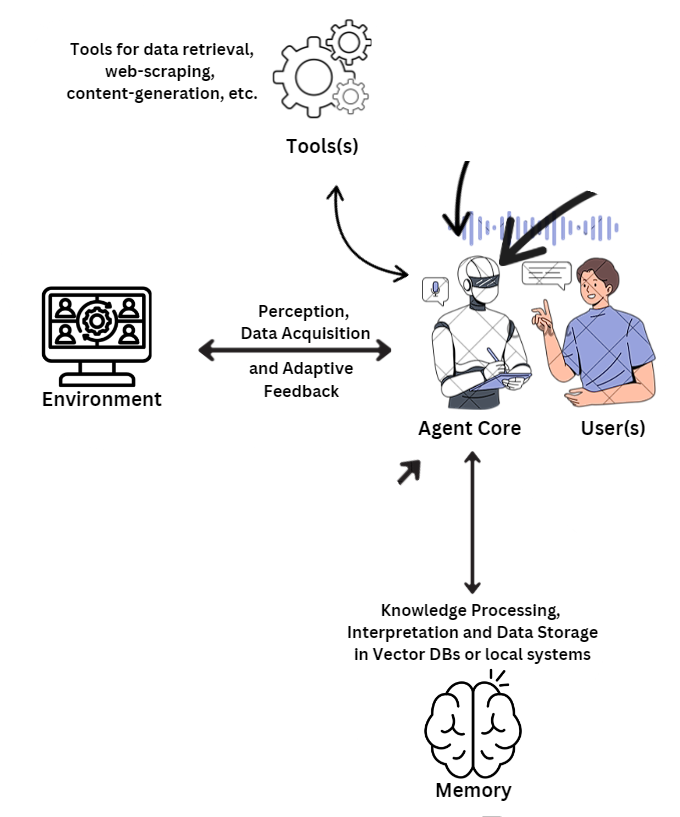

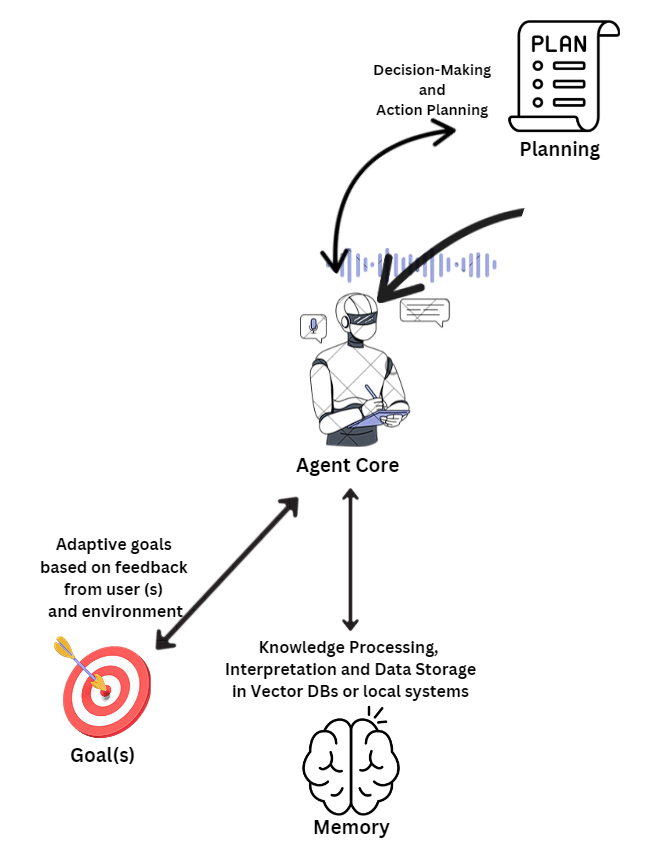

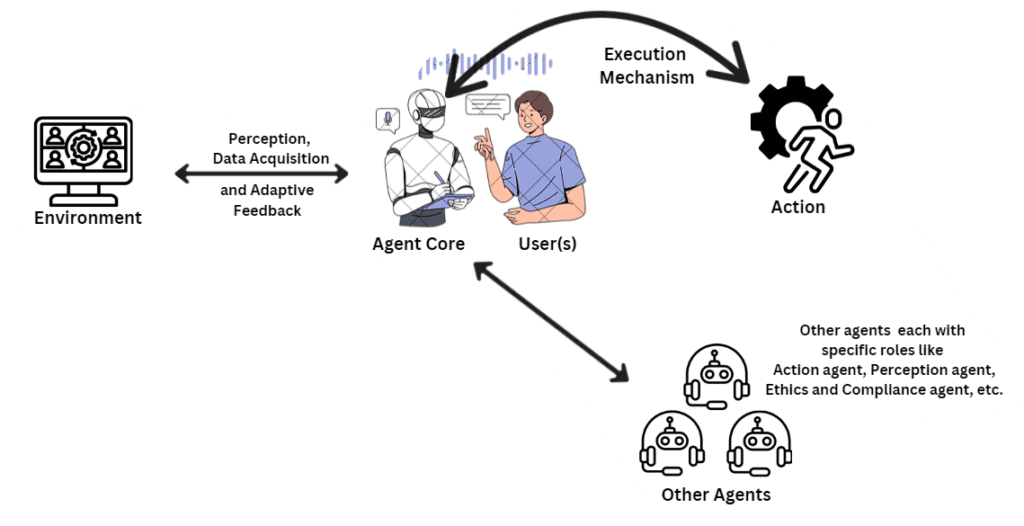

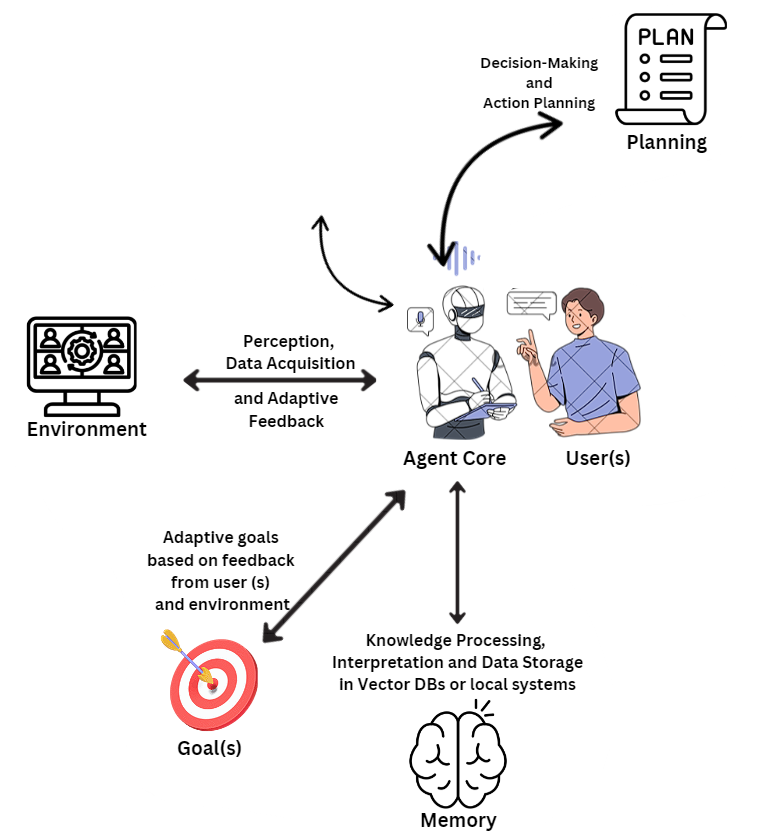

Unified Building Blocks of Agentic AI

Agentic AI systems, whether embodied in robotic agents or virtual AI agents, share fundamental components that enable autonomy, intelligent decision-making, and self-improvement. Together, these elements form a structured workflow that allows AI systems to operate efficiently in physical and digital environments.

Perception and Data Acquisition

Perception is the AI system’s gateway to the world. Robots rely on sensors such as cameras, LiDAR, and microphones to interpret their physical surroundings, while virtual agents extract and process structured and unstructured data from digital sources. Robots typically combine these signals through sensor fusion, while virtual agents rely on natural language processing (NLP); both derive meaningful insights that let them understand and perceive their environment.

Knowledge Processing and Interpretation

Once data is acquired, AI agents transform raw inputs into structured intelligence. Virtual AI agents analyze text, recognize patterns, and construct knowledge graphs, while robots process sensory data through machine learning models for object recognition and spatial mapping. This stage ensures that AI, whether navigating a physical space or aggregating digital information, builds an accurate representation of its domain.

Decision-Making and Action Planning

AI-driven autonomy is powered by intelligent decision-making. Robots optimize movement and interactions using reinforcement learning and predictive modeling, while virtual agents evaluate and prioritize information through credibility assessments and contextual analysis. By weighing multiple variables, both types of agents make informed decisions that align with their objectives.

Execution and Interaction Mechanism

Turning decisions into actions is where AI agents make a tangible impact. Virtual AI agents generate structured responses, summaries, or task automation scripts that integrate with software applications, while robots execute tasks through actuators and effectors, enabling movement and interaction with objects. Whether in the physical or the digital realm, execution connects intelligence to real-world applications.

Adaptive Learning and Feedback Optimization

Continuous improvement is a defining trait of Agentic AI. Robots refine their actions based on real-time sensor feedback, improving precision and efficiency, while virtual AI agents learn from user interactions, refining search strategies and improving response accuracy. Reinforcement learning and iterative optimization help both types of AI agents evolve with experience and become more effective over time.

By unifying these core building blocks, Agentic AI systems achieve seamless autonomy, adaptability, and efficiency, driving advancements in both robotics and digital intelligence. This integration marks a significant step toward AI ecosystems capable of handling complex, real-world challenges.
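The loop formed by these building blocks can be sketched in Python. This is a deliberately simplified illustration under stated assumptions: the environment is a hypothetical two-strategy world with made-up success rates, perception is parsing a text signal, and the adaptive-learning component is an epsilon-greedy bandit, one of the simplest reinforcement-learning methods, rather than anything a production robot or assistant would use.

```python
import random
from typing import Dict, List


class LearningAgent:
    """Perceive -> decide -> act -> learn, with an epsilon-greedy bandit as the learner."""

    def __init__(self, actions: List[str], epsilon: float = 0.1, seed: int = 0):
        self.actions = actions
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.value: Dict[str, float] = {a: 0.0 for a in actions}  # estimated reward per action
        self.count: Dict[str, int] = {a: 0 for a in actions}

    def perceive(self, raw_observation: str) -> float:
        # Perception + interpretation: turn a raw signal like "reward=1.0" into a number.
        return float(raw_observation.split("=", 1)[1])

    def decide(self) -> str:
        # Explore with probability epsilon, otherwise exploit the best current estimate.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.value[a])

    def learn(self, action: str, reward: float) -> None:
        # Incremental average: V <- V + (r - V) / n
        self.count[action] += 1
        self.value[action] += (reward - self.value[action]) / self.count[action]


def environment(action: str, rng: random.Random) -> str:
    # Hypothetical world in which strategy_b succeeds more often than strategy_a.
    success_rate = {"strategy_a": 0.3, "strategy_b": 0.7}[action]
    return f"reward={1.0 if rng.random() < success_rate else 0.0}"


agent = LearningAgent(["strategy_a", "strategy_b"], epsilon=0.1, seed=42)
world_rng = random.Random(7)
for _ in range(2000):
    action = agent.decide()                                   # decision-making
    reward = agent.perceive(environment(action, world_rng))   # execution + feedback
    agent.learn(action, reward)                               # adaptive learning
print(agent.value, agent.count)
```

Over many iterations, the estimated value of the better strategy should rise above the other one, which is the “becoming more effective over time” behavior described above, in miniature.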

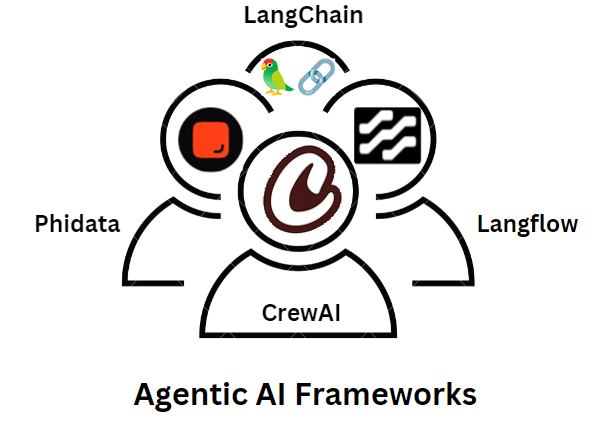

Frameworks for Building Agentic AI

When developing Agentic AI systems, choosing the right framework is crucial for efficiency, scalability, and integration with other technologies. Below are some popular frameworks for building AI agents; the two implementations later in this article use CrewAI and Phidata.

- CrewAI: A Python framework for orchestrating teams (“crews”) of role-based agents that collaborate on tasks. Its focus on natural language understanding, decision-making, and adaptability makes it a good choice for intelligent systems that plan and execute tasks in dynamic environments.

- Phidata: Phidata is designed for building agents that combine data-driven insights with decision-making. It can connect agents to large volumes of structured and unstructured data (knowledge bases, vector databases, storage) and perform real-time analysis, allowing agents to work more efficiently and in a more human-like way.

- OpenAI Gym: OpenAI Gym (now maintained as Gymnasium by the Farama Foundation) is widely used to develop reinforcement learning (RL) agents. It provides a standard interface to a wide range of environments for training and testing agents, making it a great option for agents that need to learn through interaction with their environment.

- Langflow: A visual, low-code builder that lets users define complex workflows graphically, manage multiple interactions, and connect agents to external systems.
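To illustrate the interaction interface that Gym popularized, here is a stdlib-only sketch that mirrors the Gymnasium contract (`reset()` returns `(observation, info)` and `step()` returns `(observation, reward, terminated, truncated, info)`) without importing the library. The number-guessing environment and the binary-search agent are hypothetical examples; with Gymnasium installed, you would create a real environment with `gymnasium.make("CartPole-v1")` and run the same kind of loop.

```python
import random
from typing import Any, Dict, Optional, Tuple


class GuessNumberEnv:
    """Toy environment following the Gymnasium-style reset/step contract (no gym import)."""

    def __init__(self, low: int = 0, high: int = 9, max_steps: int = 10):
        self.low, self.high, self.max_steps = low, high, max_steps
        self.rng = random.Random()
        self.target = low
        self.steps = 0

    def reset(self, seed: Optional[int] = None) -> Tuple[str, Dict[str, Any]]:
        if seed is not None:
            self.rng.seed(seed)
        self.target = self.rng.randint(self.low, self.high)
        self.steps = 0
        return "start", {}                       # (observation, info)

    def step(self, action: int) -> Tuple[str, float, bool, bool, Dict[str, Any]]:
        self.steps += 1
        if action == self.target:
            obs = "correct"
        else:
            obs = "too_low" if action < self.target else "too_high"
        terminated = obs == "correct"            # episode ended by reaching the goal
        truncated = not terminated and self.steps >= self.max_steps  # ran out of time
        reward = 1.0 if terminated else -0.1     # small penalty per wrong guess
        return obs, reward, terminated, truncated, {}


def run_episode(env: GuessNumberEnv, seed: int) -> Tuple[int, float]:
    # A simple agent that uses each observation to narrow its search (binary search).
    env.reset(seed=seed)
    lo, hi, total = env.low, env.high, 0.0
    while True:
        guess = (lo + hi) // 2
        obs, reward, terminated, truncated, _ = env.step(guess)
        total += reward
        if terminated or truncated:
            return guess, total
        if obs == "too_low":
            lo = guess + 1
        else:
            hi = guess - 1


env = GuessNumberEnv()
guess, total = run_episode(env, seed=3)
print(guess == env.target, round(total, 1))
```

Keeping the environment behind `reset()`/`step()` is what lets the same agent code be trained and tested against many different environments.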

Enough theory; let’s get our hands dirty with some code. Below, we walk through two Agentic AI system implementations in detail. We use OpenAI’s default models (which require paid OpenAI API access) as well as Google Gemini’s free-to-use LLM (gemini-2.0-flash-exp), so you can also build and run the agents at no cost. All of the code files are well structured and provided free of charge; learners can download them using the Download Code button on the article page.

AI Agents Implementation

DirectionX

Built with the CrewAI framework, this AI agent generates a well-structured learning roadmap for a topic given by the user. It goes through the relevant articles and content on learnopencv.com and then builds a roadmap that lists the article URLs, author and co-author details, publication dates, and article descriptions. Users can save the roadmap as a text file or a README (Markdown) file.

Before writing the agent’s code, we need a few API keys set up in the Python environment: a Serper API key (Serper provides a Google Search API) for the web search tool, and a Google Gemini API key and/or an OpenAI API key for the LLM. Instructions for generating these API keys are as follows –

- Download the code provided and open it in the VS Code editor.

- In your project directory, create a new file named .env (ensure it’s in the root directory of your project).

- To generate the Serper API key, visit the Serper website (https://serper.dev/), sign up or log in with your Google account, navigate to the API section, click Get API Key, and then copy the key provided for use in your application.

- Now, to generate the Google Gemini API key, visit Google AI Studio (https://aistudio.google.com), sign up or log in with your Google account, select a free Gemini LLM model (gemini-2.0-flash-exp), click Get API Key, and then copy the API Key along with the selected model name.

- If you want to use OpenAI models, copy your OpenAI API key as well. The second AI agent, discussed later in this article, is implemented with both an OpenAI LLM and a Google Gemini LLM.

- Add the following content to the .env file, replacing your_serper_api_key, your_gemini_api_key, and your_openai_api_key with your actual keys as follows:

```bash
GOOGLE_API_KEY="your_gemini_api_key"
SERPER_API_KEY="your_serper_api_key"
OPENAI_API_KEY="your_openai_api_key"
```

- Install all the Python libraries listed in the project’s requirements.txt file with the following CLI command:

```bash
pip install -r requirements.txt
```
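Before running anything, it can help to confirm that the keys were actually picked up. The following is a hypothetical helper (call it `check_env.py`; it is not part of the downloadable code) that reports keys which are missing, empty, or still set to the `your_...` placeholders. It assumes DirectionX needs GOOGLE_API_KEY and SERPER_API_KEY, as used in services.py and tools.py; pass OPENAI_API_KEY in `required` if you use the OpenAI variant.

```python
import os
from typing import List, Mapping, Sequence

# Assumption: DirectionX needs these two; add "OPENAI_API_KEY" for the OpenAI variant.
REQUIRED_KEYS = ("GOOGLE_API_KEY", "SERPER_API_KEY")


def missing_keys(env: Mapping[str, str], required: Sequence[str] = REQUIRED_KEYS) -> List[str]:
    # A key counts as missing if it is absent, empty, or still a "your_..." placeholder.
    return [key for key in required
            if not env.get(key) or env[key].startswith("your_")]


if __name__ == "__main__":
    try:
        from dotenv import load_dotenv  # python-dotenv, already used by the project
        load_dotenv()                   # copy the .env entries into os.environ
    except ImportError:
        print("python-dotenv is not installed; checking the current environment only")
    missing = missing_keys(os.environ)
    print("Missing keys:", ", ".join(missing) if missing else "none")
```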

With the Python environment set up, we create four Python files:

- services.py defines the agents: one for searching articles and one for generating the roadmap.

- tools.py defines the tools used by the tasks.

- tasks.py defines a task for each agent declared in services.py and assigns it to that agent.

- streamlining.py ties the whole process together, from the user’s input to the agents, their use of tools, and the final output.

Let’s start with the actual coding part.

services.py

```python
import os

from crewai import Agent
from dotenv import load_dotenv
from langchain_google_genai import ChatGoogleGenerativeAI

from tools import tool

# Load GOOGLE_API_KEY and the other keys from the .env file
load_dotenv()

## Call the Gemini model
llm = ChatGoogleGenerativeAI(
    model="gemini-2.0-flash-exp",
    verbose=True,
    temperature=0.5,
    google_api_key=os.getenv("GOOGLE_API_KEY"),
)

# Creating a Research agent with memory and verbose mode.
# Python joins adjacent string literals without a separator, so every
# fragment below ends with a space to keep the prompt's words apart.
article_researcher = Agent(
    role="Researcher",
    goal=(
        "Search for the articles related to the {topic} by searching exclusively on 'learnopencv.com'. "
        "And then you need to make the list of all relevant articles found about the topic and then make a list "
        "of all those articles along with the article titles, names of all authors and co-authors of the respective "
        "articles and also include the date of publication of those articles. The arrangement of articles should be "
        "in such a way that a beginner learner can refer to it and then start learning about the articles order-wise "
        "to learn about the topic from scratch till advanced knowledge."
    ),
    verbose=True,
    memory=True,
    backstory=(
        "You're at the forefront of AI and Computer Vision research. "
        "Your expertise is in searching the most relevant articles about the topic and making a list out of them. "
        "Our primary focus is identifying the most relevant articles from 'learnopencv.com'. "
        "Extract and provide the article titles, publication date and the names of all the authors and co-authors "
        "of all the relevant articles found from learnopencv.com. "
        "Make the list of all the relevant articles in such a way that they are arranged to create a well-structured "
        "roadmap out of it so that a beginner learner can refer to the roadmap and then start learning about the topic "
        "by referring the roadmap from beginning to the end."
    ),
    tools=[tool],
    llm=llm,
    allow_delegation=True,
)

## Creating the article writer agent with tools, responsible for writing the final roadmap
article_writer = Agent(
    role="Writer",
    goal=(
        "Generate the well-structured and organized roadmap about the {topic} by evaluating the meta-descriptions "
        "of the most relevant articles found on learnopencv.com, deciding which article to put first and which article "
        "to put after another, so that it will be a roadmap for a beginner learner to start learning about the topic "
        "by referring the roadmap from beginning to the end."
    ),
    verbose=True,
    memory=True,
    backstory=(
        "You generate the final well-structured and organized roadmap in the most professional way. "
        "Your generated roadmap should be insightful and must be based on "
        "the best-matching articles about the topic from learnopencv.com, and the order of the articles "
        "in the roadmap must be such that the first article is an introductory article on the "
        "topic and then the level of the articles gradually increases. "
        "The order of the articles in the roadmap must be such that the beginner learner "
        "will go through all the articles mentioned in the roadmap order-wise and will be able to learn "
        "everything about the topic from scratch till advanced knowledge as he/she continues with the roadmap. "
        "Ensure the roadmap includes the exact titles of the articles, their publication dates and the names of all "
        "the authors and co-authors of the articles found from learnopencv.com."
    ),
    tools=[tool],
    llm=llm,
    allow_delegation=False,
)
```

From the above code block, first, the environment variables, including the Gemini API key, are loaded using the dotenv module. The ChatGoogleGenerativeAI is initialized with the Gemini model and configured with specific settings. The article_researcher agent is tasked with searching ‘learnopencv.com’ for relevant articles and extracting necessary article info. The article_writer agent uses the research data from the previous agent to create an organized roadmap. It evaluates the articles’ meta-descriptions and ensures the content is arranged logically from beginner to advanced levels.

tools.py

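```python
import os

from crewai_tools import SerperDevTool
from dotenv import load_dotenv

# Copy the entries of .env (including SERPER_API_KEY) into os.environ
load_dotenv()

# Fail early with a clear message instead of a TypeError when the key is missing
if not os.getenv("SERPER_API_KEY"):
    raise RuntimeError("SERPER_API_KEY is not set; add it to your .env file")

# SerperDevTool reads SERPER_API_KEY from the environment
tool = SerperDevTool()
```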

In the above code block, the environment variables are loaded from the .env file with the dotenv module, which places SERPER_API_KEY in the process environment, and the SerperDevTool is then initialized and assigned to the tool variable so that the agents can query the Serper API. Storing the API key in environment variables rather than hardcoding it in the script keeps it out of the source code.
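SerperDevTool hides the HTTP call behind a tool interface. As a rough sketch of what such a search looks like (an assumption based on Serper’s public POST endpoint, not SerperDevTool’s internal code), the request below sends the query as JSON with the key in an `X-API-KEY` header. It also shows a dependable way to keep results on learnopencv.com: Google’s `site:` operator inside the query itself. The function names are hypothetical.

```python
import json
import os
import urllib.request
from typing import List, Tuple

SERPER_URL = "https://google.serper.dev/search"


def build_serper_request(query: str, site: str = "learnopencv.com") -> urllib.request.Request:
    # Google's "site:" operator limits the results to a single domain.
    payload = {"q": f"site:{site} {query}"}
    return urllib.request.Request(
        SERPER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"X-API-KEY": os.getenv("SERPER_API_KEY", ""),
                 "Content-Type": "application/json"},
        method="POST",
    )


def search(query: str) -> List[Tuple[str, str]]:
    # Needs network access and a valid SERPER_API_KEY in the environment.
    with urllib.request.urlopen(build_serper_request(query), timeout=30) as response:
        results = json.load(response)
    # "organic" holds the regular web results; keep (title, link) pairs.
    return [(item.get("title", ""), item.get("link", "")) for item in results.get("organic", [])]


if __name__ == "__main__" and os.getenv("SERPER_API_KEY"):
    for title, link in search("YOLO"):
        print(title, link)
```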

tasks.py

```python
from crewai import Task

from services import article_researcher, article_writer
from tools import tool

# Research task (each fragment ends with a space so the sentences don't run together)
research_task = Task(
    description=(
        "Identify the most relevant articles about the {topic} from learnopencv.com. "
        "Create a well-structured roadmap for a beginner learner so that he/she can go "
        "through all individual articles one-by-one to completely know about the topic from "
        "scratch till advanced knowledge about the topic. "
        "Include the article title, date and authors and co-authors for all the articles found "
        "on learnopencv.com about the topic for creating the roadmap. "
        "Make sure not to repeat any article in the roadmap."
    ),
    expected_output=(
        "List of all the relevant articles with the article titles, published date "
        "and all authors and co-authors information in order so that a beginner learner "
        "can go through all articles order-wise to learn about the topic from scratch "
        "till advanced knowledge."
    ),
    tools=[tool],
    agent=article_researcher,
    # Restricting search to learnopencv.com. Hedged: `sources` does not appear among
    # CrewAI's documented Task attributes, so the restriction is mainly enforced by the prompts.
    sources=["https://learnopencv.com"],
)

# Writing task; its output is also saved to a Markdown file
write_task = Task(
    description=(
        "Compose a well-structured roadmap for the {topic}. "
        "Focus on all important articles related to the topic. "
        "Extract the meta-description of all the articles found and then arrange the articles in a "
        "way so that the beginner learner can start learning about the topic by starting from the start of the roadmap. "
        "Ensure that the roadmap includes all author and co-author details and date of publication for all the "
        "articles to be put in the roadmap found from learnopencv.com. "
        "Make sure to include the names of all the authors and co-authors and publication dates too. "
        "The roadmap must be well-structured and organised in a manner such that the beginner learner can directly look at it "
        "and then can directly look for the article which he/she wants to look upon."
    ),
    expected_output=(
        "A well-structured roadmap for a beginner learner to refer to, on the {topic} found "
        "on learnopencv.com, and the roadmap must also include article titles, date and author "
        "information of all the articles to be structured in the roadmap."
    ),
    tools=[tool],
    agent=article_writer,
    async_execution=False,
    output_file="Roadmap.md",  # Example of output customization
)
```

In the above code block, two tasks, a research task and a writing task, are declared with the Task class from crewai and assigned to the agents declared in services.py. The research_task, handled by the article_researcher agent, identifies relevant articles about the topic on learnopencv.com and orders them into a roadmap for a beginner learner, while the write_task, handled by the article_writer agent, turns those findings into a well-structured roadmap and saves it to a Markdown file (Roadmap.md).

streamlining.py

```python
from crewai import Crew, Process

from services import article_researcher, article_writer
from tasks import research_task, write_task

crew = Crew(
    agents=[article_researcher, article_writer],
    tasks=[research_task, write_task],
    process=Process.sequential,  # research_task runs first, then write_task
)

## Starting the Task Execution process
# Replace with the topic you want a roadmap for; it fills every {topic} placeholder.
result = crew.kickoff(inputs={"topic": "Stable Diffusion"})
print(result)
```

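The above code block defines the execution flow for the two previously created tasks, research_task and write_task, using the Crew class from crewai. Setting process=Process.sequential makes the tasks run one after another, in the order they are listed, and the topic passed to kickoff() through inputs fills the {topic} placeholders in the agents’ and tasks’ prompts.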
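Conceptually, the sequential process behaves like the hypothetical mini-runner below: the `{topic}` placeholders are filled from the `inputs` dictionary, and each task receives the previous task’s output as context. `MiniTask` and `kickoff_sequential` are invented names for illustration and are not CrewAI’s implementation, which also handles agents, tools, and LLM calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class MiniTask:
    description: str                    # may contain {placeholders} such as {topic}
    run: Callable[[str, str], str]      # (filled-in description, context) -> output


def kickoff_sequential(tasks: List[MiniTask], inputs: Dict[str, str]) -> str:
    """Hypothetical mini-runner: fill placeholders from `inputs` and pass each
    task's output to the next task as context."""
    context = ""
    for task in tasks:
        description = task.description.format(**inputs)
        context = task.run(description, context)
    return context  # the last task's output is the crew's final result


research = MiniTask("Find articles about {topic}",
                    lambda desc, ctx: f"[articles for: {desc}]")
write = MiniTask("Write a roadmap for {topic}",
                 lambda desc, ctx: f"{desc} using {ctx}")
print(kickoff_sequential([research, write], {"topic": "YOLO"}))
# Write a roadmap for YOLO using [articles for: Find articles about YOLO]
```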

After creating the four files, set the topic in the streamlining.py file and run it with python streamlining.py.

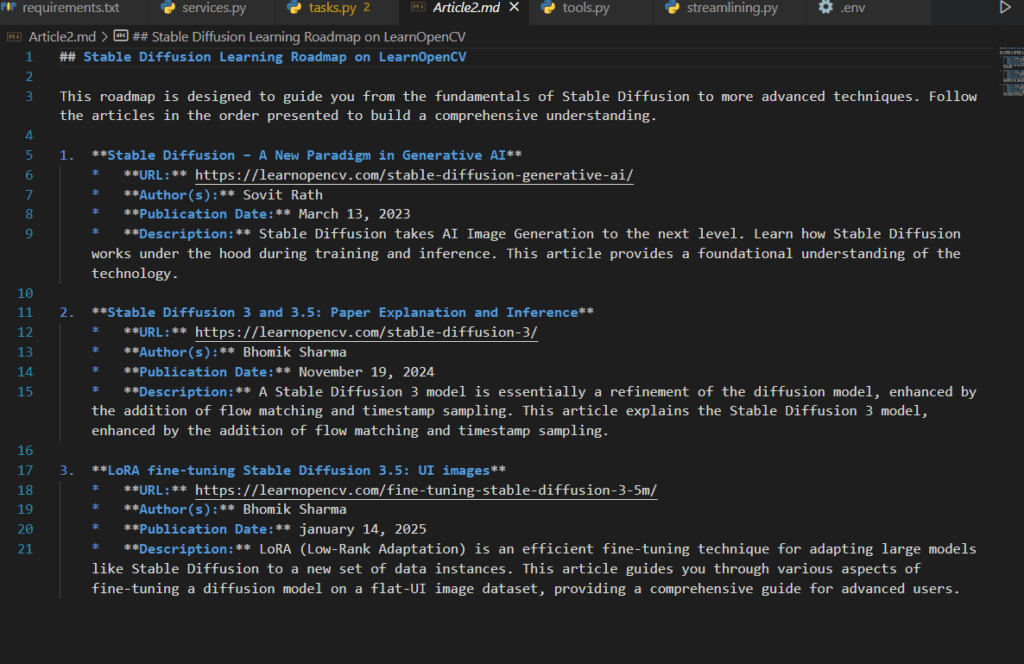

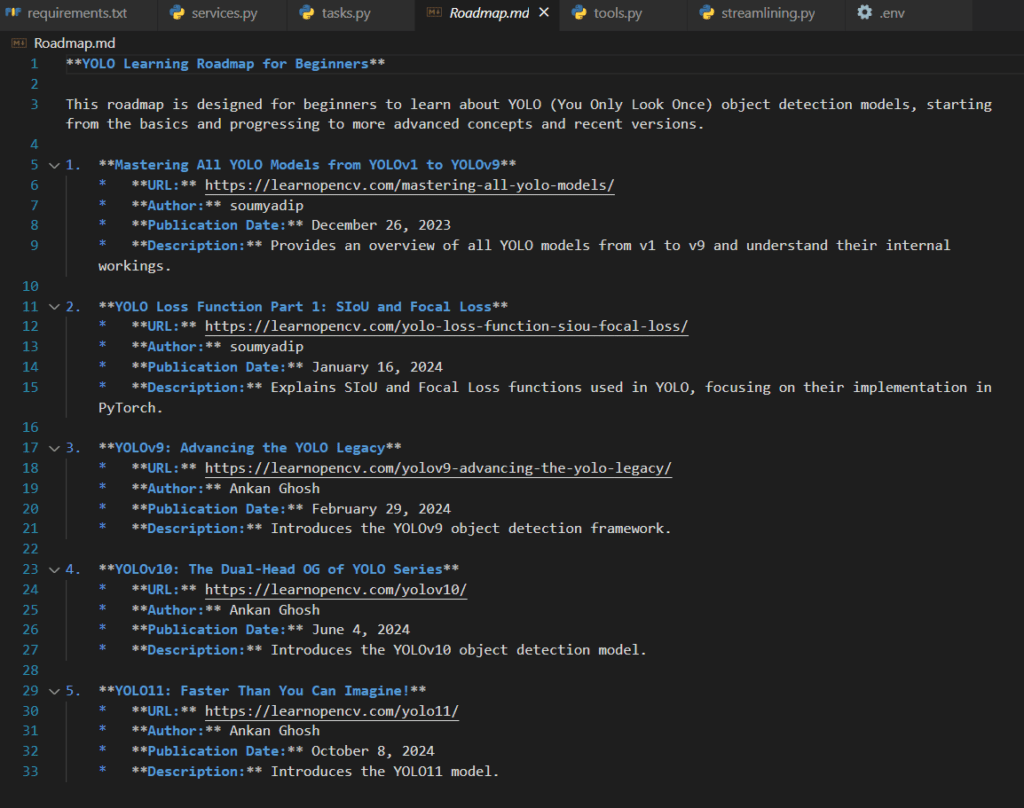

The topic and the output generated are as follows –

Topic – Stable Diffusion

Topic – YOLO

As can be inferred, our AI agent is performing well in creating the roadmap for the specified topics.

SmartRetriever

DirectionX relies on an LLM and web search to produce its answers. SmartRetriever shifts gears: it is a retrieval-augmented agent grounded in a website chosen by the user. This agent scrapes a website (whose URL is provided by the user), stores the scraped data in a .txt file, embeds it into a vector database (ChromaDB), and then lets users query that data from the command-line interface (CLI) through LLM-powered responses. The scraped data, the vector store, and the chat sessions all stay on the user’s own system, while the LLM and embedding calls go to the chosen model provider.

For SmartRetriever, we will be using both CrewAI (for web-scraping and storing the scraped data) and Phidata frameworks (to create the vector database and then integrate the scraped data with LLM so that the user can ask queries from the Knowledge Base generated out of the vector database) along with the default OpenAI LLM as well as a free Gemini one.

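The RAG-based SmartRetriever also requires Docker Desktop on the local system. The Docker container runs a PostgreSQL database (with the pgvector extension), which the agent uses to store its sessions and chat history locally; the scraped text itself is embedded into a local ChromaDB store.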

To start the database, run the following command in your CLI to create the Docker container –

```bash
docker run -d \
  -e POSTGRES_DB=ai \
  -e POSTGRES_USER=ai \
  -e POSTGRES_PASSWORD=ai \
  -e PGDATA=/var/lib/postgresql/data/pgdata \
  -v pgvolume:/var/lib/postgresql/data \
  -p 5532:5432 \
  --name pgvector \
  phidata/pgvector:16
```

The Python environment is set up the same way as for DirectionX. The file structure is simpler this time, with only three Python files:

- scraper.py scrapes the contents of the URL provided by the user and stores the well-structured scraped data in a .txt file.

- interaction_using_openai.py stores the contents of the .txt file in a vector database, builds a Knowledge Base from the vector data, and lets the user query the Knowledge Base from the CLI on their local system using the default OpenAI model.

- interaction_using_gemini_free.py works like interaction_using_openai.py, with minor changes to use the free Google Gemini model with the Knowledge Base.

scraper.py

```python
import os

from crewai import Agent, Crew, Task
from crewai_tools import ScrapeWebsiteTool
from dotenv import load_dotenv
from langchain_google_genai import ChatGoogleGenerativeAI
from langchain_openai import ChatOpenAI  # only needed if you swap in an OpenAI model

load_dotenv()

## Call the Gemini model
llm = ChatGoogleGenerativeAI(
    model="gemini-2.0-flash-exp",
    verbose=True,
    temperature=0.5,
    google_api_key=os.getenv("GOOGLE_API_KEY"),
)

# Instantiate tools: the page to scrape
site = "https://docs.opencv.org/3.4/d9/d25/group__surface__matching.html"
web_scrape_tool = ScrapeWebsiteTool(website_url=site)

# Create agents
web_scraper_agent = Agent(
    role="Web Scraper",
    goal="Effectively scrape data on the websites to use it with LLM",
    backstory=(
        "You are an expert web scraper. Your job is to scrape all the data "
        "so that the scraped data can be integrated with an LLM."
    ),
    tools=[web_scrape_tool],
    verbose=True,
    llm=llm,
)

# Define tasks
web_scraper_task = Task(
    description="Scrape all the data on the site to use it for decision making.",
    expected_output="All the content of the website.",
    agent=web_scraper_agent,
    output_file="data.txt",
)

# Assemble a crew
crew = Crew(
    agents=[web_scraper_agent],
    tasks=[web_scraper_task],
    verbose=True,
)

# Execute tasks
result = crew.kickoff()
print(result)

# kickoff() may return a CrewOutput object rather than a str, so convert it
# before writing; the RAG scripts read this file.
with open("results.txt", "w", encoding="utf-8") as f:
    f.write(str(result))
```

The ChatGoogleGenerativeAI class is instantiated with the gemini-2.0-flash-exp model, and the Google API key is loaded from the environment. The ScrapeWebsiteTool is initialized with the URL of the OpenCV surface-matching documentation page, and the web_scraper_agent uses it to scrape the page. The web_scraper_task asks the agent to scrape all content from the site and saves the task output to data.txt. The Crew class assembles the agent and task, crew.kickoff() starts the execution, and the final result is also written to results.txt, which the RAG scripts read. Together, these steps automate scraping and processing website data with the LLM through a predefined workflow.
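For intuition about what the scraping step extracts, here is a stdlib-only approximation that downloads a page and keeps its visible text while skipping `<script>` and `<style>` contents. It is an assumption-laden sketch, not ScrapeWebsiteTool’s implementation, and it involves no LLM; in the CrewAI version, the agent’s LLM writes the final answer, so results.txt contains the model’s rendering of the scraped content rather than the raw page text. `TextExtractor`, `html_to_text`, and `scrape` are hypothetical names.

```python
from html.parser import HTMLParser
from typing import List
from urllib.request import urlopen


class TextExtractor(HTMLParser):
    """Collects the visible text of a page, skipping <script> and <style> contents."""

    SKIP = {"script", "style"}

    def __init__(self) -> None:
        super().__init__()
        self.parts: List[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def html_to_text(html: str) -> str:
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.parts)


def scrape(url: str, out_path: str = "results.txt") -> str:
    # Needs network access; writes the text where the RAG scripts expect it.
    with urlopen(url, timeout=30) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        html = response.read().decode(charset, errors="replace")
    text = html_to_text(html)
    with open(out_path, "w", encoding="utf-8") as f:
        f.write(text)
    return text


print(html_to_text("<h1>Surface Matching</h1><script>var x = 1;</script><p>PPF detector</p>"))
# prints two lines: "Surface Matching" and "PPF detector"
```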

interaction_using_openai.py

```python
from typing import List, Optional

import typer
from dotenv import load_dotenv
from phi.agent import Agent
from phi.knowledge.text import TextKnowledgeBase
from phi.storage.agent.postgres import PgAgentStorage
from phi.vectordb.chroma import ChromaDb

load_dotenv()

# PostgreSQL in the pgvector Docker container (host port 5532, user/password/db "ai")
db_url = "postgresql+psycopg://ai:ai@localhost:5532/ai"

knowledge_base = TextKnowledgeBase(
    path="/home/opencvuniv/RAG_Agent/results.txt",  # change to where scraper.py saved results.txt
    # Chroma collection "text_documents", persisted on disk under `path`
    vector_db=ChromaDb(
        collection="text_documents",
        path="/home/opencvuniv/RAG_Agent/",
    ),
)
# Embed and index the text file (using the default OpenAI embedder)
knowledge_base.load()

# Agent sessions and chat history are stored in PostgreSQL
storage = PgAgentStorage(table_name="rag_agent", db_url=db_url)


def rag_agent(new: bool = False, user: str = "user"):
    session_id: Optional[str] = None

    # Resume an existing session for this user unless --new is passed
    if not new:
        existing_sessions: List[str] = storage.get_all_session_ids(user)
        if len(existing_sessions) > 0:
            session_id = existing_sessions[0]

    agent = Agent(
        session_id=session_id,
        user_id=user,
        knowledge_base=knowledge_base,
        storage=storage,
        # Show tool calls in the response
        show_tool_calls=True,
        # Enable the agent to search the knowledge base
        search_knowledge=True,
        # Enable the agent to read the chat history
        read_chat_history=True,
    )

    if session_id is None:
        session_id = agent.session_id
        print(f"Started Run: {session_id}\n")
    else:
        print(f"Continuing Run: {session_id}\n")

    # Interactive question-answer loop in the terminal
    agent.cli_app(markdown=True)


if __name__ == "__main__":
    typer.run(rag_agent)
```

The above code block defines a RAG (Retrieval-Augmented Generation) agent using the phi (Phidata) library, enabling interactive querying and response generation based on a knowledge base and persistent storage. The knowledge base is initialized with the path to a text file (results.txt) and a vector database (ChromaDb) that stores and retrieves the relevant document chunks. Because no embedder is specified, the default OpenAIEmbedder is used to embed the data for similarity search, which is why this version relies on an OpenAI API key. Calling knowledge_base.load() loads the documents so they can be queried. PgAgentStorage stores the agent's session data, such as run information, in a PostgreSQL database. The rag_agent function creates an agent that either starts a new session or continues an existing one, depending on the session_id. The agent can search the knowledge base, retrieve information, and interact with the user. The cli_app method starts the interactive session, in which the agent uses the knowledge base and chat history to provide relevant answers.
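Under the hood, search_knowledge lets the agent run a similarity search over the embedded chunks of results.txt. The sketch below illustrates that retrieval step with the standard library only: it splits text into chunks, embeds each chunk with a toy bag-of-words vector (a deliberate simplification standing in for OpenAIEmbedder, which produces dense neural embeddings), and ranks chunks by cosine similarity to the query. chunk_text, embed, cosine, and retrieve are hypothetical helpers, not Phidata or ChromaDB APIs.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def chunk_text(text: str, max_words: int = 40) -> List[str]:
    """Split text into chunks of at most max_words words (a simple fixed-size chunker)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Dict[str, int]:
    """Toy embedding: lowercase word counts (a stand-in for a neural embedder)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Dict[str, int], b: Dict[str, int]) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b.get(token, 0) for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: List[str], top_k: int = 2) -> List[Tuple[float, str]]:
    """Return the top_k chunks most similar to the query, best first."""
    q = embed(query)
    scored = [(cosine(q, embed(chunk)), chunk) for chunk in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    docs = [
        "cv2.imread loads an image from a file.",
        "cv2.GaussianBlur is used to blur an image with a Gaussian kernel.",
        "cv2.VideoCapture opens a camera or a video file.",
    ]
    for score, chunk in retrieve("How do I blur an image?", docs):
        print(f"{score:.3f}  {chunk}")
```

The retrieved chunks are what the agent adds to the LLM prompt before answering; a real embedder also matches paraphrases (for example "smooth" and "blur"), which word counts cannot.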

interaction_using_gemini_free.py

import typer
import os
from typing import Optional, List
from phi.agent import Agent
from phi.knowledge.text import TextKnowledgeBase
from phi.storage.agent.postgres import PgAgentStorage
from phi.vectordb.chroma import ChromaDb
from phi.embedder.google import GeminiEmbedder
from phi.model.google import Gemini
from dotenv import load_dotenv, find_dotenv

load_dotenv(find_dotenv())
api_key = os.getenv('GOOGLE_API_KEY')

db_url = "postgresql+psycopg://ai:ai@localhost:5532/ai"

knowledge_base = TextKnowledgeBase(
    path="/home/opencvuniv/RAG_Agent/results.txt",
    # Chroma collection name: text_documents
    vector_db=ChromaDb(
        collection="text_documents",
        path="/home/opencvuniv/RAG_Agent/",
        # Pass the embedder explicitly; otherwise ChromaDb defaults to OpenAIEmbedder
        embedder=GeminiEmbedder(api_key=api_key),
    ),
)
knowledge_base.load()

storage = PgAgentStorage(table_name="rag_agent", db_url=db_url)


def rag_agent(new: bool = False, user: str = "user"):
    session_id: Optional[str] = None

    if not new:
        existing_sessions: List[str] = storage.get_all_session_ids(user)
        if len(existing_sessions) > 0:
            session_id = existing_sessions[0]

    agent = Agent(
        session_id=session_id,
        user_id=user,
        # Use Google Gemini as the LLM; the API key is a setting of the model, not of the Agent
        model=Gemini(id="gemini-2.0-flash-exp", api_key=api_key),
        # phi's Agent receives the knowledge base through the `knowledge` argument
        knowledge=knowledge_base,
        storage=storage,
        # Show tool calls in the response
        show_tool_calls=True,
        # Enable the agent to search the knowledge base
        search_knowledge=True,
        # Enable the agent to read the chat history
        read_chat_history=True,
    )

    if session_id is None:
        session_id = agent.session_id
        print(f"Started Run: {session_id}\n")
    else:
        print(f"Continuing Run: {session_id}\n")

    agent.cli_app(markdown=True)


if __name__ == "__main__":
    typer.run(rag_agent)

In the above code block, the functionality is the same except for a few modifications. The GeminiEmbedder is used to embed the data for similarity search. It has to be passed explicitly to the vector_db through the embedder argument, together with the api_key (holding the free Gemini model's API key); otherwise ChromaDb falls back to the default OpenAIEmbedder. Similarly, passing a Gemini model with id="gemini-2.0-flash-exp" to the Agent's model parameter ensures that Google Gemini's LLM, rather than the default OpenAI model, executes the whole agentic flow.
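The embedder has to be chosen explicitly because a vector database can only compare vectors produced by the same embedding model: documents indexed with one embedder cannot be meaningfully searched with query vectors from another, and the vector lengths often differ. The minimal sketch below makes that constraint explicit with a hypothetical in-memory store that is bound to a single embedder, records its dimension, and rejects mismatched vectors. TinyVectorStore and make_fake_embedder are illustrative assumptions, not Phidata or ChromaDB classes, and the dimensions used are arbitrary.

```python
import math
from typing import Callable, List, Sequence, Tuple

Embedder = Callable[[str], List[float]]


def make_fake_embedder(dim: int) -> Embedder:
    """Hypothetical embedder: hashes characters into a fixed-length, L2-normalized vector."""
    def embed(text: str) -> List[float]:
        vec = [0.0] * dim
        for i, ch in enumerate(text.lower()):
            vec[(ord(ch) + i) % dim] += 1.0
        norm = math.sqrt(sum(v * v for v in vec)) or 1.0
        return [v / norm for v in vec]
    return embed


class TinyVectorStore:
    """In-memory store bound to one embedder, like a vector DB configured with one embedder."""

    def __init__(self, embedder: Embedder, dim: int):
        self.embedder = embedder
        self.dim = dim
        self.rows: List[Tuple[List[float], str]] = []

    def _check(self, vec: Sequence[float]) -> None:
        if len(vec) != self.dim:
            raise ValueError(f"expected a {self.dim}-dim vector, got {len(vec)}")

    def add(self, text: str) -> None:
        vec = self.embedder(text)
        self._check(vec)
        self.rows.append((vec, text))

    def search_vector(self, query_vec: Sequence[float], top_k: int = 1) -> List[str]:
        self._check(query_vec)
        # Vectors are normalized, so the dot product equals cosine similarity
        scored = sorted(self.rows, key=lambda row: -sum(a * b for a, b in zip(row[0], query_vec)))
        return [text for _, text in scored[:top_k]]

    def search(self, query: str, top_k: int = 1) -> List[str]:
        # Queries go through the same embedder that indexed the documents
        return self.search_vector(self.embedder(query), top_k)


if __name__ == "__main__":
    store = TinyVectorStore(make_fake_embedder(8), dim=8)
    store.add("opencv reads images")
    print(store.search("opencv reads images"))
    other = make_fake_embedder(16)  # a different "model" with a different output size
    try:
        store.search_vector(other("opencv reads images"))
    except ValueError as err:
        print("rejected:", err)
```

A dimension check only catches the most obvious mismatch; two different models with the same output size would pass it and still return meaningless similarities, which is why the embedder belongs to the vector database configuration.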

The overall execution flow for the SmartRetriever RAG AI Agent is summarized in the video attached below; the results are quite impressive.

After setting everything up, first run the scraper.py file with the CLI command python scraper.py; it uses the CrewAI framework to scrape the website and store the scraped data. The final step uses the Phidata framework: set the scraped data file's path in either interaction_using_openai.py or interaction_using_gemini_free.py, depending on your preference, and then run python interaction_using_openai.py or python interaction_using_gemini_free.py in the CLI. Both scripts also expect a PostgreSQL database to be reachable at the configured db_url, since PgAgentStorage stores the sessions there. The user can then interact with the knowledge base built from the scraped content stored in results.txt. The whole sequential process is shown in the video above.
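Because typer.run(rag_agent) turns the function's parameters into command-line options, the same script can either resume the user's stored session or start a fresh one with --new, and --user selects whose sessions are used. The sketch below reproduces that session-selection logic with argparse and an in-memory dictionary in place of PgAgentStorage. SESSIONS, select_session, and main are hypothetical names used only for illustration, and the flags approximate, rather than replicate, the options Typer generates.

```python
import argparse
import uuid
from typing import Dict, List, Optional

# Hypothetical in-memory stand-in for PgAgentStorage: user id -> stored session ids
SESSIONS: Dict[str, List[str]] = {}


def select_session(user: str, new: bool) -> str:
    """Reuse the user's first stored session unless a new one is requested (mirrors rag_agent)."""
    session_id: Optional[str] = None
    if not new:
        existing = SESSIONS.get(user, [])
        if existing:
            session_id = existing[0]
    if session_id is None:
        # The real Agent generates its own session_id; a random UUID plays that role here
        session_id = str(uuid.uuid4())
        SESSIONS.setdefault(user, []).append(session_id)
        print(f"Started Run: {session_id}")
    else:
        print(f"Continuing Run: {session_id}")
    return session_id


def main(argv: Optional[List[str]] = None) -> str:
    parser = argparse.ArgumentParser(description="Toy RAG agent session selector")
    parser.add_argument("--new", action="store_true", help="start a new session")
    parser.add_argument("--user", default="user", help="user id whose sessions are used")
    args = parser.parse_args(argv)
    return select_session(args.user, args.new)


if __name__ == "__main__":
    first = main(["--user", "alice"])   # no stored session yet, so a new one starts
    again = main(["--user", "alice"])   # resumes the stored session
    print(again == first)
    main(["--user", "alice", "--new"])  # forces a fresh session
```

With the real scripts, the equivalent invocation would be along the lines of python interaction_using_gemini_free.py --new to begin a new conversation instead of continuing the previous one.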

Conclusion

Agentic AI is transforming the landscape of intelligent systems, shifting from traditional reactive models to proactive, autonomous agents. With capabilities like autonomy, proactivity, and adaptability, these systems can make decisions, plan actions, and evolve based on real-time feedback, enabling them to handle complex, dynamic tasks.

The frameworks available for developing AI agents, such as CrewAI, Phidata, and Langflow, provide powerful tools for building autonomous systems capable of interacting with both humans and other agents. Agentic AI offers immense potential to automate tasks, streamline workflows, and drive intelligent decision-making. As this technology evolves, it will continue to redefine the role of AI in our daily lives and industries, turning machines into capable collaborators and decision-makers.

References

- Building Effective Agents by Anthropic

- CrewAI Framework’s Documentation

- Phidata Framework’s Documentation

- E-book Agentic AI
