The backbone of every computer vision application is its data. The quality of the data determines how well the final application will perform. Needless to say, we sometimes have to collect and annotate the data ourselves to build the best computer vision application. For this purpose, the CVAT annotation tool is one of the leading applications for labeling computer vision datasets. In this article, we explore the annotation techniques that CVAT provides.

The CVAT annotation tool is an image and video annotation toolset distributed by OpenCV. Anyone can create an account on CVAT.ai and label their data for free. CVAT can annotate images or video frames using rectangles (bounding boxes), polygons (masks), keypoints, ellipses, polylines, and more. CVAT also supports an extensive list of annotation formats for exporting dataset labels.

Note: It is recommended to run CVAT on the latest version of the Google Chrome browser.

- Capabilities of CVAT Annotation Tool

- Using CVAT Annotation Tool

- Create Bounding Boxes using CVAT Annotation Tool

- Create Polygon Masks using CVAT Annotation Tool

- Keypoint Annotations using CVAT Annotation Tool

- Simplify Video Annotation using CVAT Annotation Tool

- OpenCV Tools and AI Assistance in CVAT

- Local and AWS Deployment

- Deploying SAM with GPU Support

- Conclusion

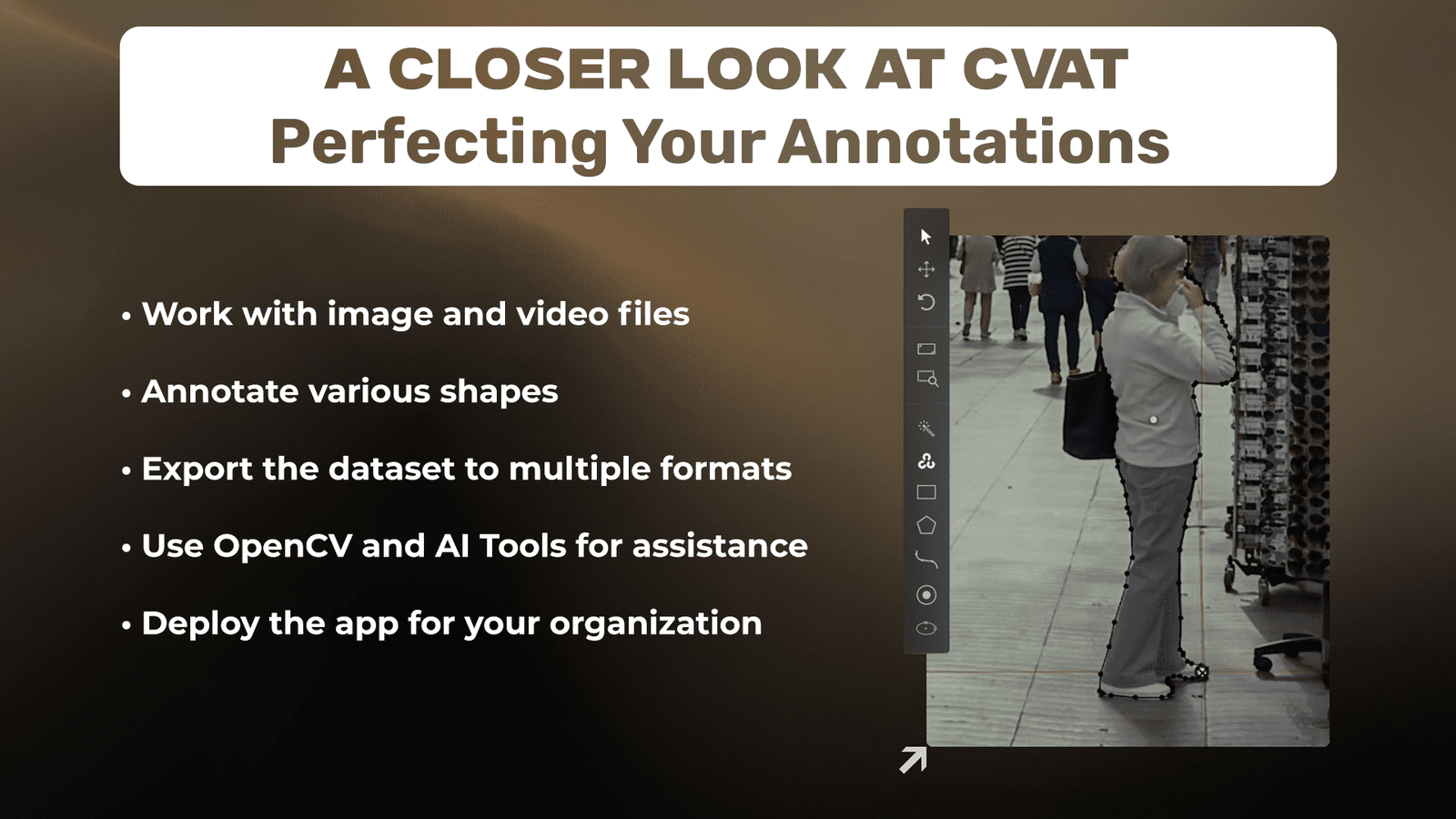

Capabilities of CVAT Annotation Tool

CVAT Annotation Tool is one of the leading applications for annotating your data. To test it out, you can use the web app CVAT.ai, which offers most of the functionality, with some limitations. CVAT can also be deployed locally or by an organization. Currently you can:

- Work with image and video files

- Annotate various shapes

- Export the dataset to multiple formats

- Use OpenCV and AI Tools for assistance

- Deploy the app for your organization

Using CVAT Annotation Tool

Log in to CVAT.ai using Google or GitHub, or create an account using email. Data on CVAT is organized into organizations, projects, tasks, and jobs. Projects nest under organizations, and tasks nest under projects. Each task is split into multiple jobs depending on the amount of data.
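To make the task-to-job split concrete, here is a minimal Python sketch. It assumes a task is cut into contiguous frame ranges of a fixed maximum size; the `Job` class and the `segment_size` parameter are hypothetical names used for illustration and are not taken from CVAT's code.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Job:
    """One unit of work: a contiguous, inclusive range of frames from a task."""
    start_frame: int
    stop_frame: int


def split_task_into_jobs(num_frames: int, segment_size: int) -> List[Job]:
    """Split a task of `num_frames` frames into jobs of at most `segment_size` frames.

    Assumption: jobs are contiguous and the last one may be shorter.
    """
    if num_frames < 0 or segment_size <= 0:
        raise ValueError("num_frames must be >= 0 and segment_size must be > 0")
    jobs = []
    for start in range(0, num_frames, segment_size):
        stop = min(start + segment_size, num_frames) - 1
        jobs.append(Job(start, stop))
    return jobs


# A 250-frame task with 100 frames per job yields three jobs.
jobs = split_task_into_jobs(num_frames=250, segment_size=100)
for job in jobs:
    print(job)
```

Each resulting job can then be assigned to a different annotator or reviewer, which is what makes the hierarchy useful for teams.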

Multiple users can be invited to an organization. These users can annotate together. The jobs, tasks, and projects can be assigned to other users to either annotate or review the annotations.

Object labels can be created on the project page or the task page. Enter the object name, set up the label appearance, and (optionally) restrict the shape of the label.

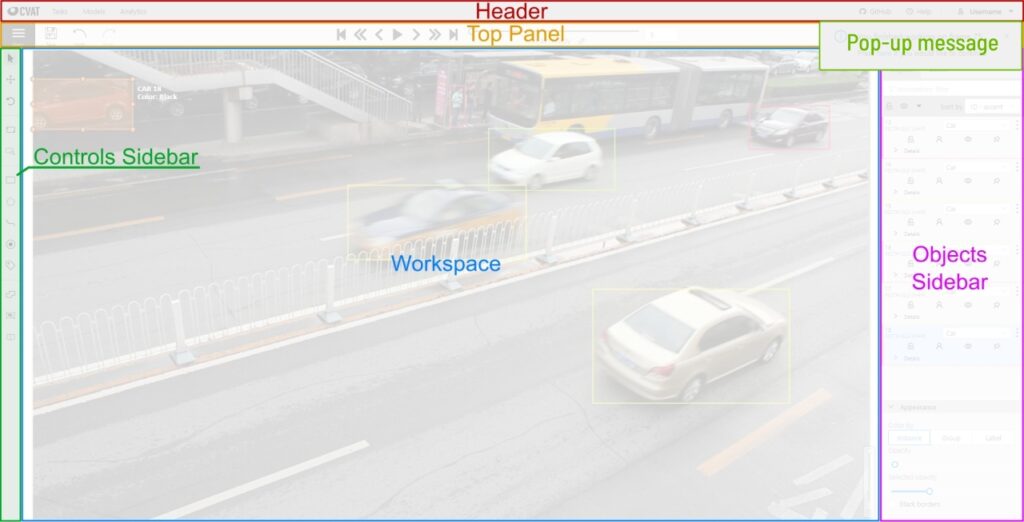

The annotations page interface consists of the following:

- Header – always pinned at the top; it helps you navigate to different sections of CVAT.

- Top panel – used to browse through the data.

- Controls sidebar – holds helper tools such as zoom, and the annotation shapes such as rectangles and keypoints.

- Objects sidebar – hosts the objects in the frame, the labels in the project, the appearance settings, and annotation reviews.

- Workspace – displays the images.

- Important messages, such as server responses and save confirmations, appear as pop-ups.

Annotating is a tedious process, and using a mouse for everything quickly becomes exhausting. To make things easier, CVAT accepts keyboard input and offers a large set of keyboard shortcuts that help us annotate images faster.

You can export the annotations from CVAT in multiple formats. The suitable format depends on the type of annotation (for example, rectangle or keypoint) and on whether the data is image or video. Save your work, open the menu, and click on export dataset.

Create Bounding Boxes Using CVAT Annotation Tool

Bounding boxes are used to localize objects in an image, a problem known as object detection. CVAT lets you label objects with rectangles for object detection tasks.

There are two ways to annotate a bounding box: the two-point method and four-point method.

- Two-point – bounding boxes are created using two opposite corners of the rectangle.

- Four-point – bounding boxes are created using the top-most, bottom-most, left-most and right-most points of the object.
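Both methods end up describing the same axis-aligned rectangle. The following Python sketch shows how each set of clicks could be turned into an `(xmin, ymin, xmax, ymax)` box. The function names are hypothetical, and image coordinates are assumed, with y growing downwards.

```python
from typing import Tuple

Point = Tuple[float, float]
Box = Tuple[float, float, float, float]  # (xmin, ymin, xmax, ymax)


def box_from_two_points(p1: Point, p2: Point) -> Box:
    """Build a box from two opposite corners, clicked in any order."""
    (x1, y1), (x2, y2) = p1, p2
    return (min(x1, x2), min(y1, y2), max(x1, x2), max(y1, y2))


def box_from_extreme_points(top: Point, bottom: Point, left: Point, right: Point) -> Box:
    """Build a box from the top-most, bottom-most, left-most and right-most points."""
    # y grows downwards in image coordinates, so the top-most point has the smallest y.
    return (left[0], top[1], right[0], bottom[1])


two_point_box = box_from_two_points((120, 40), (30, 200))
four_point_box = box_from_extreme_points(
    top=(70, 40), bottom=(90, 200), left=(30, 110), right=(120, 95)
)
print(two_point_box)
print(four_point_box)
```

The four-point method takes more clicks, but each click lands on the object itself, which can be easier than guessing where an empty corner should go.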

To create a bounding box, click on the rectangle tool in the controls sidebar. Select the object label, and click on the shape button. Two red guiding lines will appear on the screen to help you place the points. The resulting boxes can be resized and rotated.

Create Polygon Masks using CVAT Annotation Tool

Polygon annotations, or masks, are used for semantic and instance segmentation tasks. They precisely localize the objects in the image.

Create a mask by selecting the polygon tool from the controls sidebar. Then select the label, and click on the shape button. You can optionally set the number of points for the annotation, in which case the object is created as soon as that many points have been drawn.

To start annotating, click on the edge of the object. This will create the starting point. Now select a direction, and start making line segments, traversing the edge of the object. A polygon annotation specifies the starting point and the direction of the annotation.
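To see why the starting point and direction matter, the sketch below computes a polygon's drawing direction with the shoelace formula and reorders a second polygon to match the first. This is only an illustration of the idea, not CVAT's internal logic, and the function names are hypothetical.

```python
def signed_area(points):
    """Shoelace formula. With image coordinates (y grows downwards),
    a positive result means the points run clockwise on screen."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return total / 2.0


def match_start_and_direction(points, reference):
    """Reorder `points` so that it runs in the same direction as `reference`
    and starts at the vertex closest to the reference's starting point."""
    pts = list(points)
    if (signed_area(pts) > 0) != (signed_area(reference) > 0):
        pts.reverse()
    sx, sy = reference[0]
    start = min(
        range(len(pts)),
        key=lambda i: (pts[i][0] - sx) ** 2 + (pts[i][1] - sy) ** 2,
    )
    return pts[start:] + pts[:start]


reference = [(0, 0), (10, 0), (10, 10), (0, 10)]   # clockwise, starts top-left
redrawn = [(11, 11), (11, 1), (1, 1), (1, 11)]     # counter-clockwise, starts bottom-right
aligned = match_start_and_direction(redrawn, reference)
print(aligned)
```

When two polygons share a starting corner and a direction, their vertices can be paired up one by one, which is what any point-wise comparison between them relies on.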

Creating polygons is time-consuming. To increase productivity, CVAT annotation tool uses the following shortcuts:

- Press `shift` while drawing the point to start a continuous stream of points.

- When drawing, `right-click` to remove the previous point.

- To adjust the individual points, simply click and drag the point.

- To delete points, press `alt`, and `left-click` the points to delete.

- After the annotation is created, use `shift` to add or remove portions of the annotation.

- To help with multiple polygons in a frame, use automatic bordering using the `ctrl` button.

As an alternative to the polygon tool, you can also use the brush tool. The brush tool makes hollow masks or other annotations.

Keypoint Annotations using CVAT Annotation Tool

Tasks like facial keypoint detection or body pose estimation make use of keypoint annotations. Keypoints in CVAT can be created in two ways: with the keypoint tool or with the skeleton tool.

The keypoint tool is as straightforward as the other shape tools. Select the tool and the label, enter the number of points, and click on the shape button to start annotating.

The skeleton tool, however, is a template annotation. It is created along with the labels. A skeleton consists of points and edges: the points can be connected using edges, and each point can be personalized. The points created by the keypoint tool cannot be individually personalized.

When drawing the skeleton, the template is enclosed in a bounding box. You can draw, rotate, and resize the bounding box just as with a two-point rectangle annotation. The individual elements can also be adjusted.

Skeletons are preferred for repetitive tasks. Dlib’s facial landmark detector, for example, uses 68 points. Annotating 68 points one by one for every face quickly becomes tedious. Using a skeleton makes the job easier and faster.
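The idea of a template enclosed in a bounding box can be sketched in a few lines of Python. The four-point face template below is hypothetical and far smaller than a 68-point layout. Point positions are stored as fractions of the box width and height, so drawing the box places every point at once.

```python
from typing import Dict, Tuple

# Hypothetical template: each point is stored as (u, v) fractions of the box size.
TEMPLATE_POINTS: Dict[str, Tuple[float, float]] = {
    "left_eye": (0.25, 0.375),
    "right_eye": (0.75, 0.375),
    "nose": (0.5, 0.5),
    "mouth": (0.5, 0.75),
}

# Edges connect named points; they are only used for display in this sketch.
TEMPLATE_EDGES = [("left_eye", "nose"), ("right_eye", "nose"), ("nose", "mouth")]


def place_skeleton(box, template=TEMPLATE_POINTS):
    """Scale the normalised template into an (xmin, ymin, xmax, ymax) box."""
    xmin, ymin, xmax, ymax = box
    width, height = xmax - xmin, ymax - ymin
    return {
        name: (xmin + u * width, ymin + v * height)
        for name, (u, v) in template.items()
    }


face = place_skeleton((100, 50, 300, 250))
for name, point in face.items():
    print(name, point)
```

After placement, only the points that do not fit the face need to be dragged, instead of clicking every landmark from scratch.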

Simplify Video Annotation Using CVAT Annotation Tool

For image annotations we clicked on the shape button. For video annotations, we click on the track button instead.

Creating the shape is the same as before, but the object now has much more functionality. Check out the object card for extra buttons. These buttons include the outside property, the occluded property, and the toggle keyframe property button.

Keyframes are used to animate the position and shape of the annotation. The animation is called interpolation. Skip a few frames till the motion of the object changes. Now modify the bounding box in this frame. CVAT will interpolate the bounding box between the two keyframes.
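As a rough mental model of what happens between two keyframes, the sketch below interpolates a bounding box linearly. Linear interpolation is an assumption made here for illustration, and the function name is hypothetical; CVAT's own implementation may differ.

```python
def interpolate_box(frame, key_a, key_b):
    """Linearly interpolate a box between two keyframes.

    Each keyframe is a (frame_number, (xmin, ymin, xmax, ymax)) pair.
    """
    frame_a, box_a = key_a
    frame_b, box_b = key_b
    if frame_a >= frame_b:
        raise ValueError("key_a must come before key_b")
    if not frame_a <= frame <= frame_b:
        raise ValueError("frame must lie between the two keyframes")
    t = (frame - frame_a) / (frame_b - frame_a)
    return tuple(a + t * (b - a) for a, b in zip(box_a, box_b))


first_keyframe = (0, (10, 10, 50, 50))
second_keyframe = (10, (30, 20, 70, 60))
halfway_box = interpolate_box(5, first_keyframe, second_keyframe)
print(halfway_box)
```

This also shows why a new keyframe is needed whenever the motion changes: a straight-line estimate is only accurate while the object keeps moving the same way.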

When the object is occluded, switch on the occluded property button. The object border is converted to a dotted line. The shortcut for it is Q.

When the object gets too small to annotate, exits the screen, or you simply want to finish the annotation, switch on the outside property. The shortcut for it is O.

There are special rules for polygon and keypoint annotations.

- For polygon annotations, if you want to redraw the object, take note of the starting point and the direction of the annotation. However, it is not necessary to redraw the polygon every time.

- Keypoint interpolation only works for a single point. So when tracking keypoints, make sure to input the number of points as 1. The objects can then be grouped together with a `group_id` attribute.

If an object gets completely occluded but reappears in the video, you can merge multiple tracks into a single track using the merge shape/track button. The tracks can be split by using the split button.

Besides interpolation, objects can also be propagated to the following frames. Propagating an object creates copies of the annotation on the subsequent frames. Note the object IDs: each propagated copy gets its own object ID, whereas a tracked object keeps the same ID across frames.

OpenCV Tools and AI Assistance in CVAT

CVAT employs OpenCV tools and AI assistance to ease the annotation process.

The available OpenCV tools are intelligent scissors, histogram equalization, and the MIL tracker. Click the OpenCV icon in the controls sidebar and wait for the library to load.

Under drawing, you’ll find intelligent scissors. These are used to create polygon annotations. Click on the starting point and traverse the edge of the object. OpenCV will create the edges automatically. Make sure each new point stays within the restrictive threshold bounding box around the previous point.

When the contrast is off in the images, use OpenCV’s histogram equalization to grasp the object better.
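For readers curious about what histogram equalization does to the pixel values, here is a minimal pure-Python sketch of the textbook global method for 8-bit grayscale images. It is written for clarity rather than speed, and the function name is hypothetical. In OpenCV's Python bindings the corresponding call is `cv2.equalizeHist`.

```python
def equalize_histogram(image):
    """Equalize an 8-bit grayscale image given as a list of rows of ints (0..255)."""
    pixels = [value for row in image for value in row]
    if not pixels:
        return []

    # Histogram of intensities, then its cumulative sum (the CDF).
    hist = [0] * 256
    for value in pixels:
        hist[value] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    cdf_min = next(c for c in cdf if c > 0)
    denom = len(pixels) - cdf_min
    if denom == 0:
        # Every pixel has the same intensity, so there is nothing to stretch.
        return [list(row) for row in image]

    # Look-up table that spreads the used intensities over the full 0..255 range.
    # Integer arithmetic with rounding avoids floating-point surprises.
    lut = [max(0, ((c - cdf_min) * 255 + denom // 2) // denom) for c in cdf]
    return [[lut[value] for value in row] for row in image]


low_contrast = [[100, 101], [102, 103]]
equalized = equalize_histogram(low_contrast)
print(equalized)
```

Four nearly identical gray levels are spread across the whole intensity range, which is why faint object boundaries become easier to see.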

Objects can be tracked in a video using OpenCV’s MIL tracker. Create a bounding box around the object, then navigate to the next frame. OpenCV will track the object in this frame. Keep moving to the next frame for as long as the object needs to be tracked.

Although the OpenCV tools save us effort, they are prone to failure. Disadvantages of the OpenCV tools:

- Intelligent scissors fail when the background and foreground colors are similar.

- Histogram equalization is often unnecessary; changing the color settings globally can be used instead.

- The MIL tracker does not resize the bounding box. Moreover, if another object crosses over the object being tracked, MIL will track the new object.

AI models on CVAT will help you create polygon masks, detect the objects in the frame, and track the objects in a video sequence. AI tools can be accessed using the wand tool. Click on the question mark to learn more about the model.

Most interactors (polygon masks) require positive and negative points to create the annotation.

Many state-of-the-art detection models like YOLOv5 are available for object detection under the detectors tab. The model labels (labels from YOLOv5) need to match the task labels (labels in CVAT).
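The label-matching requirement can be checked before running a detector. The sketch below pairs model labels with task labels by name and reports the ones that have no counterpart. Matching names case-insensitively is an assumption made for this example, and the function name is hypothetical.

```python
def map_model_labels(model_labels, task_labels):
    """Pair model labels with task labels by name.

    Returns (mapping, unmatched): `mapping` goes from model label to task label,
    `unmatched` lists model labels with no task label of the same name.
    Assumption: names are compared case-insensitively, ignoring outer spaces.
    """
    task_lookup = {name.strip().lower(): name for name in task_labels}
    mapping, unmatched = {}, []
    for label in model_labels:
        key = label.strip().lower()
        if key in task_lookup:
            mapping[label] = task_lookup[key]
        else:
            unmatched.append(label)
    return mapping, unmatched


mapping, unmatched = map_model_labels(
    model_labels=["person", "car", "dog"],
    task_labels=["Person", "Car", "Bicycle"],
)
print(mapping)
print(unmatched)
```

Detections for unmatched labels have nowhere to go in the task, so it is worth creating the missing labels first or accepting that those classes will be skipped.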

Drawbacks of AI tools:

- Failed server responses

- Unavailability of AI models

- Inability to auto annotate the task in its entirety

You can also create your very own automated image annotation tool using OpenCV and Python, and understand the logic behind pyOpenAnnotate.

Local and AWS Deployment

CVAT can be used online through the CVAT.ai web app. It can also be deployed locally or on AWS. For both local and AWS deployment, we will be using Docker.

Deploy CVAT Annotation Tool locally

To deploy CVAT locally, follow these instructions:

- Clone the CVAT GitHub repository using `git clone https://github.com/opencv/cvat`.

- Then change directory to CVAT: `cd cvat`.

- Now start all the CVAT containers with Docker Compose using `docker compose up -d`. This will activate all the services of CVAT like the UI, database, etc. Once all the services are up, CVAT is running.

- For the first launch, set up the superuser. Run the command `docker exec -it cvat_server bash`.

- Once you are inside the `cvat_server` container, run the Python file with `python3 ~/manage.py createsuperuser`.

- Finally, go to `localhost:8080` in Google Chrome to access CVAT. Log in using the superuser credentials.

Deploy CVAT Annotation Tool on AWS Server

To deploy CVAT on AWS, follow these steps. Most of the instructions remain the same:

- Clone the CVAT GitHub repository using `git clone https://github.com/opencv/cvat`.

- Then change directory to CVAT: `cd cvat`.

- Export the `CVAT_HOST` variable, setting it to the public IPv4 address of the AWS server (for example, `export CVAT_HOST=<public-ipv4>`).

- Now start all the CVAT containers with Docker Compose using `docker compose up -d`. This will activate all the services of CVAT like the UI, database, etc. Once all the services are up, CVAT is running.

- For the first launch, set up the superuser. Run the command `docker exec -it cvat_server bash`.

- Once you are inside the `cvat_server` container, run the Python file with `python3 ~/manage.py createsuperuser`.

- Setting the `CVAT_HOST` variable lets us connect to CVAT from any computer with internet access.

- In the AWS dashboard, go to security groups. Select the security group of the CVAT AWS server and, under actions, edit the inbound rules. Add a custom TCP rule that exposes port 8080 either to any IPv4 address or only to your own IP.

- Finally, go to `public-ipv4:8080` in Google Chrome to access CVAT. Log in using the superuser credentials.

Deploying SAM with GPU Support

To deploy models using a GPU, follow these steps:

- Make sure you have Docker and the NVIDIA Container Toolkit installed on your system. Follow the instructions given on their websites.

- Clone the CVAT repository: `git clone https://github.com/opencv/cvat`.

- Then change directory to CVAT: `cd cvat`.

- Now start all the CVAT containers using `docker compose -f docker-compose.yml -f components/serverless/docker-compose-serverless.yml up -d`. This will activate the regular services of CVAT as well as Nuclio. Once all the services are up, CVAT is running.

- For the first launch, set up the superuser. Run the command `docker exec -it cvat_server bash`.

- Once you are inside the `cvat_server` container, run the Python file with `python3 ~/manage.py createsuperuser` and follow the on-screen instructions.

- Check the Nuclio version in the file `components/serverless/docker-compose-serverless.yml` and download the nuctl command line tool of the same version. Edit the following command accordingly: `wget https://github.com/nuclio/nuclio/releases/download/<version>/nuctl-<version>-linux-amd64`

- After downloading nuctl, make it executable and create a symbolic link: `sudo chmod +x nuctl-<version>-linux-amd64`, then `sudo ln -sf $(pwd)/nuctl-<version>-linux-amd64 /usr/local/bin/nuctl`

- Finally, deploy YOLOv7 on CPU using `./serverless/deploy_cpu.sh ./serverless/onnx/WongKinYiu/yolov7/nuclio`

- And deploy SAM on GPU using `./serverless/deploy_gpu.sh ./serverless/pytorch/facebookresearch/sam/nuclio`

- To check the hosted models, run `nuctl get functions`.

- The Nuclio dashboard is hosted on `localhost:8070`.
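Filling in the `<version>` placeholder by hand is easy to get wrong, since it appears twice in the download URL. The small Python helper below builds the URL from the pattern given in the steps above. The function name is hypothetical, and the version string in the example is only a placeholder; use the one found in `components/serverless/docker-compose-serverless.yml`.

```python
def nuctl_download_url(version: str, platform: str = "linux-amd64") -> str:
    """Build the nuctl release URL following the pattern used in the steps above."""
    version = version.strip()
    if not version or "<" in version or ">" in version:
        raise ValueError("pass the real Nuclio version, not the <version> placeholder")
    return (
        "https://github.com/nuclio/nuclio/releases/download/"
        f"{version}/nuctl-{version}-{platform}"
    )


# Example value only: replace it with the version from your compose file.
example_url = nuctl_download_url("1.8.14")
print(example_url)
```

The printed URL can be passed straight to `wget`, and the downloaded file name is the last part of the URL.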

Conclusion

CVAT is an ever-evolving tool hosted under OpenCV’s banner. It is open source and free, even for commercial use.

In this post we learned about CVAT and its capabilities. We saw the UI and hierarchy of CVAT, and learned how to annotate a few shapes for image and for video. We used the OpenCV tools and AI assistance in our workflow, and deployed CVAT annotation tool locally and on an AWS server.

References

- https://www.cvat.ai/

- https://github.com/opencv/cvat

- https://opencv.github.io/cvat/docs/manual/

- https://youtube.com/playlist?list=PLfYPZalDvZDLvFhjuflhrxk_lLplXUqqB
