Last week, Qualcomm made waves in the maker community by acquiring Arduino. At the “From Blink to Think” event, the all-new Arduino Uno Q was unveiled: a board that promises Linux-powered ML on Arduino while retaining microcontroller-level control. I am genuinely excited to get my hands on it once it ships. But here’s the thing: Arduino already had several AI-capable “dual-brain” boards, some released nearly a decade ago. So why is the Uno Q creating such a buzz? To explore that, let’s deploy an ML model on an older Arduino board to see what it is truly capable of, and then relate that capability to the specs.

What’s covered in the post?

- Understand the Arduino Uno Q and what to expect from it

- Train a classification model from scratch using TensorFlow

- Deploy a TFLite model on the Arduino Nano 33 BLE and build a Gradio interface

- A Brief History of Tiny ML on Arduino

- Arduino Uno Q SBC: What's New?

- Why Arduino Nano 33 BLE Now After Five Years?

- Installation of Necessary Tools for Deploying ML on Arduino

- Training Classification Model on MNIST Digits Dataset

- Sketch for Deploying ML on Arduino

- Gradio App to Manage Inputs for Deploying ML on Arduino

- Deploying ML Models on Arduino Conclusion

A Brief History of Tiny ML on Arduino

ML on Arduino is not new, and the Uno Q is not the first AI-capable board Arduino has developed. Several earlier boards could already handle AI or TinyML workloads, laying the foundation for edge AI on Arduino long before hybrid single-board computers (SBCs) like the Uno Q emerged. Here are a few boards on which TinyML models could be deployed.

| Board | MCU (SRAM, Flash) | MPU (RAM, Flash) |
|---|---|---|
| Arduino Yun (2013) | 2.5 kB, 32 kB | 64 MB, 16 MB |
| Arduino Tian (2016) | 32 kB, 256 kB | 64 MB, 16 MB + 4 GB eMMC |
| Arduino Nano 33 BLE (2019) | 256 kB, 1 MB | None |
| Portenta H7 (2019) | 1 MB, 2 MB | 8 MB, 16 MB |
| Portenta X8 (2022) | 1 MB, 2 MB | 2 GB, 16 GB eMMC |

Beyond these, there are many more MCUs developed for audio and vision applications. Even today, the Portenta X8’s MCU is considerably more powerful than the one in the Arduino Uno Q. So why does the Uno Q matter now?

Arduino Uno Q SBC: What’s New?

The Arduino Uno Q marks a major evolution in the Arduino universe, not just because of its specs but because Arduino is now part of Qualcomm’s edge AI portfolio. Let’s look at what Qualcomm has been doing recently.

2.1 Qualcomm’s Take On Full-stack Edge AI with ML on Arduino

On March 10, 2025, Qualcomm acquired Edge Impulse, a move to integrate an edge AI development platform into its stack. In April, it acquired Movian AI to strengthen its R&D capability, and in June, Alphawave Semi, expanding its backend, networking, and data center reach. Arduino now adds edge AI hardware and a huge community to that stack. It’s a strategic move for Qualcomm to grab a share of the massive edge AI market.

Why Could ML on Arduino Improve Now?

Earlier AI-ready boards (like the Portenta X8 or Nano 33 BLE) were great but stayed within niche developer circles. The Uno Q, by contrast, comes with the following advantages.

- Full stack ecosystem from Qualcomm

- Standardized developer workflow – with App Lab, Arduino AI Studio, and more

2.2 Technical Specs of Arduino Uno Q

Below are the processor and MCU specifications of the Arduino Uno Q. I was hoping for an 8 GB memory variant, since a mainstream OS is hard to use in 2 GB of RAM. There is also no external SD card support 👎. As with the Portenta X8, it will support only lightweight Linux distributions. On the plus side, it has an on-chip Adreno GPU and optimized neural capabilities through Qualcomm’s SDKs, giving it a solid edge in AI + control integration.

| | Microprocessor Unit (MPU) | Microcontroller Unit (MCU) |
|---|---|---|
| Processor | Dragonwing QRB2210 (Cortex-A53) | STM32U585 (Cortex-M33) |
| Acceleration | Adreno GPU (3D graphics accelerator) | Single-precision (FP32) floating point unit |
| Memory | 2 GB LPDDR4 RAM | 786 kB SRAM |
| Storage | 16 GB eMMC, built-in flash | 2 MB flash |

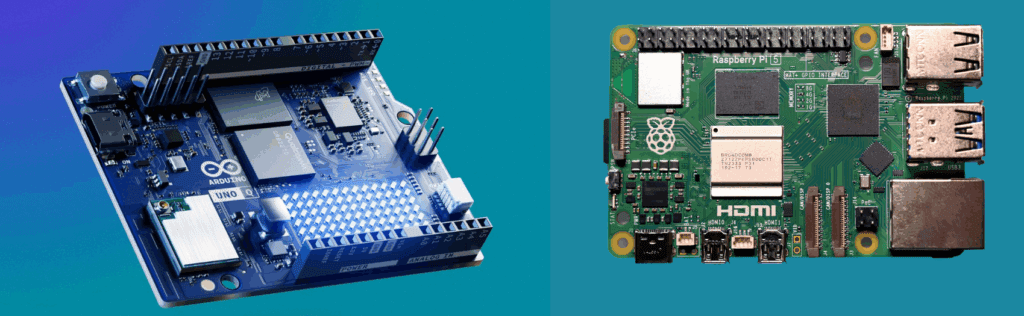

2.4 Is Arduino Uno Q a Raspberry Pi Killer?

Yes, ML on Arduino is evolving. However, Arduino’s philosophy centers on low energy consumption, efficiency, and speed, and on paper the Uno Q is nowhere close to the Raspberry Pi 5. It is not designed to replace the Raspberry Pi, but rather to bridge the gap between full-fledged SBCs and MCUs. How it evolves from here remains to be seen.

The Uno Q’s MCU gives it deterministic timing for tasks like motor control, sensor fusion, and robotics, which a Raspberry Pi alone cannot deliver with the same precision. Curious how capable the Pi is on its own? Check out my previous article on Raspberry Pi: VLM on Edge.

Why Arduino Nano 33 BLE Now After Five Years?

I got this board from the US during the COVID-19 pandemic; Arduino has since marked it End of Life. I bought it mainly as a collectible, hoping to do something with it someday. Back then, I had decent embedded systems experience from hobby projects but little to no knowledge of machine learning, let alone deploying ML on Arduino, so I never built anything with it. Eventually it was forgotten and stayed hidden away 😅, until recently, when I was rearranging my collection and heard the news of Qualcomm acquiring Arduino and releasing the Uno Q SBC.

In the meantime, my research shifted to classical computer vision and then to deep learning. It was definitely not an easy journey. Fortunately, I was introduced to OpenCV Courses early on; they have very well-structured modules on deep learning with TensorFlow and PyTorch. Check out the OpenCV courses below; they were worth my time.

Installation of Necessary Tools for Deploying ML on Arduino

We need to install the Arduino IDE, TensorFlow, and some helper packages for the BLE board. Install the Arduino IDE from the official website here. Once done, get the Arduino TFLite support package included with the download code; it is available under root > libs > Arduino_TensorFlowLite.zip.

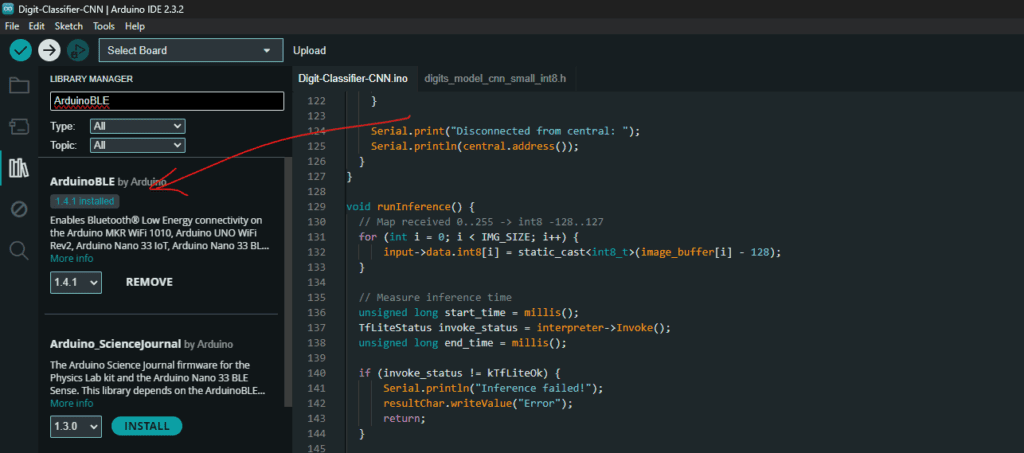

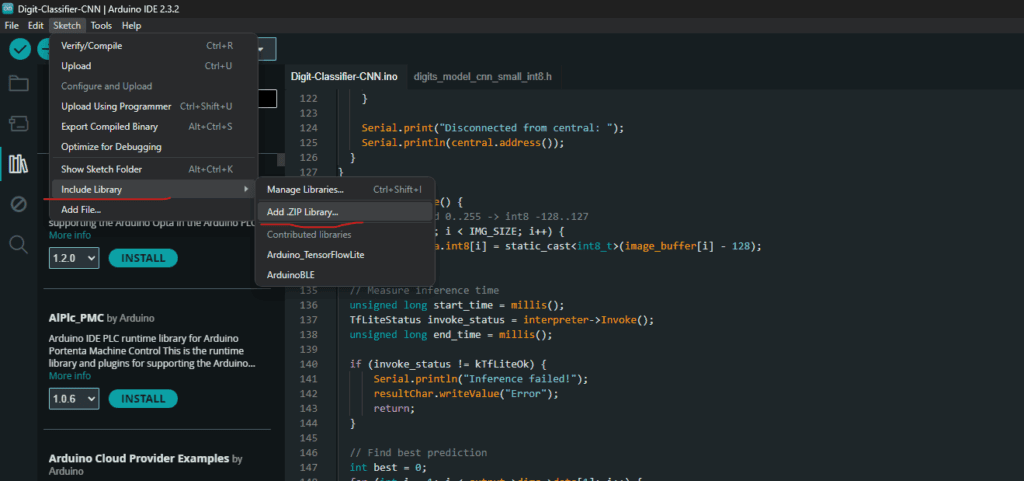

Step 1: Open the Arduino IDE and go to Sketch > Include Library > Manage Libraries in the menu bar. Search for ArduinoBLE and install the package.

Step 2: Go to Sketch > Include Library again, but this time choose Add .ZIP Library. When prompted, navigate to the downloaded code folder and select Arduino_TensorFlowLite.zip. This installs the TensorFlow Lite library needed for the ML on Arduino workflow.

Step 3: We need the xxd tool to convert TFLite models into Arduino-compatible header files. On macOS, it comes pre-installed with the Vim editor. If it is missing, install Vim using the link.

On Windows, xxd is not pre-installed. Install Vim from the same link provided above, then add the Vim installation directory to the PATH environment variable.

On Ubuntu, install it with sudo apt install xxd. Then verify the installation by running the following command in a terminal or command prompt.

```bash
xxd --version
```

Step 4: Finally, install TensorFlow in a Python or conda environment, followed by Gradio and the other helper packages used later in this post: pip install gradio bleak pillow scikit-learn matplotlib. That’s all we need for now.
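To confirm the Python side is ready before moving on, you can check that each module can be imported. This is a minimal sketch: the module list is an assumption based on the imports used later in this post, and the check only confirms that each module can be found, not which version is installed.

```python
import importlib.util

# Module (import) names used later in this post; pip package names can differ,
# e.g. "PIL" comes from pillow and "sklearn" from scikit-learn.
REQUIRED_MODULES = ["tensorflow", "gradio", "bleak", "PIL", "sklearn", "matplotlib", "numpy"]


def missing_modules(modules):
    """Return the module names that cannot be found by this interpreter."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules found.")
```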

Training Classification Model on MNIST Digits Dataset

To demonstrate TinyML in action, let’s train a simple digit classification model on the MNIST dataset, which contains 70,000 grayscale images of handwritten digits (60,000 for training and 10,000 for testing). We’ll build and train a lightweight convolutional neural network (CNN) from scratch that learns to identify digits from the spatial and intensity patterns in 28×28 pixel images.

5.1 Import Dependencies

```python
import os, random
import numpy as np
import tensorflow as tf
from tensorflow.keras.preprocessing.image import ImageDataGenerator
from sklearn.model_selection import train_test_split
from PIL import Image, ImageFilter
import matplotlib.pyplot as plt
```

5.2 Load and Pre-Process MNIST Classification Dataset

We begin by loading and preprocessing the MNIST dataset, which contains grayscale images of handwritten digits from 0 to 9. Each image is normalized to the range [0, 1] and reshaped to include a single channel (28×28×1). We also convert the labels into one-hot encoded vectors for multi-class classification.

```python
# Load and preprocess dataset
(X_train, y_train), (X_test, y_test) = tf.keras.datasets.mnist.load_data()
X_train = X_train.astype(np.float32) / 255.0
X_test = X_test.astype(np.float32) / 255.0

# Expand dims → (N, 28, 28, 1)
X_train = np.expand_dims(X_train, -1)
X_test = np.expand_dims(X_test, -1)

# One-hot encode labels
y_train_onehot = tf.keras.utils.to_categorical(y_train, 10)
y_test_onehot = tf.keras.utils.to_categorical(y_test, 10)
```

5.3 Add Data Augmentation

The notebook includes more advanced augmentations as well; I am not explaining them here to keep the post to a reasonable length. If anything is unclear, feel free to ask in the comments below. We also have a very detailed blog post on Implementing a CNN using TensorFlow and Keras; check it out for more details.

```python
# Base geometric augmentations
base_datagen = ImageDataGenerator(
    rotation_range=15,
    width_shift_range=0.1,
    height_shift_range=0.1,
    zoom_range=0.1,
    fill_mode="nearest"
)
base_datagen.fit(X_train)
```

5.4 Create and Compile A Compact CNN Model

Our CNN consists of two convolutional layers with ReLU activation that progressively learn spatial features, each followed by a max pooling layer that reduces the spatial dimensions and keeps the dominant features. The output is then flattened and passed through a fully connected dense layer with 64 neurons for feature integration, followed by a softmax output layer that classifies the image into one of ten categories.

Model Summary:

- Input: 28×28 grayscale image

- Parameters: about 56,700 trainable weights (56,714)

- Loss: Categorical Crossentropy

- Optimizer: Adam

- Metric: Accuracy

```python
# Compact CNN
model = tf.keras.Sequential([
    tf.keras.layers.Conv2D(16, (3,3), activation='relu', input_shape=(28,28,1)),
    tf.keras.layers.MaxPooling2D((2,2)),
    tf.keras.layers.Conv2D(32, (3,3), activation='relu'),
    tf.keras.layers.MaxPooling2D((2,2)),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(64, activation='relu'),
    tf.keras.layers.Dense(10, activation='softmax')
])

model.compile(optimizer='adam',
              loss='categorical_crossentropy',
              metrics=['accuracy'])

model.summary()
```
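The parameter count can be verified by hand. Below is a minimal plain-Python sketch that walks the layers above, assuming 'valid' padding (the Conv2D default) and 2×2 max pooling with stride 2 (the MaxPooling2D default when only pool_size is given); the helper names are illustrative, not part of Keras.

```python
def conv2d_params(kernel, in_ch, out_ch):
    # k*k*in*out weights plus one bias per output channel
    return kernel * kernel * in_ch * out_ch + out_ch


def dense_params(in_features, out_features):
    # weight matrix plus one bias per output unit
    return in_features * out_features + out_features


def compact_cnn_params(size=28, in_ch=1):
    total = conv2d_params(3, in_ch, 16)   # Conv2D(16, 3x3)
    size = (size - 2) // 2                # 'valid' conv: 28 -> 26, pool: 26 -> 13
    total += conv2d_params(3, 16, 32)     # Conv2D(32, 3x3)
    size = (size - 2) // 2                # 13 -> 11, pool: 11 -> 5
    flat = size * size * 32               # Flatten: 5*5*32 = 800
    total += dense_params(flat, 64)       # Dense(64)
    total += dense_params(64, 10)         # Dense(10)
    return total


print(compact_cnn_params())  # 56714
```

Most of the weights (51,264 of 56,714) sit in the Dense(64) layer right after Flatten, so that layer is the first place to look if the model needs to shrink further.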

5.5 Train and Evaluate the Model

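```python
# Train
batch_size = 64
epochs = 20

# augmented_generator() from the notebook (not shown in this post) adds more
# augmentations; base_datagen.flow() is a simpler stand-in that applies only
# the geometric augmentations defined above.
train_flow = base_datagen.flow(X_train, y_train_onehot, batch_size=batch_size)

history = model.fit(
    train_flow,
    validation_data=(X_test, y_test_onehot),
    steps_per_epoch=len(X_train) // batch_size,
    epochs=epochs,
    verbose=1
)

# Evaluate
loss, acc = model.evaluate(X_test, y_test_onehot, verbose=0)
print(f"Test Accuracy: {acc:.4f}")
```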

5.6 Quantization of the Classification Model

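The Nano 33 BLE’s nRF52840 (a 64 MHz Cortex-M4F) does have a single-precision FPU, but float32 weights and activations take four times the memory of int8 and run more slowly on such a small MCU. Hence, we convert the model to an INT8 quantized version.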

We use a representative dataset drawn from the training data to calibrate the value ranges of the activations during conversion (weights are quantized from their own ranges). This ensures accurate scaling from float32 to int8. The final quantized model is then saved as a .tflite file.

```python
# Quantization (INT8)
def representative_dataset():
    for i in range(1000):
        img = X_train[i:i+1].astype(np.float32)
        yield [img]

# Initialise converter
converter = tf.lite.TFLiteConverter.from_keras_model(model)

# Enable quantization
converter.optimizations = [tf.lite.Optimize.DEFAULT]

# Assign calibration data
converter.representative_dataset = representative_dataset

# Force INT8 quantization
converter.target_spec.supported_ops = [tf.lite.OpsSet.TFLITE_BUILTINS_INT8]
converter.inference_input_type = tf.int8
converter.inference_output_type = tf.int8

# Convert and Save Model
tflite_model = converter.convert()

# Save TFLite model
with open("digits_model_cnn_small_int8.tflite", "wb") as f:
    f.write(tflite_model)

print("INT8 TFLite compact model saved.")
print("Model size:", len(tflite_model)/1024, "KB")
```
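The representative dataset is what lets the converter choose a scale and zero point for each activation tensor. The sketch below illustrates the underlying affine mapping, assuming simple asymmetric min/max calibration to the int8 range [-128, 127]. TensorFlow Lite’s converter has its own calibration logic (and quantizes weights symmetrically per channel), so treat this as an illustration of the arithmetic, not a reimplementation of the converter.

```python
def int8_affine_params(min_val, max_val, qmin=-128, qmax=127):
    """Asymmetric int8 scale and zero point for a calibrated float range."""
    # The range must contain 0.0 so that zero is represented exactly.
    min_val = min(min_val, 0.0)
    max_val = max(max_val, 0.0)
    if max_val == min_val:
        raise ValueError("calibration range is empty")
    scale = (max_val - min_val) / (qmax - qmin)
    zero_point = int(round(qmin - min_val / scale))
    return scale, zero_point


def quantize(x, scale, zero_point, qmin=-128, qmax=127):
    """Float -> int8 code, clamped to the representable range."""
    q = int(round(x / scale)) + zero_point
    return max(qmin, min(qmax, q))


def dequantize(q, scale, zero_point):
    """int8 code -> approximate float value."""
    return (q - zero_point) * scale


# Calibration over pixels normalized to [0, 1], as in representative_dataset()
scale, zero_point = int8_affine_params(0.0, 1.0)
print(scale, zero_point)  # scale is 1/255, zero point is -128
print(quantize(0.0, scale, zero_point), quantize(1.0, scale, zero_point))  # -128 127
```

For inputs normalized to [0, 1], this gives a scale of 1/255 and a zero point of -128, so an 8-bit pixel p (float value p/255) lands on p - 128. That is why the Arduino sketch can feed raw bytes to the model by simply subtracting 128, provided the converted model’s input tensor really uses these parameters.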

5.7 Convert To Compatible Header File for ML on Arduino

Now we convert the quantized TFLite model into an Arduino-compatible header file. Run the following cell from the directory that contains the .tflite file.

```
!xxd -i digits_model_cnn_small_int8.tflite > digits_model_cnn_small_int8.h
```

Note: Sometimes the header file gets saved in UTF-16 format, which the Arduino compiler does not support. I observed this on Windows; if it happens, convert the file to UTF-8 using a Windows text editor. Check out the video below for the steps.
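Alternatively, the header can be generated directly from Python, which removes the dependency on xxd and writes the file with an explicit ASCII encoding. The write_c_header function below is a hypothetical helper (not part of TensorFlow or the downloaded code). It mimics the naming of xxd -i output, so the array is still called digits_model_cnn_small_int8_tflite as the sketch expects, but it declares the array const and 16-byte aligned. On ARM GCC toolchains a const global array stays in flash instead of being copied into SRAM, and 16-byte alignment is common practice for TFLite Micro model arrays.

```python
from pathlib import Path


def write_c_header(tflite_path, header_path, var_name, per_row=12):
    """Write a .tflite file as a const, aligned C array (similar to `xxd -i`)."""
    data = Path(tflite_path).read_bytes()
    lines = [
        "// Generated from " + Path(tflite_path).name,
        "alignas(16) const unsigned char %s[] = {" % var_name,
    ]
    # Hex bytes, `per_row` per line, formatted like xxd: 0x1c, 0x00, ...
    for i in range(0, len(data), per_row):
        row = ", ".join("0x%02x" % b for b in data[i:i + per_row])
        lines.append("  " + row + ",")
    lines.append("};")
    lines.append("const unsigned int %s_len = %d;" % (var_name, len(data)))
    # Explicit ASCII encoding, independent of any shell redirection.
    Path(header_path).write_text("\n".join(lines) + "\n", encoding="ascii")
    return len(data)


# Usage (after the .tflite file has been saved):
# write_c_header("digits_model_cnn_small_int8.tflite",
#                "digits_model_cnn_small_int8.h",
#                "digits_model_cnn_small_int8_tflite")
```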

Sketch for Deploying ML on Arduino

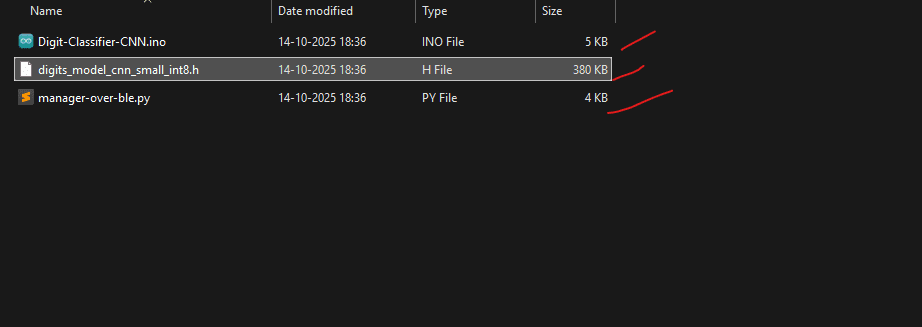

Once the header file is ready in the correct format, create a new sketch in the Arduino IDE and save it under any name. The IDE stores each sketch in a folder with the same name as its .ino file (for example, Digit-Classifier-CNN/Digit-Classifier-CNN.ino). Move the header file digits_model_cnn_small_int8.h into this folder; in the downloaded code folder, it is already present. At this point, you can connect the Arduino Nano 33 BLE and upload the code for a test run. It should compile and upload successfully.

In principle, after running the manager_over_ble.py script, you should be able to send images over Bluetooth and get predictions. However, it won’t work out of the box, because the script targets my board’s Bluetooth MAC address, and yours will be different.

6.1 Retrieve Bluetooth device MAC Address

Let’s create a new Arduino sketch, as shown below, that simply prints the address of your Arduino BLE device. Make sure the baud rate in the code matches the Serial Monitor setting; otherwise you will only see garbled characters. If you see nothing at all, check that you have selected the correct USB port, and reset the board once with its physical button (a single press while connected over USB).

```cpp
#include <ArduinoBLE.h>

void setup() {
  Serial.begin(115200);
  while (!Serial);

  if (!BLE.begin()) {
    Serial.println("Starting BLE failed!");
    while (1);
  }

  // Retrieve and print the local BLE MAC address
  String mac = BLE.address();
  Serial.print("BLE MAC Address: ");
  Serial.println(mac);
}

void loop() {
}
```

Next, re-upload the Digit-Classifier-CNN.ino sketch to the board and update the manager_over_ble.py script with your board’s address. You should now be able to upload images and get predictions. Let’s take a look at the Arduino sketch.

6.2 Include Libraries and Dependencies to Run ML on Arduino

```cpp
#include <ArduinoBLE.h>
#include <TensorFlowLite.h>
#include "digits_model_cnn_small_int8.h"
#include "tensorflow/lite/micro/all_ops_resolver.h"
#include "tensorflow/lite/micro/micro_interpreter.h"
#include "tensorflow/lite/micro/micro_error_reporter.h"
#include "tensorflow/lite/schema/schema_generated.h"
#include "tensorflow/lite/version.h"
#include <Arduino.h>
```

6.3 Define Global Variables To Prepare Arduino Nano 33 BLE

In the following snippet, we define the memory and model structures for on-device TFLite inference, set up BLE (Bluetooth Low Energy), and add a debug logger for serial output.

Communication Setup

Since we use Bluetooth for communication, we have to set up a few variables. The board cannot receive the whole image at once: in this setup it accepts at most 128 bytes per write, so the image has to be sent in chunks.

- 28×28 grayscale image, so 784 bytes allocated for incoming pixel data

- received_bytes tracks how many bytes have been received

- image_ready is a flag that becomes true when a full image has been received
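The chunk arithmetic is easy to check: 784 bytes split into 128-byte writes gives six full chunks plus a final 16-byte chunk, seven writes in total. A minimal sketch of the sender-side split, mirroring the CHUNK = 128 slicing used in the Python script:

```python
IMG_SIZE = 28 * 28  # 784 bytes, one byte per grayscale pixel
CHUNK = 128         # bytes per BLE write, as in the Python script


def split_into_chunks(payload, chunk=CHUNK):
    """Slice a byte string into consecutive pieces of at most `chunk` bytes."""
    return [payload[i:i + chunk] for i in range(0, len(payload), chunk)]


chunks = split_into_chunks(bytes(IMG_SIZE))  # placeholder all-black image
print(len(chunks))                           # 7
print([len(c) for c in chunks])              # [128, 128, 128, 128, 128, 128, 16]
```

Because 784 is not a multiple of 128, the last write is short, so the receiver has to count bytes rather than writes, which is exactly what received_bytes does.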

6.4 TFLite Micro Setup

Next is the TensorFlow Lite Micro setup. TensorFlow Lite Micro is the library that enables TinyML on microcontrollers; you can check out its GitHub repository for the impressive work done so far. However, the Arduino TFLite library was removed from the Arduino library list some time back (it was effectively a duplicate), so you might see errors if you use the package directly from GitHub. No worries: I have included a ZIP file in the download code. Let’s see what the TFLite Micro globals are.

- tflErrorReporter handles error and debug messages

- resolver registers all available TFLite operators (Conv2D, Dense, etc.)

- model will point to the loaded .tflite model in flash memory

- interpreter runs inference using the model

- input and output are pointers to the model’s input and output tensors

- tensorArena is a memory buffer (50 KB) where all intermediate tensors and activations are stored during inference

One area that needs care is the tensorArena size. This block is reserved specifically for inference and is allocated statically; no dynamic memory allocation happens during inference. With a 50 KB arena, about 206 kB of the 256 kB SRAM remains for the program stack, BLE buffers, global variables, and other tasks. Make the arena too big and there is not enough RAM left for everything else; make it too small and AllocateTensors() fails when the model is loaded. If you are fitting a different model, you may need to experiment a little with this value; the arena usage printed at the end of setup() is a good starting point.

```cpp
#define IMG_SIZE (28 * 28) // 784 bytes; parentheses keep the macro safe in expressions
uint8_t image_buffer[IMG_SIZE];
int received_bytes = 0;
bool image_ready = false;

// TensorFlow Lite globals
tflite::MicroErrorReporter tflErrorReporter;
tflite::AllOpsResolver resolver;
const tflite::Model* model;
tflite::MicroInterpreter* interpreter;
TfLiteTensor* input;
TfLiteTensor* output;
constexpr int tensorArenaSize = 50 * 1024;
uint8_t tensorArena[tensorArenaSize];

// BLE configuration
BLEService digitService("19b10000-e8f2-537e-4f6c-d104768a1214"); // custom service UUID
BLECharacteristic imageChar("19b10001-e8f2-537e-4f6c-d104768a1214", BLEWriteWithoutResponse | BLEWrite, IMG_SIZE);
BLECharacteristic resultChar("19b10002-e8f2-537e-4f6c-d104768a1214", BLERead | BLENotify, 32);

extern "C" void DebugLog(const char* s) {
  Serial.print(s);
}
```

6.5 Setup Function for Arduino BLE

The setup() function initializes everything needed to run the classifier. We start serial communication for debugging and then initialize the BLE module. After BLE setup, TensorFlow Lite Micro is initialized and the model is loaded.

Next, we check the model’s schema version for compatibility and allocate memory for the tensors within the predefined tensor arena. If everything succeeds, we retrieve pointers to the model’s input and output tensors and report how much of the tensor arena was used. Finally, the device is ready to receive images over BLE for inference.

```cpp
void setup() {
  Serial.begin(115200);
  while (!Serial);

  Serial.println("Starting BLE Digit Classifier...");
  Serial.println("Initializing BLE...");
  if (!BLE.begin()) {
    Serial.println("Starting BLE failed!");
    while (1);
  }
  Serial.println("BLE initialized.");

  BLE.setLocalName("DigitClassifier");
  BLE.setAdvertisedService(digitService);
  digitService.addCharacteristic(imageChar);
  digitService.addCharacteristic(resultChar);
  BLE.addService(digitService);

  imageChar.writeValue((uint8_t)0);
  resultChar.writeValue("Waiting");

  Serial.println("Starting BLE advertise...");
  BLE.advertise();
  Serial.println("BLE Device Active, Waiting for Connection...");

  Serial.println("Initializing TensorFlow Lite...");
  model = tflite::GetModel(digits_model_cnn_small_int8_tflite);
  if (model->version() != TFLITE_SCHEMA_VERSION) {
    Serial.println("Model schema mismatch!");
    while (1);
  }

  interpreter = new tflite::MicroInterpreter(model, resolver, tensorArena, tensorArenaSize, &tflErrorReporter);

  Serial.println("Allocating tensors...");
  TfLiteStatus status = interpreter->AllocateTensors();
  if (status != kTfLiteOk) {
    Serial.println("Tensor allocation failed!");
    while (1);
  }

  input = interpreter->input(0);
  output = interpreter->output(0);
  Serial.println("Setup complete. Ready to receive images over BLE.");

  // Print memory used
  size_t used_memory = interpreter->arena_used_bytes();
  Serial.print("Tensor arena used: ");
  Serial.print(used_memory);
  Serial.print(" bytes / ");
  Serial.print(tensorArenaSize);
  Serial.println(" bytes total");
}
```

6.6 Function to Run Inference

The runInference() function performs on-device digit recognition by first converting the received 28×28 image from 0–255 to INT8 (-128 to 127) format, then running it through the model.

- It identifies the predicted digit by selecting the output with the highest score

- Dequantizes it to compute a confidence value

- Sends the result via BLE

```cpp
void runInference() {
  // Map received 0..255 -> int8 -128..127
  for (int i = 0; i < IMG_SIZE; i++) {
    input->data.int8[i] = static_cast<int8_t>(image_buffer[i] - 128);
  }

  // Measure inference time
  unsigned long start_time = millis();
  TfLiteStatus invoke_status = interpreter->Invoke();
  unsigned long end_time = millis();

  if (invoke_status != kTfLiteOk) {
    Serial.println("Inference failed!");
    resultChar.writeValue("Error");
    return;
  }

  // Find best prediction
  int best = 0;
  for (int i = 1; i < output->dims->data[1]; i++) {
    if (output->data.int8[i] > output->data.int8[best]) best = i;
  }

  // Compute confidence
  float scale = output->params.scale;
  int zero_point = output->params.zero_point;
  float confidence = (output->data.int8[best] - zero_point) * scale;

  char result[32];
  sprintf(result, "Digit:%d Conf:%.2f", best, confidence);
  resultChar.writeValue(result);

  Serial.print("Predicted: ");
  Serial.println(result);
  Serial.print("Inference time (ms): ");
  Serial.println(end_time - start_time);
  Serial.print("Tensor arena used: ");
  Serial.println(interpreter->arena_used_bytes());
}
```
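The arithmetic inside runInference() can be mirrored in a few lines of Python, which helps when comparing board output against the desktop model. This sketch assumes the input tensor uses scale 1/255 and zero point -128 (the subtract-128 mapping relies on this) and that the output uses scale 1/256 and zero point -128, the fixed parameters TensorFlow Lite’s int8 quantization spec prescribes for softmax outputs. The function names are illustrative; on the board, the real values come from output->params.

```python
def pixels_to_int8(pixels):
    """0..255 pixels -> int8 inputs, like image_buffer[i] - 128 in the sketch."""
    return [p - 128 for p in pixels]


def top_prediction(output_q, scale=1.0 / 256.0, zero_point=-128):
    """Argmax over int8 scores, then dequantize the winner to a probability."""
    best = 0
    for i in range(1, len(output_q)):
        # Strict '>' keeps the first maximum on ties, as the sketch does.
        if output_q[i] > output_q[best]:
            best = i
    confidence = (output_q[best] - zero_point) * scale
    return best, confidence


print(pixels_to_int8([0, 128, 255]))  # [-128, 0, 127]

scores = [-128] * 10
scores[7] = 127                       # made-up output strongly favoring digit 7
print(top_prediction(scores))         # (7, 0.99609375)
```

Note that an int8 softmax can never report exactly 1.0: its largest code, 127, dequantizes to 255/256 ≈ 0.996.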

6.7 Main Loop Function Running ML on Arduino

The loop() function continuously checks for a connection from a BLE central device. When a central connects, it resets the image buffer state and keeps polling while the connection is active. Incoming image data is read in chunks and stored in image_buffer. Once a full image (IMG_SIZE bytes) has been received, the image_ready flag is set to true and runInference() is called to perform the prediction.

After inference, the image_ready flag is reset and the loop starts again.

```cpp
void loop() {
  BLEDevice central = BLE.central();

  if (central) {
    Serial.print("Connected to central: ");
    Serial.println(central.address());
    received_bytes = 0;
    image_ready = false;

    while (central.connected()) {
      if (imageChar.written()) {
        int len = imageChar.valueLength();
        const uint8_t* data = imageChar.value();
        for (int i = 0; i < len && received_bytes < IMG_SIZE; i++) {
          image_buffer[received_bytes++] = data[i];
        }
        if (received_bytes >= IMG_SIZE) {
          image_ready = true;
          received_bytes = 0;
        }
      }

      if (image_ready) {
        Serial.println("Image received. Running inference...");
        runInference();
        image_ready = false;
      }
    }

    Serial.print("Disconnected from central: ");
    Serial.println(central.address());
  }
}
```
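The receive logic in loop() is a small state machine: append bytes until IMG_SIZE is reached, flag the image, and reset the counter. Here is a Python sketch of the same logic, useful for reasoning about edge cases off-device; the ImageAssembler class is hypothetical, and like the sketch it silently drops any bytes in a write beyond the 784th.

```python
IMG_SIZE = 28 * 28  # 784 bytes


class ImageAssembler:
    """Mirrors the byte-counting receive logic of the Arduino loop()."""

    def __init__(self, size=IMG_SIZE):
        self.size = size
        self.buffer = bytearray(size)
        self.received = 0

    def on_write(self, data):
        """Handle one BLE write; return the full image once complete, else None."""
        for b in data:
            if self.received >= self.size:
                break  # surplus bytes in this write are dropped, as in the sketch
            self.buffer[self.received] = b
            self.received += 1
        if self.received >= self.size:
            self.received = 0  # ready for the next image
            return bytes(self.buffer)
        return None


assembler = ImageAssembler()
image = bytes(range(256)) * 3 + bytes(16)  # 784 bytes of test data
results = [assembler.on_write(image[i:i + 128]) for i in range(0, len(image), 128)]
print([r is not None for r in results])  # [False, False, False, False, False, False, True]
```

One consequence worth knowing: received_bytes is only reset on a new connection or after a complete image, so if a write without response is lost, every following image stays misaligned until the central reconnects.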

Gradio App to Manage Inputs for Deploying ML on Arduino

7.1 Import Dependencies and Define Globals

Here, you have to replace DEVICE_ADDR = "84:45:7d:35:39:74" with your bluetooth MAC address.

```python
import time, asyncio
import gradio as gr
import numpy as np
from PIL import Image
from bleak import BleakClient

# BLE configuration
DEVICE_ADDR = "84:45:7d:35:39:74"  # Replace with your board's BLE MAC
IMG_UUID = "19b10001-e8f2-537e-4f6c-d104768a1214"  # image write characteristic
RESULT_UUID = "19b10002-e8f2-537e-4f6c-d104768a1214"  # result notify characteristic
TARGET_SIZE = (28, 28)
PREVIEW_SIZE = (128, 128)
CHUNK = 128  # BLE write chunk size in bytes
```

7.2 Function To Send Image to Arduino Nano BLE over Bluetooth

In the following function, we preprocess the image, prepare it for BLE communication, and send and receive data as required. The steps are as follows.

- Load the image, convert to grayscale, and resize to model input.

- Convert the image to bytes for BLE transfer.

- Connect to the BLE device.

- Set up a callback to receive the inference result from the MCU.

- Send the image in small BLE-safe chunks.

- Wait for the MCU to send back the prediction (with a timeout).

- Stop notifications and return the prediction along with a preview of the image.

```python
# Send image to BLE + wait for prediction
async def send_image_ble(image_path):
    # Load image and resize to match model input
    img = Image.open(image_path).convert("L").resize(TARGET_SIZE)
    arr = np.array(img, dtype=np.uint8)

    # Convert to bytes for BLE transfer
    data_bytes = arr.tobytes()

    async with BleakClient(DEVICE_ADDR) as client:
        if not client.is_connected:
            raise Exception("BLE connection failed")
        print("✅ Connected to BLE device")

        result_text = None

        # Callback for inference result
        def callback(sender, data):
            nonlocal result_text
            try:
                result_text = data.decode(errors="ignore").strip()
                print("Received result:", result_text)
            except Exception as e:
                print("Decode error:", e)

        await client.start_notify(RESULT_UUID, callback)

        # Send image in chunks (BLE-safe)
        print("Sending image data...")
        for i in range(0, len(data_bytes), CHUNK):
            await client.write_gatt_char(IMG_UUID, data_bytes[i:i+CHUNK], response=False)
            await asyncio.sleep(0.03)

        # Wait for MCU inference result
        print("⏳ Waiting for inference result...")
        for _ in range(100):
            if result_text:
                break
            await asyncio.sleep(0.05)

        await client.stop_notify(RESULT_UUID)

    if result_text is None:
        result_text = "No response from MCU"

    return result_text, img.resize(PREVIEW_SIZE).convert("L")


def send_image_sync(image_path):
    """Synchronous wrapper for Gradio callback"""
    return asyncio.run(send_image_ble(image_path))
```
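The board replies with a string built by sprintf(result, "Digit:%d Conf:%.2f", ...). If you want the digit and confidence as separate values, for example to feed separate Gradio components, a small parser helps. parse_result is a hypothetical helper, not part of the downloaded code; it returns None for anything that does not match, such as "Error" or the "No response from MCU" fallback.

```python
import re

# Matches the sketch's format string "Digit:%d Conf:%.2f"
RESULT_RE = re.compile(r"^Digit:(\d+)\s+Conf:(-?\d+(?:\.\d+)?)$")


def parse_result(text):
    """Return (digit, confidence) from the MCU reply, or None if it does not match."""
    if text is None:
        return None
    match = RESULT_RE.match(text.strip())
    if match is None:
        return None
    return int(match.group(1)), float(match.group(2))


print(parse_result("Digit:7 Conf:0.99"))     # (7, 0.99)
print(parse_result("No response from MCU"))  # None
```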

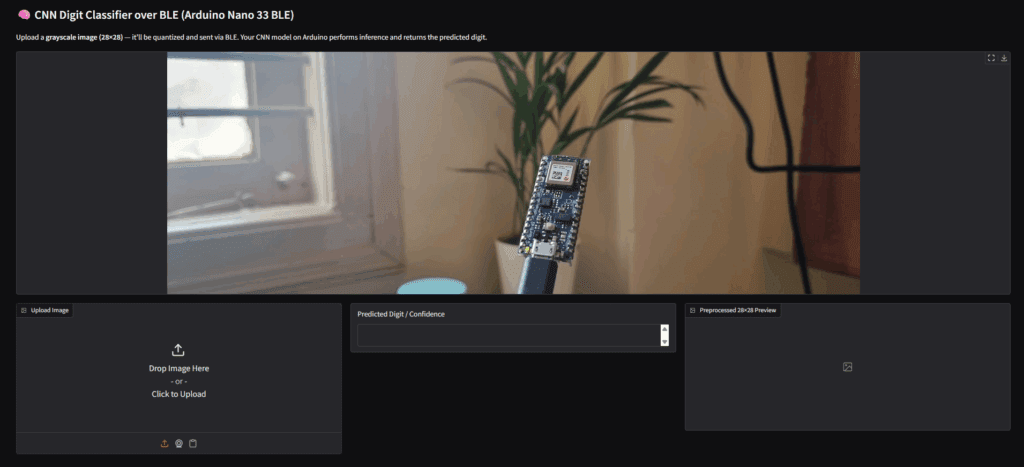

7.3 Gradio App UI to Send Image and Receive Prediction

```python
# Gradio UI
with gr.Blocks() as demo:
    gr.Markdown("## CNN Digit Classifier over BLE (Arduino Nano 33 BLE)")
    gr.Markdown(
        "Upload a **grayscale image (28×28)** — it’ll be quantized and sent via BLE. "
        "Your CNN model on Arduino performs inference and returns the predicted digit."
    )
    gr.Image("../arduino-nano-33-BLE.jpg", show_label=False, elem_id="banner")

    with gr.Row():
        inp = gr.Image(type="filepath", label="Upload Image")
        out_text = gr.Textbox(label="Predicted Digit / Confidence")
        out_preview = gr.Image(label="Preprocessed 28×28 Preview")

    inp.change(fn=send_image_sync, inputs=inp, outputs=[out_text, out_preview])

if __name__ == "__main__":
    demo.launch()
```

Deploying ML Models on Arduino Conclusion

With this, we wrap up this article on ML on Arduino. I hope you enjoyed it and learned something new. Despite not having an MPU, the Nano 33 BLE did well, all within a tightly constrained 256 kB of memory. Of course, it will not deliver groundbreaking accuracy, but the performance is still commendable. If the Nano 33 can handle tasks like classification, we can certainly hope for better results with the Arduino Uno Q.

I just hope that in the long run, Arduino maintains its open-source, community-oriented ethos, which could be difficult under a profit-driven giant like Qualcomm.

Running ML on Arduino is very much possible; just don’t expect to run large models or perform real-time detection and tracking of multiple objects.

As interesting as the Arduino Uno Q SBC sounds, it is not built to replace the Raspberry Pi any time soon. Its philosophy centers on low power consumption, efficiency, and low-latency microcontroller operations.

The Uno Q ships with a custom Debian-based OS; as of November 2025, I can’t comment on support for mainstream Linux distributions such as Ubuntu.
