To develop AI systems that are genuinely capable in real-world settings, we need models that can process and integrate both visual and textual information with high precision. This is the focus of Large Multimodal Models (LMMs), which combine language understanding with visual perception to enable richer, more context-aware intelligence. Among the most significant open-source contributions to this field is LLaVA (Large Language and Vision Assistant)—a model that bridges vision and language through a tightly coupled architecture built for practical reasoning across modalities.

In this post, we go beyond a surface-level summary and take a comprehensive look at the technical foundations of LLaVA and its enhanced version, LLaVA-1.5. We’ll explore the model architecture, the two-stage alignment and fine-tuning strategy, the carefully curated multimodal datasets released with the project, and the key implementation innovations that have made LLaVA a cornerstone of the open-source multimodal ecosystem.

- Visual Instruction Tuning

- LLaVA’s Open-Source Datasets

- LLaVA Architecture: A Model of Simplicity

- The Two-Stage Training Regimen: A Step-by-Step Breakdown

- LLaVA-1.5: The “Aha!” Moments and Key Technical Improvements

- Performance Benchmarks

- Getting Hands-On: Inference Code

- Key Takeaways: LLaVA in a Nutshell

- Conclusion

- References

Visual Instruction Tuning

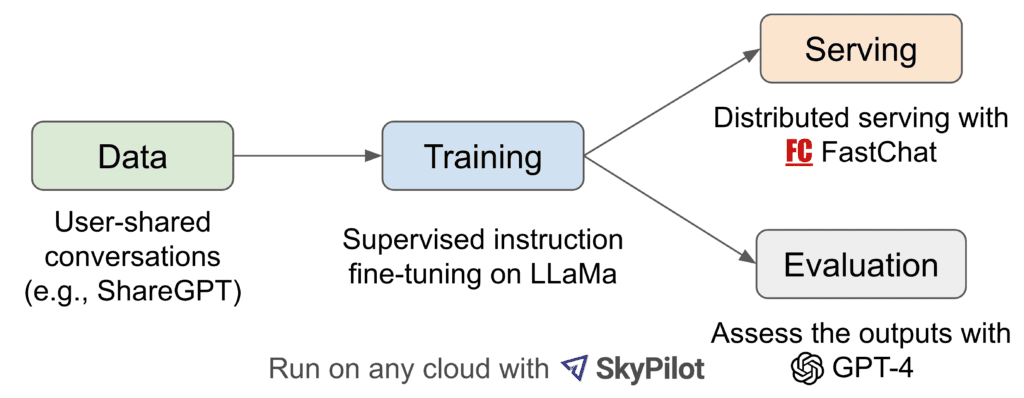

Instruction tuning was the key innovation that transformed base language models into capable, conversational assistants like ChatGPT. However, extending this paradigm to multimodal models—particularly those involving images—poses significant challenges. Curating large-scale datasets of image-based instructions and responses manually is prohibitively slow, costly, and difficult to scale.

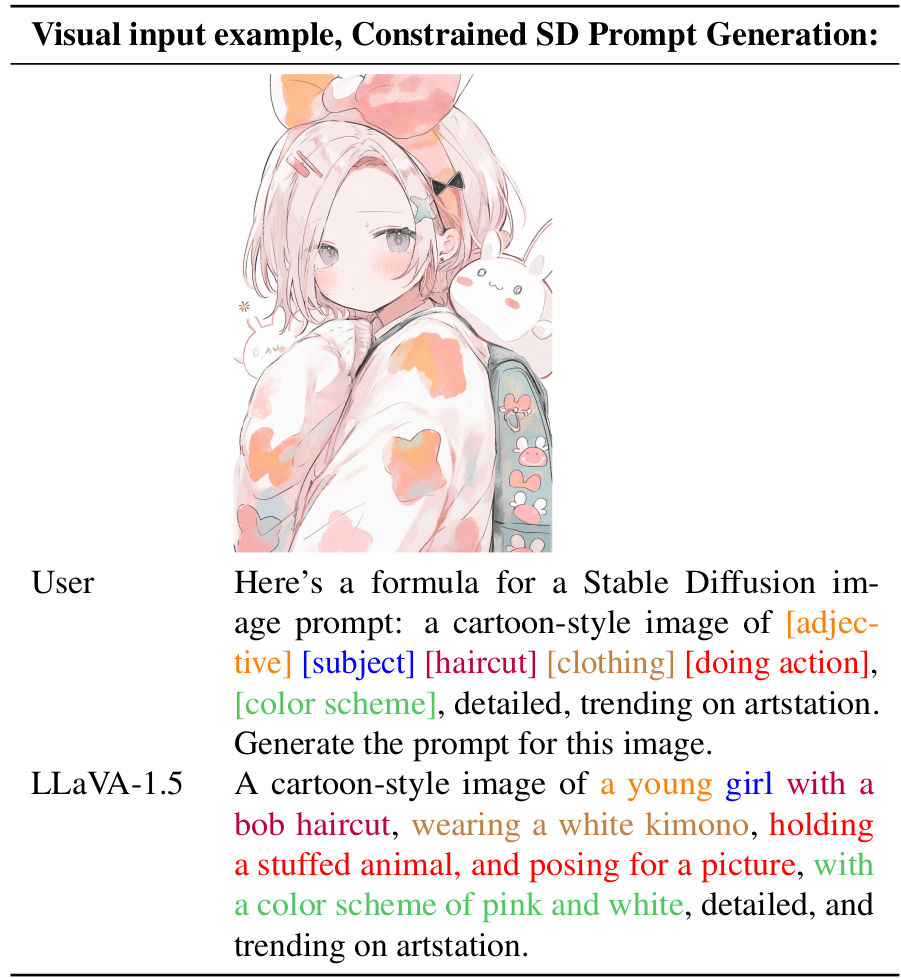

To address this, the authors of the LLaVA paper proposed a practical and scalable alternative: Visual Instruction Tuning. Instead of relying on extensive human annotation, they used a powerful existing LLM, GPT-4, to generate multimodal instruction-response pairs. Because the GPT-4 they used accepted only text, each image was described to it symbolically through its captions and object bounding boxes, which effectively turned the model into a data generator. This strategy sharply reduced annotation cost and produced a diverse, high-quality dataset that played a critical role in LLaVA’s training and overall performance.
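To make the idea concrete, here is what a text-only view of an image might look like. This is a minimal sketch. build_generation_prompt is a hypothetical helper, and its layout (captions first, then normalized [x1, y1, x2, y2] boxes, then the task instruction) only approximates the kind of context described in the paper. It is not the authors' exact prompt.

```python
def build_generation_prompt(captions, boxes, task_instruction):
    """Render an image's symbolic context as plain text for a text-only LLM.

    Hypothetical helper: `boxes` is a list of (category, (x1, y1, x2, y2)) pairs
    with coordinates normalized to [0, 1].
    """
    lines = ["Captions:"]
    lines += [f"- {caption}" for caption in captions]
    lines.append("Objects (category: [x1, y1, x2, y2]):")
    for category, box in boxes:
        coords = ", ".join(f"{v:.2f}" for v in box)
        lines.append(f"- {category}: [{coords}]")
    lines.append("")  # blank line before the instruction
    lines.append(task_instruction)
    return "\n".join(lines)


prompt = build_generation_prompt(
    captions=["A man rides a bicycle down a city street."],
    boxes=[("person", (0.31, 0.20, 0.55, 0.88)), ("bicycle", (0.28, 0.45, 0.60, 0.95))],
    task_instruction="Write a multi-turn conversation between a user asking about this image and an assistant.",
)
print(prompt)
```

GPT-4 received text like this, together with a few hand-written examples for in-context learning. From that input it produced the conversations, descriptions, and reasoning questions covered in the next section.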

LLaVA’s Open-Source Datasets

The LLaVA team made a landmark contribution to the multimodal AI community by not only curating but also open-sourcing the data required to train LLaVA models. This move significantly accelerated innovation in the open-source ecosystem and provided a solid foundation for future multimodal research and applications.

LLaVA-Instruct-150K

The first major dataset introduced was LLaVA-Instruct-150K, designed to enable vision-language instruction tuning. The team began with images from the well-established MSCOCO dataset and used GPT-4 in an in-context learning setup to generate three distinct instruction-following data types for each image:

- Conversations: Multi-turn, plausible dialogues between a user and an assistant discussing the image. These teach the model to handle natural conversational flow and context retention.

- Detailed Descriptions: Rich and nuanced paragraphs that go beyond basic captioning, helping the model develop fine-grained scene understanding and descriptive articulation.

- Complex Reasoning: Instructional prompts that require logical inference, spatial reasoning, and interpretation of visual relationships, enabling the model to tackle higher-order visual questions.
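Each generated sample is stored as a JSON record that pairs an image file with a list of turns. The sketch below builds one made-up, conversation-type record that follows the field layout of the released LLaVA JSON files: an id, an image file name, and a conversations list of from/value entries, where <image> marks the position of the visual tokens. check_record is a hypothetical sanity check and is not part of the LLaVA codebase.

```python
import json

# A made-up conversation sample using the field layout of the released LLaVA JSON files.
record = {
    "id": "000000000001",
    "image": "000000000001.jpg",  # file name relative to the COCO image folder
    "conversations": [
        {"from": "human", "value": "<image>\nWhat color is the bus?"},
        {"from": "gpt", "value": "The bus is red with a white stripe along its side."},
        {"from": "human", "value": "Does it look like it is moving?"},
        {"from": "gpt", "value": "No, it appears to be parked at the curb."},
    ],
}


def check_record(sample):
    """Basic sanity checks: alternating human/gpt turns and exactly one <image> placeholder."""
    roles = [turn["from"] for turn in sample["conversations"]]
    alternates = all(r == ("human" if i % 2 == 0 else "gpt") for i, r in enumerate(roles))
    n_images = sum(turn["value"].count("<image>") for turn in sample["conversations"])
    return alternates and n_images == 1


print(json.dumps(record, indent=2))
print("valid:", check_record(record))
```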

The Upgrade: LLaVA-1.5 Data Mixture

For LLaVA-1.5, the authors significantly expanded and diversified the training corpus to address specific limitations of LLaVA-Instruct-150K. The resulting 665K-sample instruction-tuning dataset keeps the original LLaVA instruction data and blends in several other sources, each targeting a specific capability:

- Academic VQA Benchmarks: Incorporating datasets such as VQAv2, GQA, OKVQA, and A-OKVQA, this addition strengthens the model’s performance on fact-based and commonsense visual question answering, particularly short, precise responses.

- OCR & Text-Rich Image Datasets: Datasets like OCR-VQA and TextCaps (whose images come from TextVQA) were included to improve the model’s ability to read, understand, and reason over text embedded within images, an essential real-world skill.

- Region-Level Grounding Data: Using RefCOCO and Visual Genome, the model was trained to understand region-referenced language, such as “the red ball on the right,” improving its spatial grounding and referential accuracy.

- Text-Only Instruction Data (~40K): Recognizing the foundational role of language modeling, the team included high-quality, text-only conversations from ShareGPT. This strengthened the reasoning, dialogue coherence, and general language ability of the Vicuna backbone, leading to stronger multimodal capabilities overall.

This strategically curated data mixture is arguably as critical to LLaVA’s performance as the model architecture itself. By targeting both general capabilities and specific weaknesses, the dataset plays a central role in enabling LLaVA-1.5 to perform robustly across a wide range of vision-language tasks.
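At the data level, a mixture like this is a single list of samples drawn from every source and shuffled together, so each training batch contains a mix of task types. The released 665K mixture already comes pre-merged, and the sketch below only illustrates the idea. build_mixture and the source tag are hypothetical additions that make the composition easy to inspect.

```python
import random


def build_mixture(sources, seed=0):
    """Merge per-source sample lists into one shuffled list, tagging each sample's origin.

    `sources` maps a source name (e.g. "gqa") to a list of sample dicts.
    The input dicts are copied, not modified.
    """
    mixed = [dict(sample, source=name) for name, samples in sources.items() for sample in samples]
    random.Random(seed).shuffle(mixed)  # seeded shuffle for a reproducible order
    return mixed


toy_sources = {
    "llava_instruct": [{"id": "a1"}, {"id": "a2"}],
    "gqa": [{"id": "g1"}],
    "sharegpt": [{"id": "s1"}, {"id": "s2"}],
}
for sample in build_mixture(toy_sources):
    print(sample["source"], sample["id"])
```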

The image folders used by the fine-tuning mixture are expected in the following directory layout:

```
├── coco
│   └── train2017
├── gqa
│   └── images
├── ocr_vqa
│   └── images
├── textvqa
│   └── train_images
└── vg
    ├── VG_100K
    └── VG_100K_2
```
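Before you launch fine-tuning, you can confirm that every image folder in this layout is in place. The sketch below is a minimal check that assumes the data root holds exactly these subfolders. The root path in the usage line (./playground/data) is an assumption, so point it at the directory where you extracted the images.

```python
from pathlib import Path

# Image folders from the layout above, relative to the data root.
EXPECTED_DIRS = [
    "coco/train2017",
    "gqa/images",
    "ocr_vqa/images",
    "textvqa/train_images",
    "vg/VG_100K",
    "vg/VG_100K_2",
]


def missing_dirs(root):
    """Return the expected image folders that do not exist under `root`."""
    root = Path(root)
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]


# Hypothetical data root; adjust to your own setup.
missing = missing_dirs("./playground/data")
print("All folders present." if not missing else f"Missing: {missing}")
```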

LLaVA Architecture: A Model of Simplicity

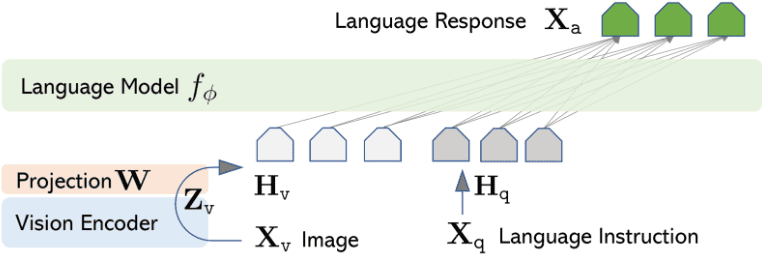

The architecture of LLaVA stands out for its simplicity and effectiveness. Rather than constructing a massive end-to-end model from scratch, the LLaVA team adopted a modular approach: seamlessly integrating two powerful, pre-trained models using a lightweight, trainable component that acts as a bridge between modalities. This design is both computationally efficient and highly scalable.

Vision Encoder (The “Eyes”): CLIP ViT

At the visual front end, LLaVA employs a pre-trained Vision Transformer (ViT) from CLIP—specifically, openai/clip-vit-large-patch14. Trained on hundreds of millions of image-text pairs using a contrastive learning objective, CLIP has proven to produce robust, semantically meaningful visual embeddings.

LLaVA-1.5 upgrades the encoder to openai/clip-vit-large-patch14-336, increasing the input resolution to 336×336, allowing the model to capture finer visual details—an important factor in improving downstream performance.

Images are first resized and split into 14×14 pixel patches, which are then passed through the transformer to generate a sequence of feature embeddings. Importantly, the vision encoder is kept frozen during training. This preserves CLIP’s strong generalization capabilities and reduces the overall number of trainable parameters.
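The number of visual tokens handed to the language model follows directly from the patch size. The quick calculation below assumes square inputs and counts only the patch embeddings, not CLIP's extra [CLS] token. num_visual_tokens is an illustrative helper name.

```python
def num_visual_tokens(image_size, patch_size=14):
    """Number of patch embeddings a ViT produces for a square image."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    per_side = image_size // patch_size
    return per_side * per_side


for size in (224, 336):
    print(size, num_visual_tokens(size))
# 224 256   (16 x 16 grid)
# 336 576   (24 x 24 grid)
```

Moving from 224×224 to 336×336 more than doubles the number of visual tokens the LLM must attend to (576 vs. 256). That is the cost of the finer detail.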

Language Model (The “Brain”): Vicuna

The core reasoning and language generation capabilities are provided by Vicuna, a chat model fine-tuned from Meta’s LLaMA. LLaVA-1.5 uses Vicuna v1.5, which is built on Llama 2, in 7B and 13B sizes (for example, lmsys/vicuna-13b-v1.5). Vicuna is known for its conversational fluency and strong instruction-following behavior.

- The Vicuna LLM is responsible for interpreting visual prompts, generating responses, and maintaining dialogue coherence.

- Thanks to instruction tuning, it excels in producing detailed, context-aware text outputs—crucial for visual question answering, scene description, and multimodal interaction.

Modality Translator (The “Bridge”): MLP Projector

The vision encoder and language model operate in distinct representational spaces, so LLaVA introduces a small but critical component to translate between them: the projector module.

- In the original LLaVA, this was a simple linear projection layer that mapped CLIP’s output embeddings (1024-dim) into the language model’s input space (e.g., 5120-dim for Vicuna-13B). Despite its simplicity, it enabled effective vision-language alignment.

- LLaVA-1.5 introduces a more expressive two-layer MLP (Multi-Layer Perceptron) with a GELU activation. This non-linear transformation greatly enhances the model’s ability to bridge modalities, enabling better alignment of visual semantics with the language model’s expectations.

- The projector is the only component LLaVA adds on top of the two pre-trained models, and it is the only module trained in Stage 1. In Stage 2 the language model is fine-tuned alongside it. This keeps the system efficient to train and easy to adapt.
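Both projector variants fit in a few lines of PyTorch. The minimal sketch below assumes the dimensions quoted above (1024-dim CLIP ViT-L/14 features, 5120-dim Vicuna-13B embeddings). The variable names are illustrative and not taken from LLaVA's code.

```python
import torch
from torch import nn

CLIP_DIM = 1024  # CLIP ViT-L/14 feature size
LLM_DIM = 5120   # Vicuna-13B hidden size

# Original LLaVA: a single linear layer.
linear_projector = nn.Linear(CLIP_DIM, LLM_DIM)

# LLaVA-1.5: two linear layers with a GELU in between.
mlp_projector = nn.Sequential(
    nn.Linear(CLIP_DIM, LLM_DIM),
    nn.GELU(),
    nn.Linear(LLM_DIM, LLM_DIM),
)

# One image with 576 patch features (336 / 14 = 24, 24 * 24 = 576).
patch_features = torch.randn(1, 576, CLIP_DIM)
visual_tokens = mlp_projector(patch_features)
print(visual_tokens.shape)  # torch.Size([1, 576, 5120])

n_params = sum(p.numel() for p in mlp_projector.parameters())
print(f"{n_params / 1e6:.1f}M trainable parameters")  # 31.5M trainable parameters
```

Each projected vector has the same width as a word embedding, so the 576 visual tokens can sit in the LLM's input sequence next to ordinary text tokens. At about 31.5M parameters, the projector is tiny compared with the 13B-parameter language model.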

The Two-Stage Training Regimen: A Step-by-Step Breakdown

One of the defining strengths of LLaVA lies in its efficient and modular training strategy. Rather than training the entire model from scratch, the LLaVA team adopted a two-stage training process that strategically leverages pre-trained components and focuses training only where it’s truly needed. Here’s a detailed look at each stage:

Stage 1: Pre-training for Feature Alignment

Goal: Teach the MLP projector to translate visual features into a format the language model can understand.

- What’s Trained: Only the MLP projector is trained. Both the CLIP vision encoder and the Vicuna LLM remain completely frozen.

- The Data: A carefully filtered 558K subset of image-caption pairs from datasets like LAION, CC, and SBU, selected to emphasize concrete visual concepts (e.g., objects and scenes containing identifiable nouns).

- The Task: Standard auto-regressive language modeling. Given an input image, the model must predict its caption, token by token. The only trainable component—the projector—must learn to map CLIP’s visual features into the language model’s embedding space in a way that facilitates this prediction.

- The Outcome: A checkpoint where the MLP projector functions as a competent modality translator, aligning vision embeddings with the LLM’s internal representation space.

- Training Time: Highly efficient—this stage completes in approximately 6 hours on a single 8×A100 GPU node.
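A few lines of PyTorch are enough to sketch the Stage 1 objective. This is a toy illustration under stated assumptions. The sequence is laid out as visual tokens followed by caption tokens, the logits are random stand-ins for the LLM's output, and build_stage1_labels and next_token_loss are hypothetical helper names. torch.nn.functional.cross_entropy ignores positions labeled -100, so only caption tokens contribute to the loss that trains the projector.

```python
import torch
import torch.nn.functional as F

IGNORE_INDEX = -100  # label value skipped by cross_entropy


def build_stage1_labels(num_visual_tokens, caption_ids):
    """Labels for a [visual tokens | caption tokens] sequence: only caption positions are supervised."""
    ignore = torch.full((num_visual_tokens,), IGNORE_INDEX, dtype=torch.long)
    return torch.cat([ignore, caption_ids])


def next_token_loss(logits, labels):
    """Causal LM loss: the logits at position t are scored against the label at position t + 1."""
    return F.cross_entropy(logits[:-1], labels[1:], ignore_index=IGNORE_INDEX)


# Toy example: 4 visual tokens followed by a 3-token caption, vocabulary of 10.
caption_ids = torch.tensor([5, 2, 7])
labels = build_stage1_labels(4, caption_ids)
logits = torch.randn(labels.numel(), 10, requires_grad=True)
loss = next_token_loss(logits, labels)
loss.backward()
print(labels.tolist())  # [-100, -100, -100, -100, 5, 2, 7]
```

Only the last visual position and the caption positions receive gradient. In the real model, that gradient flows back through the frozen LLM into the projector.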

Stage 2: End-to-End Instruction Fine-tuning

Goal: Convert the initialized model into a useful, conversational, multimodal assistant.

- What’s Trained: Both the MLP projector and the Vicuna LLM are now unfrozen and fine-tuned jointly. The CLIP vision encoder remains frozen to preserve its pre-trained visual understanding.

- The Data: The 665K-sample instruction-tuning mixture described earlier, which includes:

  - Multi-turn conversations

  - Visual question answering (VQA)

  - OCR-based reasoning

  - Region-level grounding

  - Text-only instruction tuning

- The Task: Instruction-following fine-tuning on diverse multimodal prompts. The model learns to:

  - Understand and respond to image-based questions

  - Generate detailed scene descriptions

  - Read and reason over textual content within images

  - Maintain coherent and helpful conversations

- Training Time: About 20 hours on an 8×A100 GPU node. Training is distributed across the GPUs, and memory use is kept manageable with DeepSpeed ZeRO, which shards optimizer states, gradients, and (at stage 3) model parameters across devices.
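In Stage 2, the same auto-regressive loss is applied to conversations, but only the assistant's replies are supervised, so the model learns to answer instead of imitating the user. The minimal, framework-free sketch below assumes the conversation has already been tokenized into (role, token IDs) segments. The helper name and token IDs are hypothetical, and real preprocessing must also handle separators and end-of-turn tokens.

```python
IGNORE_INDEX = -100  # same convention as PyTorch's cross_entropy default


def build_supervised_example(segments):
    """Flatten (role, token_ids) segments into input IDs and labels; supervise only assistant tokens."""
    input_ids, labels = [], []
    for role, token_ids in segments:
        input_ids.extend(token_ids)
        if role == "assistant":
            labels.extend(token_ids)
        else:
            labels.extend([IGNORE_INDEX] * len(token_ids))
    return input_ids, labels


# Hypothetical token IDs for a two-turn conversation. In the real model, the first
# user turn would also contain the projected image tokens.
segments = [
    ("user", [11, 12, 13]),
    ("assistant", [21, 22]),
    ("user", [14, 15]),
    ("assistant", [23, 24, 25]),
]
input_ids, labels = build_supervised_example(segments)
print(input_ids)
print(labels)
```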

Why This Matters

This two-phase training approach is highly resource-efficient and avoids redundant computation:

- Stage 1 ensures the projector understands how to “speak the LLM’s language.”

- Stage 2 allows the full system to generalize and behave like a capable assistant—without ever touching the vision encoder.
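The freezing schedule for both stages fits in a single function. This sketch uses a hypothetical miniature model (ToyLlava) whose three submodules stand in for CLIP, the MLP projector, and Vicuna. The layer sizes are arbitrary, and in a real setup you would pass only the trainable parameters to the optimizer.

```python
from torch import nn


class ToyLlava(nn.Module):
    """Hypothetical miniature with LLaVA's three parts; layer sizes are arbitrary."""

    def __init__(self):
        super().__init__()
        self.vision_tower = nn.Linear(8, 4)  # stands in for CLIP ViT
        self.projector = nn.Sequential(nn.Linear(4, 6), nn.GELU(), nn.Linear(6, 6))
        self.llm = nn.Linear(6, 6)           # stands in for Vicuna


def configure_stage(model, stage):
    """Set requires_grad for the two-stage recipe and return the trainable parameter names."""
    model.vision_tower.requires_grad_(False)  # frozen in both stages
    model.projector.requires_grad_(True)      # trained in both stages
    model.llm.requires_grad_(stage == 2)      # unfrozen only in stage 2
    return [name for name, p in model.named_parameters() if p.requires_grad]


model = ToyLlava()
print(configure_stage(model, 1))  # ['projector.0.weight', 'projector.0.bias', 'projector.2.weight', 'projector.2.bias']
print(configure_stage(model, 2))  # the projector parameters plus 'llm.weight' and 'llm.bias'
```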

Together, these stages enable LLaVA to achieve strong performance across a wide range of multimodal tasks, all while maintaining a lightweight and scalable training pipeline.

LLaVA-1.5: The “Aha!” Moments and Key Technical Improvements

LLaVA-1.5 represents a major advancement, not just because it is larger, but because of well-chosen design changes. Its improvements address problems that have long challenged multimodal models, and they let LLaVA-1.5 handle a wider range of tasks at modest cost: the two training stages together still take only about a day on a single 8×A100 node.

The Conversation vs. Q&A Dilemma: Solved by Response Format Prompting

A major challenge for LMMs is handling different types of tasks. Models fine-tuned on academic VQA datasets such as GQA and OKVQA, whose answers are short (often a single word), tend to carry that terseness into every reply and become poor at natural, long-form conversation.

LLaVA-1.5 introduces an elegant yet simple solution: Response Format Prompting.

- For VQA-type tasks, the input prompt includes a suffix such as: “Answer the question using a single word or phrase.”

- This addition provides explicit instruction signals to the language model, guiding it to choose the appropriate response length and tone based on the task.

- The result is a model that can fluidly switch between concise, fact-based answers and natural, conversational responses—without needing task-specific fine-tuning splits.
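At the implementation level, response format prompting is plain string construction. The minimal sketch below assumes the suffix quoted above is appended on a new line after the question (the newline separator is an assumption). format_prompt is a hypothetical helper name.

```python
SHORT_ANSWER_SUFFIX = "Answer the question using a single word or phrase."


def format_prompt(question, short_answer):
    """Append the response-format instruction for short-answer VQA items; leave others unchanged."""
    question = question.strip()
    return f"{question}\n{SHORT_ANSWER_SUFFIX}" if short_answer else question


print(format_prompt("What color is the car?", short_answer=True))
print(format_prompt("Describe this scene in detail.", short_answer=False))
```

The same model sees both kinds of prompts during training, and the suffix alone signals which answer style is expected.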

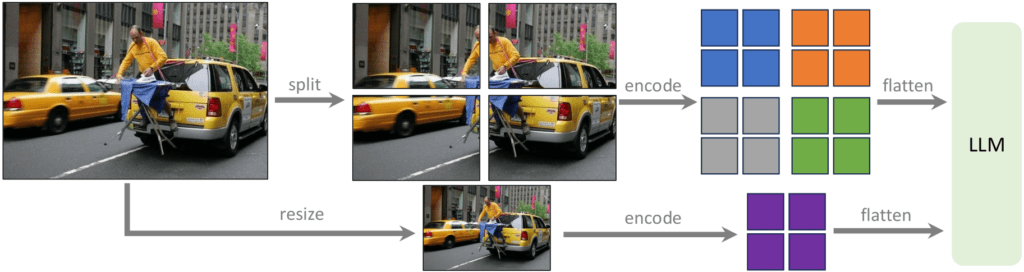

Seeing in HD: High-Resolution Image Understanding with LLaVA-1.5-HD

One of the biggest technical bottlenecks in Vision Transformers (ViTs), including CLIP, is their fixed input resolution—typically capped at 224×224 or 336×336 pixels. Scaling up resolution usually requires retraining the ViT or interpolating positional embeddings, which can degrade performance.

LLaVA-1.5-HD introduces a clever multi-scale processing method to overcome this limitation without altering the frozen vision encoder. Here’s how it works:

High-Resolution Image Processing Pipeline:

- Image Slicing: The input image is divided into a grid of tiles, each sized to match the native input resolution of the CLIP encoder (336×336).

- Independent Encoding: Each patch is passed through the frozen CLIP ViT independently, generating localized feature embeddings.

- Global Context Encoding: A downsampled version of the full image is also processed by CLIP to capture holistic scene-level context.

- Feature Fusion: The model concatenates all patch-level features along with the global context features, which are then passed through the MLP projector to interface with the LLM.

This technique gives LLaVA a fine-grained view of visual content—akin to “seeing in HD”—while preserving computational efficiency and architectural modularity.
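The slicing and global-view steps can be sketched with Pillow. This is a simplified illustration, not LLaVA-1.5-HD's exact procedure. split_for_hd is a hypothetical helper that snaps the image to the nearest whole number of 336×336 tiles by resizing, and it squashes the full image to 336×336 for the global view. Each returned image would then be encoded by CLIP separately before the features are concatenated.

```python
from PIL import Image


def split_for_hd(image, tile=336):
    """Return (tiles, global_view): tile x tile crops covering the image, plus one low-res full view."""
    w, h = image.size
    cols, rows = max(1, round(w / tile)), max(1, round(h / tile))
    grid = image.resize((cols * tile, rows * tile))  # snap to a whole number of tiles
    tiles = [
        grid.crop((c * tile, r * tile, (c + 1) * tile, (r + 1) * tile))
        for r in range(rows)
        for c in range(cols)
    ]
    global_view = image.resize((tile, tile))  # downsampled view for scene-level context
    return tiles, global_view


img = Image.new("RGB", (672, 672))
tiles, global_view = split_for_hd(img)
print(len(tiles), tiles[0].size, global_view.size)
```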

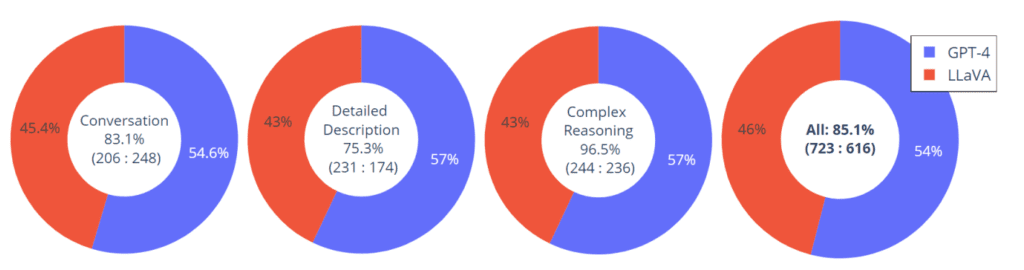

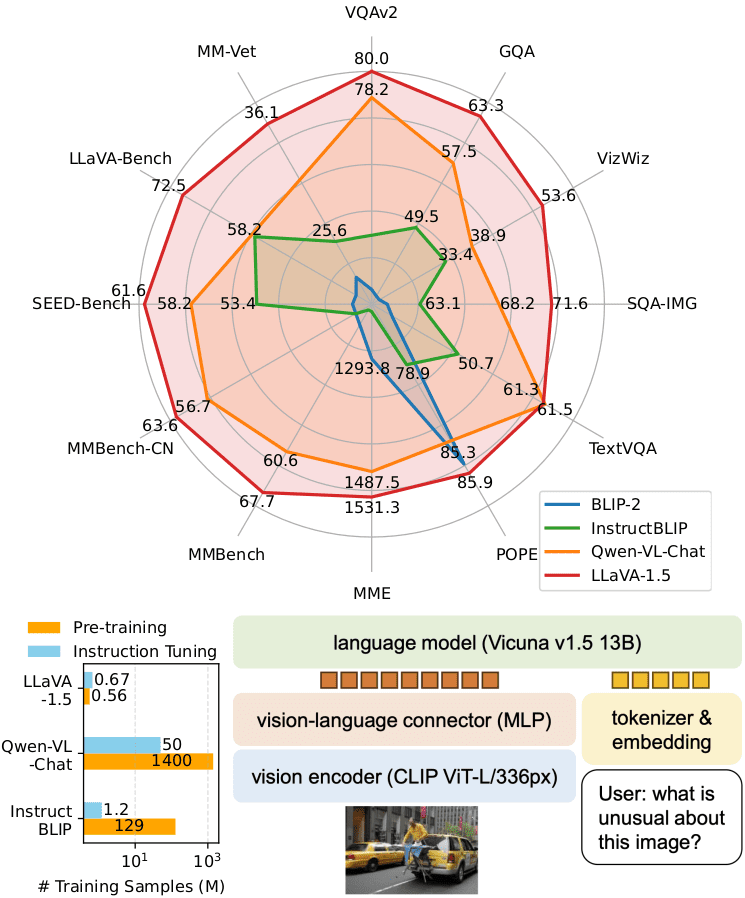

Performance Benchmarks

Let’s look at selected rows from the ablation study in the LLaVA-1.5 paper, which show how each improvement contributed to the final result.

| # | Method Modification | LLM | Resolution | GQA | MME | MM-Vet |
|---|---|---|---|---|---|---|
| 0 | Original LLaVA Baseline | 7B | 224 | – | 809.6 | 25.5 |
| 1 | + VQAv2 | 7B | 224 | 47.0 | 1197.0 | 27.7 |
| 2 | + Format Prompting | 7B | 224 | 46.8 | 1323.8 | 26.3 |
| 3 | + MLP VL Connector | 7B | 224 | 47.3 | 1355.2 | 27.8 |
| 4 | + OKVQA/OCR | 7B | 224 | 50.0 | 1377.6 | 29.6 |
| 5 | + Scale-up Resolution | 7B | 336 | 51.4 | 1450 | 30.3 |
| 6 | + Scale-up LLM | 13B | 336 | 63.3 | 1531.3 | 36.1 |

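Scaling the LLM up to 13B gives the best scores in the ablation. Part of the large GQA jump in the last row, however, comes from intermediate steps omitted above, including the addition of GQA training data. The comparison below sets LLaVA-1.5 against other models. The 13B variants lead BLIP-2 and Qwen-VL on VQAv2 and GQA, but Qwen-VL still scores higher on TextVQA, and the specialist PaLI-X remains ahead on all three benchmarks.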

| Model | LLM | Image Size | Pre-train Size | Finetune Size | VQAv2 | GQA | TextVQA |
|---|---|---|---|---|---|---|---|
| BLIP-2 | Vicuna-13B | 224×224 | 129M | – | 65.0 | 41 | 42.5 |
| QwenVL | Qwen-7B | 448×448 | 1.4B* | 50M* | 78.8 | 59.3 | 63.8 |
| LLaVA-1.5 | Vicuna-7B | 336×336 | 558K | 665K | 78.5 | 62.0 | 58.2 |
| LLaVA-1.5 | Vicuna-13B | 336×336 | 558K | 665K | 80.0 | 63.3 | 61.3 |
| LLaVA-1.5-HD | Vicuna-13B | 448×448 | 558K | 665K | 81.8 | 64.7 | 62.5 |
| PaLI-X-55B (Specialist SOTA) | – | – | – | – | 86.1 | 72.1 | 71.4 |

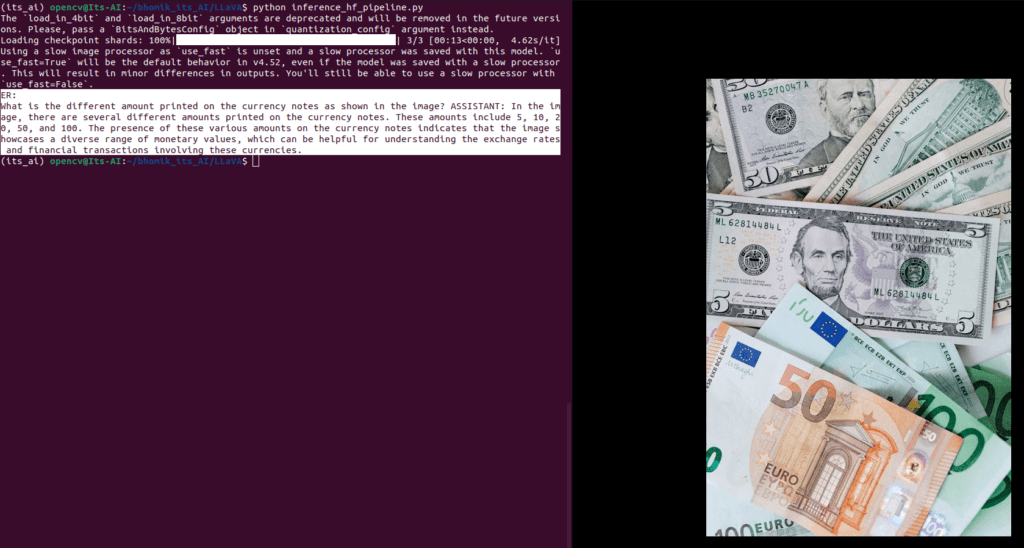

Getting Hands-On: Inference Code

LLaVA’s open-source nature is its greatest strength: you can download the weights and run the model yourself. So, let’s write some code 🙂

There are two ways to run inference with LLaVA. One is to clone LLaVA’s GitHub repository and follow the instructions provided there. The other is to use the model hosted on Hugging Face and run inference with Hugging Face’s transformers library.

After trying both, we recommend the Hugging Face route. Its pipeline is robust and has few dependency issues, whereas running inference from the cloned GitHub repo was more fragile. This post therefore gives instructions and results for the Hugging Face pipeline.

Let’s get started:

```shell
# Install the libraries (ideally in a fresh virtual environment).
# bitsandbytes and accelerate are needed for the 4-bit loading used below.
pip install transformers accelerate bitsandbytes
```

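```python
# Import the necessary libraries.
import torch
from PIL import Image
from transformers import AutoProcessor, BitsAndBytesConfig, LlavaForConditionalGeneration
```

Next, we instantiate the model and the processor:

```python
model_id = "llava-hf/llava-1.5-7b-hf"

# Quantize the weights to 4-bit with bitsandbytes; run computations in float16.
quant_config = BitsAndBytesConfig(load_in_4bit=True, bnb_4bit_compute_dtype=torch.float16)

model = LlavaForConditionalGeneration.from_pretrained(
    model_id,
    torch_dtype=torch.float16,
    low_cpu_mem_usage=True,
    quantization_config=quant_config,
    device_map={"": 0},  # load onto GPU 0; 4-bit models cannot be moved later with .to()
)

processor = AutoProcessor.from_pretrained(model_id)
```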

You may be wondering why we use the 7B model when the 13B model would perform better. The reason is memory: we ran all our experiments on a local machine with only 6 GB of VRAM, and the 13B model needs more than that.

Even the 7B model does not fit at half precision, because its weights alone take roughly 14 GB (7 billion parameters × 2 bytes). We therefore load it with the load_in_4bit option, which brings memory usage down to around 4.9 GB, within our 6 GB budget.
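A back-of-the-envelope estimate shows why quantization matters here. The sketch counts only the language model's weights (parameters × bits per parameter). Real usage is higher because of the vision encoder, activations, the KV cache, and CUDA overhead, which fits with the roughly 4.9 GB observed for the 4-bit 7B model. weight_memory_gb is an illustrative helper name.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate size of the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


for name, params in [("7B", 7e9), ("13B", 13e9)]:
    for label, bits in [("fp16", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{name:>3} {label:>5}: {weight_memory_gb(params, bits):5.1f} GB")
```

For 7B this gives 14.0 GB in fp16 and 3.5 GB in 4-bit. For 13B it gives 26.0 GB and 6.5 GB, so even the 4-bit 13B weights exceed a 6 GB card.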

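```python
conversation = [
    {
        "role": "user",
        "content": [
            {"type": "text", "text": "input_prompt"},  # replace with your instruction or question
            {"type": "image"},                         # placeholder for the image passed to the processor
        ],
    },
]

prompt = processor.apply_chat_template(conversation, add_generation_prompt=True)
```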

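In the code above, apply_chat_template converts the conversation into the prompt format LLaVA-1.5 was trained on, a USER/ASSISTANT exchange containing an <image> placeholder. Setting add_generation_prompt=True ends the prompt where the assistant’s reply should begin. Replace "input_prompt" with your own instruction. You can also ask for a specific output structure, such as JSON, in that instruction, and the model will usually try to follow it.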

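```python
image_path = "input_image"  # replace with the path to your image
raw_image = Image.open(image_path).convert("RGB")

# Tokenize the prompt, preprocess the image, and move the tensors to GPU 0 (float tensors cast to float16).
inputs = processor(images=raw_image, text=prompt, return_tensors="pt").to(0, torch.float16)

# Greedy decoding, up to 200 new tokens.
output = model.generate(**inputs, max_new_tokens=200, do_sample=False)

# Decode only the newly generated tokens, skipping the echoed prompt.
prompt_len = inputs["input_ids"].shape[1]
print(processor.decode(output[0][prompt_len:], skip_special_tokens=True))
```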

Finally, model.generate runs greedy decoding (do_sample=False) for up to 200 new tokens, and processor.decode turns the generated token IDs back into readable text.

The screenshot above shows the LLaVA inference script (download link provided below) being run from the terminal, together with the corresponding input image.

Key Takeaways: LLaVA in a Nutshell

- The Core Idea Was “Visual Instruction Tuning”: LLaVA’s breakthrough was using GPT-4 to automatically generate a large dataset of image-based conversations and questions. This bypassed the slow, expensive process of human annotation and set a template that much subsequent open multimodal work followed.

- The Architecture is Smart and Efficient: LLaVA connects a frozen vision encoder (CLIP) to a pre-trained large language model (Vicuna) through a small, trainable MLP projector. Only the projector is trained at first, and the LLM is fine-tuned later, so the design builds on pre-trained models without costly training from scratch.

- A Clever Two-Stage Training Process: First, only the MLP projector is pre-trained to learn how to “translate” images into a language the LLM understands. Second, the projector and the LLM are fine-tuned together on instruction data to become a helpful visual assistant.

- LLaVA-1.5 Mastered Different Tasks with Better Data: Much of its performance leap came from enriching the training data with academic VQA and OCR datasets, and from using “response format prompting” to teach the model to handle both short, factual answers and long, conversational replies.

- It Created the Open-Source Blueprint for VLMs: More than just a model, LLaVA provided a powerful, reproducible, and accessible roadmap for building high-performance visual assistants, democratizing research and setting the stage for the next wave of multimodal AI.

Conclusion

LLaVA wasn’t just another AI model—it was a big step forward for open-source research. By keeping things simple—using strong pre-trained models and adding a small piece to connect them—it showed that powerful visual assistants don’t need huge, complex setups. More importantly, LLaVA made it easier for others to build and improve multimodal AI. It set a clear example that’s still helping researchers and developers create AI that can both see and understand the world, just like we do.

References

- LLaVA GitHub repository

- Improved Baselines with Visual Instruction Tuning (LLaVA-1.5 paper)

- LLaVA on Hugging Face

- Visual Instruction Tuning (LLaVA paper)

- LLaVA-NeXT official blog post
