If you haven’t been to the Wizarding World of Harry Potter or don’t know what an ‘interactive wand’ is, you are kinda missing out on a really well-designed experience that uses computer vision to create magical interactions. As this video shows, when you visit the Wizarding World at Universal Studios, you can buy a wand and use it to interact with mechatronic displays and objects placed in strategic spots throughout the park.

How does this work?

Well, the wand actually has a glass-like material attached to its tip that reflects IR light very efficiently, acting like the markers used in IR-based motion capture systems.

The wand as seen through an IR camera (when IR light is projected on it):

An IR transceiver system, consisting of IR light emitters and an IR camera, tracks the motion of the wand in its field of view and uses image processing to correlate this motion to a stored gesture, which then triggers different interactions.

My Setup

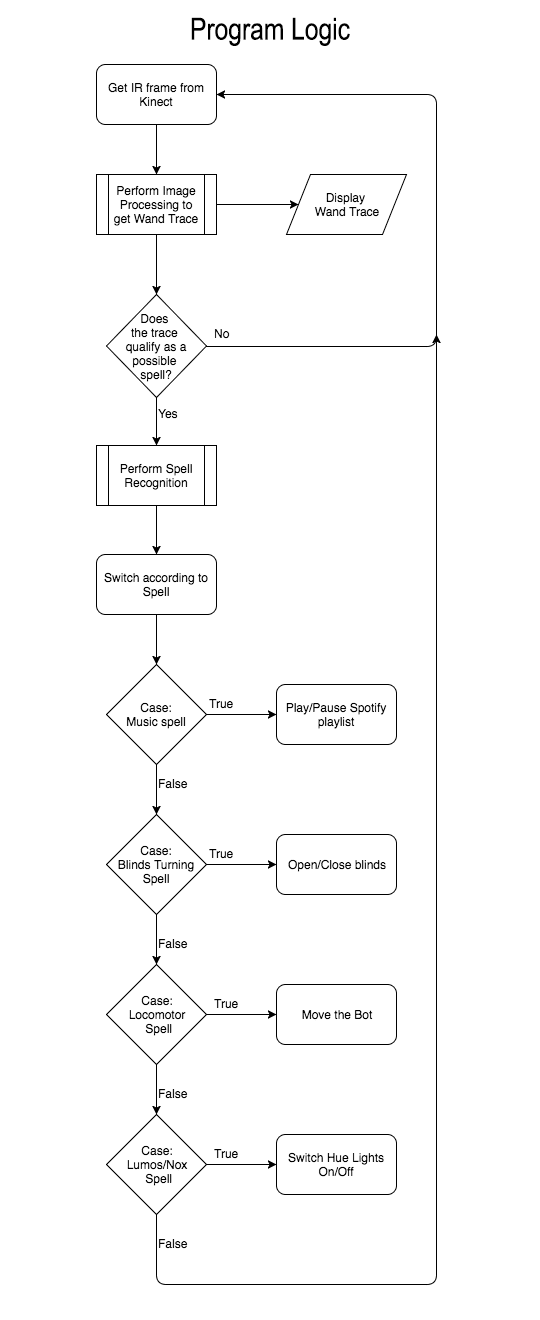

I used a very similar setup for my project. I used the Xbox One Kinect as my IR transceiver (take a look at the Kinect’s IR light projections in this post and guess why I didn’t use the Xbox 360 Kinect) and, with OpenCV, performed wand tracking and ‘spell’ recognition, which is essentially character recognition. This post covers only the CV part in detail, but to understand how it fits into the overall structure of the program, take a look at the flowchart below.

The entire code for this project is on GitHub, but we will only be concerned with the ‘ImageProcessor’ class files in the repo.

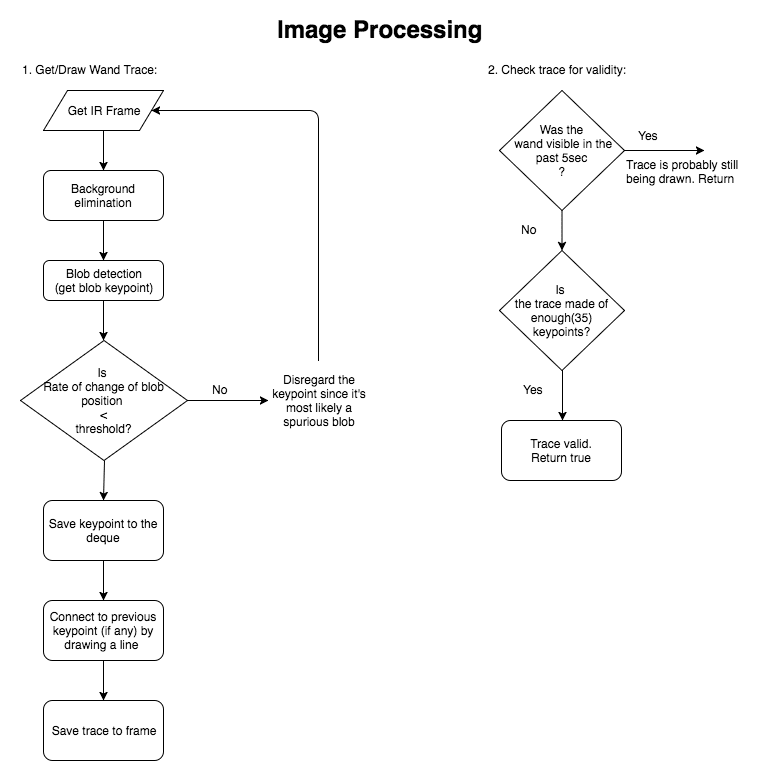

As shown in the flowchart above, for every frame received from the Kinect’s IR camera, we start by performing blob detection, looking for a small, white blob. The parameters used for OpenCV’s blob detector are given below.

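```cpp
#include <opencv2/features2d.hpp>

using namespace cv;

// _params (SimpleBlobDetector::Params) and _blobDetector
// (Ptr<SimpleBlobDetector>) are members of the ImageProcessor class.

// Binarization thresholds: only bright pixels are kept
_params.minThreshold = 150;
_params.maxThreshold = 255;

// Filter by color: look for white (bright) blobs
_params.filterByColor = true;
_params.blobColor = 255;

// Filter by area (in pixels): the wand tip is a small blob
_params.filterByArea = true;
_params.minArea = 0.05f;
_params.maxArea = 20;

// Filter by circularity
_params.filterByCircularity = true;
_params.minCircularity = 0.5;

// Filter by convexity
_params.filterByConvexity = true;
_params.minConvexity = 0.5;

// Don't filter by inertia
_params.filterByInertia = false;

// Create the blob detector
_blobDetector = SimpleBlobDetector::create(_params);
```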

The problem with relying on blob detection alone is that it also picks up reflections from the surroundings. We minimize this interference with background elimination, using OpenCV’s Gaussian Mixture-based Background/Foreground Segmentation Algorithm (MOG2). This gets rid of almost all static reflections, although reflections off items like eyeglasses and watches can still cause interference.

Next, to filter out unwanted blobs that survive background elimination, we check whether each blob is moving at a reasonable speed. Spurious blobs (noise) jump in and out of the frame, whereas the wand is expected to move at a fairly constant speed. As you can see, there’s hardly any noise left afterward. The picture below shows the trace superimposed on the frame after background subtraction.
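The speed check can be sketched as follows. This is only one reading of “a reasonable speed”: it accepts a new keypoint if the speed implied by the jump from the previous keypoint lies inside a range. The `Point2` type, the helper names and both speed limits are hypothetical; the post does not publish the actual thresholds.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical 2D point standing in for a blob keypoint's position
struct Point2 {
    float x;
    float y;
};

// Hypothetical limits in pixels per second (not the project's real values)
constexpr float kMinSpeed = 20.0f;    // slower than this: not a deliberate stroke
constexpr float kMaxSpeed = 2000.0f;  // faster than this: a blob "jumping" across the frame

// Speed between two consecutive detections, in pixels per second
float blobSpeed(const Point2& prev, const Point2& curr, float dtSeconds) {
    assert(dtSeconds > 0.0f && "frame interval must be positive");
    return std::hypot(curr.x - prev.x, curr.y - prev.y) / dtSeconds;
}

// Keep the new keypoint only if the implied speed is plausible for a wand
bool isPlausibleWandMotion(const Point2& prev, const Point2& curr, float dtSeconds) {
    const float v = blobSpeed(prev, curr, dtSeconds);
    return v >= kMinSpeed && v <= kMaxSpeed;
}
```

For example, at 30 frames per second a 5-pixel step corresponds to 150 px/s and passes, while a 400-pixel jump between frames is rejected as noise.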

To build a complete trace, we store consecutive blob keypoints in a deque, which makes it easy to grow and shrink the queue dynamically as the trace is drawn. I recorded the maximum number of keypoints in my spell traces and used that as the deque’s size limit (we don’t want the deque to grow uncontrollably). Each time a new keypoint arrives, we draw a line between it and the previous keypoint. Since the Kinect’s frame rate is pretty good, these line segments are short enough to form a smooth curve.
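The bounded deque described above might look like the sketch below. `TraceBuffer` and `Point2` are hypothetical names, not classes from the repo. `add` returns the segment to draw (previous point, new point), and the oldest point is dropped once the size limit is exceeded.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <optional>
#include <utility>

// Hypothetical point type standing in for a keypoint position
struct Point2 {
    float x;
    float y;
};

// Keeps at most maxSize of the most recent keypoints of a trace
class TraceBuffer {
public:
    explicit TraceBuffer(std::size_t maxSize) : maxSize_(maxSize) {
        assert(maxSize > 0 && "size limit must be positive");
    }

    // Appends a keypoint and returns the line segment (previous, new) to draw,
    // or std::nullopt if this is the first point of the trace.
    std::optional<std::pair<Point2, Point2>> add(const Point2& p) {
        std::optional<std::pair<Point2, Point2>> segment;
        if (!points_.empty()) {
            segment = std::make_pair(points_.back(), p);
        }
        points_.push_back(p);
        if (points_.size() > maxSize_) {
            points_.pop_front();  // cap memory by discarding the oldest point
        }
        return segment;
    }

    void clear() { points_.clear(); }
    std::size_t size() const { return points_.size(); }
    const std::deque<Point2>& points() const { return points_; }

private:
    std::size_t maxSize_;
    std::deque<Point2> points_;
};
```

In an OpenCV program, each returned segment could be drawn with `cv::line` onto the trace image after converting the two endpoints to `cv::Point`.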

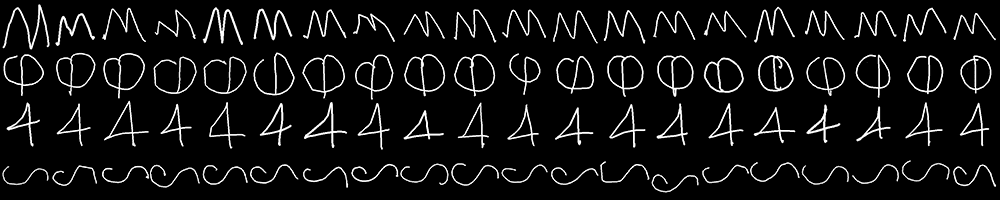

The final piece of the puzzle is spell recognition. I referred to Satya’s tutorial on handwritten digit classification to build this part. The only major difference in my implementation is the size of the training dataset. I wasn’t up for building a dataset of 5000 reference images, so I used a little trick: I chose ‘characters’ that are very easy for the SVM to distinguish from one another. That way, even with a small training dataset, it can still classify the characters with high accuracy. (It still took quite a few hours of drawing, saving and sorting trace images.)

Once the training is done, we use the trained model to perform spell recognition. A combination of time-, trace-size- and speed-based checks determines whether a trace is a valid character. If it is, we classify the character and use the result to perform actions! There is a problem with this kind of ‘spell check’, though: there is no foolproof way to rule out a trace that was drawn incorrectly or isn’t a trained character. If such a trace is passed to the SVM, it will misclassify it as whichever trained character it finds closest. This would be largely solved by some method of determining the ‘confidence level’ of the classification. I am open to suggestions and corrections.
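A combined validity check could be sketched like this. It is a hypothetical illustration, not the project’s code: “trace size” is read here as both the number of keypoints and the bounding-box extent, speed is the average along the path, and every threshold is a made-up placeholder since the post does not give the real values.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical timestamped keypoint; t is the capture time in seconds
struct TimedPoint {
    float x;
    float y;
    double t;
};

// Hypothetical thresholds (placeholders, not the project's values)
struct TraceLimits {
    std::size_t minPoints = 10;  // minimum number of keypoints
    float minExtent = 40.0f;     // px, larger side of the bounding box
    double minDuration = 0.5;    // s
    double maxDuration = 3.0;    // s
    double minAvgSpeed = 50.0;   // px/s, path length / duration
};

// True if the trace passes the time, size and speed checks and should be
// handed to the classifier.
bool isValidTrace(const std::vector<TimedPoint>& trace, const TraceLimits& lim = {}) {
    assert(lim.minDuration <= lim.maxDuration);
    if (trace.size() < lim.minPoints || trace.size() < 2) return false;

    // Time check
    const double duration = trace.back().t - trace.front().t;
    if (duration < lim.minDuration || duration > lim.maxDuration) return false;

    // Size check (bounding box) and path length for the speed check
    float minX = trace.front().x, maxX = minX;
    float minY = trace.front().y, maxY = minY;
    double pathLength = 0.0;
    for (std::size_t i = 1; i < trace.size(); ++i) {
        const TimedPoint& a = trace[i - 1];
        const TimedPoint& b = trace[i];
        minX = std::min(minX, b.x);
        maxX = std::max(maxX, b.x);
        minY = std::min(minY, b.y);
        maxY = std::max(maxY, b.y);
        pathLength += std::hypot(b.x - a.x, b.y - a.y);
    }
    if (std::max(maxX - minX, maxY - minY) < lim.minExtent) return false;

    // Speed check: average speed along the drawn path
    return pathLength / duration >= lim.minAvgSpeed;
}
```

Only traces that pass all three checks would be resized and sent to the SVM; as noted above, this filters out obviously incomplete strokes but cannot tell a sloppy or untrained shape from a real spell.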

That said, after a fair bit of practice with the wand, you can easily end up with a well-working character recognition system that happily performs the right spells.

That’s it for the Computer Vision part! If you are interested in learning about the other components of the project too, you can check out the Instructable here: http://www.instructables.com/id/Tech-Magic-Home-Automation-Using-Interactive-Wand-/

Huge thanks to Satya for his detailed tutorials, which helped me understand complex computer vision and machine learning concepts easily.
