In today’s information age, we’re constantly bombarded with questions. Whether it’s researching a historical event, troubleshooting a tech issue, or simply satisfying our curiosity, finding the right answer can feel like a never-ending quest. Retrieval-Augmented Generation (RAG) systems are powerful tools designed to help us navigate this information overload. We already know how useful LLMs can be in many scenarios, but they have their limitations. Combining RAG with an LLM addresses some of these limitations and makes it possible to tackle complex factual queries.

This article takes you on a journey into the exciting realm of RAG systems. We’ll start by looking at some of the limitations of large language models (LLMs) and how RAG helps address them. We’ll then build our own RAG system using LangChain, a user-friendly framework. Next, we’ll construct question-and-answer (Q&A) chains within the RAG system, allowing for a more nuanced and interactive dialogue. Finally, we’ll introduce the concept of memory to create more functional chatbots. By the end of this article, you’ll be equipped to build a powerful and dynamic LLM solution that leverages the strengths of both pre-trained models and up-to-date knowledge sources. So, buckle up and get ready to unlock the potential of these intelligent information retrieval systems!

Limitations of Large Language Models

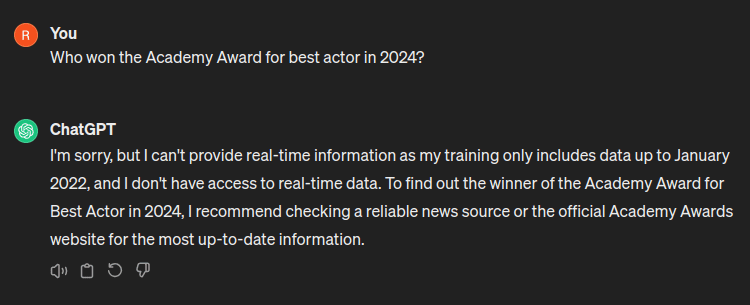

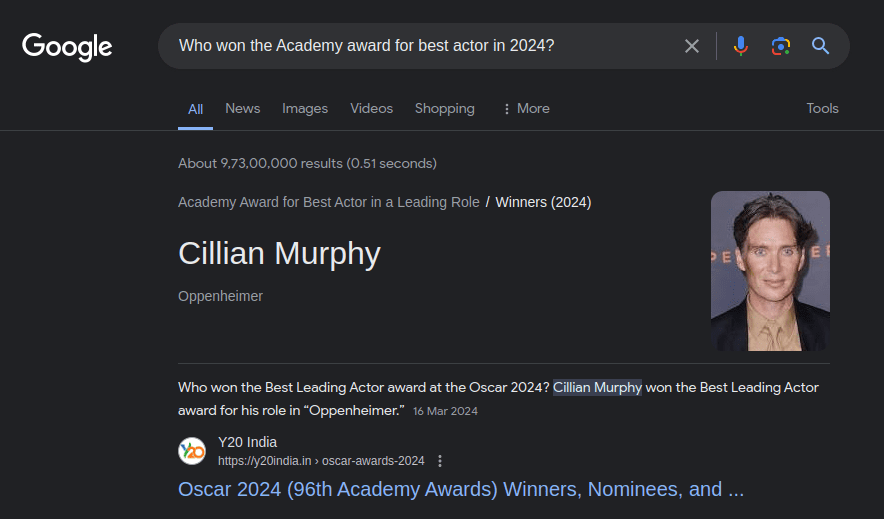

The usefulness of ChatGPT, or any other publicly available LLM, can be restricted in certain business scenarios beyond code generation. The limitation arises from two factors: the fixed training data the model relies on and its tendency to fabricate information, also known as hallucination. Imagine you’re a customer service representative, and a client asks about a new policy update implemented in February 2024. Since most LLM training datasets were cut off before this date, the model wouldn’t have access to this specific information. In this scenario, an LLM might provide an outdated response or, even worse, fabricate details about the new policy, potentially leading to customer confusion and frustration. At the time of writing, if you ask ChatGPT about events that occurred after January 2022, it will probably give a response like this:

Now, this is certainly not helpful. So, what can we do about it?

- Train or fine-tune your model: Fine-tuning or training a large language model is a daunting task. Not only are the computational resources expensive but the sheer effort required to curate and prepare the massive datasets needed can also be impractical for many applications. To understand more about fine-tuning, check out our article on Fine-Tuning LLMs.

- Use Retrieval-Augmented Generation (RAG): By providing access to an up-to-date knowledge base, RAG makes it significantly cheaper and easier to give a model access to new information than traditional methods like training from scratch or fine-tuning. In this article, we’ll explore how to leverage RAG with language models from Hugging Face. We will put the model to the test by conducting a short analysis of its ability to answer questions about the recent Academy Awards (2024) using a Wikipedia knowledge base.

Libraries & Pre-requisites

The framework used for RAG is LangChain, an open-source framework that provides APIs for building applications on top of large language models. We also leverage the sentence-transformers and transformers libraries from Hugging Face.

The commands below install everything we need for this tutorial. The code was written in Google Colab, so the commands use the %pip notebook magic.

%pip install --upgrade --quiet langchain langchain-community langchainhub

%pip install accelerate bitsandbytes faiss-cpu pypdf sentence-transformers

Pre-Processing

Preprocessing a PDF document is straightforward. We’ll leverage LangChain’s PyPDFLoader() class to load the document. This class automatically converts the PDF into a format suitable for LangChain’s downstream tasks. Explore LangChain’s other document loaders for working with different file types.

The next step is to divide the document into smaller chunks. This facilitates efficient retrieval of query-specific text. We’ll use the RecursiveCharacterTextSplitter() class for this purpose. This class recursively splits the document using delimiters like double newline (“\n\n”), single newline (“\n”), space (“ ”), and finally, individual characters, until the chunks reach a specified size (measured in characters). We will modify these delimiters to respect sentence boundaries by adding a period (“.”) as a delimiter after the double newline (“\n\n”) delimiter. In question-answering applications, maintaining some overlap between consecutive chunks is crucial for preserving context. You can achieve this using the chunk_overlap parameter, which defines the number of characters shared between consecutive chunks.

The pre-processing code is outlined below.

from langchain_community.document_loaders import PyPDFLoader

from langchain_text_splitters import RecursiveCharacterTextSplitter

loader = PyPDFLoader("location-of-your-file")

data = loader.load()

text_splitter = RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=100, separators=[

"\n\n",

".",

"\n",

" ",

"",

])

all_splits = text_splitter.split_documents(data)
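To make chunk_size and chunk_overlap concrete, here is a minimal sketch in plain Python. It is not how RecursiveCharacterTextSplitter works internally, since it ignores separators entirely; the helper name sliding_chunks and the toy values are assumptions made purely for illustration.

```python
def sliding_chunks(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Cut text into fixed-size windows that share chunk_overlap characters.

    Hypothetical helper for illustration only; LangChain's splitter also
    honours separators, which this sketch does not.
    """
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks


text = "abcdefghijklmnopqrstuvwxyz"
chunks = sliding_chunks(text, chunk_size=10, chunk_overlap=4)
for chunk in chunks:
    print(chunk)
```

Each chunk starts with the last four characters of the previous one, so a sentence that straddles a boundary still appears whole in at least one chunk when the overlap is large enough.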

Sentence Embeddings and Vector Store

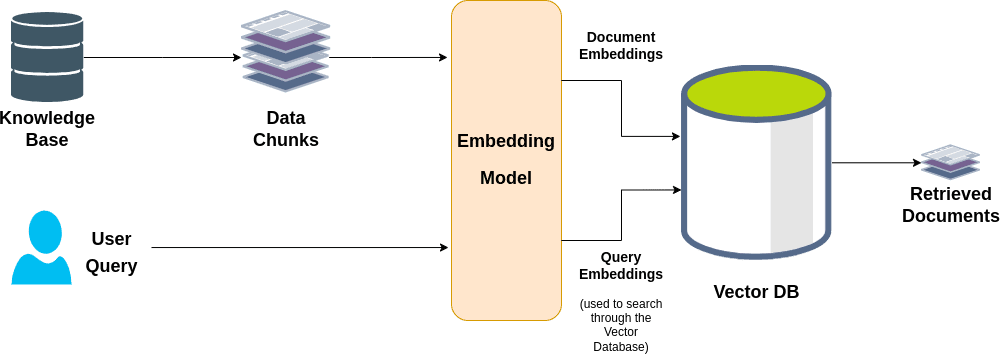

Before diving into the technical aspects of building an RAG system, let’s explore the concept of sentence embeddings. This is crucial for understanding how RAG methods function.

In essence, large language models don’t operate on text directly; they operate on numerical representations. Sentence embeddings play a vital role here by converting text into vectors of numbers. These embeddings are typically produced by the dense layers of a deep neural network, with the specific architecture varying from model to model.

Simply put, sentence embeddings are numerical representations of sentences that capture their semantic meaning. We can obtain these embeddings from pre-trained models available through Hugging Face’s sentence transformers library.
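One way to see what "capturing semantic meaning" buys us is to compare vectors by the angle between them. The sketch below uses made-up three-dimensional vectors (real sentence embeddings have hundreds of dimensions) and cosine similarity, which is one common choice of metric; a given vector store may use a different one, such as Euclidean distance. All names and values here are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy "embeddings": invented numbers, not produced by any real model.
query = [1.0, 0.0, 1.0]
docs = {
    "oscars": [0.9, 0.1, 0.8],
    "football": [0.0, 1.0, 0.1],
    "cinema": [0.7, 0.3, 0.9],
}

# Rank documents from most to least similar to the query.
ranked = sorted(docs, key=lambda name: cosine_similarity(query, docs[name]), reverse=True)
print(ranked)
```

With these toy values, the two film-related vectors rank above the unrelated one, which is the behaviour a retriever relies on.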

One of the most common ways to store and search over unstructured data is to embed it and store the resulting embedding vectors. At query time, the query is embedded in the same way, and the stored vectors that are ‘most similar’ to the embedded query are retrieved. A vector store takes care of storing the embedded data and performing the vector search for you. We will use the HuggingFaceEmbeddings() class to load the all-MiniLM-l6-v2 model, which creates 384-dimensional embeddings, and FAISS as the vector store.

from langchain_community.embeddings import HuggingFaceEmbeddings

from langchain_community.vectorstores import FAISS

# Path to the pre-trained model you want to use

modelPath = "sentence-transformers/all-MiniLM-l6-v2"

# Create a dictionary specifying CPU as model configuration for computations

model_kwargs = {'device':'cpu'}

# Create a dictionary with encoding options

encode_kwargs = {'normalize_embeddings': False}

# Initialize an instance of HuggingFaceEmbeddings with the specified parameters

hf = HuggingFaceEmbeddings(

model_name=modelPath, # Provide the pre-trained model's path

model_kwargs=model_kwargs, # Pass the model configuration options

encode_kwargs=encode_kwargs # Pass the encoding options

)

# Creating the vector store

vectorstore = FAISS.from_documents(all_splits, hf)

# Persist the vectors locally on disk

vectorstore.save_local("directory-path-for-storing-the-vectors")

# Load from local storage

# vectorstore = FAISS.load_local("directory-path-for-storing-the-vectors", hf, allow_dangerous_deserialization=True) # allow_dangerous_deserialization opts in to pickle-based loading; only enable it for index files you created yourself or trust

This vector store will be used to create a retriever. A retriever is an interface that returns documents based on an unstructured query. In essence, a vector store retriever acts as an intermediary between your application and the vector store. It simplifies the process of retrieving documents by leveraging the vector store’s built-in search functionalities. These functionalities include similarity search and Maximal Marginal Relevance (MMR), allowing the retriever to efficiently find the documents most relevant to your query.

# Creating retriever from the vectorstore with a search configuration where it retrieves up to 4 relevant splits/documents.

retriever = vectorstore.as_retriever(search_kwargs={"k": 4})
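The difference between plain similarity search and MMR can be sketched in a few lines of plain Python. This is a simplified illustration under assumed toy vectors, not the vector store's actual implementation: MMR repeatedly picks the candidate that is relevant to the query but not too similar to what has already been selected. The function names and the lambda_mult weighting of 0.5 are assumptions for this example.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mmr(query: list[float], doc_vecs: list[list[float]], k: int, lambda_mult: float = 0.5) -> list[int]:
    """Return indices of k documents, trading relevance against redundancy."""
    selected: list[int] = []
    candidates = list(range(len(doc_vecs)))
    while candidates and len(selected) < k:
        def score(i: int) -> float:
            relevance = cosine(query, doc_vecs[i])
            # Similarity to the closest already-selected document (0 if none yet).
            redundancy = max((cosine(doc_vecs[i], doc_vecs[j]) for j in selected), default=0.0)
            return lambda_mult * relevance - (1 - lambda_mult) * redundancy
        best = max(candidates, key=score)
        selected.append(best)
        candidates.remove(best)
    return selected


# Toy vectors: documents 0 and 1 are near-duplicates, document 2 is different.
query = [1.0, 1.0]
docs = [[1.0, 0.9], [1.0, 0.8], [0.2, 1.0]]

top_k = sorted(range(len(docs)), key=lambda i: cosine(query, docs[i]), reverse=True)[:2]
mmr_pick = mmr(query, docs, k=2)
print(top_k, mmr_pick)
```

Plain similarity search returns the two near-duplicates, while the MMR sketch keeps the best match and then prefers the more distinct document. This can help when a knowledge base contains many repetitive passages.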

We can use the retriever to get relevant documents for a given query as shown below:

docs = retriever.get_relevant_documents("Who won the Academy award for best actor in 2023?")

for doc in docs:

    print(doc)

''' Output:

page_content='. Retrieved March 14, 2024.\n"Will Smith isn\'t the only actor to cause consternation at the world\'s most coveted film\nawards."\nStarkey, Adam (January 23, 2023). "Who has won the most Oscars?" (https://www.nme.c\nom/news/film/who-won-most-oscars-3382332). NME. Archived (https://web.archive.org/w\neb/20230124013232/https://www.nme.com/news/film/who-won-most-oscars-3382332)\nfrom the original on January 24, 2023. Retrieved March 14, 2024' metadata={'source': '/content/drive/MyDrive/RAG/Academy_Awards.pdf', 'page': 33}

page_content='.[9][10]\nThe first Best Actor awarded was Emil Jannings, for\nhis performances in The Last Command and The\nWay of All Flesh. He had to return to Europe before\nthe ceremony, so the Academy agreed to give him\nthe prize earlier; this made him the first Academy\nAward winner in history' metadata={'source': '/content/drive/MyDrive/RAG/Academy_Awards.pdf', 'page': 1}

page_content='. Retrieved March 14,\n2024. "And are the Oscars, given out by Hollywood\'s most prestigious professional\nassociation, the biggest prize in the world — or just in America?"' metadata={'source': '/content/drive/MyDrive/RAG/Academy_Awards.pdf', 'page': 32}

page_content='Best in films in 2023\n \nAward Best Actor Best Actress\nWinner Cillian Murphy\n(Oppenheimer)Emma Stone\n(Poor Things)\n \nAward Best Supporting\nActorBest Supporting\nActress\nWinnerRobert Downey Jr' metadata={'source': '/content/drive/MyDrive/RAG/Academy_Awards.pdf', 'page': 1} '''

You can try different queries and see the retrieved documents for yourself to better understand the capabilities of this approach.

The entire process is summarized in the diagram below:

Loading LLM with HuggingFace

Next, we will load an LLM and see how our RAG system can enhance its capabilities. We’ll begin by importing the relevant libraries. To understand how an LLM works, you can check out our previous article on Deciphering LLMs.

from langchain_community.llms.huggingface_pipeline import HuggingFacePipeline

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

from transformers import BitsAndBytesConfig

First, we need to load the model. You’ll notice two flags in the from_pretrained call:

- device_map, set to "auto" here, ensures the model is placed on your available GPU(s)

- load_in_8bit applies 8-bit dynamic quantization to massively reduce the resource requirements.
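For a rough intuition of what 8-bit quantization means, the sketch below maps a handful of floating-point weights to integers in the range -127 to 127 and back. This is a simplified absmax scheme chosen for illustration; the library that backs load_in_8bit uses a more elaborate method, and the function names and weights here are made up.

```python
def quantize_absmax(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to 8-bit integers using a single scale factor."""
    # Fall back to 1.0 so an all-zero input does not divide by zero.
    scale = (max(abs(w) for w in weights) or 1.0) / 127
    return [round(w / scale) for w in weights], scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float values from the 8-bit integers."""
    return [v * scale for v in quantized]


weights = [0.12, -0.5, 0.33, 1.27, -1.0]  # invented example values
q, scale = quantize_absmax(weights)
restored = dequantize(q, scale)

print(q)
print(max(abs(a - b) for a, b in zip(weights, restored)))  # worst-case rounding error
```

Storing one byte per weight instead of two (float16) or four (float32) is where the memory savings come from, at the cost of a small rounding error per weight.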

Next, you need to preprocess your text input with a tokenizer.

model_id = "pankajmathur/orca_mini_3b"

tokenizer = AutoTokenizer.from_pretrained(model_id)

model = AutoModelForCausalLM.from_pretrained(

model_id,

device_map="auto",

load_in_8bit=True

)

pipe = pipeline("text-generation",

model=model,

tokenizer=tokenizer,

max_new_tokens=1024

)

llm = HuggingFacePipeline(pipeline=pipe)

The pipeline() function makes it simple to use any model from the Hub for inference on language, computer vision, speech, and multimodal tasks. Even if you don’t have experience with a specific modality or aren’t familiar with the underlying code behind the models, you can still use them for inference with pipeline(). We’ll leverage it here to keep the inference process simple, and wrap the resulting pipeline in LangChain’s HuggingFacePipeline so it can be used as the LLM in our chains.

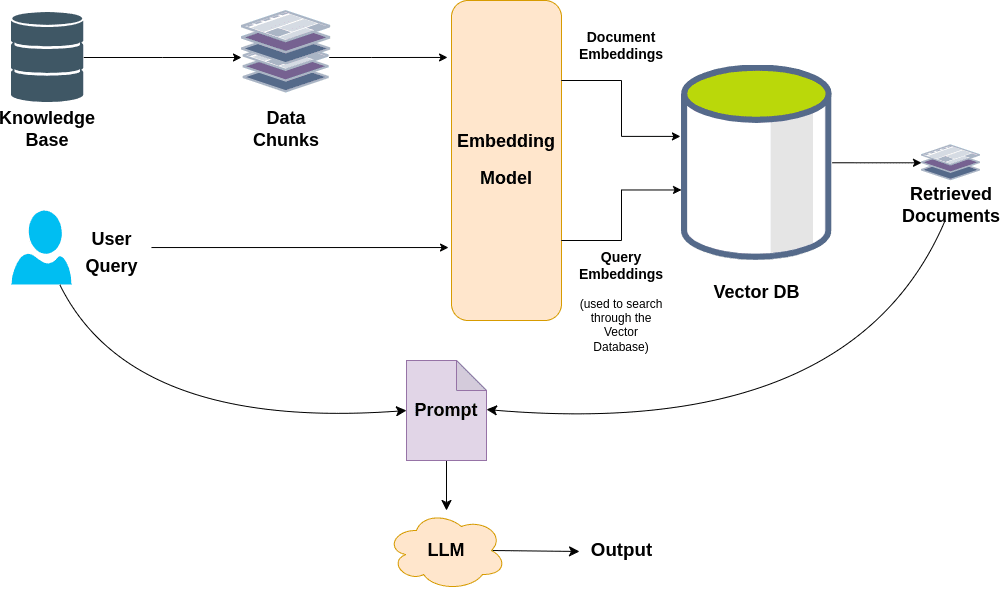

Retrieval QA Chain

Now, let’s explore how we can leverage those retrieved snippets (from the vector store) to improve our LLM’s response. We may also need to compress the relevant splits to fit into the LLM context. Finally, we send these splits along with a prompt and user query to the language model to get the answer.

We will create our question-answering chain using the RetrievalQA class from LangChain. We will pass our language model, retriever, and RAG prompt to create a chain that is backed by a retrieval step for answering our questions. The prompt template has instructions about how to use the context. We will use the standard template available on LangChain for retrieval-based question answering. You can create your own prompt using the PromptTemplate class from LangChain.

from langchain.chains import RetrievalQA

from langchain import hub

# Loads the latest version of the RAG prompt

prompt = hub.pull("rlm/rag-prompt", api_url="https://api.hub.langchain.com")

qa_chain = RetrievalQA.from_chain_type(

llm, retriever=retriever, chain_type_kwargs={"prompt": prompt}, chain_type="stuff"

)

We use the “stuff” method here, in which all retrieved document chunks are fed into a single call to the language model. However, this approach becomes impractical when the retrieved documents exceed the model’s context window. To address this challenge, several alternative methods exist, including MapReduce, Refine, and MapRerank. We don’t need these methods here, so we will go ahead with the “stuff” method and see how RAG gives the LLM access to up-to-date information.
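Conceptually, "stuffing" is just string assembly: the retrieved chunks are joined and placed into the prompt together with the question. The sketch below illustrates that idea in plain Python. The template wording is copied from the prompt visible in this article's chain output; the helper name stuff_prompt and the sample chunks are assumptions, and the real chain does more than this.

```python
RAG_TEMPLATE = (
    "You are an assistant for question-answering tasks. "
    "Use the following pieces of retrieved context to answer the question. "
    "If you don't know the answer, just say that you don't know. "
    "Use three sentences maximum and keep the answer concise.\n"
    "Question: {question}\n"
    "Context: {context}\n"
    "Answer:"
)


def stuff_prompt(question: str, chunks: list[str]) -> str:
    """Join every retrieved chunk into one context block (hypothetical helper)."""
    context = "\n\n".join(chunks)
    return RAG_TEMPLATE.format(question=question, context=context)


# Shortened stand-ins for the chunks a retriever might return.
chunks = [
    "The first Best Actor awarded was Emil Jannings.",
    "Best in films in 2023: Best Actor, Cillian Murphy (Oppenheimer).",
]
prompt = stuff_prompt("Who won the Academy award for best actor in 2024?", chunks)
print(prompt)
```

Because every chunk ends up in one prompt, the total length grows with the number and size of retrieved chunks, which is why this approach is bounded by the model's context window.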

Let’s start by asking the question directly to our LLM without using the RAG system.

llm.invoke('Who won the Academy award for best actor in 2024?')

''' Output:

Who won the Academy award for best actor in 2024?

Answer: Will Smith won the Academy award for best actor in 2024.'''

You can do a quick search online to see that this is not true.

Now let’s explore how RAG with LLMs helps us find the most relevant information from our Wikipedia knowledge base to empower better question-answering.

question = "Who won the Academy award for best actor in 2024?"

result = qa_chain.run({"query": question})

print(result)

''' Output:

Human: You are an assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If you don't know the answer, just say that you don't know. Use three sentences maximum and keep the answer concise.

Question: Who won the Academy award for best actor in 2024?

Context: .[9][10]

The first Best Actor awarded was Emil Jannings, for

his performances in The Last Command and The

Way of All Flesh. He had to return to Europe before

the ceremony, so the Academy agreed to give him

the prize earlier; this made him the first Academy

Award winner in history

. Retrieved March 14,

2024. "And are the Oscars, given out by Hollywood's most prestigious professional

association, the biggest prize in the world — or just in America?"

. Retrieved March 14, 2024.

"Will Smith isn't the only actor to cause consternation at the world's most coveted film

awards."

Starkey, Adam (January 23, 2023). "Who has won the most Oscars?" (https://www.nme.c

om/news/film/who-won-most-oscars-3382332). NME. Archived (https://web.archive.org/w

eb/20230124013232/https://www.nme.com/news/film/who-won-most-oscars-3382332)

from the original on January 24, 2023. Retrieved March 14, 2024

Best in films in 2023

Award Best Actor Best Actress

Winner Cillian Murphy

(Oppenheimer)Emma Stone

(Poor Things)

Award Best Supporting

ActorBest Supporting

Actress

WinnerRobert Downey Jr

Answer: Cillian Murphy won the Best Actor award in 2024, and Emma Stone won the Best Actress award. '''

Great! This is indeed the information we were looking for. You can play around with the questions and see how our RAG system performs for yourself.

Notice, however, that the RAG system we built has no concept of memory. Without knowledge of the previous conversation, it cannot answer follow-up questions. You can check this by asking follow-up questions based on the previous responses. In the next section, we will show you how to add memory to create a functional chatbot.

Question Answering like a ChatBot

This is similar to what we created in the previous section, but with the additional concept of chat history, which allows the system to answer follow-up questions asked by the user. We will work with ConversationBufferMemory. It keeps a buffer of the chat messages exchanged so far and passes it along with the question to the chatbot every time. We will set memory_key to “chat_history” and return_messages to True. When return_messages is True, the chat history is returned as a list of messages rather than as a single string.

Next, we will create a ConversationalRetrievalChain, which adds a step on top of the RetrievalQA chain. Besides adding memory, it introduces a step that takes the history and the new question and condenses them into a standalone question. This is done by building a prompt from the chat history and the new question and passing it to the LLM. The standalone question is then used to look up relevant documents in the vector store and answer the question.

from langchain.chains import ConversationalRetrievalChain

from langchain.memory import ConversationBufferMemory

memory = ConversationBufferMemory(

memory_key="chat_history",

return_messages=True

)

chat_qa = ConversationalRetrievalChain.from_llm(

llm,

retriever=retriever,

memory=memory

)
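The two ideas at work here, a message buffer and a question-condensing prompt, can be sketched without any framework. The code below is only an illustration under stated assumptions: the BufferMemory class and condense_prompt function are hypothetical stand-ins, not LangChain's classes, and the template wording is taken from the condensing prompt visible in this article's output.

```python
CONDENSE_TEMPLATE = (
    "Given the following conversation and a follow up question, rephrase the "
    "follow up question to be a standalone question, in its original language.\n\n"
    "Chat History:\n{chat_history}\n"
    "Follow Up Input: {question}\n"
    "Standalone question:"
)


class BufferMemory:
    """Keeps every exchanged message in order (hypothetical, for illustration)."""

    def __init__(self) -> None:
        self.messages: list[tuple[str, str]] = []

    def save(self, human: str, assistant: str) -> None:
        self.messages.append(("Human", human))
        self.messages.append(("Assistant", assistant))

    def as_text(self) -> str:
        return "\n".join(f"{role}: {text}" for role, text in self.messages)


def condense_prompt(memory: BufferMemory, question: str) -> str:
    """Build the prompt that asks the LLM for a standalone question."""
    return CONDENSE_TEMPLATE.format(chat_history=memory.as_text(), question=question)


memory = BufferMemory()
memory.save(
    "Who won the Academy Award for best actor in 2024?",
    "Cillian Murphy won the Academy Award for best actor in 2024.",
)
prompt = condense_prompt(memory, "For which film did he win the award?")
print(prompt)
```

The LLM's completion of this prompt becomes the query sent to the retriever, so the quality of the rephrasing directly affects which documents are found.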

Now, let’s ask some questions and see what happens.

question = "Who won the Academy Award for best actor in 2024?"

result = chat_qa({"question": question})

print(result['answer'])

''' Output:

Use the following pieces of context to answer the question at the end. If you don't know the answer, just say that you don't know, don't try to make up an answer.

.[9][10]

The first Best Actor awarded was Emil Jannings, for

his performances in The Last Command and The

Way of All Flesh. He had to return to Europe before

the ceremony, so the Academy agreed to give him

the prize earlier; this made him the first Academy

Award winner in history

. Retrieved March 14,

2024. "And are the Oscars, given out by Hollywood's most prestigious professional

association, the biggest prize in the world — or just in America?"

. Retrieved March 14, 2024.

"Will Smith isn't the only actor to cause consternation at the world's most coveted film

awards."

Starkey, Adam (January 23, 2023). "Who has won the most Oscars?" (https://www.nme.c

om/news/film/who-won-most-oscars-3382332). NME. Archived (https://web.archive.org/w

eb/20230124013232/https://www.nme.com/news/film/who-won-most-oscars-3382332)

from the original on January 24, 2023. Retrieved March 14, 2024

Best in films in 2023

Award Best Actor Best Actress

Winner Cillian Murphy

(Oppenheimer)Emma Stone

(Poor Things)

Award Best Supporting

ActorBest Supporting

Actress

WinnerRobert Downey Jr

Question: Who won the Academy Award for best actor in 2024?

Helpful Answer: Cillian Murphy won the Academy Award for best actor in 2024.'''

Let’s look at the follow-up question.

question = "For which film did he win the award?"

result = chat_qa({"question": question})

print(result['answer'])

''' Output:

Use the following pieces of context to answer the question at the end. If you don't know the answer, just say that you don't know, don't try to make up an answer.

.[9][10]

The first Best Actor awarded was Emil Jannings, for

his performances in The Last Command and The

Way of All Flesh. He had to return to Europe before

the ceremony, so the Academy agreed to give him

the prize earlier; this made him the first Academy

Award winner in history

. Retrieved March 14, 2024.

"Will Smith isn't the only actor to cause consternation at the world's most coveted film

awards."

Starkey, Adam (January 23, 2023). "Who has won the most Oscars?" (https://www.nme.c

om/news/film/who-won-most-oscars-3382332). NME. Archived (https://web.archive.org/w

eb/20230124013232/https://www.nme.com/news/film/who-won-most-oscars-3382332)

from the original on January 24, 2023. Retrieved March 14, 2024

. Retrieved March 14,

2024. "And are the Oscars, given out by Hollywood's most prestigious professional

association, the biggest prize in the world — or just in America?"

.bbc.co.uk/newsround/25761294) from the original on

January 20, 2014. Retrieved April 5, 2022. "The Oscars are thought to be the most

prestigious film awards in the world."

Whipp, Glenn (January 9, 2023). "Awards show power rankings, from worst to first" (http

s://www.latimes.com/entertainment-arts/awards/story/2023-01-09/ranking-awards-shows-

oscars-golden-globes). Los Angeles Times. ISSN 0458-3035 (https://www.worldcat.org/is

sn/0458-3035). Archived (https://web.archive

Question: Given the following conversation and a follow up question, rephrase the follow up question to be a standalone question, in its original language.

Chat History:

Human: Who won the Academy Award for best actor in 2024?

Assistant: Use the following pieces of context to answer the question at the end. If you don't know the answer, just say that you don't know, don't try to make up an answer.

.[9][10]

The first Best Actor awarded was Emil Jannings, for

his performances in The Last Command and The

Way of All Flesh. He had to return to Europe before

the ceremony, so the Academy agreed to give him

the prize earlier; this made him the first Academy

Award winner in history

. Retrieved March 14,

2024. "And are the Oscars, given out by Hollywood's most prestigious professional

association, the biggest prize in the world — or just in America?"

. Retrieved March 14, 2024.

"Will Smith isn't the only actor to cause consternation at the world's most coveted film

awards."

Starkey, Adam (January 23, 2023). "Who has won the most Oscars?" (https://www.nme.c

om/news/film/who-won-most-oscars-3382332). NME. Archived (https://web.archive.org/w

eb/20230124013232/https://www.nme.com/news/film/who-won-most-oscars-3382332)

from the original on January 24, 2023. Retrieved March 14, 2024

Best in films in 2023

Award Best Actor Best Actress

Winner Cillian Murphy

(Oppenheimer)Emma Stone

(Poor Things)

Award Best Supporting

ActorBest Supporting

Actress

WinnerRobert Downey Jr

Question: Who won the Academy Award for best actor in 2024?

Helpful Answer: Cillian Murphy won the Academy Award for best actor in 2024.

Follow Up Input: For which film did he win the award?

Standalone question: Who won the Academy Award for best actor in 2024?

Helpful Answer: Cillian Murphy won the Academy Award for best actor in 2024.'''

The results also show the prompts that were used. Although the answer is not correct, we can see that our system remembers the previous conversation. Looking at the standalone question, however, the LLM did a poor job of rephrasing the follow-up: it simply repeated the first question. To improve on this, we can try a better LLM or change the prompt used for generating the standalone question. You can play around with the prompt and the LLM to see what happens.
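The outputs above also show that the generated text echoes the whole prompt, and that this echoed text is what ends up in the chat history. One possible mitigation is to keep only the text after the final answer marker before displaying or storing it. The sketch below assumes the answer always follows the last "Helpful Answer:" marker, as it does in the outputs shown here; extract_answer is a hypothetical helper, not part of LangChain.

```python
def extract_answer(generated: str, marker: str = "Helpful Answer:") -> str:
    """Return the text after the last marker, or the whole text if it is absent."""
    _, found, tail = generated.rpartition(marker)
    return tail.strip() if found else generated.strip()


# Shortened stand-in for the raw text returned by the chain.
raw = (
    "Use the following pieces of context to answer the question at the end.\n"
    "Best in films in 2023 ... Winner Cillian Murphy (Oppenheimer)\n"
    "Question: Who won the Academy Award for best actor in 2024?\n"
    "Helpful Answer: Cillian Murphy won the Academy Award for best actor in 2024."
)
print(extract_answer(raw))
```

Storing only the cleaned answer would keep the chat history short, which in turn gives the condensing step a much simpler conversation to rephrase.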

While powerful, a system like this can be limited by its simple retrieval step. A basic RAG system retrieves chunks purely by their similarity to the user’s query and might pull a lot of irrelevant information from the knowledge base (Wikipedia in this case), as the citation fragments in the outputs above show. It might also miss crucial details, making the LLM’s job harder. Luckily, there are ways to improve this, and using ReAct agents is one of them. We’ll be diving deeper into these approaches in future articles!

Conclusion

In this article, we delved into the limitations of large language models and explored solutions to overcome them. We then built an RAG system from the ground up. This involved loading data, splitting it into manageable chunks, storing it in a vector database, and finally crafting a retrieval system that gets relevant documents for specific user queries. We then pushed further by integrating an LLM with our RAG system, significantly enhancing its capabilities. Finally, to transform our retrieval-based system into a better conversational partner, we introduced the concept of memory, allowing it to work as a chatbot.
