If you are unfamiliar with safety compliance and are debating whether or not to read this blog post, let’s answer the most important question first.

- Why should you read this blog post?

- What can we learn from the first ever self driving car

- Why safety standards in software?

- Introduction to MISRA and AUTOSAR standards

- Building AUTOSAR compliant deep learning inference application with TensorRT

- Verifying AUTOSAR compliance with PCLint

- Where to go from here?

- Lessons for startups and CTOs

- Summary

- Resources

1. Why should you read this blog post?

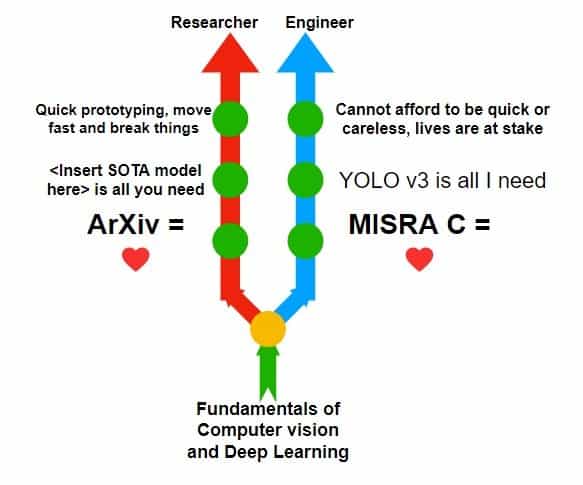

Most online courses and tutorials teach you the details of computer vision algorithms but not how to apply them in the industry. We all started our journey into deep learning by training LeNet on the MNIST dataset and watching in joy as the loss started going down. However, developing cool products like robots, autonomous drones or self-driving cars requires a much wider set of skills than just creating and training neural networks in a framework of your choice. Most content about computer vision and machine learning is focused on getting you up to speed with algorithms but doesn’t address the quantum leap in skill set required to create working products. The much discussed lack of AI talent in the industry worldwide is at least partly a lack of engineers who can build real products and not just proofs of concept. Memetically, the situation is like this:

Figure 1. State Of The AI meme

In this series of posts, we are trying to address this knowledge gap by introducing several aspects to keep in mind when designing a product that will be used by millions of people. With this context in mind, the goal of this blog post is to help you land a job in industry. If you would like to apply your AI chops to self-driving cars, autonomous drones or industrial robots, this post is for you.

Figure 2. AI Researcher v/s Engineer

If you are a regular reader of LearnOpenCV, you have likely developed a deep understanding of the foundations of computer vision. Armed with this knowledge, you can decide to pursue one of the two paths in your career – researcher or engineer. Figure 2 shows a brief summary of the mindset or key concerns these two job roles have. This is a huge simplification and nothing stops you from acquiring skills to be both, but if you are just getting started in your career, this could be a useful blueprint to start with.

2. What can we learn from the first ever self driving car

In the last part of this series, we introduced the basics of industrial computer vision and explained the differences between a proof-of-concept and a product. In this second part, we will go deeper and:

- Understand the importance of safety standards using case studies.

- Introduce the MISRA standard and understand some specifics of MISRA C++.

- Create a TensorRT application in C++ compliant with MISRA standard.

- Understand how to verify MISRA compliance with an industry standard tool.

- Introduce functional safety standards, and

- Provide two specific pieces of advice to startups with a case study of the drone delivery industry.

Before we dive into these topics, let’s set the stage with a bit of historical context. In 2011, Marc Andreessen, the influential co-founder of the venture capital firm Andreessen Horowitz, made an important observation. He stated that more and more industries were being run on software and that, quite simply, ‘software is eating the world’. Astute readers will note that this was about a year before Hinton et al.’s paper on convolutional neural networks shocked the AI community and kickstarted the modern AI revolution as we know it.

In the previous post, we dived deep into the differences between proofs of concept and a product. In the context of that discussion, we can see that after the rise of deep learning, several ideas which used to be POCs were now mature enough to become real products and that deep learning has only accelerated the pace at which software is eating the world.

Figure 3. Photo of Stanley self driving car with many sensors

Let’s look at a prominent example. In 2005, Stanford University’s ‘Stanley’ autonomous vehicle successfully completed the long 212 km (132 miles) journey and famously won the DARPA Grand Challenge. Stanley was the first autonomous vehicle to ever fulfill the challenge posed by DARPA and relied heavily on the then state-of-the-art computer vision algorithms for perception and navigation. This was a full seven years before Hinton’s paper. Yet, while Stanley absolutely deserves its spot at the National Museum of American History (where it is currently located), for all its successes, it was a tech demo.

Autonomous driving, as a serious commercial endeavor, could only be realized after deep neural networks made it practical. We have been using, and will continue to use, autonomous driving as the example in this blog post, but the arguments apply to other fields such as autonomous drones, smart agriculture, wheeled delivery robots, warehouse robots, etc.

This progress, however, has brought its own set of questions and challenges which most AI tutorials fail to even mention. While a one-off demo like Stanley can certainly prove that self driving is possible, here are some additional engineering considerations when converting such POCs to real products:

- How to make sure that the software running on the robot is bug-free?

- How to make sure that the hardware of the robot is robust and bug-free?

- How to make sure that large teams building complex intelligent robots can debug and maintain the code many years after it is written?

Why focus on safety and being bug free? It’s because bugs in industrial products can cause huge financial losses and in extreme cases can even put human lives at stake. Therefore, industries have evolved certain best practices to reduce the possibility of unforeseen, unintended bugs, commonly known as safety standards.

3. Why safety standards in software?

Anybody who has written more than a few lines of code will relate to the fact that bugs can creep into your code quite easily. This is not a problem when writing code for practice and learning. However, when software is deployed into a real robot, the stakes get much higher. The more complex software gets, the more difficult it becomes to eliminate bugs and ensure safe working of the robot. This is not a problem unique to computer vision and AI programs. Ever since software began ‘eating the world’ in Marc Andreessen’s words, industries have established well known and time tested best practices in the form of coding guidelines to make sure that bugs are not introduced inadvertently. Standards ensure the code looks about the same no matter who writes it or when. This makes it easier to identify bugs and avoid jeopardizing users’ safety. We will go into details of the MISRA coding standard, but before we do that, let’s imagine a world without any standards.

Suppose a team of 100 people is working on developing the software for a self-driving car. The car is equipped with a bunch of cameras, LIDARs and Radars. A complex perception module containing many neural networks uses information from all these sensors to perceive the environment and finally outputs two numbers to steer the vehicle – the speed (or throttle) and direction (or yaw). Suppose these engineers spend six months developing a mammoth perception system containing hundreds of thousands of lines of C or C++ code related to hardware drivers, neural networks, and task schedulers. The team has not followed any industry standards and every programmer has written code in their own style. However, they have used unit tests very well and managed to iron out bugs from every submodule of the perception stack. At the end of the development process they hook everything up, only to find that the outputs of throttle and yaw are incorrect due to some bugs somewhere within the codebase. So, now they have a huge C++ codebase of several hundred thousand lines of code to debug and only two numbers (throttle and yaw) in the output to give them any feedback as to whether or not they are making progress.

What went wrong? Didn’t they debug every submodule independently with unit tests? How could they end up here? In other words, what factors led to unidentified bugs in this project? Here is a non-exhaustive list:

Unit tests can lie: ‘Pure software’ industries like web development or app development can sometimes ensure 100% bug-free codebases with well designed unit tests alone. This drastically changes when software and hardware interact, like in a robot or car. When software interacts with multiple pieces of hardware and multiple other pieces of software, it is usually not possible to write unit tests to verify all inputs that a module may receive. There may be subtle bugs that occur once in a million or once in 10 million or even once in a billion times. These are usually very hard to even reproduce, much less write unit tests for. When human life is at stake, even bugs that occur once in a billion times can be quite deadly, as we will see later with a case study.

Figure 4. Two of the four reasons why programming standards are necessary while developing safety critical products

Programming variability among people: Every programmer has their own style of writing code. If everyone writes C++ in their own style, others may find it very difficult to understand and follow the code. It is a common joke that a programmer doesn’t understand the code they wrote last Friday on the next Monday. Imagine, then, trying to understand the code written by another person in their idiosyncratic style years after it was written.

Large teams and long time: What if someone from the team leaves the company during the course of the project? Who can take the responsibility of checking and debugging their code? If nobody understands their code well enough to identify very rare bugs, the team might have to rewrite that part of the code from scratch!

Limitations of programming languages: This is a huge and often under-appreciated factor. No programming language is perfect. For example, we know from the previous blog post in this series that Python is not used in real products because it is slow and doesn’t comply with safety standards. So, are C or C++ perfect? Unfortunately no. C and C++, while being fast, come with their own set of limitations. These limitations fall into two categories:

- C and C++ assume that the programmer is perfect.

- C and C++ assume that the hardware is perfect.

C and C++ give full control of the hardware to the programmer. For example, consider this simple bit of C code which loops through the memory and writes the contents of the memory to standard output:

#include <stdbool.h>

#include <stdio.h>

//you will need to slightly modify

//the script to make it work on arduino

int main(void)

{

int q=8;

int *x=&q;

printf("Value of x is %p\n", (void *)x);

printf("value at x is %d\n", *x);

while(true)

{

x++;

printf("%d\n", *x);

}

return 0;

}

You can run this code on a computer or upload it to a microcontroller board like Arduino and run it there. If you try to run this program on a computer, you will find that it will print out a few lines until the OS of your computer kicks in and gives you a segfault. If, however, you try to run this on a microcontroller such as an Arduino, it will happily dump the contents of the whole RAM without ever giving any error. This can cause a huge nightmare in a product! Imagine if some part of the code accidentally overwrites a memory location meant for another subsystem! In other words, the C programming language has allowed you to do whatever you want to your hardware since it assumes that the programmer fully understands what they are doing. This is often not the case.
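For contrast, here is a minimal C++ sketch of what a checked access looks like. It is not part of the original program: `SensorBuffer` and `read_checked` are hypothetical names chosen for illustration, and the sketch relies on `std::array::at`, which throws `std::out_of_range` instead of silently reading whatever lies next to the buffer.

```cpp
#include <array>
#include <cstddef>
#include <stdexcept>

// Hypothetical fixed-size buffer owned by a single subsystem.
using SensorBuffer = std::array<int, 4>;

// Copies buf[index] into `out` and returns true when `index` is valid.
// Returns false, leaving `out` untouched, when `index` is out of range.
bool read_checked(const SensorBuffer& buf, std::size_t index, int& out)
{
    bool ok = false;
    try
    {
        out = buf.at(index);  // at() performs the bounds check for us
        ok = true;
    }
    catch (const std::out_of_range&)
    {
        ok = false;  // the bad access is reported instead of being executed
    }
    return ok;
}
```

Calling `read_checked(buf, 4U, value)` on a four-element buffer returns false, whereas the raw pointer loop above would simply keep walking through memory. The check costs a comparison per access, which is the kind of trade-off between control and safety that coding standards try to make explicit.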

As you can see, people, time, and programming languages all play a part and can make it a nightmare to develop fast, performant and reliable systems at an industrial scale.

As an addendum to the previous discussion, although it is not as easy to demonstrate with code, problems or bugs in hardware cannot be detected by plain C or C++ code on its own. In a hazardous industrial environment, electromagnetic noise, cosmic rays and X-rays can and do cause bit flips in memory or in the logic of the processor’s integrated circuit. C and C++ assume that no such problems ever occur and have no built-in mechanisms to verify or check hardware integrity. This is why, for example, ECC memory (typically used in servers and industrial equipment) requires specialized hardware built directly into the IC and is not just a software update. Although coding standards do not help us out here, we mention it for completeness since it is an important consideration for us as engineers (see section 7).

Now that we have some understanding of the need for coding standards, let’s look at a case study.

3.1 Case study: Bad software can kill

Although fictional, the above example of the team of self-driving car engineers closely resembles a real-life incident from many years ago.

On August 28th, 2009, a Lexus (Toyota’s luxury brand) crashed in San Diego, USA, killing all four people in the car. While traffic accidents are not uncommon, what was different about this one was that one of the passengers had called the emergency number 911 while the car was moving. He reported that the car was behaving strangely and that stepping on the brakes did not slow down the vehicle. Something was wrong with their car. This crash and the testimony from the passengers moments before their death triggered investigations into crashes dating back to 2002. It turned out that over the past several years, many people had reported their Toyotas experiencing ‘unintended acceleration’ or UA. This UA was eventually found to be responsible for as many as 89 deaths.

Extensive investigation of Toyota’s source code, which included a cameo by NASA, eventually revealed that a bug in the software that controlled the Electronic Throttle Control System (ETCS) was responsible for certain vehicles accelerating when they should have slowed down! In other words, a software bug had killed 89 people and nobody would have known for several more years if the Lexus crash in 2009 had not provided such a smoking gun in the form of victim testimonies. Toyota eventually agreed to pay a massive $1.2 billion fine to avoid further prosecution. As we mentioned before, just like it is not possible to write unit tests for all conditions that may occur, it is also not possible to test the system for all conditions. Toyota was testing all vehicles it produced for any bugs or inconsistencies but even professional testing failed to uncover these bugs because they were so rare.

The full details of the bug and Toyota’s response to it are well documented on the internet and we will not spend much time discussing the gory details here. What is important for us as computer vision engineers is to realize what led to this bug and what could have been done to prevent it.

- First, investigation revealed that the ETCS source code was highly unorganized ‘spaghetti code’. Spaghetti code is a term used to describe a codebase which has been put together with a hack-ey, ‘if it ain’t broke, don’t fix it’ attitude. While ‘move fast and break things’ can be an excellent attitude to have for creating mobile apps, such an attitude cannot be applied to writing safety critical code. How could the ETCS codebase end up being so messy? A part of the reason was that it was written by a large number of people over many years, which meant that nobody truly understood the code enough to identify the rare UA bug.

- It was found that the code had thousands of global variables. The thing about global variables is that any function can read or modify them, and this can lead to memory corruption. As we noted earlier, C and C++ let the programmer do anything they want, assuming that the programmer is perfect. However, this can and in this case, did, encourage bad programming practices. In fact, it was precisely memory corruption that led to some tasks ‘dying’ in the system and caused UA. The source code and several details of the bug have not been revealed publicly to protect Toyota’s IP, so we can never be sure, but perhaps, global variables led to memory corruption and ultimately UA.

- Last and most importantly, it was revealed that Toyota did not follow any coding standards in their system. If they had followed the well established MISRA coding standard (more about MISRA in the next section), they might have prevented this bug from ever occurring. NASA tested the source code and found 7,134 violations of the MISRA standard while later more thorough investigations revealed about 81,000 violations! A rule of thumb in industry is that for every 30 violations of the MISRA C safety standard, 3 minor bugs and one major bug are likely to creep in. With this context, you can appreciate that Toyota was actually really lucky in not having hundreds of critical bugs, but ultimately, not lucky enough.
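To make the point about global variables concrete, here is a minimal sketch of the usual alternative: keep the state private and let every write go through one checked function. This is not Toyota’s code, which has never been published; `ThrottleCommand` and its 0 to 100 percent range are hypothetical and serve only as an illustration.

```cpp
#include <cstdint>

// Hypothetical throttle state, shown only to contrast with a writable global.
class ThrottleCommand
{
public:
    // Stores `percent` only if it lies in the assumed valid range [0, 100].
    // Returns true when the value was accepted.
    bool set_percent(std::int32_t percent)
    {
        bool accepted = false;
        if ((percent >= 0) && (percent <= 100))
        {
            percent_ = percent;
            accepted = true;
        }
        return accepted;
    }

    // Read-only access for every other part of the program.
    std::int32_t percent() const
    {
        return percent_;
    }

private:
    std::int32_t percent_ = 0;  // reachable only through the checked setter
};
```

With a plain global `int throttle_percent;`, any function in any file could write 250 into it. Here, `set_percent(250)` returns false and the last valid value is kept, so an invalid write is both rejected and visible to the caller.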

3.2 What we need to know as a computer vision engineer

The previous case study shows that if software is eating the world and computer vision is set to accelerate this process, we as computer vision engineers will have to go beyond just writing correct and bug-free code. We must be aware that our computer vision software exists as a subsystem in a much more complex system. Even if a submodule by itself is bug-free, the system as a whole can still turn out to have deadly bugs when these subsystems interact with each other in unintended ways. Safety compliance standards provide a time-tested way to avoid unintended bugs. Needless to say, writing performant, compliant, bug-free code for computer vision is a non-trivial skill that is in high demand in the industry.

4. Introduction to MISRA and AUTOSAR standards

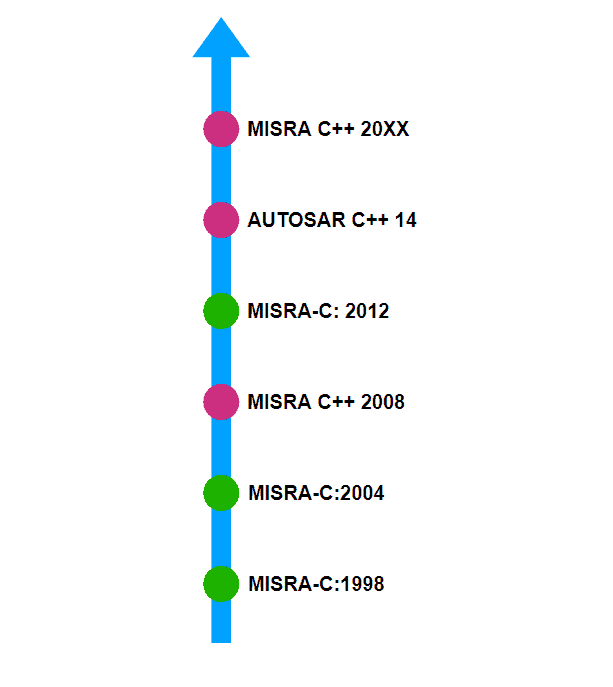

We have alluded to MISRA and AUTOSAR a few times, so let’s look at what these standards are. As always, we begin with a short historical note to put things in context.

Figure 5. Brief history of safety standards in C and C++

4.1 Brief history

C is a dangerous programming language. Most compilers allow you to do almost anything you want, including dereferencing pointers to memory that does not belong to the program. For this reason, C was considered unsuitable for safety critical systems in the 1980s. In the late 1990s, however, a group from the UK published guidelines for writing automotive C code in such a way that the risk of unintended and rare bugs is minimized. Thus, the Motor Industry Software Reliability Association or MISRA was born. The MISRA C standard is basically a set of rules on how to write C code, specifying what is permissible and what isn’t. In a way, MISRA C is a subset of the C programming language which discards some of the dangerous features of C in order to prevent bugs.

Although meant for the automotive industry, the MISRA standard came to be widely adopted in other safety critical industries such as aviation, medical equipment, and even nuclear power plants. Not only that, it influenced other venerable coding standards such as the Joint Strike Fighter C++ coding standard (for military aircraft) and the NASA JPL C coding standard (for robots in space).

Without going into details of the history of C++, we note that the C++ equivalent of the MISRA standard, MISRA C++, was published in 2008 and targets C++03. Since C++ is a rapidly evolving programming language, the industry needed the coding standard to evolve as quickly as the language itself. When MISRA failed to deliver quick updates, the AUTOSAR development partnership stepped in and released the AUTOSAR C++ standard, which supports C++14. Since then, it has been announced that the next iteration of the C++ coding standard will be worked out by MISRA and not AUTOSAR. This next version was supposed to be released in 2021 but has not yet been released as of the time of writing.

4.2 Some specifics of MISRA C standard

The MISRA C standard is closed (one needs to pay $20 to access the full version). Here we provide an overview of the standard.

In total, MISRA C 1998 has 127 rules, of which 93 are required (mandatory) and the remaining 34 are advisory (optional). As the name states, mandatory rules must be followed at all times if a codebase claims to be MISRA compliant. The 34 advisory rules, however, may be violated if it is not practical to follow them. In such a case, all violations must be documented in a separate file, typically an Excel spreadsheet, stating the reason why an advisory rule was violated. The idea here is to make sure that the programmer is aware of what they are doing, which will also reduce unintended bugs. Now let’s take a look at some specific rules of MISRA C. For each rule, we will answer three basic questions: what, why, and how.

4.2.1 If/else

//non-compliant

if (x>0)

{

y=x;

}

else if (x<0)

{

y=-x;

}

//Compliant

if (x>0)

{

y=x;

}

else if (x<0)

{

y=-x;

}

else

{

/*DO NOTHING*/

}

- What is the rule? Every if ... else if chain should be terminated with an else statement at the very end. A lone if with no else if is not affected by this rule.

- Why does this rule exist? This rule exists to make sure that the programmer has considered all the cases that can occur. Even if nothing should happen in the “else” case, it should be present, and typically a programmer will add a simple comment like /*Do nothing*/ in the else branch. This also makes the code clearer for other programmers to read.

- How does the rule prevent bugs? If you read code containing an if ... else if chain with no else at the end, how will you interpret it? Did the programmer really consider the remaining case and decide that nothing needs to be done, or did the programmer forget to consider it at all? Clearly, this style of writing code would make the codebase depend heavily on the programmer and there is no way for another person to tell what they were thinking. This rule takes the guesswork out of the equation and makes the intent explicit.

4.2.2 Switch/case/default

//non-compliant

switch (x)

{

case S0:

printf("S0\n");

break;

case S1:

printf("S1\n");

break;

}

//Compliant

switch (x)

{

case S0:

printf("S0\n");

break;

case S1:

printf("S1\n");

break;

default:

printf("Default case!\n");

break;

}

- What? Just like the if/else rule, this one states that every switch statement should contain a default case, showing the code to execute in case none of the cases is true.

- Why? The justification is very similar to the if/else rule. MISRA wants to make sure that the programmer has considered all the cases.

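- How? A default case guarantees that an unexpected value, for example one produced by memory corruption or by a case added later and forgotten here, is handled explicitly instead of silently skipping the whole switch. As with the final else, it also tells the reader that the remaining values were considered on purpose.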
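The effect of the default case can be sketched with a value that is not one of the named cases. The `State` enum and `describe` function below are hypothetical, written in C++ in the same spirit as the snippet above; the out-of-range value stands in for whatever a corrupted variable might contain.

```cpp
#include <cstdint>
#include <string>

// Hypothetical two-state machine used only for illustration.
enum class State : std::uint8_t
{
    S0 = 0U,
    S1 = 1U
};

// Returns a readable name for `s`, or an explicit marker for unknown values.
std::string describe(State s)
{
    std::string text;
    switch (s)
    {
        case State::S0:
            text = "S0";
            break;
        case State::S1:
            text = "S1";
            break;
        default:
            // Reached only if `s` holds a value outside the enumerators.
            text = "unexpected state";
            break;
    }
    return text;
}
```

Calling `describe(static_cast<State>(7U))` returns "unexpected state". Without the default case, the function would return an empty string and the caller would have no hint that something upstream had gone wrong.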

4.2.3 Loop counter

//non-compliant

float sum=0.0f;

for(float x=0; x<10; x+=0.1)

{

sum+=x;

}

//compliant

float sum=0.0f;

for(int x=0; x<100; x++)

{

sum+=(x/10.0);

}

- What? There are several rules regarding loops and loop counters. Here we will look at only one. This rule states that the loop counter should not be of float data type.

- Why? You are probably familiar with the concept of errors in floating-point arithmetic. The simplest way to understand this is to add 0.1 to itself 10 times in a loop and check if the result is exactly 1. You will find that it is not. In other words, floating-point numbers should never be checked for exact equality because the behavior in such cases depends heavily on the compiler or system being used and can introduce unintended bugs. This mostly affects `for` loops which have a loop counter.

- How? For loops should run a fixed integer number of times. Using floats here can only cause confusion and platform/compiler dependent behavior, as we saw in the code snippet above. Avoiding the use of floats in the loop counter saves us from these unintended bugs.
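The experiment described above is easy to reproduce. The sketch below is an illustration rather than text from the standard: it uses `double` and a range of 0 to 1 instead of the `float` loop in the snippet, and the function names are made up. It assumes ordinary IEEE 754 double arithmetic without aggressive floating-point compiler flags.

```cpp
#include <cstdint>

// Counts the iterations of a loop driven by a floating-point counter.
// A reader would expect 10 iterations (0.0, 0.1, ..., 0.9).
std::int32_t count_with_double_counter()
{
    std::int32_t iterations = 0;
    for (double x = 0.0; x < 1.0; x += 0.1)  // non-compliant style
    {
        ++iterations;
    }
    return iterations;
}

// Same loop with an integer counter: the trip count is exact by construction.
std::int32_t count_with_integer_counter()
{
    std::int32_t iterations = 0;
    for (std::int32_t i = 0; i < 10; ++i)  // compliant style
    {
        ++iterations;
    }
    return iterations;
}

// Adds 0.1 to itself ten times and reports whether the sum is exactly 1.0.
bool ten_tenths_equal_one()
{
    double sum = 0.0;
    for (std::int32_t i = 0; i < 10; ++i)
    {
        sum += 0.1;
    }
    return sum == 1.0;
}
```

Under those assumptions, ten additions of 0.1 give a value slightly below 1.0, so `ten_tenths_equal_one()` returns false and the floating-point loop runs 11 times instead of 10. The integer loop always runs exactly 10 times.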

4.2.4 Unreachable code

//non-compliant

if(false)

{

x=y; //this is unreachable

}

//compliant

//no code: don't keep anything in the codebase that you never want to execute

- What? This rule states that there should be no unreachable code anywhere.

- Why? Most of us have at some point used comments, not to write explanations for what we are doing but to comment out code that is not required after debugging. Sometimes though, people go a step further and effectively make code unreachable to make sure that it will not execute. This can cause bugs because now you might have a whole bunch of code that looks like it is doing something but in fact it is not. This also makes code harder to understand and maintain, and should therefore be avoided.

- How? We will answer this ‘how’ with a ‘why’. Why have a block of code in your codebase if it is not being used? It can only create problems. Unused code is best deleted outright, since version control preserves its history; note that MISRA also advises against leaving sections of code commented out. Also, unreachable code such as the if(false) block in the snippet above can itself be a sign of a bug, which this rule helps identify.

4.2.5 Function exit point

//non-compliant

bool foo(int x)

{

if (x<5)

{

return false;

}

else

{

return true;

}

}

//compliant

bool foo(int x)

{

bool result=false;

if(x<5)

{

result=false;

}

else

{

result=true;

}

return result;

}

- What? This rule states that a function should have only one exit point at the very end. In other words, multiple points of exit within the function body are prohibited.

- Why? Having multiple exit points, as in the non-compliant snippet above, makes the code obscure and harder to read or maintain.

- How? Having one single exit point makes it easier to follow the code and easier to write unit tests for, since you know exactly where the function will exit in all cases. This is really useful in a complicated function with several nested conditional statements.

This is just a short flavor of the rules present in the MISRA standard. In addition to these, there are several rules regarding pre-processor directives, function names, arrays, unions, variable names, etc. We cannot reproduce a more detailed version of the standard here because of copyright: the rules are closed source and companies must pay a (small) fee to access them.

Luckily, this limitation is not present in the AUTOSAR standard. The AUTOSAR C++ 2014 standard extends the MISRA C++ 2008 standard (which is again closed source). It is open and a free copy can be obtained from the AUTOSAR website.

4.3 Automated code compliance check

The true power of MISRA, AUTOSAR, or any other coding guidelines comes from the fact that code can be automatically checked for compliance with them. Since source code is just text, it can be read and checked by another program to make sure that the rules are being followed. This is called ‘static analysis’ because it looks for bugs by examining the source code without running it. As you might have guessed, in contrast to static analysis, dynamic code analysis looks for bugs by evaluating the program while it is running. Dynamic analysis is common in the HPC space; in the world of embedded applications, it is often performed using hardware-in-the-loop simulation (HILS). In this blog post, we will show how to perform static analysis because it is quite effective and applies to many industries. Dynamic analysis and HILS, on the other hand, are quite industry-dependent, and can only really be learnt effectively on the job.

Now that we have an understanding of coding guidelines and static analysis, we will build an application in TensorRT with these rules in mind.

5. Building AUTOSAR compliant deep learning inference application with TensorRT

Since we have already introduced the key concepts of TensorRT in the first part of this series, here we dive straight into the code. We strongly recommend you go through the first part of this blog series before reading this section. As before, we will target our application to run on Jetson AGX Xavier. We will begin by using the ONNX file created in the previous blog post of this series. Here, we will focus on building the engine, saving the engine to disk, creating an inference pipeline to pass data into the engine, and performing the inference. We will make full use of the hardware of the Jetson by using a feature called zero-copy. Finally, we will run the inference pipeline on some sample data and measure inference throughput performance.

5.1 Helper class for managing bindings

When working with TensorRT engines, we will be mostly interacting with memory buffers for passing data into the TensorRT inference engine and getting results back after inference. Let us declare a binding class for helping manage these operations better.

class iobinding

{

public:

nvinfer1::Dims dims;

std::string name;

float* cpu_ptr=nullptr;

float* gpu_ptr=nullptr;

uint32_t size=0;

int32_t binding=-1; //signed: getBindingIndex returns -1 when the name is not found

uint32_t get_size();

void allocate_buffers();

void destroy_buffers();

void* tCPU=nullptr;

void* tGPU=nullptr;

};

//the default argument belongs on the declaration so that every caller can see it

iobinding get_engine_bindings(nvinfer1::ICudaEngine* eg, const char* name, bool is_onnx=true);

iobinding get_engine_bindings(nvinfer1::ICudaEngine* eg, const char* name, bool is_onnx)

{

iobinding io;

io.binding=eg->getBindingIndex(name);

if (io.binding<0)

{

std::cout << "Could not find binding of name: " << name << std::endl;

throw std::runtime_error("Binding not found error");

}

io.name=name;

io.dims=validate_shift(eg->getBindingDimensions(io.binding), is_onnx);

io.allocate_buffers();

return io;

}

cudaStream_t create_cuda_stream(bool nonblocking)

{

const uint32_t flags = nonblocking?cudaStreamNonBlocking:cudaStreamDefault;

cudaStream_t stream = nullptr;

const cudaError_t err = cudaStreamCreateWithFlags(&stream, flags);

if (err != cudaSuccess)

{

stream = nullptr; //a null stream handle signals failure to the caller

}

return stream; //single exit point

}

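void iobinding::allocate_buffers()

{

if (size == 0U)

{

size=get_size();

}

if (!zero_copy_malloc(&tCPU, &tGPU, size))

{

throw std::runtime_error("Cannot allocate buffers for binding");

}

//both pointers refer to the same mapped allocation

cpu_ptr = static_cast<float*>(tCPU);

gpu_ptr = static_cast<float*>(tGPU);

}

void iobinding::destroy_buffers()

{

//the device pointer is an alias of the host allocation, so one free is enough

cudaFreeHost(tCPU);

tCPU = nullptr;

tGPU = nullptr;

cpu_ptr = nullptr;

gpu_ptr = nullptr;

}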
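The class above declares `get_size()` but the listing does not show its body. A plausible implementation multiplies the tensor dimensions and the element size, which can be sketched as follows. This is an assumption about what the function does, not the original code: `DimsSketch` is a stand-in for `nvinfer1::Dims` (which stores `nbDims` and an array `d`) so that the example compiles without TensorRT, and `size_in_bytes` is a hypothetical name.

```cpp
#include <cstddef>
#include <cstdint>

// Stand-in for nvinfer1::Dims so the sketch compiles without TensorRT.
struct DimsSketch
{
    std::int32_t nbDims;  // number of valid entries in d
    std::int32_t d[8];    // extent of each dimension
};

// Returns the buffer size in bytes for a float tensor of the given shape.
// Returns 0 for an empty shape or a non-positive (for example dynamic, -1) extent.
std::size_t size_in_bytes(const DimsSketch& dims)
{
    std::size_t elements = 0U;
    if ((dims.nbDims > 0) && (dims.nbDims <= 8))
    {
        elements = 1U;
        for (std::int32_t i = 0; i < dims.nbDims; ++i)
        {
            if (dims.d[i] > 0)
            {
                elements *= static_cast<std::size_t>(dims.d[i]);
            }
            else
            {
                elements = 0U;  // unknown extent: the size cannot be computed
            }
        }
    }
    return elements * sizeof(float);
}
```

For a 1x3x224x224 input this gives 150528 elements, or 602112 bytes with 4-byte floats. The sketch returns `std::size_t` rather than the `uint32_t` used in the class, since `zero_copy_malloc` takes a `size_t`.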

5.2 Building and saving TensorRT engine from ONNX format

Let us see how to build an inference engine with trained network weights. As in part 1 of this series, we export the trained weights into an ONNX file. To create the engine, we declare an ONNX parser object, a network object, a builder object and finally a builder config object. As is customary with TensorRT, we work with pointers to these instances rather than with the objects themselves.

builder=nvinfer1::createInferBuilder(gLogger);

builder->setMaxBatchSize(BATCH_SIZE);

network= builder->createNetworkV2(1U << static_cast<uint32_t>(nvinfer1::NetworkDefinitionCreationFlag::kEXPLICIT_BATCH));

parser= nvonnxparser::createParser(*network, gLogger);

if (!(parser->parseFromFile(onnxpath.c_str(), static_cast<uint32_t>(nvinfer1::ILogger::Severity::kWARNING))))

{

//we use parseFromFile instead of parse, since it has better error logging

for (int i=0; i< parser->getNbErrors(); i++)

{

std::cout << parser->getError(i)->desc() <<std::endl;

}

throw std::runtime_error("Could not parse onnx model from file");

}

config = builder->createBuilderConfig();

config->setMaxWorkspaceSize(1<<25);

config->setFlag(nvinfer1::BuilderFlag::kFP16);

engine = builder->buildEngineWithConfig(*network, *config);

if (engine == nullptr)

{

throw std::runtime_error("Failed to build TensorRT engine");

}

if (!save_to_disk(engine, epath))

{

throw std::runtime_error("Failed to serialize engine and save it to disk");

}

else

{

std::cout << "Inference engine serialized and saved to " << epath << std::endl;

}

For simplicity, here we will just show FP16 optimization, since this is the most commonly used precision in industry. INT8 optimization and calibration have already been explained in detail in the Python version of this blog post and the C++ equivalents can be constructed easily.

5.3 Creating inference pipeline with zero-copy

In a heterogeneous computing system with a discrete GPU, the CPUs and GPUs have separate memories. Thus, whenever you want to run some code on GPU, you need to transfer the data from CPU to GPU and the results back to the CPU after the code has finished executing. However, in an embedded system like a Jetson board, the CPU and GPU share the same physical memory. Thus, we can avoid copying data back and forth between CPU and GPU, which is time consuming and energy intensive. For this, we will use two CUDA functions, cudaHostAlloc and cudaHostGetDevicePointer.

bool zero_copy_malloc(void** cpu_ptr, void** gpu_ptr, size_t size)

{

if (size==0) {return false;}

cudaError_t alloc_err = cudaHostAlloc(cpu_ptr, size, cudaHostAllocMapped);

if (alloc_err != cudaSuccess)

return false;

cudaError_t err= cudaHostGetDevicePointer(gpu_ptr, *cpu_ptr, 0);

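if (err != cudaSuccess)

{

//release the pinned host memory so that a failed mapping does not leak it

cudaFreeHost(*cpu_ptr);

*cpu_ptr = nullptr;

return false;

}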

memset(*cpu_ptr, 0, size);

return true;

}

5.4 Saving engine to disk and reading from disk

To avoid building the engine every time we run the application, we will write a function to save the engine to disk after building it. A function to read the engine from disk is also needed.
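The two helpers are not listed in this section, so here is a minimal sketch of what such a pair could look like, using only the C++ standard library. It works on raw bytes rather than on a TensorRT engine. In a real application the bytes would presumably come from the serialized engine, which is an assumption on our part. The names save_bytes_to_disk and read_bytes_from_disk are hypothetical and differ from the save_to_disk call used earlier.

```cpp
#include <cassert>
#include <cstddef>
#include <fstream>
#include <ios>
#include <string>
#include <vector>

// Writes `size` bytes starting at `data` to `path`. Returns true on success.
bool save_bytes_to_disk(const void* data, std::size_t size, const std::string& path)
{
    bool ok = false;
    if ((data != nullptr) && (size > 0U))
    {
        std::ofstream out(path, std::ios::binary | std::ios::trunc);
        if (out.is_open())
        {
            out.write(static_cast<const char*>(data), static_cast<std::streamsize>(size));
            out.flush();
            ok = out.good();
        }
    }
    return ok;
}

// Reads the whole file at `path` into `buffer`. Returns true on success.
// On any failure (missing file, empty file, short read) the buffer is left empty.
bool read_bytes_from_disk(const std::string& path, std::vector<char>& buffer)
{
    bool ok = false;
    buffer.clear();
    // Open at the end of the file so that tellg() reports the file size.
    std::ifstream in(path, std::ios::binary | std::ios::ate);
    if (in.is_open())
    {
        const std::streamoff end = in.tellg();
        if (end > 0)
        {
            buffer.resize(static_cast<std::size_t>(end));
            in.seekg(0, std::ios::beg);
            in.read(buffer.data(), static_cast<std::streamsize>(end));
            ok = (in.gcount() == static_cast<std::streamsize>(end));
        }
    }
    if (!ok)
    {
        buffer.clear();
    }
    return ok;
}
```

Passing the buffer by reference means the caller actually receives the bytes that were read, and the stream objects close the file automatically on every path.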

5.5 Configuring build options with CMake

We will use cmake to find the TensorRT and CUDA libraries and build the project.

cmake_minimum_required(VERSION 3.10 FATAL_ERROR)

set(TRT_LIB_DIR ${CMAKE_BINARY_DIR})

set(TRT_OUT_DIR ${CMAKE_BINARY_DIR})

set(TRT_VERSION "${TRT_MAJOR}.${TRT_MINOR}.${TRT_PATCH}" CACHE STRING "TensorRT project version")

set(TRT_SOVERSION "${TRT_SO_MAJOR}" CACHE STRING "TensorRT library so version")

message("Building for TensorRT version: ${TRT_VERSION}, library version: ${TRT_SOVERSION}")

set(CMAKE_SKIP_BUILD_RPATH True)

set(CMAKE_CXX_STANDARD 14)

set(CMAKE_CXX_STANDARD_REQUIRED ON)

set(CMAKE_CXX_EXTENSIONS OFF)

# Dependencies

set(DEFAULT_CUDA_VERSION 10.2)

set(DEFAULT_CUDNN_VERSION 8.0)

set(DEFAULT_PROTOBUF_VERSION 3.0.0)

set(DEFAULT_CUB_VERSION 1.8.0)

# Dependency Version Resolution

set(CUDA_VERSION ${DEFAULT_CUDA_VERSION})

message(STATUS "CUDA version set to ${CUDA_VERSION}")

set(CUDNN_VERSION ${DEFAULT_CUDNN_VERSION})

message(STATUS "cuDNN version set to ${CUDNN_VERSION}")

find_package(Threads REQUIRED)

find_package(CUDA REQUIRED)

include_directories(

${CUDA_INCLUDE_DIRS}

${CUDNN_ROOT_DIR}/include

)

find_library(CUDNN_LIB cudnn HINTS

${CUDA_TOOLKIT_ROOT_DIR} ${CUDNN_ROOT_DIR} PATH_SUFFIXES lib64 lib)

find_library(CUBLAS_LIB cublas HINTS

${CUDA_TOOLKIT_ROOT_DIR} PATH_SUFFIXES lib64 lib lib/stubs)

find_library(CUBLASLT_LIB cublasLt HINTS

${CUDA_TOOLKIT_ROOT_DIR} PATH_SUFFIXES lib64 lib lib/stubs)

find_library(CUDART_LIB cudart HINTS ${CUDA_TOOLKIT_ROOT_DIR} PATH_SUFFIXES lib lib64)

find_library(RT_LIB rt)

set(CUDA_LIBRARIES ${CUDART_LIB})

set(GPU_ARCHS 72)

# Generate PTX for the last architecture in the list.

list(GET GPU_ARCHS -1 LATEST_SM)

set(GENCODES "${GENCODES} -gencode arch=compute_${LATEST_SM},code=compute_${LATEST_SM}")

set(CMAKE_CUDA_FLAGS "${CMAKE_CUDA_FLAGS} -Xcompiler -Wno-deprecated-declarations")

add_executable(trt_test inferutils.cpp sample.cpp)

target_link_libraries(trt_test ${CUDA_LIBRARIES} nvinfer nvonnxparser stdc++fs)

Here, we are including the TensorRT and CUDA libraries, as well as the header files we wrote as utilities. Finally, after we have included all the necessary libraries in the project, we declare an executable and specify the source files and libraries to be linked to the executable.

5.6 Building the project and measuring performance

Now, we have everything. Let us build the project and verify that we get the same performance that we got with the python API.

mkdir build

cd build

cmake -DCMAKE_BUILD_TYPE=Debug ../

make

./trt_test

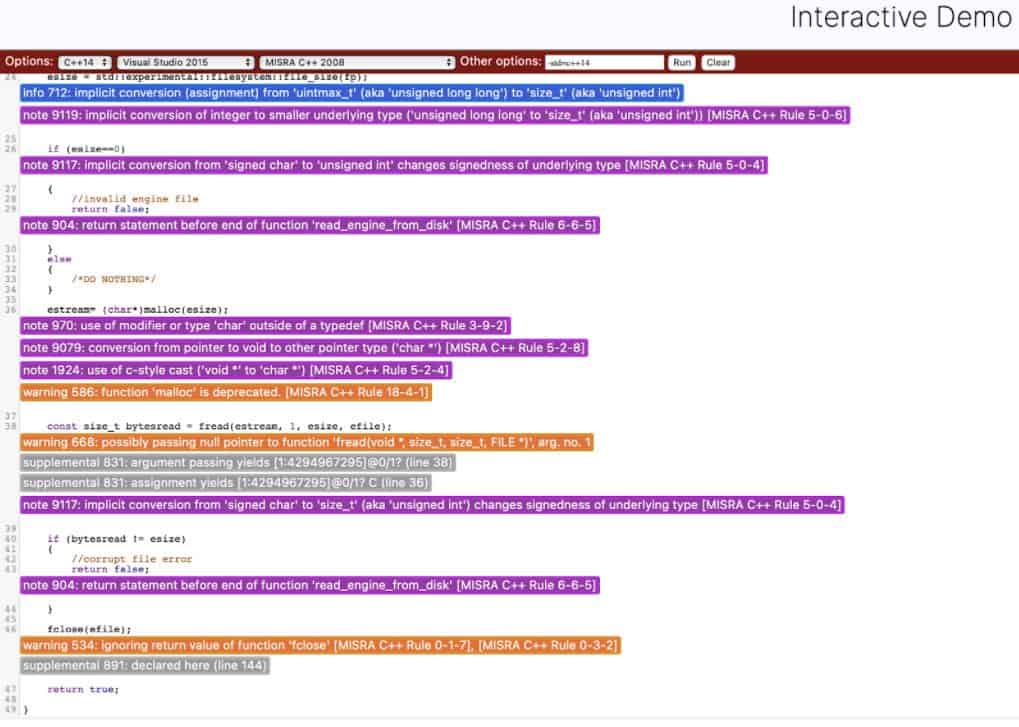
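For readers who want to reproduce a latency number themselves, the following is a minimal timing sketch built on std::chrono. It is not the measurement code used for the figure above. LatencyStats and measure_latency are hypothetical names, and the callable stands in for one complete inference call. Because GPU work is launched asynchronously, the callable should only return once the GPU has finished (for example after synchronizing the CUDA stream), otherwise the numbers will be too optimistic.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>

// Result of a timing run: number of timed calls and mean latency per call.
struct LatencyStats
{
    std::size_t runs;
    double mean_ms;
};

// Calls `fn` `warmup` times without timing it, then `runs` times with timing,
// and returns the mean wall-clock time per timed call in milliseconds.
template <typename Callable>
LatencyStats measure_latency(Callable&& fn, std::size_t warmup, std::size_t runs)
{
    assert(runs > 0U);

    // Warm-up iterations absorb one-off costs such as lazy initialization.
    for (std::size_t i = 0U; i < warmup; ++i)
    {
        fn();
    }

    const auto start = std::chrono::steady_clock::now();
    for (std::size_t i = 0U; i < runs; ++i)
    {
        fn();
    }
    const auto stop = std::chrono::steady_clock::now();

    const std::chrono::duration<double, std::milli> elapsed = stop - start;
    return LatencyStats{runs, elapsed.count() / static_cast<double>(runs)};
}
```

A call such as measure_latency(run_one_inference, 10U, 100U), where run_one_inference is whatever function wraps your inference call, returns the mean latency over 100 timed runs.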

6. Verifying AUTOSAR compliance with PCLint

Notice how we didn’t care about complying with MISRA or AUTOSAR safety standards in the previous section. This is because much of the compliance checking can be automated with a ‘linting’ tool for static analysis.

6.1 The PCLint tool

Although there are a few open-source linting tools, they do not provide the level of support or the legal guarantees that industries look for. So, commercial tools are the only game in town when it comes to safety compliance. Here, we will use a tool called ‘PCLint’ by Gimpel Software. PCLint has been around for decades and has garnered a great reputation in the industry, with some engineers preferring it to other tools that cost 10x more (linting is a serious business with many small and big players in the market). Gimpel offers a live online demo of PCLint. However, most companies are unwilling to paste their secret code into a public web page, so Gimpel also offers a one month free trial on your local machine if you have a corporate email address. Gimpel didn’t pay us to use this tool or write about it, and all impressions of PCLint are our own. That said, let us point out that they have excellent customer support. We contacted Gimpel’s support to learn more about PCLint and to get access to the free trial, and their responses were always quick and thorough.

6.2 Example snippet for linting

We have written a lot of code in the previous section to get TensorRT inference working. Since we are just getting our feet wet with linting, let us start small. Rather than analyzing the whole codebase, let us isolate a small fraction of the code and analyze it on its own. We will analyze the read_engine_from_disk function.

#include <cmath>

#include <filesystem>

#include <cstdlib>

#include <iostream>

#include <fstream>

#include <chrono>

#include <string.h>

bool read_engine_from_disk(char* estream, size_t esize, const std::string enginepath);

bool read_engine_from_disk(char* estream, size_t esize, const std::string enginepath)

{

FILE* efile = NULL;

efile = fopen(enginepath.c_str(), "rb");

//run

if (efile==NULL)

{

return false;

//File not found error

}

std::experimental::filesystem::path fp(enginepath);

esize = std::experimental::filesystem::file_size(fp);

if (esize==0)

{

//invalid engine file

return false;

}

else

{

/*DO NOTHING*/

}

estream= (char*)malloc(esize);

const size_t bytesread = fread(estream, 1, esize, efile);

if (bytesread != esize)

{

//corrupt file error

return false;

}

fclose(efile);

return true;

}

Looking at the above code, we can immediately identify that we have broken several MISRA rules (and thus AUTOSAR C++ rules, since the latter standard is based on the former). There are if statements without a corresponding else, and the function has multiple exit points. Will PCLint be able to identify these problems?

6.3 Linting results

The code for this blog post is open source. So, we have no problems pasting it into a public webpage. After pasting the above function, we choose MISRA C++ 2008 compliance (AUTOSAR compliance checking is not available in the public demo). We choose Visual Studio 2013 as the development environment (this just affects the header files). Further, under ‘additional parameters’, we specify that we will use C++14. Once everything is ready, we hit ‘Run’ and the results of static analysis pop up immediately, as shown in these screenshots.

Figure 6. Linting results (part 1)

Figure 7. Linting results (part 2)

As you can see, PCLint has identified many problems with the code. Some of these are direct violations of the MISRA C++ standard and some are advisories.

- For instance, it has clearly identified that this function has multiple exit points and specified the corresponding MISRA rule number.

- Implicit variable conversion was also identified. This can cause bugs if you accidentally convert from one data type to another without realizing.

- There are warnings about file reading functions such as fopen. This is because in this public demo, the platform target is x86 and not ARM. Nevertheless, this is not a violation of the MISRA safety standard itself.

- There are some warnings about the header files, which we will ignore for now. We do this only because this is a tutorial and we don’t want to lose the forest for the trees. Never ignore such warnings in actual production code.
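To make the point about implicit conversions concrete: the file size in our function is reported as std::uintmax_t but stored in a size_t, and the compiler performs that conversion silently. A minimal sketch of an explicit, range-checked alternative is shown below. The helper name to_size_t is hypothetical and is not something PCLint or the standards prescribe.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <limits>

// Converts a size reported as std::uintmax_t (the type returned by
// filesystem file_size) into std::size_t.
// Returns false and leaves `out` untouched if the value does not fit.
bool to_size_t(std::uintmax_t value, std::size_t& out)
{
    bool ok = false;
    const std::uintmax_t limit =
        static_cast<std::uintmax_t>(std::numeric_limits<std::size_t>::max());
    if (value <= limit)
    {
        // The cast is explicit, so the narrowing is visible to reviewers and tools.
        out = static_cast<std::size_t>(value);
        ok = true;
    }
    return ok;
}
```

On a typical 64-bit platform both types have the same range, so the check never fails there. It matters on targets where size_t is narrower than std::uintmax_t.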

6.4 Improving code quality using linting

We can use the advice given by PCLint to improve code compliance. For instance, let’s modify the function to have a single exit point and explicitly specify all type conversions. The modified function looks like this.

#include <cmath>

#include <filesystem>

#include <cstdlib>

#include <iostream>

#include <fstream>

#include <chrono>

#include <string.h>

bool read_engine_from_disk(char* estream, size_t esize, const std::string enginepath);

bool read_engine_from_disk(char* estream, size_t esize, const std::string enginepath)

{

bool result=false;

FILE* efile = NULL;

efile = fopen(enginepath.c_str(), "rb");

//run

if (efile==NULL)

{

result=false;

//File not found error

}

else

{

const std::experimental::filesystem::path fp(enginepath);

esize = (size_t)std::experimental::filesystem::file_size(fp);

if (esize==(size_t)0)

{

//invalid engine file

result=false;

}

else

{

//read the contents only when the file is non-empty

estream= (char*)malloc(esize);

const size_t bytesread = fread(estream, 1, esize, efile);

if (bytesread != esize)

{

//corrupt file error

result=false;

}

else

{

result=true;

}

}

//close the file here, where efile is known to be non-NULL

fclose(efile);

}

return result;

}

After pasting this modified code into PCLint and running the static analysis again, we find that these MISRA compliance issues have vanished and the code has become more readable and explicit to anyone who needs to maintain it in the long run.

Figure 8. Linting results

6.5 Impressions and advice

Overall, we found PCLint to be a great tool for static analysis. The screenshots we shared above were all from the public demo, but we also tried the industrial demo on a local machine, which performed similarly well. An important thing to note is that, as of now, PCLint only runs on x86 platforms, although it can analyze code targeted at ARM as well. Nevertheless, we hope that Gimpel will provide native support for the ARM architecture in the future, so that code meant for Jetson devices can be analyzed on them directly.

Before wrapping up this section, we want to mention that the way we used static analysis in this blog post is not recommended. We wrote the whole TensorRT application without caring for safety and only later analyzed the compliance. This was done to show the reader how much of a difference static analysis can make. In practical industrial settings, you would introduce all necessary static analysis tools right at the very beginning of the project and let them work as plugins to the IDE being used. This ensures that all code is safety compliant, right from the beginning.

7. Where to go from here?

It would be remiss of us to end this blog post without mentioning that we have just scratched the surface of industrial computer vision. One of the major reasons there are no tutorials on safety compliant computer vision is that most safety functionality is closed source and can only be accessed under NDAs and contracts. There is very little one can reveal in an open setting. Although we have sought to provide you with insights from professionals, our efforts have not been without their limitations. Here is a non-comprehensive list of steps you can take to continue gaining expertise in safety certified embedded computer vision:

- We briefly mentioned some MISRA C rules, but to avoid copyright issues, we cannot provide a comprehensive view of the MISRA standard since the standard is closed source. If you are working in a company, please get them to buy the $20 MISRA standard and read through it. It is well worth it.

- You should spend a few hours reading about ‘functional safety’. In industry, compliance is evaluated for functional safety, i.e., not just the safety of individual components of a system, but the safety of the functions that the embedded system performs. In simple words, for a car to be functionally safe, it is not enough to have safety certified subsystems like cameras and processors. The whole system’s behavior should conform to certain standards. The dominant functional safety standards are ISO 26262 and IEC 61508. Functional safety standards take a comprehensive look at the whole system, from processor to memory to power delivery circuits and of course software, and set standards for when, why and how systems should fail. Thus, MISRA and AUTOSAR safety standards sit within a much more expansive set of functional safety standards in industrial applications.

- Please read up about Automotive Safety Integrity Levels (ASIL), which are risk classification levels defined under ISO 26262.

- We ignored the role of the operating system in this blog post. Just because we write a safety compliant C++ program and use compliant hardware does not mean that the system becomes fully safety compliant. Safety certified operating systems are a thing too. One of the leaders in this market is BlackBerry (yes, that phone company from the 90s). Their QNX OS is quite popular and compliant with ISO 26262 and IEC 61508. Startups are also coming up in this space. For example, Apex.AI’s Apex.OS is ISO 26262 compliant.

- TensorRT provides a subset of ISO 26262 compliant operations in the ‘nvinfer1::safe’ namespace. As with all things safety, access to this namespace is available only to industrial partners who have signed NDAs, and perhaps there is a paywall as well. It is quite difficult as an individual to learn more about these safety features, but TensorRT’s open source C++ samples provide an excellent starting point for building safe inference engines.

- Take a look at Jetson Safety Extension Package (JSEP), a set of tools and frameworks provided by NVIDIA to industrial partners who want to create safety compliant products with Jetson devices. JSEP could be the first foray of startups into safety compliant systems as it also contains case studies and advice from safety experts within NVIDIA. You need to sign an NDA, of course.

- We have been focused on Jetson devices, but it is well worth taking a look at NVIDIA’s Drive AGX systems for automotive markets. The Drive ecosystem includes an SDK for developing autonomous driving. Since this line of products is hyper focused on industrially relevant computer vision, NVIDIA has partnered with BlackBerry to provide Drive OS QNX, which leverages QNX OS to provide functional safety compliance.

- We will do a follow up blog post about real time operating systems on Jetson devices, so please stay tuned for that.

- Last but most importantly, please keep in mind that we have given examples from the automotive industry, but these basic principles and ideas are relevant to all industries where safety is important. As an example, MISRA and AUTOSAR safety standards are relevant to smart agriculture, automated construction, mining, healthcare and factory automation to name a few. Please read up about the standards relevant to your industry.

We have provided several references for all these at the end of this post.

8. Lessons for startups and CTOs

If you are a founder or CTO of a startup, we have two specific pieces of advice for you.

Many startups are working on optimizing inference on embedded devices with either NVIDIA’s TensorRT or ARM’s native tools like armnn. However, safety compliance is almost completely ignored by these startups, perhaps due to a lack of awareness. Most startups do not understand or do not want to address the safety compliant AI space. Investing in tools to ensure compliance can seem like a burden with no immediate reward. However, linting tools like PCLint are not very expensive. Introducing the right compliance tools at the beginning of your software development cycle would reduce the overhead required for ensuring safety compliance and will definitely help you stand out from the competition.

More importantly, if your intended market does not work out, having a safety compliant software stack will make it quite easy for your company to pivot to new industry verticals and can significantly increase your valuation if you get acquired by a larger player. Think of it this way: If Apple goes shopping for startups to bring self-driving to their rumored Apple Car and they have two choices for companies with similar tech, but one of them has all the necessary automotive compliances, which one would they acquire? They would quite possibly acquire the one whose tech is safety compliant, since it is already proven for safety and they could adapt the tech into a product with minimal effort.

8.1 What can we learn from the debacle of drone delivery

About 8 years ago, in December 2013, Amazon founder Jeff Bezos announced that Amazon was working on a technology to deliver packages autonomously using drones. Autonomous drones could use computer vision and localization to navigate the skies and deliver packages without any human intervention. This was a revolutionary idea which quickly got hyped up in the media, and before long, investor money started pouring in. Drone delivery was supposed to be the autonomous, intelligent and efficient future that every engineer dreams of. Concerns about regulations and safety were raised, but it was generally assumed they could be overcome. 8 years after Bezos’ announcement, almost none of us are getting our Amazon orders delivered by drones, and investor activity in this area has generally cooled down from its highs of the last decade. What went wrong and what can we learn from it?

It turns out that concerns about safety were not trivial and could not be easily overcome. Comparing the automotive and drone delivery industries, it stands out that while the former has established comprehensive industry-wide functional safety standards, the latter has not. A report by McKinsey identifies public acceptance and regulation as two of the five major issues to overcome if drone delivery is to become commercially successful. While the drone delivery industry cannot be written off as a failure, it’s highly likely that creating and adopting functional safety standards would have made drone operation safer and helped the industry overcome the regulatory quagmire that it’s currently stuck in.

With this context, here is our second piece of advice for startups. If you are in an industry that does not yet have well established safety standards, you have a huge opportunity to play a key role in creating them. You can get together with other startups working in this field and begin charting out rules, guidelines and standards for safety. Eventually, regulators will join in. Early movers have a huge advantage when it comes to establishing safety standards for a new industry. Since setting up industry standards is voluntary, even a well established brand like MISRA is struggling due to a lack of experts willing to give time to the latest C++ 202X standard. Getting involved in setting up safety standards could not only save your idea from irrelevance and regulatory hurdles, but also give you a huge leg up against the competition, as expressed perfectly by this meme.

Figure 9. Safety certified meme

9. Summary

For a post about deep learning, this one had surprisingly little content about it, and for good reasons. Having understood the differences between POCs and products in the first part of this series, here, we started out by explaining why software safety standards are required. We saw with the case study of the Toyota UA bug that software bugs can be deadly. With this context, we explored the basics of the MISRA and AUTOSAR automotive safety standards.

We then created a deep learning inference application in C++ using TensorRT. After briefly discussing the differences between static and dynamic analysis, we used a static analysis tool called PCLint to identify some potential bugs in our C++ code. The goal of this section was not to fully debug the several hundreds of lines of code (as that would be far too long to explain line by line in this blog post), but to expose you to static analysis tools. We believe it would have been the first time for the vast majority of our readers to use a static analysis tool to debug a computer vision application.

If you have gone through the process as we explained, you have gained exposure to the tools and processes used by professional computer vision engineers to develop self-driving cars, smart agriculture, automated construction machinery etc. Since we could not cover many aspects of safety compliance in this post, we then listed out a bunch of resources that you should read up on to further your knowledge. Finally, we ended with some advice for startups in computer vision or robotics.

As of the time of writing, NVIDIA itself has an open job listing (link below) for an embedded engineer to develop TensorRT. Knowledge of AUTOSAR, MISRA, ISO 26262 and IEC 61508 is listed among the ‘ways to stand out from the crowd’. This position has remained open for at least 3 months since we started tracking it, underscoring that even a leading company like NVIDIA cannot find all the people it needs for developing safety compliant AI. If you have read this long blog post and internalized the lessons from it, you are probably far ahead of the crowd. We hope that this peek into the vast world of safety compliant computer vision will help you grow professionally and move on from the knowledge of deep learning to its real world applications.

10. Resources:

- Winning the DARPA Grand Challenge

- Toyota UA bug analysis

- Lessons from Toyota UA bug

- Introduction to MISRA C

- Introduction to static code analysis

- NVIDIA Drive OS

- BlackBerry QNX OS

- PCLint Plus by Gimpel Software

- Automotive Safety Integrity Levels

- MISRA

- What is functional safety?

- Safety compliant APEX OS

- Jetson Safety Extension

- McKinsey report on drone industry

- NVIDIA Job Listing for TensorRT Developer
