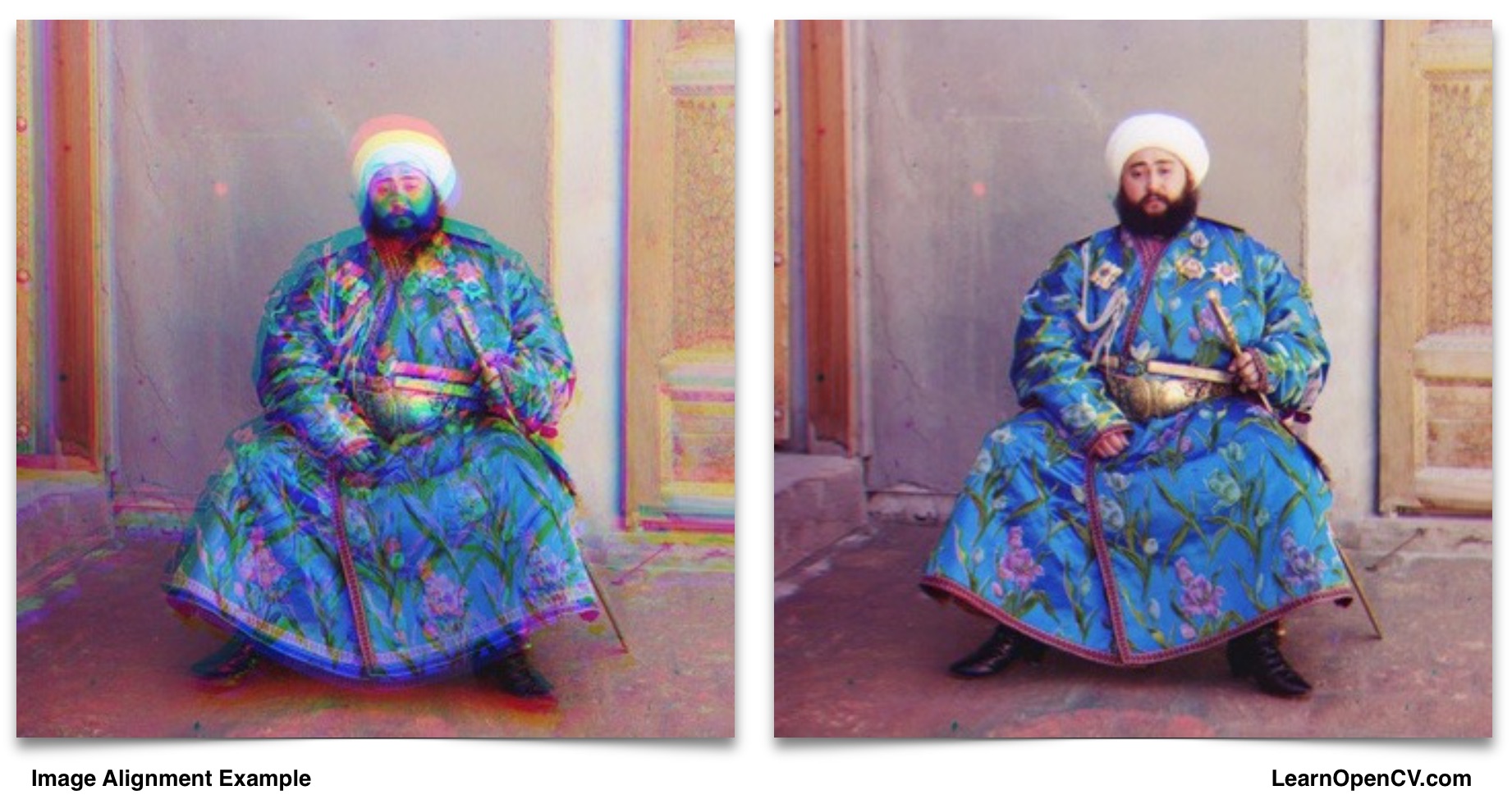

The image on the left is part of a historic collection of photographs called the Prokudin-Gorskii collection. The image was taken by a Russian photographer in the early 1900s using one of the early color cameras. The color channels of the image are misaligned because of the mechanical nature of the camera. The image on the right is a version of the same image with the channels brought into alignment using a function available in OpenCV 3.

You can download the code for all the examples shown in this post from the download section located at the bottom of the post.

In this post we will embark on a fun and entertaining journey into the history of color photography while learning about image alignment in the process. This post is dedicated to the early pioneers of color photography who have enabled us to capture and store our memories in color.

A Brief and Incomplete History of Color Photography

The idea that you can take three different photos using three primary color filters (Red, Green, Blue) and combine them to obtain a color image was first proposed by James Clerk Maxwell ( yes, the Maxwell ) in 1855. Six years later, in 1861, English photographer Thomas Sutton produced the first color photograph by putting Maxwell’s theory into practice. He took three grayscale images of a Ribbon ( see Figure 2 ), using three different color filters and then superimposed the images using three projectors equipped with the same color filters. The photographic material available at that time was sensitive to blue light, but not very sensitive to green light, and almost insensitive to red light. Although revolutionary for its time, the method was not practical.

By the early 1900s, the sensitivity of photographic material had improved substantially, and in the first decade of the century a few different practical cameras were available for color photography. Perhaps the most popular among these cameras, the Autochrome, was invented by the Lumière brothers.

A competing camera system was designed by Adolf Miethe and built by Wilhelm Bermpohl, and was called Professor Dr. Miethe’s Dreifarben-Camera. In German the word “Dreifarben” means tri-color. This camera, also referred to as the Miethe-Bermpohl camera, had a long glass plate on which the three images were acquired with three different filters. A very good description and an image of the camera can be found here.

In the hands of Sergey Prokudin-Gorskii the Miethe-Bermpohl camera ( or a variant of it ) would secure a special place in Russian history . In 1909, with funding from Tsar Nicholas II, Prokudin-Gorskii started a decade long journey of capturing Russia in color! He took more than 10,000 color photographs. The most notable among his photographs is the only known color photo of Leo Tolstoy.

Fortunately for us, the Library of Congress purchased a large collection of Prokudin-Gorskii’s photographs in 1948. They are now in the public domain and we get a chance to reconstruct Russian history in color!

It is not trivial to generate a color image from these black and white images (shown in Figure 3). The Miethe-Bermpohl camera was a mechanical device that took these three images over a span of 2-6 seconds. Therefore the three channels were often mis-aligned, and naively stacking them up leads to a pretty unsatisfactory result shown in Figure 1.

Well, it’s time for some vision magic!

Motion models in OpenCV

In a typical image alignment problem we have two images of a scene, and they are related by a motion model. Different image alignment algorithms aim to estimate the parameters of these motion models using different tricks and assumptions. Once these parameters are known, warping one image so that it aligns with the other is straight forward.

Let’s quickly see what these motion models look like.

The OpenCV constants that represent these models have a prefix MOTION_ and are shown inside the brackets.

- Translation ( MOTION_TRANSLATION ) : The first image can be shifted ( translated ) by (x , y) to obtain the second image. There are only two parameters x and y that we need to estimate.

- Euclidean ( MOTION_EUCLIDEAN ) : The first image is a rotated and shifted version of the second image. So there are three parameters — x, y and angle . You will notice in Figure 4, when a square undergoes Euclidean transformation, the size does not change, parallel lines remain parallel, and right angles remain unchanged after transformation.

- Affine ( MOTION_AFFINE ) : An affine transform is a combination of rotation, translation ( shift ), scale, and shear. This transform has six parameters. When a square undergoes an Affine transformation, parallel lines remain parallel, but lines meeting at right angles no longer remain orthogonal.

- Homography ( MOTION_HOMOGRAPHY ) : All the transforms described above are 2D transforms. They do not account for 3D effects. A homography transform on the other hand can account for some 3D effects ( but not all ). This transform has 8 parameters. A square when transformed using a Homography can change to any quadrilateral.

In OpenCV an Affine transform is stored in a 2 x 3 sized matrix. Translation and Euclidean transforms are special cases of the Affine transform. In Translation, the rotation, scale and shear parameters are zero, while in a Euclidean transform the scale and shear parameters are zero. So Translation and Euclidean transforms are also stored in a 2 x 3 matrix. Once this matrix is estimated ( as we shall see in the next section ), the images can be brought into alignment using the function warpAffine.

Homography, on the other hand, is stored in a 3 x 3 matrix. Once the Homography is estimated, the images can be brought into alignment using warpPerspective.

Image Registration using Enhanced Correlation Coefficient (ECC) Maximization

The ECC image alignment algorithm introduced in OpenCV 3 is based on a 2008 paper titled Parametric Image Alignment using Enhanced Correlation Coefficient Maximization by Georgios D. Evangelidis and Emmanouil Z. Psarakis. They propose using a new similarity measure called Enhanced Correlation Coefficient (ECC) for estimating the parameters of the motion model. There are two advantages of using their approach.

- Unlike the traditional similarity measure of difference in pixel intensities, ECC is invariant to photometric distortions in contrast and brightness.

- Although the objective function is nonlinear function of the parameters, the iterative scheme they develop to solve the optimization problem is linear. In other words, they took a problem that looks computationally expensive on the surface and found a simpler way to solve it iteratively.

findTransformECC Example in OpenCV

In OpenCV 3, the motion model for ECC image alignment is estimated using the function findTransformECC . Here are the steps for using findTransformECC

- Read the images.

- Convert them to grayscale.

- Pick a motion model you want to estimate.

- Allocate space (warp_matrix) to store the motion model.

- Define a termination criteria that tells the algorithm when to stop.

- Estimate the warp matrix using findTransformECC.

- Apply the warp matrix to one of the images to align it with the other image.

Let’s dive into the code to see how to use it. The comments provide an explanation.

C++ code

// Read the images to be aligned Mat im1 = imread("images/image1.jpg"); Mat im2 = imread("images/image2.jpg"); // Convert images to gray scale; Mat im1_gray, im2_gray; cvtColor(im1, im1_gray, CV_BGR2GRAY); cvtColor(im2, im2_gray, CV_BGR2GRAY); // Define the motion model const int warp_mode = MOTION_EUCLIDEAN; // Set a 2x3 or 3x3 warp matrix depending on the motion model. Mat warp_matrix; // Initialize the matrix to identity if ( warp_mode == MOTION_HOMOGRAPHY ) warp_matrix = Mat::eye(3, 3, CV_32F); else warp_matrix = Mat::eye(2, 3, CV_32F); // Specify the number of iterations. int number_of_iterations = 5000; // Specify the threshold of the increment // in the correlation coefficient between two iterations double termination_eps = 1e-10; // Define termination criteria TermCriteria criteria (TermCriteria::COUNT+TermCriteria::EPS, number_of_iterations, termination_eps); // Run the ECC algorithm. The results are stored in warp_matrix. findTransformECC( im1_gray, im2_gray, warp_matrix, warp_mode, criteria ); // Storage for warped image. Mat im2_aligned; if (warp_mode != MOTION_HOMOGRAPHY) // Use warpAffine for Translation, Euclidean and Affine warpAffine(im2, im2_aligned, warp_matrix, im1.size(), INTER_LINEAR + WARP_INVERSE_MAP); else // Use warpPerspective for Homography warpPerspective (im2, im2_aligned, warp_matrix, im1.size(),INTER_LINEAR + WARP_INVERSE_MAP); // Show final result imshow("Image 1", im1); imshow("Image 2", im2); imshow("Image 2 Aligned", im2_aligned); waitKey(0);

Python code

# Read the images to be aligned im1 = cv2.imread("images/image1.jpg"); im2 = cv2.imread("images/image2.jpg"); # Convert images to grayscale im1_gray = cv2.cvtColor(im1,cv2.COLOR_BGR2GRAY) im2_gray = cv2.cvtColor(im2,cv2.COLOR_BGR2GRAY) # Find size of image1 sz = im1.shape # Define the motion model warp_mode = cv2.MOTION_TRANSLATION # Define 2x3 or 3x3 matrices and initialize the matrix to identity if warp_mode == cv2.MOTION_HOMOGRAPHY : warp_matrix = np.eye(3, 3, dtype=np.float32) else : warp_matrix = np.eye(2, 3, dtype=np.float32) # Specify the number of iterations. number_of_iterations = 5000; # Specify the threshold of the increment # in the correlation coefficient between two iterations termination_eps = 1e-10; # Define termination criteria criteria = (cv2.TERM_CRITERIA_EPS | cv2.TERM_CRITERIA_COUNT, number_of_iterations, termination_eps) # Run the ECC algorithm. The results are stored in warp_matrix. (cc, warp_matrix) = cv2.findTransformECC (im1_gray,im2_gray,warp_matrix, warp_mode, criteria) if warp_mode == cv2.MOTION_HOMOGRAPHY : # Use warpPerspective for Homography im2_aligned = cv2.warpPerspective (im2, warp_matrix, (sz[1],sz[0]), flags=cv2.INTER_LINEAR + cv2.WARP_INVERSE_MAP) else : # Use warpAffine for Translation, Euclidean and Affine im2_aligned = cv2.warpAffine(im2, warp_matrix, (sz[1],sz[0]), flags=cv2.INTER_LINEAR + cv2.WARP_INVERSE_MAP); # Show final results cv2.imshow("Image 1", im1) cv2.imshow("Image 2", im2) cv2.imshow("Aligned Image 2", im2_aligned) cv2.waitKey(0)

Reconstructing Prokudin-Gorskii Collection in Color

The above image is also part of the Prokudin-Gorskii collection. On the left is the image with unaligned RGB channels, and on the right is the image after alignment. This photo also shows that by the early 20th century the photographic plates were sensitive enough to beautifully capture a wide color spectrum. The vivid red, blue and green colors are stunning.

The code in the previous section can be used to solve a toy problem. However, if you use it to reconstruct the above image, you will be sorely disappointed. Computer Vision in real world is tough, things often do not really work out of the box.

The problem is that the red, green, and blue channels in an image are not as strongly correlated if in pixel intensities as you might guess. For example, check out the blue gown the Emir is wearing in Figure 3. It looks quite different in the three channels. However, even though the intensities are different, something in the three channels is similar because a human eye can easily tell that it is the same scene.

It turns out that the three channels of the image are more strongly correlated in the gradient domain. This is not surprising because even though the intensities may be different in the three channels, the edge map generated by object and color boundaries are consistent.

An approximation of the image gradient is given below

C++

using namespace cv;

using namespace std;

Mat GetGradient(Mat src_gray)

{

Mat grad_x, grad_y;

Mat abs_grad_x, abs_grad_y;

int scale = 1;

int delta = 0;

int ddepth = CV_32FC1; ;

// Calculate the x and y gradients using Sobel operator

Sobel( src_gray, grad_x, ddepth, 1, 0, 3, scale, delta, BORDER_DEFAULT );

convertScaleAbs( grad_x, abs_grad_x );

Sobel( src_gray, grad_y, ddepth, 0, 1, 3, scale, delta, BORDER_DEFAULT );

convertScaleAbs( grad_y, abs_grad_y );

// Combine the two gradients

Mat grad;

addWeighted( abs_grad_x, 0.5, abs_grad_y, 0.5, 0, grad );

return grad;

}

Python

import cv2 import numpy as np def get_gradient(im) : # Calculate the x and y gradients using Sobel operator grad_x = cv2.Sobel(im,cv2.CV_32F,1,0,ksize=3) grad_y = cv2.Sobel(im,cv2.CV_32F,0,1,ksize=3) # Combine the two gradients grad = cv2.addWeighted(np.absolute(grad_x), 0.5, np.absolute(grad_y), 0.5, 0) return grad

Now we are ready to reconstruct a piece of Russian history! Read the inline comments to follow

C++ Code

using namespace cv;

using namespace std;

// Read 8-bit color image.

// This is an image in which the three channels are

// concatenated vertically.

Mat im = imread("images/emir.jpg", IMREAD_GRAYSCALE);

// Find the width and height of the color image

Size sz = im.size();

int height = sz.height / 3;

int width = sz.width;

// Extract the three channels from the gray scale image

vector<mat>channels;

channels.push_back(im( Rect(0, 0, width, height)));

channels.push_back(im( Rect(0, height, width, height)));

channels.push_back(im( Rect(0, 2*height, width, height))); </mat>

// Merge the three channels into one color image

Mat im_color;

merge(channels,im_color);

// Set space for aligned image.

vector<mat> aligned_channels;

aligned_channels.push_back(Mat(height, width, CV_8UC1));

aligned_channels.push_back(Mat(height, width, CV_8UC1));</mat>

// The blue and green channels will be aligned to the red channel.

// So copy the red channel

aligned_channels.push_back(channels[2].clone());

// Define motion model

const int warp_mode = MOTION_AFFINE;

// Set space for warp matrix.

Mat warp_matrix;

// Set the warp matrix to identity.

if ( warp_mode == MOTION_HOMOGRAPHY )

warp_matrix = Mat::eye(3, 3, CV_32F);

else

warp_matrix = Mat::eye(2, 3, CV_32F);

// Set the stopping criteria for the algorithm.

int number_of_iterations = 5000;

double termination_eps = 1e-10;

TermCriteria criteria(TermCriteria::COUNT+TermCriteria::EPS,

number_of_iterations, termination_eps);

// Warp the blue and green channels to the red channel

for ( int i = 0; i &lt; 2; i++)

{

double cc = findTransformECC (

GetGradient(channels[2]),

GetGradient(channels[i]),

warp_matrix,

warp_mode,

criteria

);

if (warp_mode == MOTION_HOMOGRAPHY)

// Use Perspective warp when the transformation is a Homography

warpPerspective (channels[i], aligned_channels[i], warp_matrix, aligned_channels[0].size(), INTER_LINEAR + WARP_INVERSE_MAP);

else

// Use Affine warp when the transformation is not a Homography

warpAffine(channels[i], aligned_channels[i], warp_matrix, aligned_channels[0].size(), INTER_LINEAR + WARP_INVERSE_MAP);

}

// Merge the three channels

Mat im_aligned;

merge(aligned_channels, im_aligned);

// Show final output

imshow("Color Image", im_color);

imshow("Aligned Image", im_aligned);

waitKey(0);

Python Code

import cv2 import numpy as np if __name__ == '__main__': # Read 8-bit color image. # This is an image in which the three channels are # concatenated vertically. im = cv2.imread("images/emir.jpg", cv2.IMREAD_GRAYSCALE); # Find the width and height of the color image sz = im.shape print sz height = int(sz[0] / 3); width = sz[1] # Extract the three channels from the gray scale image # and merge the three channels into one color image im_color = np.zeros((height,width,3), dtype=np.uint8 ) for i in xrange(0,3) : im_color[:,:,i] = im[ i * height:(i+1) * height,:] # Allocate space for aligned image im_aligned = np.zeros((height,width,3), dtype=np.uint8 ) # The blue and green channels will be aligned to the red channel. # So copy the red channel im_aligned[:,:,2] = im_color[:,:,2] # Define motion model warp_mode = cv2.MOTION_HOMOGRAPHY # Set the warp matrix to identity. if warp_mode == cv2.MOTION_HOMOGRAPHY : warp_matrix = np.eye(3, 3, dtype=np.float32) else : warp_matrix = np.eye(2, 3, dtype=np.float32) # Set the stopping criteria for the algorithm. criteria = (cv2.TERM_CRITERIA_EPS | cv2.TERM_CRITERIA_COUNT, 5000, 1e-10) # Warp the blue and green channels to the red channel for i in xrange(0,2) : (cc, warp_matrix) = cv2.findTransformECC (get_gradient(im_color[:,:,2]), get_gradient(im_color[:,:,i]),warp_matrix, warp_mode, criteria) if warp_mode == cv2.MOTION_HOMOGRAPHY : # Use Perspective warp when the transformation is a Homography im_aligned[:,:,i] = cv2.warpPerspective (im_color[:,:,i], warp_matrix, (width,height), flags=cv2.INTER_LINEAR + cv2.WARP_INVERSE_MAP) else : # Use Affine warp when the transformation is not a Homography im_aligned[:,:,i] = cv2.warpAffine(im_color[:,:,i], warp_matrix, (width, height), flags=cv2.INTER_LINEAR + cv2.WARP_INVERSE_MAP); print warp_matrix # Show final output cv2.imshow("Color Image", im_color) cv2.imshow("Aligned Image", im_aligned) cv2.waitKey(0)

Further Improvements

If you were to actually make a commercial image registration product, you would need to do a lot more than what my code does. For example, this code may fail when the mis-alignment is large. In such cases you would need to estimate the warp parameters on a lower resolution version of the image, and initialize the warp matrix for higher resolution versions using the parameters estimated in the low-res version. Furthermore, findTransformECC estimates a single global transform for alignment. This motion model is clearly not adequate when there is local motion in the images ( e.g. the subject moved a bit in the two photos). In such cases, an additional local alignment needs be done using say an optical flow based approach.

You can comment on this post to let me know if you would like a follow up post where I discuss these improvements with supporting code ( obviously! ).

Credits

I got the idea of using the Prokudin-Gorskii collection to demonstrate image alignment from Dr. Alexei Efros’ class at the University of California, Berkeley.

The Ribbon image was obtained from wikiepedia and is in the public domain.

The Prokudin-Gorskii collection is also in the public domain, and images were obtained from the Library of Congress.