Generative Models

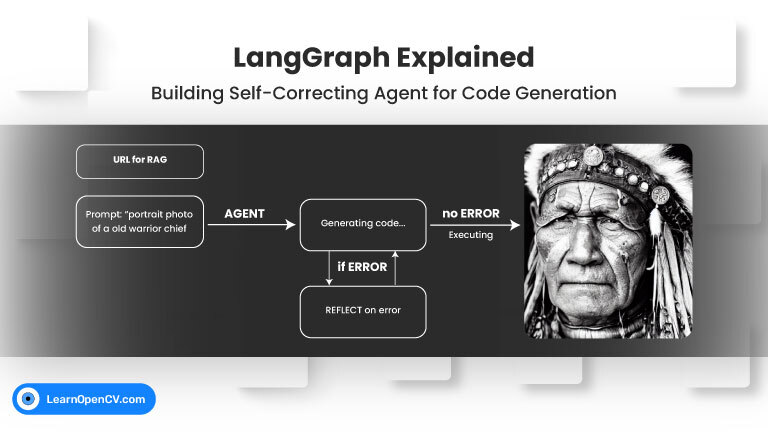

Welcome back to our LangGraph series! In our previous post, we explored the fundamental concepts of LangGraph by building a Visual Web Browser Agent that could navigate, see, scroll, and summarize

Developing intelligent agents that use LLMs such as GPT-4o and Gemini to perform multi-step tasks, adapt to changing information, and make decisions is a core challenge in AI development.

The ultimate goal for many in artificial intelligence is to build agents that can perceive, reason, and act in our complex physical world. Meta AI has made a significant stride

NVIDIA’s Cosmos Reason1 is a family of Vision Language Models trained to understand the physical world and make decisions for embodied reasoning. What makes Cosmos Reason1, as a promising contender

To develop AI systems that are genuinely capable in real-world settings, we need models that can process and integrate both visual and textual information with high precision. This is the

With a performance that blurs the line between silicon and carbon intelligence, ChatGPT (o4-mini-high) has stormed the legendary JEE Advanced battlefield, leaping past 1.5 million brilliant minds to claim a

The landscape of Artificial Intelligence is rapidly evolving towards models that can seamlessly understand and generate information across multiple modalities, like text and images. Salesforce AI Research has introduced BLIP3-o,

Ever watched an AI-generated video and wondered how it was made? Or perhaps dreamed of creating your own dynamic scenes, only to be overwhelmed by the complexity or the need

Unsloth has emerged as a game-changer in the world of large language model (LLM) fine-tuning, addressing what has long been a resource-intensive and technically complex challenge. Adapting models like LLaMA,