SimLingo is a remarkable model that combines autonomous driving, language understanding, and instruction-aware control, all in one unified, camera-only framework. It not only achieved top rankings on the CARLA Leaderboard 2.0 and Bench2Drive but also secured first place in the CARLA Challenge 2024.

By building on the InternVL2-1B vision-language model (an InternViT vision encoder paired with the Qwen2-0.5B language model), SimLingo achieves strong results without relying on costly sensors such as LiDAR or radar.

- All-in-One Perception, Understanding, and Action

- Teaching Language to Drive: How Action Dreaming Works

- Inside the Model: Chain-of-Thought Commentary

- High-Performance Results

- Complete Open-Source Ecosystem

- Why SimLingo Stands Out

- Where It Could Go Next

- Code Implementation

- Conclusion

- References

All-in-One Perception, Understanding, and Action

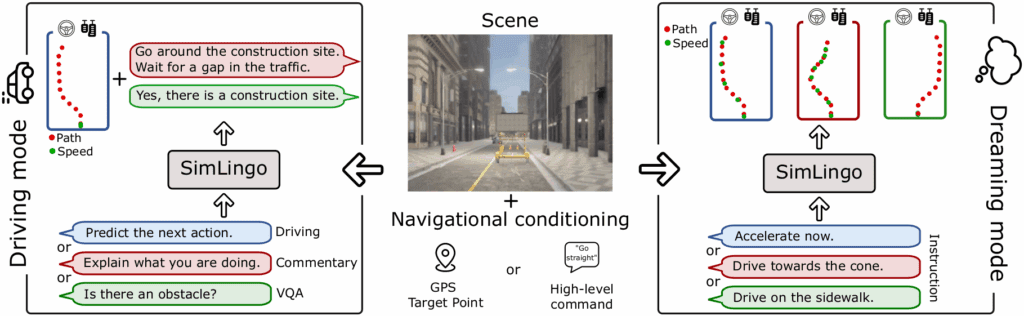

Unlike conventional systems that separate perception, planning, and reasoning, SimLingo combines them in one model. It processes live camera input, outputs driving controls, answers questions about the scene, and follows language instructions. This three-part capability (drive, understand, explain) is packed into a single real-time model, enabling richer interaction and adaptability on the road.

Teaching Language to Drive: How Action Dreaming Works

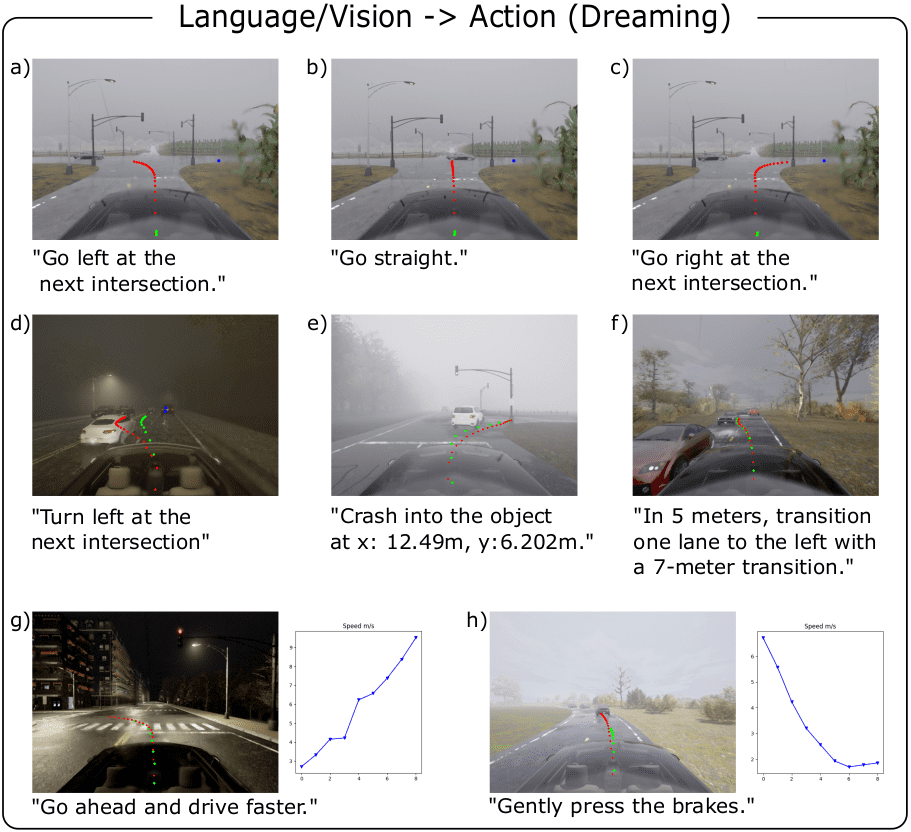

SimLingo's key innovation is Action Dreaming. In standard imitation learning, a model observes camera frames and reproduces the expert's actions. Because each frame in the expert data is paired with exactly one action, the model can predict that action from the image alone and learn to ignore any accompanying language instruction. To prevent this, SimLingo augments each input frame with multiple alternative future trajectories, such as turning left, speeding up, or braking.

Each possible path gets paired with a unique instruction such as “turn left” or “slow down.” The model must then generate the correct action based on both vision and instruction. In essence, the model is forced to truly ground its behavior in the language it hears, rather than relying on visual shortcuts. This makes SimLingo not just visually accurate, but also linguistically sensible.
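To make the idea concrete, here is a minimal Python sketch of how one frame could be expanded into several instruction-conditioned training samples. Everything in it is a hypothetical illustration: the `DreamSample` and `dream` names, the instruction strings, the speed multipliers, and the convention that +y points left are assumptions, and the paths are simple analytic ones rather than the simulator-generated labels SimLingo actually uses.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical illustration of Action Dreaming-style augmentation.
# Waypoints are (x, y) in metres in the ego frame; x points forward and
# +y is ASSUMED to point left. This is not SimLingo's actual data format.
Waypoint = Tuple[float, float]


@dataclass(frozen=True)
class DreamSample:
    frame_id: str                    # the camera frame shared by all variants
    instruction: str                 # language instruction for this variant
    waypoints: Tuple[Waypoint, ...]  # target future path for this instruction


def straight(speed: float, n: int = 4, dt: float = 0.5) -> Tuple[Waypoint, ...]:
    """Constant-speed path straight ahead, sampled every dt seconds."""
    return tuple((speed * dt * (i + 1), 0.0) for i in range(n))


def lane_shift(speed: float, offset: float, n: int = 4, dt: float = 0.5) -> Tuple[Waypoint, ...]:
    """Constant-speed path that drifts linearly sideways by `offset` metres."""
    return tuple((speed * dt * (i + 1), offset * (i + 1) / n) for i in range(n))


def dream(frame_id: str, ego_speed: float) -> List[DreamSample]:
    """Pair ONE frame with several (instruction, trajectory) targets."""
    candidates = {
        "keep driving at the current speed": straight(ego_speed),
        "speed up": straight(ego_speed * 1.5),
        "slow down": straight(ego_speed * 0.5),
        "stop": straight(0.0),
        "change to the left lane": lane_shift(ego_speed, 3.5),
    }
    return [DreamSample(frame_id, text, path) for text, path in candidates.items()]


for sample in dream("frame_000123", ego_speed=8.0):
    print(f"{sample.instruction!r:40} -> {sample.waypoints}")
```

Because the same `frame_id` now maps to five different targets, a model trained on such samples cannot minimise its loss from the image alone; it has to read the instruction, which is exactly the grounding effect described above.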

Inside the Model: Chain-of-Thought Commentary

Before deciding on a driving action, SimLingo generates a short reasoning caption—like “Pedestrian crossing ahead, slowing down” or “Lane is clear, maintaining speed.” This chain-of-thought commentary adds significant transparency. Developers and users can now trace how the model thinks in each moment, making debugging and validation more intuitive. Though commentary didn’t significantly impact performance in initial studies, it’s a powerful layer for explainability and future safety monitoring.
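One way the commentary could support debugging and safety monitoring is an automatic consistency check between what the model says and what it does. The sketch below is hypothetical: the `Step` log record, the keyword list, and the speed-based rule are illustrative assumptions, not part of the SimLingo codebase.

```python
from dataclasses import dataclass
from typing import List


# Hypothetical log record; SimLingo does not define this type.
@dataclass
class Step:
    t: float             # simulation time in seconds
    commentary: str      # reasoning caption produced before the action
    prev_speed: float    # ego speed at the previous step, m/s
    target_speed: float  # speed implied by the predicted action, m/s


# Assumed keyword stems signalling that the model intends to decelerate.
SLOW_KEYWORDS = ("slow", "stop", "brak", "yield")


def flag_inconsistent(trace: List[Step]) -> List[Step]:
    """Return steps where the commentary announces slowing but the action does not slow."""
    flagged = []
    for step in trace:
        text = step.commentary.lower()
        announces_slowing = any(k in text for k in SLOW_KEYWORDS)
        if announces_slowing and step.target_speed >= step.prev_speed:
            flagged.append(step)
    return flagged


trace = [
    Step(0.0, "Lane is clear, maintaining speed.", 8.0, 8.0),
    Step(0.5, "Pedestrian crossing ahead, slowing down.", 8.0, 5.0),
    Step(1.0, "Red light ahead, stopping.", 5.0, 6.0),  # says "stopping" but speeds up
]
for step in flag_inconsistent(trace):
    print(f"t={step.t:.1f}s: {step.commentary!r} announces slowing, "
          f"but target speed is {step.target_speed} m/s (was {step.prev_speed} m/s)")
```

Simple keyword matching is crude, but it shows how a textual explanation turns into something a monitoring tool can test against the actual control output.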

High-Performance Results

- Leading Driving Scores: SimLingo topped the CARLA Leaderboard 2.0 and Bench2Drive benchmarks, which include hundreds of realistic, closed-loop driving scenarios such as emergency braking, merging, overtaking, and sign recognition.

- Language Understanding: For visual question answering on driving scenes, SimLingo scored nearly 79% in GPT-based evaluations. It also followed language instructions successfully in around 81% of cases, handling directives such as lane changes or obstacle avoidance.

- Unified Performance: It maintained top-tier driving results while also performing well on the language tasks, showing that adding vision-language capabilities need not come at the cost of driving performance.

These results demonstrate SimLingo’s ability to perform end-to-end tasks without needing extra sensors or separate modules.
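For readers unfamiliar with the driving-score metric behind these rankings: on the CARLA leaderboard, a route's driving score is its route completion (in percent) multiplied by an infraction penalty that starts at 1.0 and is multiplied by a fixed coefficient for every infraction, and the global score is the mean over all routes. The Python sketch below illustrates that calculation. It covers only a subset of infraction types, and the coefficients are taken from the public leaderboard documentation; treat them as indicative and verify them against the official page.

```python
from typing import Dict, List

# Penalty coefficient per infraction type (subset of the CARLA leaderboard
# definition; verify against the official documentation before relying on them).
PENALTIES: Dict[str, float] = {
    "collision_pedestrian": 0.50,
    "collision_vehicle": 0.60,
    "collision_static": 0.65,
    "red_light": 0.70,
    "stop_sign": 0.80,
}


def route_driving_score(route_completion: float, infractions: Dict[str, int]) -> float:
    """Driving score of one route: completion (0-100) times the infraction penalty."""
    penalty = 1.0
    for kind, count in infractions.items():
        penalty *= PENALTIES[kind] ** count  # each infraction multiplies the penalty
    return route_completion * penalty


def global_driving_score(routes: List[dict]) -> float:
    """Mean of the per-route driving scores."""
    scores = [route_driving_score(r["completion"], r["infractions"]) for r in routes]
    return sum(scores) / len(scores)


routes = [
    {"completion": 100.0, "infractions": {}},                           # clean run: 100
    {"completion": 100.0, "infractions": {"collision_pedestrian": 1}},  # 100 * 0.5 = 50
    {"completion": 80.0, "infractions": {"collision_vehicle": 2}},      # 80 * 0.6**2 = 28.8
]
print(f"{global_driving_score(routes):.2f}")  # prints 59.60
```

The multiplicative penalty is why a single collision can cost far more than a slightly incomplete route, which makes a high score on these benchmarks demanding.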

Complete Open-Source Ecosystem

SimLingo is fully open source with a complete ecosystem for researchers and practitioners:

- Over 3.3 million samples collected in the CARLA simulator (the Leaderboard 2.0 setup), covering high-resolution images, optional LiDAR, object annotations, vehicle state, question-answer pairs, commentary, and multiple instruction-to-action trajectories.

- Ready-to-run scripts for data collection (using a rule-based expert called PDM-Lite), language annotation, dreaming-based augmentation, model training, and evaluation in closed-loop simulations.

- Pretrained models released in June 2025, along with inference code and evaluation tools, making it simple to replicate or build upon the results.

Installation involves cloning the repo, setting up CARLA, installing dependencies via conda, downloading the dataset from Hugging Face, and running training or evaluation scripts.

Why SimLingo Stands Out

- Cost-Efficient Deployment: By relying only on cameras, SimLingo avoids the cost and calibration effort of LiDAR or radar while still achieving benchmark-leading driving performance.

- Explainability and Trust: The commentary adds a narrative layer to each action, helping with debugging, transparency, and potentially regulatory compliance.

- Human Interaction Ready: Question answering and instruction following enable human-in-the-loop scenarios, such as ride-sharing cars, collaborative fleet systems, and situational assistance.

- Framework for Broader Robotics: The same recipe (vision-language training, action dreaming, and reasoning commentary) could carry over to other robotics domains, including drones, home assistants, and industrial automation.

Where It Could Go Next

Despite its strengths, SimLingo has so far been developed and evaluated only in simulation. Moving to real-world driving would introduce challenges such as real-world weather and lighting, sensor noise, and unpredictable agents. The chain-of-thought commentary, although valuable for interpretability, has not yet improved driving performance itself. With more data and tighter integration, however, it could become central to decision-making.

Another avenue involves enriching language instructions with real-world speech and colloquial variations—making SimLingo more adaptable to natural interaction beyond scripted commands.

Code Implementation

The open-source release makes SimLingo well suited to experimentation and academic research.

Installation

Add the following environment variables to your ~/.bashrc file:

export CARLA_ROOT=/path/to/CARLA/root

export WORK_DIR=/path/to/simlingo

export PYTHONPATH=$PYTHONPATH:${CARLA_ROOT}/PythonAPI/carla

export SCENARIO_RUNNER_ROOT=${WORK_DIR}/scenario_runner

export LEADERBOARD_ROOT=${WORK_DIR}/leaderboard

export PYTHONPATH="${CARLA_ROOT}/PythonAPI/carla/":"${SCENARIO_RUNNER_ROOT}":"${LEADERBOARD_ROOT}":${PYTHONPATH}
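After reloading the shell (for example with `source ~/.bashrc`), a quick check can catch typos in these paths before CARLA or the leaderboard fails with a less obvious error. The helper below is hypothetical and not part of the SimLingo repository; it only checks that the variables above are set, point to existing directories, and appear on PYTHONPATH.

```python
import os
from typing import List, Mapping, Optional

REQUIRED = ("CARLA_ROOT", "WORK_DIR", "SCENARIO_RUNNER_ROOT", "LEADERBOARD_ROOT")


def check_env(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return human-readable problems with the setup (empty list if it looks fine)."""
    env = os.environ if env is None else env
    problems: List[str] = []
    for name in REQUIRED:
        value = env.get(name)
        if not value:
            problems.append(f"{name} is not set")
        elif not os.path.isdir(value):
            problems.append(f"{name}={value} is not an existing directory")

    # Normalise entries so ".../carla/" and ".../carla" compare equal.
    on_path = {os.path.normpath(p) for p in env.get("PYTHONPATH", "").split(os.pathsep) if p}
    expected: List[str] = []
    if env.get("CARLA_ROOT"):
        expected.append(os.path.join(env["CARLA_ROOT"], "PythonAPI", "carla"))
    for name in ("SCENARIO_RUNNER_ROOT", "LEADERBOARD_ROOT"):
        if env.get(name):
            expected.append(env[name])
    for path in expected:
        if os.path.normpath(path) not in on_path:
            problems.append(f"{path} is missing from PYTHONPATH")
    return problems


for problem in check_env():
    print("WARNING:", problem)
```

If the script prints nothing, the variables are consistent with the exports above.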

Now, run the following commands:

git clone https://github.com/RenzKa/simlingo.git

cd simlingo

./setup_carla.sh

conda env create -f environment.yaml

conda activate simlingo

Download Dataset

The authors host the dataset on Hugging Face. It contains the driving data together with the VQA, commentary, and dreamer labels. You can download the whole dataset with git and Git LFS:

# Clone the repository

git clone https://huggingface.co/datasets/RenzKa/simlingo

# Navigate to the directory

cd simlingo

# Pull the LFS files

git lfs pull
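If `git lfs pull` is skipped or interrupted, the large archives remain small text pointer files, and extraction fails later with a confusing error. Git LFS pointer files start with the line `version https://git-lfs.github.com/spec/v1`, so a short scan can list archives that were not actually downloaded. This is an illustrative sketch rather than part of the dataset tooling, and it assumes the archives are `.tar.gz` files, matching the pattern used for single-file downloads.

```python
from pathlib import Path
from typing import List

# First line of every Git LFS pointer file (Git LFS pointer spec v1).
LFS_HEADER = b"version https://git-lfs.github.com/spec/v1"


def unpulled_lfs_files(root: str, pattern: str = "*.tar.gz") -> List[Path]:
    """Return files under `root` matching `pattern` that are still LFS pointers."""
    pointers = []
    for path in sorted(Path(root).rglob(pattern)):
        with path.open("rb") as f:
            head = f.read(len(LFS_HEADER))  # pointers are tiny; the header is enough
        if head == LFS_HEADER:
            pointers.append(path)
    return pointers


missing = unpulled_lfs_files(".")
print(f"{len(missing)} archive(s) still need `git lfs pull`")
for path in missing:
    print("  ", path)
```

Run it from inside the cloned dataset directory; any file it lists has not been fetched yet.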

Single files can also be downloaded:

wget https://huggingface.co/datasets/RenzKa/simlingo/resolve/main/[filename].tar.gz
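The same single-file download can be scripted from Python with the standard library, which is handy when fetching a few archives inside a larger pipeline. This sketch builds the `resolve/main` URL used by the wget command, saves the file, and extracts it with `tarfile`. The function names are hypothetical, and the archive name is left as a parameter because the actual file names are listed on the dataset page.

```python
import tarfile
import urllib.request
from pathlib import Path

BASE_URL = "https://huggingface.co/datasets/RenzKa/simlingo/resolve/main/"


def archive_url(filename: str) -> str:
    """URL of one archive in the dataset repo (same pattern as the wget command)."""
    return BASE_URL + filename


def download_and_extract(filename: str, out_dir: str = "data") -> Path:
    """Download one .tar.gz archive and extract it into out_dir."""
    target = Path(out_dir)
    target.mkdir(parents=True, exist_ok=True)
    archive = target / Path(filename).name  # save under the bare file name
    urllib.request.urlretrieve(archive_url(filename), archive)
    with tarfile.open(archive, mode="r:gz") as tar:
        tar.extractall(target)  # only extract archives from a source you trust
    return target
```

Call `download_and_extract(...)` with a file name copied from the dataset page; for many archives, the git and Git LFS route above is simpler.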

Collect Dataset (Optional)

The collect_dataset_slurm.py script generates custom training data with the PDM-Lite expert in CARLA.

Train the Model

Run the training shell script train_simlingo_seed1.sh to reproduce the experiments or tune hyperparameters.

Evaluate Driving and Language

The script below runs the agent in CARLA, reports driving metrics, and evaluates the language tasks.

python start_eval_simlingo.py --mode closed_loop

To run CARLA without a display window, which helps on headless servers or when GPU memory is tight, launch it with the -RenderOffScreen flag (./CarlaUE4.sh -RenderOffScreen). The results reported by the authors demonstrate the potential of SimLingo:
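If you prefer to start the simulator from a script, the sketch below launches `CarlaUE4.sh` with `-RenderOffScreen` and waits until the RPC port accepts connections before the evaluation connects. The helper names are hypothetical and port 2000 (CARLA's default RPC port) is an assumption; the SimLingo evaluation scripts may already start CARLA themselves, so check them before adding this step.

```python
import os
import socket
import subprocess
import time
from typing import List


def carla_command(carla_root: str, port: int = 2000, offscreen: bool = True) -> List[str]:
    """Build the CarlaUE4.sh command line."""
    cmd = [os.path.join(carla_root, "CarlaUE4.sh"), f"-carla-rpc-port={port}"]
    if offscreen:
        cmd.append("-RenderOffScreen")  # no window; useful on headless machines
    return cmd


def wait_for_port(host: str, port: int, timeout: float = 60.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=1.0):
                return True
        except OSError:
            time.sleep(1.0)  # server not up yet; retry
    return False


def launch_carla(carla_root: str, port: int = 2000, timeout: float = 60.0) -> subprocess.Popen:
    """Start CARLA and block until its RPC port is reachable."""
    server = subprocess.Popen(carla_command(carla_root, port))
    if not wait_for_port("localhost", port, timeout):
        server.terminate()
        raise RuntimeError(f"CARLA did not open port {port} within {timeout} s")
    return server
```

A typical pattern is `server = launch_carla(os.environ["CARLA_ROOT"])`, then running the evaluation, then `server.terminate()` once it finishes.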

Conclusion

SimLingo achieves a rare balance: top-tier autonomous driving, robust language understanding, and clear alignment between language and action, all in a vision-only framework.

The Action Dreaming approach ensures instructions actually shape behavior. Chain-of-thought commentary improves transparency. And comprehensive evaluation across driving and language benchmarks highlights its multi-faceted capabilities.

This makes SimLingo a strong foundation for future research and a promising candidate for deployment in interactive, explainable, camera-only automation systems. Whether you’re working on robot agents, vehicle autonomy, or interactive AI systems, SimLingo is an excellent model to experiment with, adapt, and extend.
