The common saying is, “A picture is worth a thousand words.” In this post, we will take that literally and try to find the words in a picture! In an earlier post about Text Recognition, we discussed how Tesseract works and how it can be used along with OpenCV for text detection and recognition. This time, we will look at a robust approach for Text Detection Using OpenCV, based on a recent paper: EAST: An Efficient and Accurate Scene Text Detector.

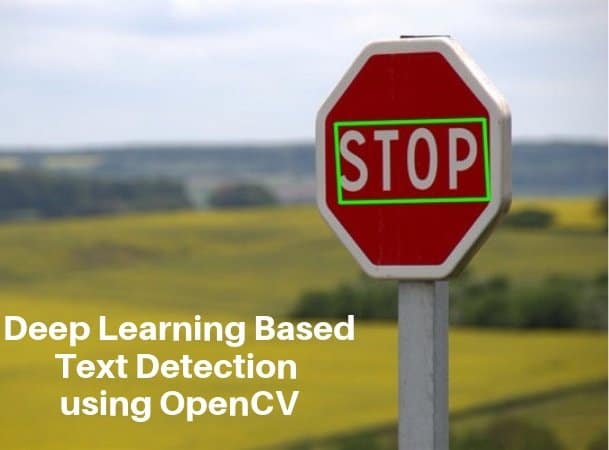

It should be noted that text detection is different from text recognition. In text detection, we only detect the bounding boxes around the text. In text recognition, we also find out what is written inside each box. For example, in the image below, text detection will give you the bounding box around the word, and text recognition will tell you that the box contains the word STOP.

Text recognition engines such as Tesseract perform better when they are given the bounding box around the text. This detector can therefore be used to find the bounding boxes before running text recognition.

The reference TensorFlow implementation of EAST reports the following speed:

- Graphics card: GTX 1080 Ti

- Network forward pass (fprop): ~50 ms

- NMS (C++): ~6 ms

- Overall: ~16 fps

The TensorFlow model has been ported for use with OpenCV, and OpenCV also provides sample code. We will discuss how it works step by step. You will need OpenCV 3.4.3 or later to run the code. Let’s detect some text in images!

The steps involved are as follows:

- Download the EAST Model

- Load the Model into memory

- Prepare the input image

- Forward pass the blob through the network

- Process the output

Step 1: Download EAST Model

The EAST model can be downloaded from this Dropbox link: https://www.dropbox.com/s/r2ingd0l3zt8hxs/frozen_east_text_detection.tar.gz?dl=1

Once the file (~85 MB) has been downloaded, extract it using:

tar -xvzf frozen_east_text_detection.tar.gz

You can also extract the contents using your operating system's file manager.

After extracting, copy the .pb model file to the working directory.

Step 2: Load the Network

We will use the cv::dnn::readNet (C++) or cv2.dnn.readNet() (Python) function to load the network into memory. It automatically detects the framework from the extension of the model file name. In our case, it is a .pb file, so it assumes that a TensorFlow network is to be loaded.

C++

Net net = readNet(model);

Python

net = cv.dnn.readNet(model)

Step 3: Prepare Input Image

We need to create a 4-D input blob for feeding the image to the network. This is done using the blobFromImage function.

C++

blobFromImage(frame, blob, 1.0, Size(inpWidth, inpHeight), Scalar(123.68, 116.78, 103.94), true, false);

Python

blob = cv.dnn.blobFromImage(frame, 1.0, (inpWidth, inpHeight), (123.68, 116.78, 103.94), True, False)

The arguments passed to this function are as follows. In the C++ version, the output blob is passed as the second argument, so the remaining arguments shift by one.

- The first argument is the image itself.

- The next argument is the scale factor by which each pixel value is multiplied. No scaling is needed here, so we keep it at 1.0.

- The next argument is the spatial size of the blob. The default input size used here is 320×320. You can experiment with other input sizes as well, but the width and height should be multiples of 32.

- We also specify the mean that is subtracted from each channel, since the model was trained on mean-subtracted images. The mean used is (123.68, 116.78, 103.94).

- The next argument specifies whether to swap the R and B channels. This is required because OpenCV loads images in BGR order, while the TensorFlow model expects RGB input.

- The last argument specifies whether to crop the image after resizing and take the center crop. We specify False, so the image is resized directly to the target size without cropping.
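To make the per-pixel effect of these arguments concrete, here is a minimal pure-Python sketch. It is not OpenCV's implementation, and the function name `blob_from_pixels` is hypothetical. It assumes the image has already been resized to the network input size, so resizing and cropping are left out. According to the OpenCV documentation, when swapRB is true the mean values are interpreted in RGB order. That is why (123.68, 116.78, 103.94), the commonly used ImageNet RGB means, is applied here after the channel swap.

```python
def blob_from_pixels(image, scale=1.0, mean=(123.68, 116.78, 103.94), swap_rb=True):
    """Pack an H x W image of (b, g, r) pixels into a 1 x 3 x H x W nested list.

    Sketch only: the image is assumed to be already resized to the network
    input size (e.g. 320x320), so no resizing or cropping is done here.
    """
    height, width = len(image), len(image[0])
    # One batch item, three channel planes, each H x W (NCHW layout).
    blob = [[[[0.0] * width for _ in range(height)] for _ in range(3)]]
    for y in range(height):
        for x in range(width):
            pixel = image[y][x]
            if swap_rb:
                pixel = (pixel[2], pixel[1], pixel[0])  # BGR -> RGB
            for c in range(3):
                # Subtract the per-channel mean, then apply the scale factor.
                blob[0][c][y][x] = (pixel[c] - mean[c]) * scale
    return blob
```

For a single BGR pixel (100, 120, 140), the sketch produces the RGB plane values 140 - 123.68, 120 - 116.78 and 100 - 103.94. It also reorders the data from interleaved pixels into separate channel planes, which is the 4-D layout the network consumes.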

Step 4: Forward Pass

Now that we have prepared the input, we will pass it through the network. The network has two outputs: one gives the confidence score of each detected box, and the other gives the geometry of the text box. They are produced by the following layers:

- feature_fusion/Conv_7/Sigmoid (scores)

- feature_fusion/concat_3 (geometry)

This is specified in the code as follows:

C++

std::vector<String> outputLayers(2);

outputLayers[0] = "feature_fusion/Conv_7/Sigmoid";

outputLayers[1] = "feature_fusion/concat_3";

Python

outputLayers = []

outputLayers.append("feature_fusion/Conv_7/Sigmoid")

outputLayers.append("feature_fusion/concat_3")

Next, we get the output by passing the input image through the network. As discussed earlier, the output consists of two parts: scores and geometry.

C++

std::vector<Mat> output;

net.setInput(blob);

net.forward(output, outputLayers);

Mat scores = output[0];

Mat geometry = output[1];

Python

net.setInput(blob)

output = net.forward(outputLayers)

scores = output[0]

geometry = output[1]
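It helps to know what shapes to expect for scores and geometry. In the EAST design, and in the decoder of OpenCV's sample, both maps are a quarter of the input resolution. The geometry map has five channels: the distances from each cell to the top, right, bottom and left edges of its box, plus a rotation angle. The hypothetical helper below only computes the shapes you should expect for a given blob size. Its multiple-of-32 check is an assumption taken from the OpenCV sample's guidance on input sizes.

```python
def east_output_shapes(inp_width, inp_height):
    """Expected (scores, geometry) shapes for a given blob size (sketch)."""
    # Assumption from the OpenCV sample: input sizes should be multiples of 32.
    if inp_width % 32 or inp_height % 32:
        raise ValueError("input width and height should be multiples of 32")
    # The score and geometry maps are 4x smaller than the input in each dimension.
    grid_h, grid_w = inp_height // 4, inp_width // 4
    scores_shape = (1, 1, grid_h, grid_w)    # one text confidence per cell
    geometry_shape = (1, 5, grid_h, grid_w)  # top, right, bottom, left distances + angle
    return scores_shape, geometry_shape
```

With the default 320×320 input, this gives an 80×80 grid for both outputs. Each grid cell therefore corresponds to a 4×4 patch of the resized image.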

Step 5: Process The Output

As discussed earlier, we use the outputs of both layers (i.e. geometry and scores) to decode the positions of the text boxes along with their orientation. Note that decode is a helper function defined in the OpenCV sample code, not a function of the OpenCV library itself. The network produces many overlapping candidates for each piece of text, so we need to keep only the most confident box among them. This is done using Non-Maximum Suppression.

Decode

C++

std::vector<RotatedRect> boxes;

std::vector<float> confidences;

decode(scores, geometry, confThreshold, boxes, confidences);

Python

[boxes, confidences] = decode(scores, geometry, confThreshold)
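The decoding step can be sketched in pure Python as follows. The function `decode_east_outputs` is a hypothetical name, and the code is a simplified re-implementation modeled on the decode helper in OpenCV's Python sample, not that helper itself. Two details are assumptions carried over from the sample: the geometry channels are ordered (top, right, bottom, left, angle), and each output cell maps to a 4-pixel stride in the input. Each detection is returned as ((cx, cy), (w, h), angle_in_degrees), the rotated-rectangle tuple form that the sample passes to NMSBoxesRotated.

```python
import math

def decode_east_outputs(scores, geometry, score_thresh):
    """Turn EAST score/geometry maps (indexed [0][c][y][x]) into rotated boxes."""
    detections, confidences = [], []
    height, width = len(scores[0][0]), len(scores[0][0][0])
    for y in range(height):
        for x in range(width):
            score = scores[0][0][y][x]
            if score < score_thresh:
                continue  # skip low-confidence cells
            # Distances from this cell to the box edges, and the angle in radians.
            d_top, d_right = geometry[0][0][y][x], geometry[0][1][y][x]
            d_bottom, d_left = geometry[0][2][y][x], geometry[0][3][y][x]
            angle = geometry[0][4][y][x]
            cos_a, sin_a = math.cos(angle), math.sin(angle)
            h, w = d_top + d_bottom, d_right + d_left
            # Each output cell corresponds to a 4x4 patch of the input image.
            offset_x, offset_y = x * 4.0, y * 4.0
            # Bottom-right corner of the rotated box.
            br_x = offset_x + cos_a * d_right + sin_a * d_bottom
            br_y = offset_y - sin_a * d_right + cos_a * d_bottom
            # Top-right and bottom-left corners; their midpoint is the box center.
            tr = (br_x - sin_a * h, br_y - cos_a * h)
            bl = (br_x - cos_a * w, br_y + sin_a * w)
            center = (0.5 * (tr[0] + bl[0]), 0.5 * (tr[1] + bl[1]))
            detections.append((center, (w, h), -angle * 180.0 / math.pi))
            confidences.append(float(score))
    return detections, confidences
```

For example, take a single cell at the origin with distances (top, right, bottom, left) = (2, 3, 4, 5) and angle 0. The box spans x from -5 to 3 and y from -2 to 4, so the sketch returns center (-1, 1) and size (8, 6).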

Non-Maximum Suppression

We use the OpenCV function NMSBoxes (C++, which has an overload for RotatedRect boxes) or NMSBoxesRotated (Python) to suppress overlapping, redundant detections and get the final predictions.

C++

std::vector<int> indices;

NMSBoxes(boxes, confidences, confThreshold, nmsThreshold, indices);

Python

indices = cv.dnn.NMSBoxesRotated(boxes, confidences, confThreshold, nmsThreshold)
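The idea behind Non-Maximum Suppression is greedy. Candidates are visited from highest to lowest score, and a box is kept only if it does not overlap too much with a box that was already kept. The sketch below deliberately simplifies this by using axis-aligned (x, y, w, h) boxes and intersection-over-union. NMSBoxesRotated instead measures overlap between rotated rectangles. The function names are hypothetical, and this is not OpenCV's implementation.

```python
def iou(a, b):
    """Intersection-over-union of two axis-aligned (x, y, w, h) boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    ix = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    iy = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = ix * iy
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0

def nms_axis_aligned(boxes, scores, score_thresh, nms_thresh):
    """Return indices of kept boxes, highest score first (greedy NMS sketch)."""
    # Drop low-confidence candidates, then sort the rest by descending score.
    order = sorted((i for i, s in enumerate(scores) if s >= score_thresh),
                   key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[k]) <= nms_thresh for k in keep):
            keep.append(i)
    return keep
```

With boxes (0, 0, 10, 10) and (1, 1, 10, 10), the IoU is 81/119 ≈ 0.68. At an NMS threshold of 0.4, only the higher-scoring of the two survives, while a distant third box is kept.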

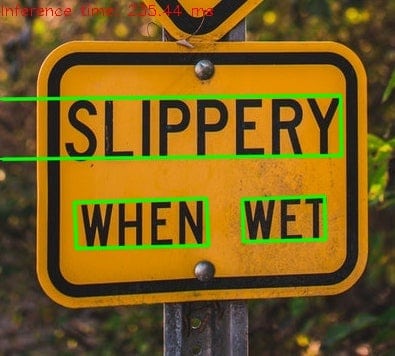
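The image was resized to inpWidth × inpHeight before being fed to the network, so the decoded boxes are in blob coordinates rather than original-image coordinates. To draw them on the original image, the OpenCV sample takes the four corners of each rotated box with cv.boxPoints and multiplies the x and y coordinates by the ratios between the original and input sizes. The pure-Python sketch below does the same. `box_corners_in_original` is a hypothetical name. The corner order is intended to follow OpenCV's RotatedRect::points() convention, but compare against cv.boxPoints if the order matters to you.

```python
import math

def box_corners_in_original(rbox, inp_size, orig_size):
    """Corners of a ((cx, cy), (w, h), angle_deg) box, rescaled to the original image."""
    (cx, cy), (w, h), angle_deg = rbox
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    # Ratios between the original image size and the network input size.
    r_w = orig_size[0] / inp_size[0]
    r_h = orig_size[1] / inp_size[1]
    corners = []
    # Half-extent offsets in the box's own frame.
    for dx, dy in ((-w / 2, h / 2), (-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2)):
        # Rotate the offset, translate it to the center, then rescale.
        x = cx + dx * cos_t - dy * sin_t
        y = cy + dx * sin_t + dy * cos_t
        corners.append((x * r_w, y * r_h))
    return corners
```

Consider a 640×480 image processed at 320×320. An unrotated 40×20 box centered at (160, 160) in the blob maps to corners spanning x from 280 to 360 and y from 225 to 255 in the original image.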

Results

Given below are a few results.

As you can see, it can detect text with varying backgrounds, fonts, orientations, sizes, and colors. The last example worked well even for deformed text. There are some misdetections, but overall it performs very well.

As the examples suggest, it can be used in a wide variety of applications such as number plate detection, traffic sign detection, and detection of text on ID cards.

References

- EAST: An Efficient and Accurate Scene Text Detector

- TensorFlow Implementation

- OpenCV Samples [C++], [Python]
