Model Optimization

Unsloth has emerged as a game-changer in the world of large language model (LLM) fine-tuning, addressing what has long been a resource-intensive and technically complex challenge. Adapting models like LLaMA,

In the previous posts of the TFLite series, we introduced TFLite and the process of creating a model. In this post, we will take a deeper dive into the TensorFlow

In this article, we will learn how to create a TensorFlow Lite model using the TF Lite Model Maker Library. We will fine-tune a pre-trained image classification model on the

The recent trend of developing ever-larger Deep Learning models for a slight increase in accuracy raises concerns about their computational efficiency and wide-scale usability. We cannot

You can scarcely find a good article on deploying computer vision systems in industrial scenarios. So, we decided to write a blog post series on the topic. The topics we

In this post, we will learn how to select the right model using Modelplace.AI. Selecting the right model will make your application faster, help you scale it to millions of

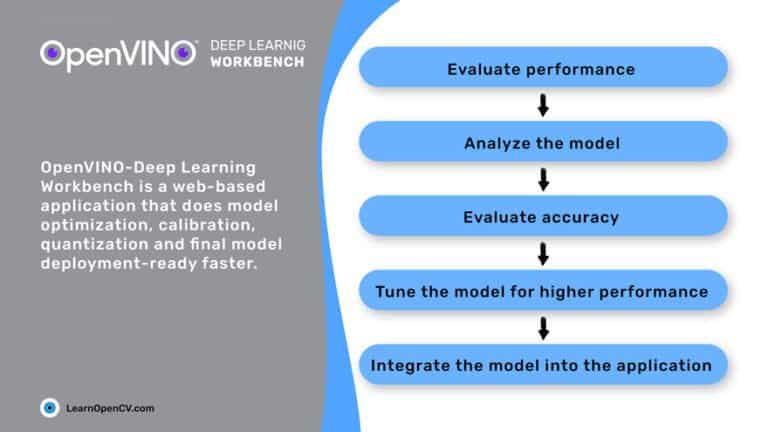

The Intel OpenVINO Toolkit provides many useful features for Deep Learning model optimization, inference, and deployment. Perhaps the most interesting and practical tool among them is the Deep Learning (DL) Workbench. Not only

Traditionally, Deep Learning models are trained on high-end GPUs. But for inference, Intel CPUs and edge devices like NVIDIA’s Jetson and Intel Movidius VPUs are preferred. Most of these Intel CPUs come

In computer vision applications, Deep Learning models that run inference on video streams are mostly used for object detection, image segmentation, and image classification. In many cases, we fail to get