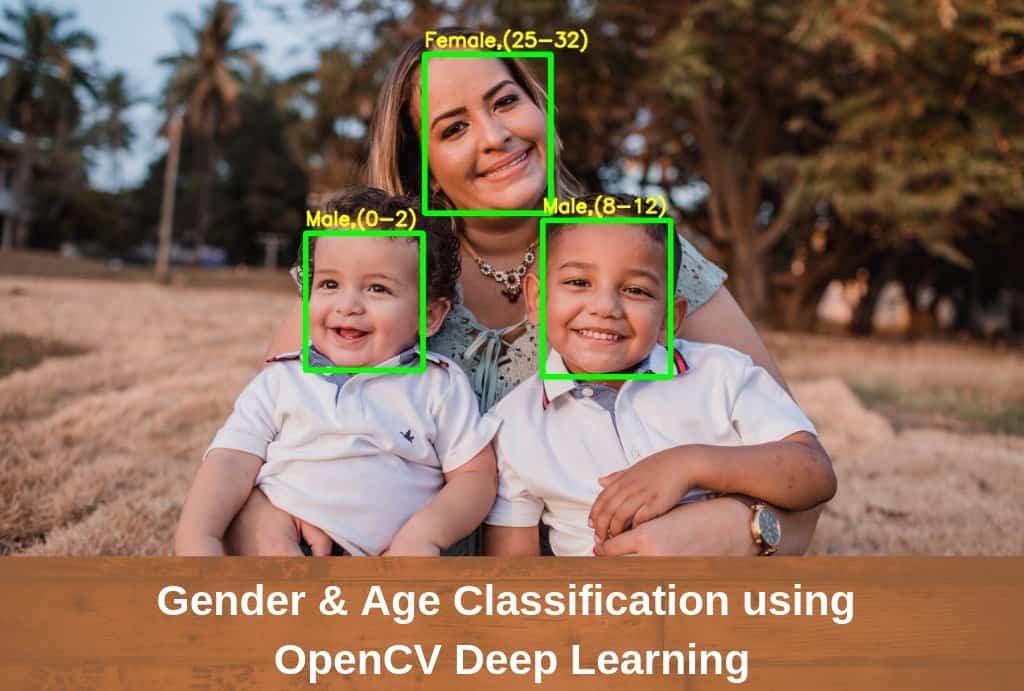

In this tutorial, we discuss an interesting application of deep learning to faces: estimating a person's age and gender from a single image. The models were trained by Gil Levi and Tal Hassner. We briefly cover the main ideas from their paper and then give step-by-step instructions for using the models in OpenCV.

1. Gender and Age Classification using CNNs

The authors use a very simple convolutional neural network architecture, similar to CaffeNet and AlexNet. The network has 3 convolutional layers, 2 fully connected layers and a final output layer. The details of the layers are given below.

- Conv1 : The first convolutional layer has 96 filters of kernel size 7.

- Conv2 : The second convolutional layer has 256 filters of kernel size 5.

- Conv3 : The third convolutional layer has 384 filters of kernel size 3.

- The two fully connected layers have 512 nodes each.
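To get a feel for the size of these layers, the sketch below counts the convolution weights implied by the list above. It assumes a 3-channel (RGB) input, square kernels, no grouped convolutions, and ignores biases; the helper name `conv_weight_counts` is made up for this illustration.

```python
# (name, number of filters, kernel size) as listed above
CONV_LAYERS = [("Conv1", 96, 7), ("Conv2", 256, 5), ("Conv3", 384, 3)]


def conv_weight_counts(layers, in_channels=3):
    """Count weights per conv layer (biases excluded).

    Assumes an RGB input and that each layer sees one input channel
    per filter of the previous layer (no grouped convolutions).
    """
    counts = {}
    for name, filters, kernel in layers:
        counts[name] = filters * in_channels * kernel * kernel
        in_channels = filters  # the next layer consumes these feature maps
    return counts


weights = conv_weight_counts(CONV_LAYERS)
for name, count in weights.items():
    print(name, count)
```

Even under these simplifying assumptions, the three convolutional layers together hold roughly 1.5 million weights, which is small by modern standards.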

The authors trained the models on the Adience dataset.

1.1. Gender Prediction

The authors frame gender prediction as a classification problem. The output layer of the gender prediction network is a softmax with 2 nodes, one for each of the two classes “Male” and “Female”.
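As a rough illustration of what a 2-node softmax output means, the sketch below turns two raw scores into probabilities and picks the more likely class. The scores are invented for the example, and the class order follows the `genderList` used later in this post.

```python
import math

GENDER_LIST = ["Male", "Female"]


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


scores = [2.0, 0.5]  # hypothetical raw network outputs
probs = softmax(scores)
gender = GENDER_LIST[probs.index(max(probs))]
print(gender, probs)
```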

1.2. Age Prediction

Ideally, age prediction would be approached as a regression problem, since we expect a real number as the output. However, estimating age accurately with regression is challenging. Even humans cannot accurately predict a person's age just by looking at them, although we usually have an idea of whether they are in their 20s or in their 30s. For this reason, it is wise to frame the problem as classification, where we estimate the age group the person belongs to. For example, ages 0-2 form one class, ages 4-6 another, and so on.

The Adience dataset has 8 classes divided into the following age groups [(0 – 2), (4 – 6), (8 – 12), (15 – 20), (25 – 32), (38 – 43), (48 – 53), (60 – 100)]. Thus, the age prediction network has 8 nodes in the final softmax layer indicating the mentioned age ranges.
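The sketch below shows one way to map a real age onto these 8 classes. Note that the groups leave gaps (for example, ages 33 to 37 belong to no group); returning `None` for such ages is a choice made for this illustration, and `age_to_class` is a hypothetical helper, not part of the original code.

```python
# Age groups exactly as listed above (inclusive bounds)
AGE_GROUPS = [(0, 2), (4, 6), (8, 12), (15, 20),
              (25, 32), (38, 43), (48, 53), (60, 100)]


def age_to_class(age):
    """Return the index of the group containing `age`, or None for a gap."""
    for index, (low, high) in enumerate(AGE_GROUPS):
        if low <= age <= high:
            return index
    return None


print(age_to_class(30))  # index of the (25, 32) group
print(age_to_class(35))  # falls in a gap between two groups
```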

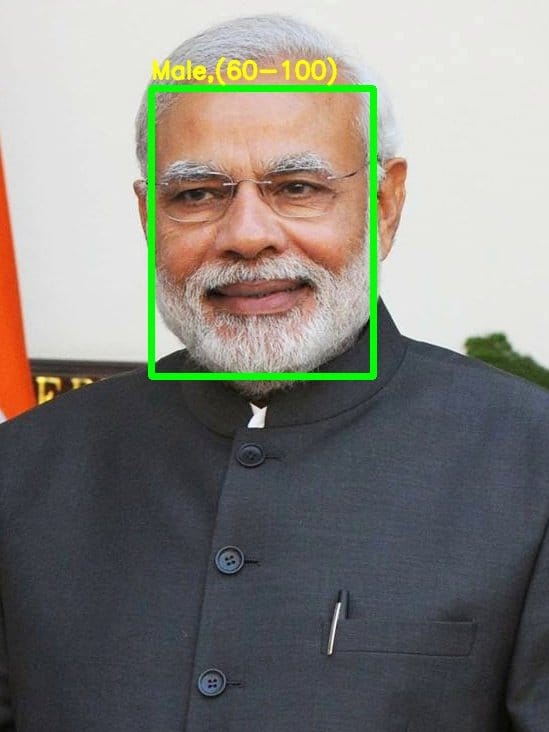

Keep in mind that age prediction from a single image is not an easy problem: the perceived age depends on many factors, and people of the same age may look quite different in various parts of the world. Also, people try very hard to hide their real age!

For example, can you guess the age of these two eminent personalities?

Narendra Modi, PM of India

Ajit Doval, NSA of India

I can bet you’ll google it when I reveal their real age!

Narendra Modi is 68 and Ajit Doval is 74 years old! Just imagine how hard it would be for a machine to correctly predict their age.

2. Code Tutorial

The code can be divided into four parts:

- Detect Faces

- Detect Gender

- Detect Age

- Display output

NOTE: Please download the model weights files (Gender, Age), which are not provided along with the code. Keep them in the same folder as the other code files given with this post.

After you have downloaded the code, you can run it using the sample image provided or using the webcam.

C++ Usage

    # Using sample image
    ./AgeGender sample1.jpg

Python Usage

    # Using sample image
    python AgeGender.py --input sample1.jpg

Let us have a look at the code for gender and age prediction using the DNN module in OpenCV. Please download the code as the code snippets given below are only for the important parts of the code.

2.1. Detect Face

We will use the DNN Face Detector for face detection. The model is only 2.7 MB and is pretty fast even on the CPU. More details about the face detector can be found in our blog on Face Detection. Face detection is done by the function getFaceBox shown below.

C++

    // Detect faces in frame. Returns an annotated copy of the frame and
    // one {x1, y1, x2, y2} box per detection above conf_threshold.
    tuple<Mat, vector<vector<int>>> getFaceBox(Net net, Mat &frame, double conf_threshold)
    {
        Mat frameOpenCVDNN = frame.clone();
        int frameHeight = frameOpenCVDNN.rows;
        int frameWidth = frameOpenCVDNN.cols;
        double inScaleFactor = 1.0;
        Size size = Size(300, 300);
        Scalar meanVal = Scalar(104, 117, 123);

        // Resize to 300x300 and subtract the per-channel mean.
        cv::Mat inputBlob;
        cv::dnn::blobFromImage(frameOpenCVDNN, inputBlob, inScaleFactor, size, meanVal, true, false);

        net.setInput(inputBlob, "data");
        cv::Mat detection = net.forward("detection_out");

        // View the 4D output as a 2D matrix with one row per detection.
        cv::Mat detectionMat(detection.size[2], detection.size[3], CV_32F, detection.ptr<float>());

        vector<vector<int>> bboxes;
        for(int i = 0; i < detectionMat.rows; i++)
        {
            float confidence = detectionMat.at<float>(i, 2);
            if(confidence > conf_threshold)
            {
                // Box coordinates are normalized to [0, 1]; scale them to pixels.
                int x1 = static_cast<int>(detectionMat.at<float>(i, 3) * frameWidth);
                int y1 = static_cast<int>(detectionMat.at<float>(i, 4) * frameHeight);
                int x2 = static_cast<int>(detectionMat.at<float>(i, 5) * frameWidth);
                int y2 = static_cast<int>(detectionMat.at<float>(i, 6) * frameHeight);
                vector<int> box = {x1, y1, x2, y2};
                bboxes.push_back(box);
                cv::rectangle(frameOpenCVDNN, cv::Point(x1, y1), cv::Point(x2, y2), cv::Scalar(0, 255, 0), 2, 4);
            }
        }
        return make_tuple(frameOpenCVDNN, bboxes);
    }

Python

    import cv2 as cv

    def getFaceBox(net, frame, conf_threshold=0.7):
        frameOpencvDnn = frame.copy()
        frameHeight = frameOpencvDnn.shape[0]
        frameWidth = frameOpencvDnn.shape[1]

        # Resize to 300x300 and subtract the per-channel mean.
        blob = cv.dnn.blobFromImage(frameOpencvDnn, 1.0, (300, 300), [104, 117, 123], True, False)
        net.setInput(blob)
        detections = net.forward()

        bboxes = []
        for i in range(detections.shape[2]):
            confidence = detections[0, 0, i, 2]
            if confidence > conf_threshold:
                # Box coordinates are normalized to [0, 1]; scale them to pixels.
                x1 = int(detections[0, 0, i, 3] * frameWidth)
                y1 = int(detections[0, 0, i, 4] * frameHeight)
                x2 = int(detections[0, 0, i, 5] * frameWidth)
                y2 = int(detections[0, 0, i, 6] * frameHeight)
                bboxes.append([x1, y1, x2, y2])
                cv.rectangle(frameOpencvDnn, (x1, y1), (x2, y2), (0, 255, 0), int(round(frameHeight/150)), 8)
        return frameOpencvDnn, bboxes
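The core of getFaceBox, filtering detections by confidence and scaling normalized coordinates to pixels, can be tried without OpenCV. The sketch below uses plain lists in place of the network output. It assumes each row is laid out as [image id, class id, confidence, x1, y1, x2, y2], which matches the indices 2 to 6 used above; the sample rows and the helper name `boxes_from_detections` are invented for illustration.

```python
def boxes_from_detections(rows, frame_width, frame_height, conf_threshold=0.7):
    """Keep confident detections and convert them to pixel boxes."""
    boxes = []
    for _image_id, _class_id, confidence, x1, y1, x2, y2 in rows:
        if confidence > conf_threshold:
            boxes.append([int(x1 * frame_width), int(y1 * frame_height),
                          int(x2 * frame_width), int(y2 * frame_height)])
    return boxes


# Two made-up detections: one confident, one below the threshold
rows = [
    [0, 1, 0.95, 0.25, 0.5, 0.75, 0.875],
    [0, 1, 0.30, 0.0, 0.0, 0.5, 0.5],
]
boxes = boxes_from_detections(rows, frame_width=640, frame_height=480)
print(boxes)
```

Only the first row survives the 0.7 threshold, and its box is expressed in pixels of the 640x480 frame.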

2.2. Predict Gender

We will load the gender network into memory and pass the detected face through the network. The forward pass gives the probabilities or confidence of the two classes. We take the max of the two outputs and use it as the final gender prediction.

C++

    string genderProto = "gender_deploy.prototxt";
    string genderModel = "gender_net.caffemodel";
    Net genderNet = readNet(genderModel, genderProto);

    vector<string> genderList = {"Male", "Female"};

    // face and MODEL_MEAN_VALUES come from the rest of the downloadable code
    blob = blobFromImage(face, 1, Size(227, 227), MODEL_MEAN_VALUES, false);
    genderNet.setInput(blob);
    vector<float> genderPreds = genderNet.forward();

    // Find the index of the largest output; std::distance with
    // max_element does the work of argmax() in C++.
    int max_index_gender = std::distance(genderPreds.begin(), max_element(genderPreds.begin(), genderPreds.end()));
    string gender = genderList[max_index_gender];

Python

    genderProto = "gender_deploy.prototxt"
    genderModel = "gender_net.caffemodel"
    genderNet = cv.dnn.readNet(genderModel, genderProto)

    genderList = ['Male', 'Female']

    # face and MODEL_MEAN_VALUES come from the rest of the downloadable code
    blob = cv.dnn.blobFromImage(face, 1, (227, 227), MODEL_MEAN_VALUES, swapRB=False)
    genderNet.setInput(blob)
    genderPreds = genderNet.forward()

    # The class with the highest output is the prediction
    gender = genderList[genderPreds[0].argmax()]
    print("Gender Output : {}".format(genderPreds))
    print("Gender : {}".format(gender))
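To make the preprocessing step less of a black box, here is a simplified pure-Python sketch of what blobFromImage does to the face crop before it reaches the network: it subtracts a per-channel mean, applies the scale factor, and rearranges the pixels from height x width x channels into a batch of channel planes. Resizing to 227x227 is left out, and the mean values and the tiny 1x2 image are placeholders invented for the example, not the real MODEL_MEAN_VALUES.

```python
def to_blob(image_hwc, mean, scale=1.0):
    """Mean-subtract, scale, and reorder an H x W x C image to 1 x C x H x W."""
    height = len(image_hwc)
    width = len(image_hwc[0])
    channels = len(mean)
    planes = [
        [[scale * (image_hwc[y][x][c] - mean[c]) for x in range(width)]
         for y in range(height)]
        for c in range(channels)
    ]
    return [planes]  # leading batch dimension of size 1


# A made-up image with 1 row, 2 pixels, 3 channels each
image = [[[12, 25, 40], [10, 20, 30]]]
blob = to_blob(image, mean=(10.0, 20.0, 30.0))  # placeholder mean values
print(blob)
```

The second pixel equals the mean exactly, so it becomes zero in every channel plane.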

2.3. Predict Age

We load the age network and use the forward pass to get the output. Since the network architecture is similar to the Gender Network, we can take the max out of all the outputs to get the predicted age group.

C++

    string ageProto = "age_deploy.prototxt";
    string ageModel = "age_net.caffemodel";
    Net ageNet = readNet(ageModel, ageProto);

    vector<string> ageList = {"(0-2)", "(4-6)", "(8-12)", "(15-20)", "(25-32)", "(38-43)", "(48-53)", "(60-100)"};

    ageNet.setInput(blob);
    vector<float> agePreds = ageNet.forward();
    int max_indice_age = distance(agePreds.begin(), max_element(agePreds.begin(), agePreds.end()));
    string age = ageList[max_indice_age];

Python

    ageProto = "age_deploy.prototxt"
    ageModel = "age_net.caffemodel"
    ageNet = cv.dnn.readNet(ageModel, ageProto)

    ageList = ['(0 - 2)', '(4 - 6)', '(8 - 12)', '(15 - 20)', '(25 - 32)', '(38 - 43)', '(48 - 53)', '(60 - 100)']

    # Reuse the blob computed for the gender network
    ageNet.setInput(blob)
    agePreds = ageNet.forward()
    age = ageList[agePreds[0].argmax()]
    print("Age Output : {}".format(agePreds))
    print("Age : {}".format(age))

2.4. Display Output

We will display the output of the network on the input images and show them using the imshow function.

C++

    string label = gender + ", " + age; // label
    cv::putText(frameFace, label, Point(it->at(0), it->at(1) - 20), cv::FONT_HERSHEY_SIMPLEX, 0.9, Scalar(0, 255, 255), 2, cv::LINE_AA);
    imshow("Frame", frameFace);

Python

    label = "{}, {}".format(gender, age)
    cv.putText(frameFace, label, (bbox[0], bbox[1]-20), cv.FONT_HERSHEY_SIMPLEX, 0.8, (255, 0, 0), 3, cv.LINE_AA)
    cv.imshow("Age Gender Demo", frameFace)

3. Results

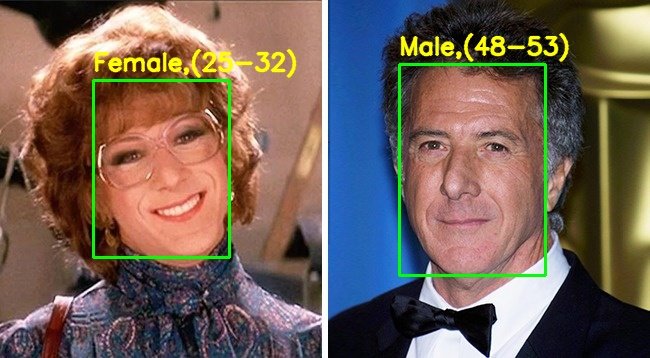

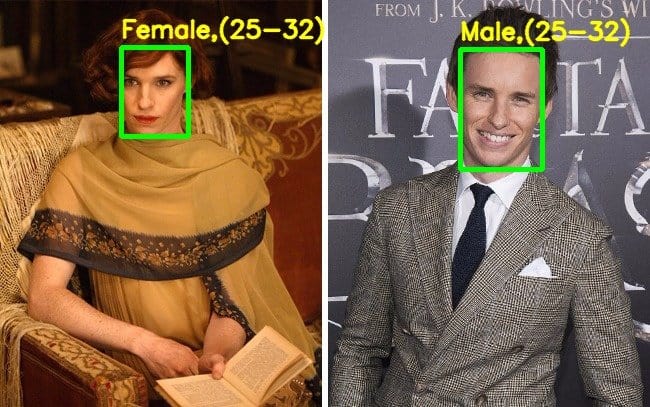

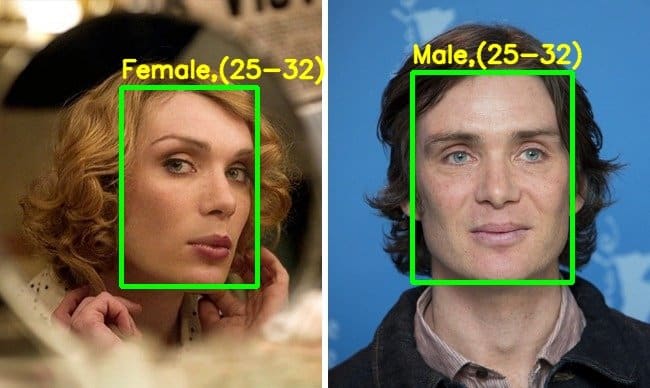

We saw above that, on these sample images, the network is able to predict both gender and age to a high level of accuracy. Next, we wanted to do something interesting with this model. Many actors have portrayed the role of the opposite gender in movies.

We want to check what AI says about their looks in these roles and whether they are able to fool the AI.

We used images from this article which shows their actual photographs along with those from the movies in which they changed their gender. Let’s have a look.

Angelina Jolie in Salt

Dustin Hoffman in Tootsie

Eddie Redmayne in The Danish Girl

Elle Fanning in 3 Generations

Amanda Bynes in She's the Man

John Travolta in Hairspray

Cillian Murphy in Breakfast on Pluto

It seems that, apart from Elle Fanning, everyone was able to fool the AI with their looks as the opposite gender. Also, it is very difficult to predict the age of celebrities just from images.

Finally, let’s see what our model predicts for the two examples we took at the beginning of the post.

4. Observations

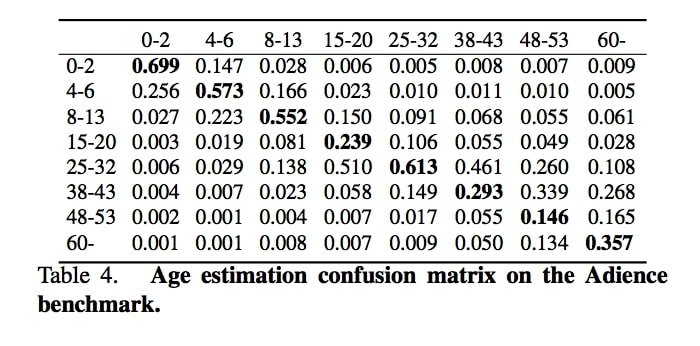

Even though the gender prediction network performed well, the age prediction network fell short of our expectations. We tried to find the answer in the paper and found the following confusion matrix for the age prediction model.

The following observations can be made from the above table:

- The age groups 0-2, 4-6, 8-13 and 25-32 are predicted with relatively high accuracy (see the diagonal elements).

- The output is heavily biased towards the age group 25-32 (see the row belonging to the age group 25-32). This means that the network easily confuses ages between 15 and 43. So, even if the actual age is in the 15-20 or 38-43 group, there is a high chance that the predicted age group will be 25-32. This is also evident from the Results section.
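For readers less familiar with confusion matrices, the sketch below shows how the two observations above can be read off such a table. The 3-class matrix is a toy example with invented counts, not the numbers from the paper, and it assumes that rows are true classes and columns are predicted classes (the paper's table may be oriented differently).

```python
def per_class_accuracy(confusion):
    """Diagonal element divided by the row total, for each true class."""
    accuracies = []
    for index, row in enumerate(confusion):
        total = sum(row)
        accuracies.append(row[index] / total if total else 0.0)
    return accuracies


def predicted_counts(confusion):
    """Column totals: how often each class is predicted overall."""
    return [sum(column) for column in zip(*confusion)]


labels = ["15-20", "25-32", "38-43"]
# Invented counts: rows are true classes, columns are predictions
toy_confusion = [
    [30, 60, 10],
    [5, 90, 5],
    [5, 55, 40],
]
accuracy = per_class_accuracy(toy_confusion)
counts = predicted_counts(toy_confusion)
for label, acc, count in zip(labels, accuracy, counts):
    print(label, acc, count)
```

In this toy table the middle class has both the highest diagonal accuracy and by far the largest share of predictions, which is the same kind of bias described above for the 25-32 group.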

Apart from this, we observed that the accuracy of the models improved when we added padding around the detected face. This may be because the training inputs were standard face images rather than the tightly cropped faces we get after face detection.
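A minimal sketch of such padding is shown below: the detected box is grown by a fixed margin on every side and clamped so it stays inside the frame. The 20-pixel default and the helper name `padded_box` are assumptions made for this illustration; the right margin depends on your images.

```python
def padded_box(bbox, frame_width, frame_height, padding=20):
    """Grow [x1, y1, x2, y2] by `padding` pixels, clamped to the frame."""
    x1, y1, x2, y2 = bbox
    return [
        max(0, x1 - padding),
        max(0, y1 - padding),
        min(frame_width - 1, x2 + padding),
        min(frame_height - 1, y2 + padding),
    ]


# A box close to the left and bottom edges of a 640x480 frame
x1, y1, x2, y2 = padded_box([10, 30, 100, 470], frame_width=640, frame_height=480)
print(x1, y1, x2, y2)
# With a NumPy image, the padded face crop would then be frame[y1:y2, x1:x2]
```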

We also analysed the use of face alignment before making predictions and found that the predictions improved for some examples but became worse for others. It may be a good idea to use alignment if you are mostly working with non-frontal faces.

5. Conclusion

Overall, I think the accuracy of the models is decent but can be improved further by using more data, data augmentation and better network architectures.

One can also try to use a regression model instead of classification for Age Prediction if enough data is available.
