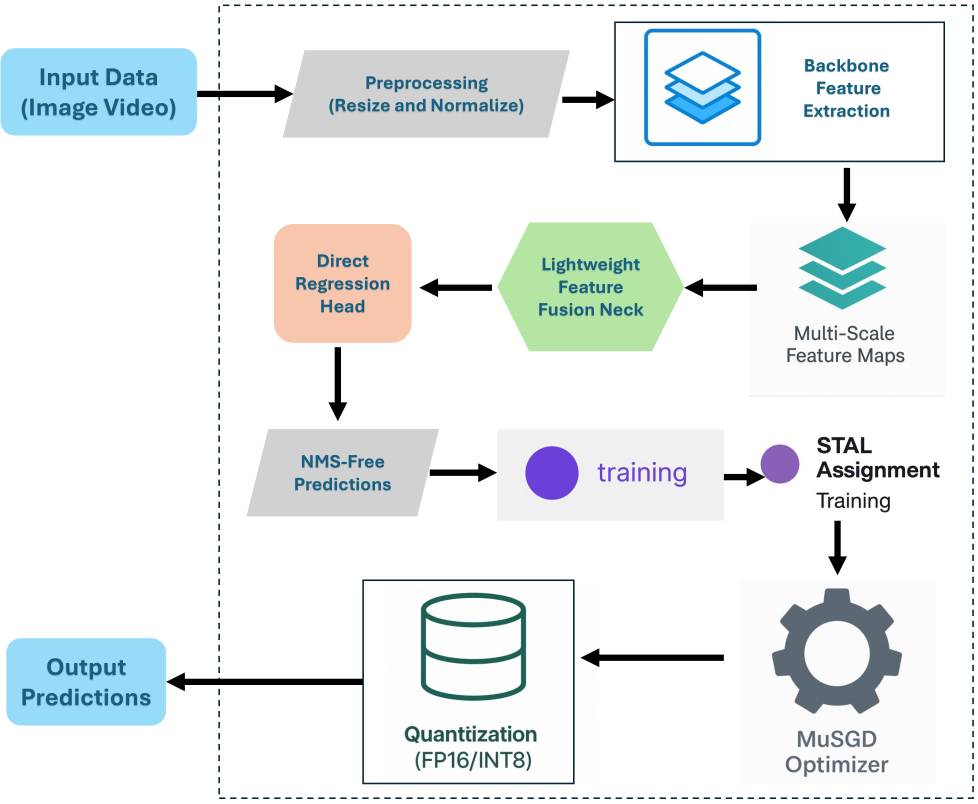

Every few months, the computer vision community prepares for a new YOLO release, typically faster, marginally lighter, and incrementally more accurate than the last. YOLOv26, an object detector released by Ultralytics in January 2026, breaks that pattern.

Rather than increasing architectural complexity, YOLOv26 adopts an edge-first engineering approach. The focus shifts to latency, export paths, and hardware-friendly design. The result is a detector specifically designed for applications in robotics, drones, mobile devices, and embedded systems.

This article examines YOLOv26 from an engineering and deployment perspective: its key architectural decisions, its performance characteristics, and why it may be the most practically useful YOLO release in years.

Design Philosophy of YOLOv26

Recent YOLO generations have achieved accuracy improvements by adding complexity, including transformer blocks, distribution-based regression heads, and increasingly expensive post-processing pipelines. While effective on high-end GPUs, these design choices often introduced friction during deployment, particularly when exporting to ONNX, TensorRT, or INT8 runtimes.

YOLOv26’s architecture is guided by three core principles:

- Simplicity – Remove components that complicate inference and deployment.

- Efficiency – Optimize for CPUs, NPUs, and low-power accelerators.

- Practical Innovation – Borrow ideas from large-scale LLM training only where they demonstrably improve stability or efficiency.

The result is a model that trades marginal theoretical gains for real-world reliability and predictability.

Core Architectural Innovation

End-to-End, NMS-Free Detection

Traditional YOLO models depend on Non-Maximum Suppression (NMS) to remove duplicate bounding boxes. While effective, NMS is:

- Sequential by nature

- Difficult to parallelize

- A frequent source of deployment complexity and latency jitter

YOLOv26 introduces a One-to-One detection head, enabling the model to directly predict a fixed set of object hypotheses. This eliminates the need for NMS entirely.

Why this matters:

- Inference latency becomes deterministic, even in crowded scenes.

- The detection pipeline becomes fully end-to-end, simplifying exports to ONNX, TensorRT, and edge runtimes.

For applications that still benefit from traditional behavior, Ultralytics offers a dual-head configuration, allowing users to switch back to a One-to-Many + NMS setup when required.
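
To make the contrast above concrete, here is a minimal pure-Python sketch of the two post-processing styles. It is an illustration only, not Ultralytics code: the `Box` tuple layout, the thresholds, and the function names `greedy_nms` and `one_to_one_filter` are assumptions chosen for readability, and real exported models emit tensors rather than Python lists.

```python
from typing import List, Tuple

# Illustrative layout (an assumption): (x1, y1, x2, y2, score)
Box = Tuple[float, float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: List[Box], iou_thr: float = 0.5) -> List[Box]:
    """Classic NMS: every kept box depends on the decisions made before it,
    so the loop is sequential and its cost grows with scene density."""
    remaining = sorted(boxes, key=lambda b: b[4], reverse=True)
    kept: List[Box] = []
    while remaining:
        best = remaining.pop(0)
        kept.append(best)
        remaining = [b for b in remaining if iou(best, b) < iou_thr]
    return kept


def one_to_one_filter(boxes: List[Box], conf_thr: float = 0.25) -> List[Box]:
    """NMS-free post-processing: an independent per-box score threshold.
    The work is proportional to the fixed number of predictions."""
    return [b for b in boxes if b[4] >= conf_thr]


# One-to-many style output: two overlapping boxes on the same object.
dense = [(10, 10, 50, 50, 0.90), (12, 11, 52, 49, 0.85), (100, 100, 140, 150, 0.70)]
# One-to-one style output: one confident box per object, the rest low-score.
sparse = [(10, 10, 50, 50, 0.90), (100, 100, 140, 150, 0.70), (0, 0, 5, 5, 0.03)]

nms_result = greedy_nms(dense)             # duplicate removed by suppression
e2e_result = one_to_one_filter(sparse)     # duplicate never produced
```

Both paths end with two boxes, but only the first needs pairwise IoU comparisons whose count depends on how crowded the scene is.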

Removal of Distribution Focal Loss (DFL)

Distribution Focal Loss (DFL), introduced in earlier YOLO versions, improved bounding box precision but came with notable downsides:

- Increased computational overhead

- Poor compatibility with INT8 quantization

- Instability on low-power hardware

YOLOv26 removes DFL entirely, replacing it with a simplified regression head. In practice, this leads to no meaningful accuracy degradation, while significantly improving:

- Quantization robustness (FP16 / INT8)

- Inference efficiency on edge devices

This change alone makes YOLOv26 far easier to deploy on platforms such as Jetson Orin, Raspberry Pi, and mobile NPUs.
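
As a rough illustration of what is being removed, the sketch below contrasts DFL-style decoding with direct regression. In DFL-based heads, each box side is predicted as a distribution over discrete bins and decoded as the softmax-weighted expected bin index. The bin count of 16 and the names `dfl_decode` and `direct_decode` are assumptions for this example, and the direct path is a simplification, not the actual YOLOv26 head.

```python
import math
from typing import List


def dfl_decode(logits: List[float]) -> float:
    """DFL-style decoding: softmax over discrete bins, then the expected
    bin index. The result is a distance in grid-cell units."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]  # subtract max for stability
    total = sum(exps)
    return sum(i * e / total for i, e in enumerate(exps))


def direct_decode(value: float) -> float:
    """Simplified regression: the head emits the distance itself,
    so there is no softmax and no weighted sum at inference time."""
    return value


REG_MAX = 16  # assumed number of bins per box side
logits = [0.0] * REG_MAX
logits[4] = 10.0
logits[5] = 10.0  # two equally likely neighbouring bins

dfl_distance = dfl_decode(logits)      # close to 4.5
direct_distance = direct_decode(4.5)   # the same distance, no decoding step
```

The DFL path needs `REG_MAX` exponentials per box side, and the exponentials are the kind of operation that tends to lose precision under low-bit quantization.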

MuSGD: LLM-Inspired Optimization

One of the most novel contributions in YOLOv26 is its optimizer: MuSGD.

MuSGD combines:

- The stability and reliability of classic SGD

- The fast convergence properties of Muon, an optimizer originally designed for large language model training

By adapting Muon-style optimization to vision models, YOLOv26 achieves:

- Faster convergence

- More stable training dynamics

- Reduced sensitivity to hyperparameters

For practitioners, this translates into shorter training cycles and less tuning overhead, particularly on large or diverse datasets.
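
The text above does not spell out how MuSGD blends the two update rules, so the following is only a sketch of the general idea. Muon's distinguishing step is to orthogonalize the momentum matrix with a Newton-Schulz iteration before applying it. The cubic iteration used here is the textbook variant, and the blend in `musgd_like_step`, including the `muon_weight` parameter, is entirely hypothetical rather than the Ultralytics implementation.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def orthogonalize(g: Matrix, steps: int = 10) -> Matrix:
    """Approximate the nearest orthogonal matrix to g using the cubic
    Newton-Schulz iteration X <- 1.5*X - 0.5*X*X^T*X."""
    norm = sum(v * v for row in g for v in row) ** 0.5
    if norm == 0.0:
        return [row[:] for row in g]
    x = [[v / norm for v in row] for row in g]  # singular values now <= 1
    for _ in range(steps):
        xxtx = matmul(matmul(x, transpose(x)), x)
        x = [[1.5 * a - 0.5 * b for a, b in zip(rx, ry)] for rx, ry in zip(x, xxtx)]
    return x


def musgd_like_step(weights: Matrix, momentum: Matrix, grad: Matrix,
                    lr: float = 0.01, beta: float = 0.9,
                    muon_weight: float = 0.5) -> Tuple[Matrix, Matrix]:
    """Hypothetical blend of an SGD-momentum direction with its
    orthogonalized (Muon-style) counterpart."""
    momentum = [[beta * m + g for m, g in zip(mr, gr)]
                for mr, gr in zip(momentum, grad)]
    ortho = orthogonalize(momentum)
    new_weights = [
        [w - lr * ((1 - muon_weight) * m + muon_weight * o)
         for w, m, o in zip(wr, mr, orow)]
        for wr, mr, orow in zip(weights, momentum, ortho)
    ]
    return new_weights, momentum


g = [[2.0, 1.0], [0.0, 0.5]]
q = orthogonalize(g)
should_be_identity = matmul(q, transpose(q))

w0 = [[1.0, 0.0], [0.0, 1.0]]
m0 = [[0.0, 0.0], [0.0, 0.0]]
w1, m1 = musgd_like_step(w0, m0, g)
```

Orthogonalizing the update equalizes its singular values, so no single direction dominates the step, which is one plausible reading of the claimed stability and reduced hyperparameter sensitivity.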

Small Target Awareness: STAL and ProgLoss

Lightweight detectors often struggle with small or distant objects. YOLOv26 explicitly addresses this with two complementary techniques:

- ProgLoss (Progressive Loss Balancing): Dynamically reweights loss components during training to avoid overfitting to large, easy objects.

- STAL (Small-Target-Aware Label Assignment): Biases label assignment toward small and partially occluded targets.

Together, these mechanisms improve recall for small objects without increasing model size or computational cost.
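
The exact ProgLoss schedule is not given here, so the sketch below shows only the general shape of progressive loss reweighting: per-component weights that move from a starting value to a final value as training advances. The linear interpolation, the component names `box` and `cls`, and all numeric weights are assumptions made for illustration.

```python
from typing import Dict


def progressive_weights(epoch: int, total_epochs: int,
                        start: Dict[str, float],
                        end: Dict[str, float]) -> Dict[str, float]:
    """Linearly interpolate each loss weight from `start` to `end`."""
    t = epoch / max(total_epochs - 1, 1)  # 0.0 at the first epoch, 1.0 at the last
    return {k: (1 - t) * start[k] + t * end[k] for k in start}


def total_loss(components: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of the individual loss terms."""
    return sum(weights[k] * components[k] for k in components)


# Hypothetical weights: shift emphasis toward localization late in training.
start = {"box": 5.0, "cls": 1.0}
end = {"box": 7.5, "cls": 0.5}

halfway = progressive_weights(50, 101, start, end)
loss_at_halfway = total_loss({"box": 0.2, "cls": 0.8}, halfway)
```

Because the reweighting happens only in the training loop, it adds nothing to the exported model, which is consistent with the claim of no extra inference cost.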

YOLOE-26: Open-Vocabulary Instance Segmentation

Alongside standard YOLOv26 models, Ultralytics introduces YOLOE-26, an open-vocabulary variant.

YOLOE-26 supports text-prompted and visual-prompted instance segmentation, enabling detection of objects not explicitly defined during training:

model.set_classes(["hard hat", "safety vest"])

This capability is particularly valuable for:

- Industrial safety and compliance systems

- Rapidly changing environments

- Applications where class definitions evolve frequently

YOLOE-26 brings vision-language flexibility to a real-time, edge-friendly detector, a combination previously achievable only with much heavier models.

Performance Benchmarks

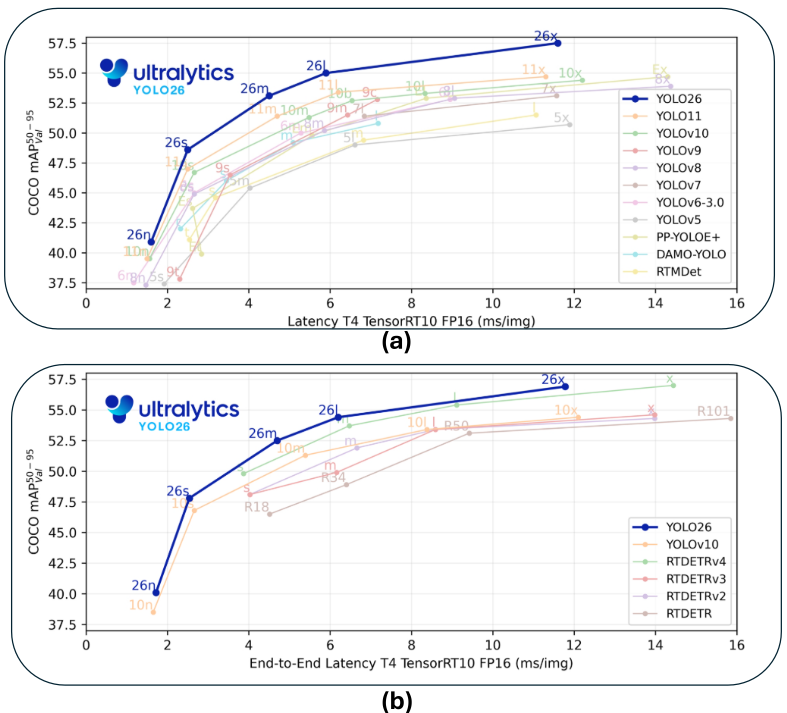

YOLOv26 delivers its most significant gains where they matter most: CPU and edge inference. The image above shows the mAP achieved by YOLOv26 compared with models such as YOLOv10 and RT-DETR.

COCO Benchmark (640px resolution)

| Model | Variant | mAP (50–95) | CPU latency (ONNX) | GPU latency (T4 TensorRT) |
|---|---|---|---|---|
| YOLOv26-n | Nano | 40.9 | ~39 ms | 1.7 ms |
| YOLOv26-m | Medium | 53.1 | ~220 ms | 4.7 ms |

Key observations:

- Up to 43% faster on CPUs compared to YOLOv11/12

- Competitive accuracy relative to heavier transformer-based detectors

- Sub-2 ms latency for the Nano model on a T4 GPU with TensorRT
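
To relate the latencies in the table to frame rates, a short conversion helps. The figures are the per-image latencies quoted above, treated as single-stream, batch-size-one numbers (an assumption); the helper name `fps` is ours.

```python
def fps(latency_ms: float) -> float:
    """Frames per second for a single stream at the given per-image latency."""
    return 1000.0 / latency_ms


# Per-image latencies from the benchmark table, in milliseconds.
latencies_ms = {
    "YOLOv26-n CPU": 39.0,
    "YOLOv26-m CPU": 220.0,
    "YOLOv26-n T4": 1.7,
    "YOLOv26-m T4": 4.7,
}

throughput = {name: round(fps(ms), 1) for name, ms in latencies_ms.items()}
# {'YOLOv26-n CPU': 25.6, 'YOLOv26-m CPU': 4.5,
#  'YOLOv26-n T4': 588.2, 'YOLOv26-m T4': 212.8}
```

On a CPU, the Nano model is therefore in real-time territory at roughly 25 frames per second, while the Medium model is better suited to a GPU or to offline processing.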

Using YOLOv26 in Practice

Installation

pip install ultralytics

Inference

from ultralytics import YOLO

model = YOLO("yolo26n.pt")

results = model.predict("image.jpg")

results[0].show()

Export

model.export(format="onnx")

The absence of NMS and DFL significantly simplifies deployment pipelines compared to earlier YOLO versions.
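
Claims about latency and jitter are worth verifying on your own hardware. The following is a minimal timing harness using only the standard library. The function `measure_latency` and the stand-in workload `dummy_inference` are hypothetical names for this sketch; to time a real model you would pass a callable that wraps your inference call.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency(fn: Callable[[], object], warmup: int = 5,
                    runs: int = 50) -> Dict[str, float]:
    """Time repeated calls to `fn` and summarize latency in milliseconds."""
    for _ in range(warmup):  # discard cold-start effects
        fn()
    samples = []
    for _ in range(runs):
        t0 = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - t0) * 1000.0)
    samples.sort()
    return {
        "mean_ms": statistics.fmean(samples),
        "p50_ms": samples[len(samples) // 2],
        "p99_ms": samples[min(len(samples) - 1, int(0.99 * len(samples)))],
        "stdev_ms": statistics.pstdev(samples),  # a simple jitter indicator
    }


def dummy_inference() -> int:
    """Stand-in workload so the harness runs without a model."""
    return sum(i * i for i in range(10_000))


stats = measure_latency(dummy_inference)
print(stats)
```

With the model loaded as in the inference snippet above, `measure_latency(lambda: model.predict("image.jpg", verbose=False))` would time end-to-end prediction. Comparing `p99_ms` against `p50_ms` on sparse and crowded images is a direct way to see how much latency varies with scene content.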

Real-World Applications

YOLOv26 is particularly well-suited for:

- Robotics: Deterministic latency enables smoother control loops

- Manufacturing: Improved small-object detection for defect inspection

- Drones: Lower compute and power requirements extend flight time

- Mobile & Embedded Vision: Clean INT8/FP16 deployment without custom post-processing

YOLOv26 vs Previous Generations

YOLOv26 vs YOLOv8 / YOLOv11

Earlier YOLO versions, such as YOLOv8 and YOLOv11, prioritized accuracy improvements through architectural additions like Distribution Focal Loss, more complex heads, and heavier post-processing pipelines.

YOLOv26 takes a different path:

| Aspect | YOLOv8 / YOLOv11 | YOLOv26 |
|---|---|---|
| NMS Dependency | Required | Removed (One-to-One Head) |
| Bounding Box Regression | DFL-based | Simplified regression |
| Quantization Robustness | Moderate | High (INT8/FP16 friendly) |
| Edge Deployment | Manual optimization often needed | Clean, end-to-end export |
| Latency Stability | Scene-dependent | Deterministic |

For GPU-centric training benchmarks, accuracy remains comparable. For deployment on CPUs, Jetsons, and embedded accelerators, YOLOv26 is substantially easier to operationalize.
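
For readers less familiar with why some layers quantize better than others, here is a minimal sketch of symmetric per-tensor INT8 quantization. It shows one general failure mode, a wide dynamic range that stretches the quantization step. Whether this is the specific mechanism behind DFL's quantization problems is not stated in the comparison above; the example values and function names are assumptions.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization to the integer range [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    return [v * scale for v in q]


def max_error(values: List[float]) -> float:
    """Largest absolute round-trip error after quantize + dequantize."""
    q, scale = quantize_int8(values)
    return max(abs(a - b) for a, b in zip(values, dequantize(q, scale)))


narrow = [0.10, -0.25, 0.40, 0.05]  # well-behaved activations
wide = narrow + [40.0]              # one outlier stretches the scale

narrow_error = max_error(narrow)  # tiny: the step size is 0.4 / 127
wide_error = max_error(wide)      # large: small values collapse toward 0
```

In the `wide` case the step size becomes 40 / 127, so values such as 0.10 round to zero. Heads whose outputs stay in a narrow, predictable range avoid this and lose far less accuracy when converted to INT8.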

YOLOv26 vs RT-DETR

RT-DETR and other transformer-based detectors excel in high-capacity GPU environments, but they introduce challenges for real-time and edge scenarios:

- Heavier memory footprint

- Higher latency on CPUs

- More complex export pipelines

YOLOv26 achieves competitive accuracy with a fraction of the computational and operational overhead, making it the more practical choice for latency-sensitive and power-constrained systems.

Deploying YOLOv26

YOLOv26 stands out because its research contributions translate cleanly into production benefits:

Research Choices → Deployment Impact

- NMS-free design → Fully end-to-end inference graphs

- Removal of DFL → Stable INT8 and FP16 quantization

- MuSGD optimizer → Faster, more stable training convergence

- STAL + ProgLoss → Improved small-object recall without added compute

In practice, this means:

- Fewer custom post-processing kernels

- More predictable latency profiles

- Faster bring-up on edge hardware

- Lower maintenance cost across deployment targets

YOLOv26 effectively closes the gap between academic design and real-world deployment constraints.

Final Thoughts

YOLOv26 represents a rare architectural reset. Instead of layering new components onto an already complex system, it removes friction, both computational and operational.

By eliminating NMS and DFL, embracing LLM-inspired optimization, and explicitly targeting edge hardware, Ultralytics delivers a detector that feels engineered for deployment rather than evaluation alone.

For real-time vision systems outside the data center, YOLOv26 is arguably the most practical YOLO release to date.

Frequently Asked Questions

For edge and CPU-bound deployments, YOLOv26 is a significant improvement over earlier YOLO versions. GPU-only accuracy is comparable, but YOLOv26 is far easier to deploy.

Existing Ultralytics training workflows carry over: the training pipeline and dataset format remain consistent with previous Ultralytics YOLO versions.

YOLOv26 also runs well on low-power edge devices. The Nano variant is particularly well-suited due to its NMS-free, quantization-friendly design.