Tiny Vision Language Models (VLMs) are rapidly transforming the AI landscape. Almost every week, new VLMs with smaller footprints are being released. These models are finding applications across diverse fields – agriculture, robotics, manufacturing, healthcare, wellness, forensics, and more. In this article, you will learn how to run a VLM on Jetson Nano using Huggingface Transformers.

Learning Objectives:

- Set up Jetson Orin Nano with JetPack and the CUDA toolkit

- Install Huggingface transformers and related libraries

- Run LiquidAI, Moondream2, FastVLM, and SmolVLM

Table of Contents:

- Why VLM on Jetson Nano?

- How to Setup Jetson Nano for Running VLM

- Huggingface Framework for Inferencing VLM on Jetson Nano

- Inference Using Moondream2

- Inference using LiquidAI

- Inference Using FastVLM Family from Apple

- Inference Using SmolVLM Family

Why VLM on Jetson Nano?

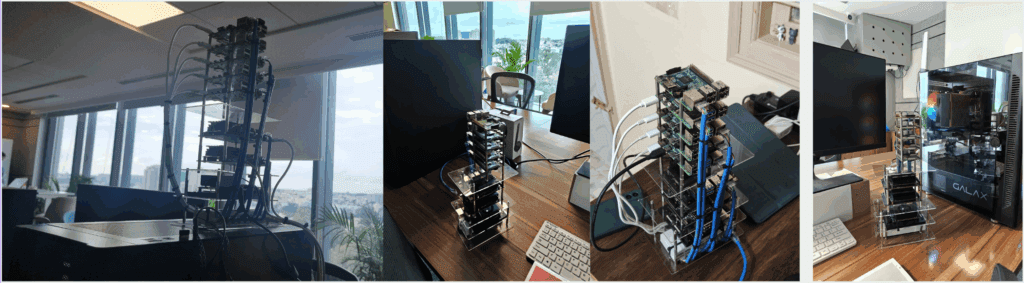

Previously, we built a Raspberry Pi and Jetson Nano cluster to test various VLMs (and LLMs) on hardware with limited resources. We compared how the boards perform while running Qwen2.5VL and Moondream2 using Ollama. Of all the boards, the Jetson Orin Nano (out of the box) outperformed the rest by a wide margin. Check out VLM on Edge: Worth the Hype or Just a Novelty? for the details.

VLMs on Jetson Nano are generating promising results. With 8 GB of unified memory, the board can also handle some models with parameter counts as high as 7B (8-bit quantized).
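To see why a 7B model needs 8-bit quantization to fit, here is a rough back-of-the-envelope sketch. It counts weight storage only (in decimal GB) and ignores activations, the KV cache, and the memory used by the OS, all of which share the same 8 GB of unified memory. The function name is our own.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed for the model weights alone, in decimal GB."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


# Compare common precisions for a 7B-parameter model
for bits in (16, 8, 4):
    print(f"7B parameters at {bits}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
```

At 16-bit the weights alone come to 14 GB, which cannot fit. At 8-bit they come to 7 GB, which leaves very little headroom on an 8 GB board.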

How to Setup Jetson Nano for Running VLM?

Setting up on edge devices isn’t always straightforward. Dependency conflicts, deprecations, and even unreleased binaries (as of September 2025) can make the process frustrating. That’s where this guide comes in – follow along, and you’ll have your Jetson Orin Nano running VLMs in minutes.

We are using the Jetson Orin Nano Devkit (8 GB) with a 256 GB SSD. One of the perks of this board is that it comes with 2 additional slots for installing M.2 SSDs. Note that it only accepts PCIe NVMe drives; SATA-based drives are not supported. Moreover, the slots are designed for the Gen 3 interface, so buying Gen 4 PCIe SSDs will not make it faster: they will work, but only at Gen 3 speed. You can also use an SD card, but read/write speeds will be slower.

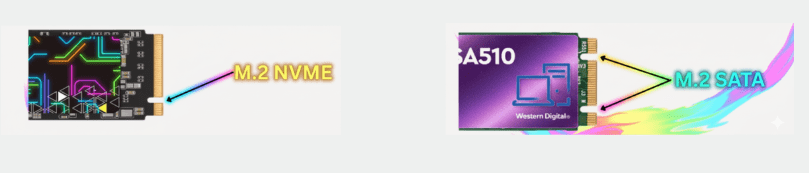

Q. How to identify a PCIe or SATA drive?

Check the specifications when buying one. In terms of physical appearance, SATA drives usually have 2 notches and PCIe drives have 1 notch.

2.1 Flash Jetson Orin Nano with Jetpack

JetPack is NVIDIA’s software stack for Jetson. It bundles the Board Support Package (BSP) with Ubuntu OS, Jetson Platform Services, and the AI stack (CUDA, TensorRT, cuDNN, etc.). We will use NVIDIA SDK Manager to flash JetPack 6 onto the board. Follow the steps provided on the official website.

Note: JetPack 6 comes with CUDA 12.6. The CUDA version cannot be changed directly if you later need a different one.

2.2 Install and Verify Jetson Essentials

Let’s check whether the nvidia-jetpack stack is installed correctly using the first two commands below. If it is not installed, the remaining commands add the NVIDIA APT repositories, install the stack, and verify it.

apt list --installed | grep nvidia-jetpack

dpkg-query --show nvidia-l4t-core

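# Add NVIDIA APT Repositories (r34.1 is the tag used in the original commands; use the tag matching your L4T release, e.g. r36.x for JetPack 6)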

sudo bash -c 'echo "deb https://repo.download.nvidia.com/jetson/common r34.1 main" >> /etc/apt/sources.list.d/nvidia-l4t-apt-source.list'

sudo bash -c 'echo "deb https://repo.download.nvidia.com/jetson/t234 r34.1 main" >> /etc/apt/sources.list.d/nvidia-l4t-apt-source.list'

# Update and Upgrade the System

sudo apt update

sudo apt dist-upgrade

# Install Jetpack SDK and Verify

sudo apt install nvidia-jetpack

apt list --installed | grep nvidia-jetpack

apt list --installed | grep cuda-toolkit

apt list --installed | grep cudnn

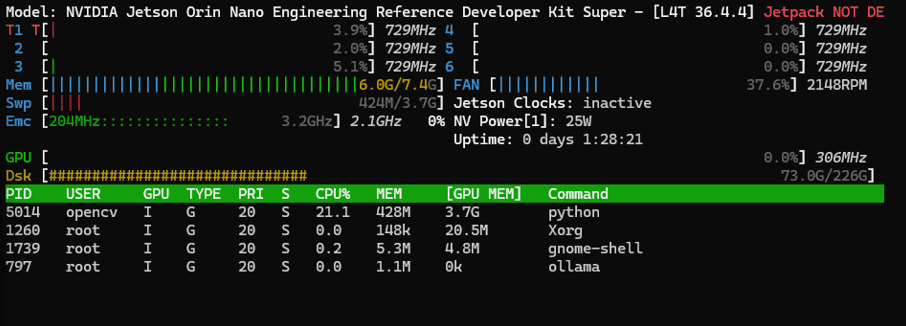

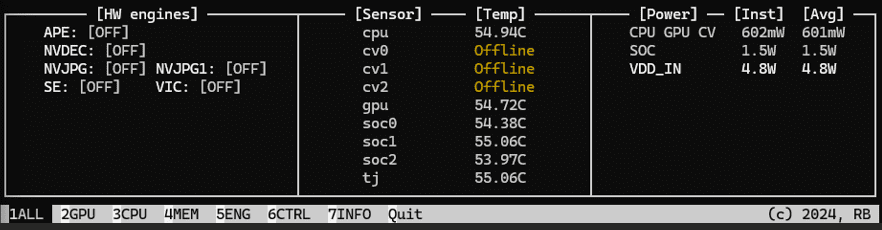
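The version printed by dpkg-query for nvidia-l4t-core tells you which JetPack generation is on the board. The sketch below maps the L4T major release to the JetPack major version. The sample line is illustrative rather than copied from our board, and the helper name is our own.

```python
import re

# L4T major release -> JetPack major version
L4T_TO_JETPACK = {32: 4, 34: 5, 35: 5, 36: 6}


def jetpack_major_from_l4t(dpkg_line: str):
    """Parse a 'dpkg-query --show nvidia-l4t-core' line; return the JetPack major version or None."""
    match = re.search(r"nvidia-l4t-core\s+(\d+)\.(\d+)", dpkg_line)
    if match is None:
        return None
    return L4T_TO_JETPACK.get(int(match.group(1)))


# Illustrative sample line (hypothetical version string)
sample = "nvidia-l4t-core\t36.4.3-20250107174145"
print(jetpack_major_from_l4t(sample))
```

If this reports 5 instead of 6, the CUDA 12.6 wheels used later in this guide are unlikely to match your system.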

The nvidia-smi command does not work on the Jetson Orin Nano, so we install the jtop monitoring utility to get similar results. It provides real-time information on CPU, GPU, and thermals. Once installed, you may have to reboot the board before jtop works. In our case, we had to restart the service as shown below.

sudo apt update

sudo apt upgrade -y

sudo apt install python3-pip -y

sudo -H pip3 install -U jetson-stats

# sudo systemctl restart jtop.service

# sudo reboot now

# jtop
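The jetson-stats package also ships a Python module, so the same readings can be pulled into a script or notebook. The following is a minimal sketch that takes a single snapshot. It returns None when jtop is not installed or the service is not reachable, and the function name is our own.

```python
def read_jetson_stats():
    """Return one snapshot of jtop stats as a dict, or None if jtop is unavailable."""
    try:
        from jtop import jtop  # provided by the jetson-stats package
    except ImportError:
        return None
    try:
        with jtop() as jetson:
            if jetson.ok():
                return dict(jetson.stats)
    except Exception:
        # For example, the jtop service has not been started yet
        return None
    return None


stats = read_jetson_stats()
print(stats if stats is not None else "jtop is not available on this machine")
```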

2.3 Install BLAS + Set CUDA Version

OpenBLAS provides optimized linear algebra routines. We also export the CUDA version, as shown below, to match subsequent installs.

sudo apt-get install -y python3-pip libopenblas-dev

export CUDA_VERSION=12.6

2.4 Install Sparse Matrix Library (cuSPARSELt)

The following commands download and install NVIDIA’s cuSPARSELt repo and add its keyring to the trusted sources. If you are installing JetPack 5, you will get CUDA 11.x, and the final command becomes sudo apt -y install cusparselt-cuda-11.

wget https://developer.download.nvidia.com/compute/cusparselt/0.8.1/local_installers/cusparselt-local-tegra-repo-ubuntu2204-0.8.1_0.8.1-1_arm64.deb

sudo dpkg -i cusparselt-local-tegra-repo-ubuntu2204-0.8.1_0.8.1-1_arm64.deb

sudo cp /var/cusparselt-local-tegra-repo-ubuntu2204-0.8.1/cusparselt-local-tegra-C4CC87E1-keyring.gpg /usr/share/keyrings/

sudo apt update

sudo apt -y install cusparselt-cuda-12

2.5 Install PyTorch, TorchAudio, TorchVision (Jetson-Compatible Wheels)

Compatible pre-built binaries for aarch64 are available from the Jetson AI Lab PyPI index. We are choosing JetPack 6 and CUDA 12.6 in our case. If your versions differ, choose accordingly.

wget https://pypi.jetson-ai-lab.io/jp6/cu126/+f/590/92ab729aee2b8/torch-2.8.0-cp310-cp310-linux_aarch64.whl#sha256=59092ab729aee2b8937d80cc1b35d1128275bd02a7e1bc911e7efa375bd97226 -O torch-2.8.0-cp310-cp310-linux_aarch64.whl

wget https://pypi.jetson-ai-lab.io/jp6/cu126/+f/de1/5388b8f70e4e1/torchaudio-2.8.0-cp310-cp310-linux_aarch64.whl#sha256=de15388b8f70e4e17a05b23a4ae1f55a288c91449371bb8aeeb69184d40be17f -O torchaudio-2.8.0-cp310-cp310-linux_aarch64.whl

wget https://pypi.jetson-ai-lab.io/jp6/cu126/+f/1c0/3de08a69e9554/torchvision-0.23.0-cp310-cp310-linux_aarch64.whl#sha256=1c03de08a69e95542024477e0cde95fab3436804917133d3f00e67629d3fe902 -O torchvision-0.23.0-cp310-cp310-linux_aarch64.whl

python3 -m pip install numpy

python3 -m pip install --no-cache torch-2.8.0-cp310-cp310-linux_aarch64.whl

python3 -m pip install torchvision-0.23.0-cp310-cp310-linux_aarch64.whl

python3 -m pip install torchaudio-2.8.0-cp310-cp310-linux_aarch64.whl
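Once the wheels are installed, it is worth confirming that PyTorch actually sees the GPU before moving on. The sketch below collects the relevant fields into a dictionary and degrades gracefully when torch is missing. The function name and dictionary keys are our own.

```python
import importlib.util


def torch_cuda_report() -> dict:
    """Summarize whether torch is installed and whether it can use CUDA."""
    report = {"torch_installed": importlib.util.find_spec("torch") is not None}
    if not report["torch_installed"]:
        return report

    import torch

    report["torch_version"] = torch.__version__
    report["cuda_available"] = torch.cuda.is_available()
    if report["cuda_available"]:
        report["cuda_version"] = torch.version.cuda
        report["device_name"] = torch.cuda.get_device_name(0)
    return report


print(torch_cuda_report())
```

If cuda_available comes back False on the board, the wheel most likely does not match the installed JetPack or CUDA version.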

2.6 Install cuDSS (CUDA Sparse Solver)

CUDA Direct Sparse Solver, or cuDSS, is an NVIDIA library designed to solve sparse linear systems of equations on GPUs. Sparse systems appear a lot in scientific computing, optimization, circuit simulation, machine learning, and graph analytics, basically wherever matrices are large but most entries are zero. The following commands download, install, and verify it.

mkdir -p tmp_cudss && cd tmp_cudss

CUSPARSE_SOLVER_NAME="libcudss-linux-sbsa-0.6.0.5_cuda12-archive"

curl -L -O https://developer.download.nvidia.com/compute/cudss/redist/libcudss/linux-sbsa/${CUSPARSE_SOLVER_NAME}.tar.xz

tar xf ${CUSPARSE_SOLVER_NAME}.tar.xz

sudo cp -a ${CUSPARSE_SOLVER_NAME}/include/* /usr/local/cuda/include/

sudo cp -a ${CUSPARSE_SOLVER_NAME}/lib/* /usr/local/cuda/lib64/

cd ..

rm -rf tmp_cudss

sudo ldconfig

ls /usr/local/cuda/lib64 | grep cudss

Huggingface Framework for Inferencing VLM on Jetson Nano

Huggingface is one of the most popular open-source ecosystems for machine learning. It provides thousands of pre-trained models including VLMs. The models can be easily downloaded and run with just a few lines of code. It is pythonic, and provides finer control for experimenting on models including quantization to increase speed and reduce memory usage.

3.1 Install Core Libraries and Authenticate

Install Hugging Face Transformers and the core libraries using the command below.

pip install transformers accelerate huggingface_hub

You will also have to authenticate to download some gated models from the hub, using the command hf auth login (huggingface-cli login on older versions of huggingface_hub). It will prompt you to enter a token. If you do not have one yet, follow the link shown in the prompt to generate a new token.
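Authentication can also be done from Python, which is handy inside a notebook. The sketch below reads the token from an environment variable and passes it to login from huggingface_hub. The helper name is our own, and reading the token from HF_TOKEN is simply the convention assumed here.

```python
import os


def login_from_env(var_name: str = "HF_TOKEN") -> bool:
    """Log in to the Hugging Face Hub with a token from the environment; return False if none is set."""
    token = os.environ.get(var_name)
    if not token:
        return False

    # Imported lazily so the check above works even without huggingface_hub installed
    from huggingface_hub import login

    login(token=token)
    return True


if not login_from_env():
    print("No token found in the environment; run the CLI login instead.")
```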

We will load the models with fp16 precision or whatever version is hosted by default. The list of models that we will use for test run are as follows:

- Moondream2

- LFM2-VL-450M from LiquidAI

- LFM2-VL-1.6B from LiquidAI

- FastVLM-1.5B from Apple

- FastVLM-500M from Apple

- SmolVLM2-2.2B-Instruct from Huggingface

3.2 Install BitsAndBytes Library

Hugging Face Transformers integrates bitsandbytes so you can load large models in 4-bit or 8-bit precision with a single flag (load_in_4bit=True or load_in_8bit=True). This reduces GPU memory requirements by 2x to 4x, enabling you to run larger models. Quantization can also improve inference or training speed, depending on the hardware and model.

Note: Sometimes quantization makes models slower on CPU.

You cannot install bitsandbytes directly from PyPI on the Jetson Orin Nano because it is not compiled for aarch64. You can compile it from source, but the best way is to use the wheels from Jetson AI Lab.

wget https://pypi.jetson-ai-lab.io/jp6/cu126/+f/d46/6b5819e312dd5/bitsandbytes-0.48.0.dev0-cp310-cp310-linux_aarch64.whl#sha256=d466b5819e312dd5fb7fa4226a074e2d90baed93d479897f7941a32a2a729e12 -O bitsandbytes-0.48.0.dev0-cp310-cp310-linux_aarch64.whl

pip install bitsandbytes-0.48.0.dev0-cp310-cp310-linux_aarch64.whl
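With bitsandbytes installed, the quantization flags mentioned above are typically passed through a BitsAndBytesConfig object. The following is a sketch rather than the exact code from our notebooks: the helper names are our own, and we have not verified here that every model in the list loads cleanly in 4-bit or 8-bit.

```python
def quantization_kwargs(bits: int) -> dict:
    """Translate a bit width into the matching bitsandbytes flag."""
    if bits == 4:
        return {"load_in_4bit": True}
    if bits == 8:
        return {"load_in_8bit": True}
    raise ValueError("bits must be 4 or 8")


def load_quantized(model_id: str, bits: int = 4):
    """Load a vision-language model with quantized weights (downloads the model)."""
    # Imported lazily so quantization_kwargs can be used without transformers installed
    from transformers import AutoModelForImageTextToText, BitsAndBytesConfig

    config = BitsAndBytesConfig(**quantization_kwargs(bits))
    return AutoModelForImageTextToText.from_pretrained(
        model_id,
        quantization_config=config,
        device_map="auto",
    )


print(quantization_kwargs(4))

# Example call, not run here because it downloads several GB:
# model = load_quantized("HuggingFaceTB/SmolVLM2-2.2B-Instruct", bits=4)
```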

Moondream2 VLM on Jetson Inferencing

Moondream2 is a compact, open-source vision-language model crafted by Vikhyat Korrapati (github username vikhyatk). What makes it impressive is its efficiency. With just 1.86B parameters, Moondream2 balances performance and resource usage. Tasks supported by the model are as follows.

- Image captioning

- Visual question answering (VQA)

- Zero-shot object detection

- Pointing

- OCR and gaze detection (available only through the VQA interface)

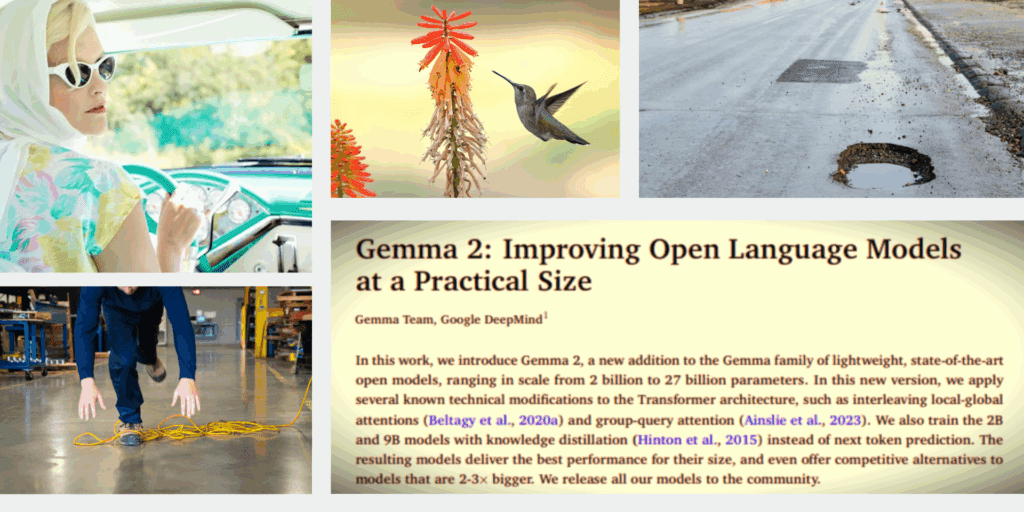

We will be using the following images to test tiny VLMs on the Jetson Orin Nano board: a person sitting in a car, a generic image of a flower and a bird, a pothole image, an image where a person is about to fall after tripping on a cable, and a text-rich image.

4.1 Import Dependencies

import time

from PIL import Image, ImageDraw

# Import matplotlib to plot image outputs

import matplotlib.pyplot as plt

%matplotlib inline

plt.rcParams['image.cmap'] = 'gray'

from transformers import AutoModelForCausalLM, AutoTokenizer

4.2 Load Moondream2 Model

model = AutoModelForCausalLM.from_pretrained(

"moondream/moondream-2b-2025-04-14",

revision="2025-06-21",

trust_remote_code=True,

device_map="auto",

)

dtype = next(model.parameters()).dtype

print(dtype)

4.3 Normal Caption using Moondream2

You can also generate short or long captions by changing the length parameter.

# Load the test image (assumed here to be the same bird image used in the later sections)

img = Image.open('../tasks/bird.jpg')

print('Normal caption:')

t1 = time.time()

normal_caption = model.caption(img, length="normal")["caption"]

# Print the caption one character at a time

for t in normal_caption:

    print(t, end="", flush=True)

t2 = time.time()

diff = t2 - t1

print(f"\nCaption Time : {round(diff,2)}")

Normal caption:

A hummingbird with a green and gray body and a long, slender beak is captured in mid-flight, hovering near a vibrant red flower. The flower, with its numerous small, orange petals, is attached to a green stem. The background is a soft, out-of-focus yellowish-beige, providing a contrast to the vivid colors of the flower and hummingbird. Another red flower is partially visible in the bottom left corner of the image.

Caption Time : 8.14

4.4 VQA using MoonDream – Example 1

# Visual Querying

qimg = Image.open('../tasks/potholes.png')

print("\nVisual query: 'How many potholes are there in the image?'")

print(model.query(qimg, "How many potholes are there in the image?")["answer"])

Visual query: 'How many potholes are there in the image?'

There is one pothole in the image.

4.5 VQA using MoonDream – Example 2

# Visual Querying

qimg = Image.open('../tasks/cable-trip.jpg')

print("\nVisual query: 'Why is the person falling?'")

print(model.query(qimg, "Why is the person falling?")["answer"])

Visual query: 'Why is the person falling?'

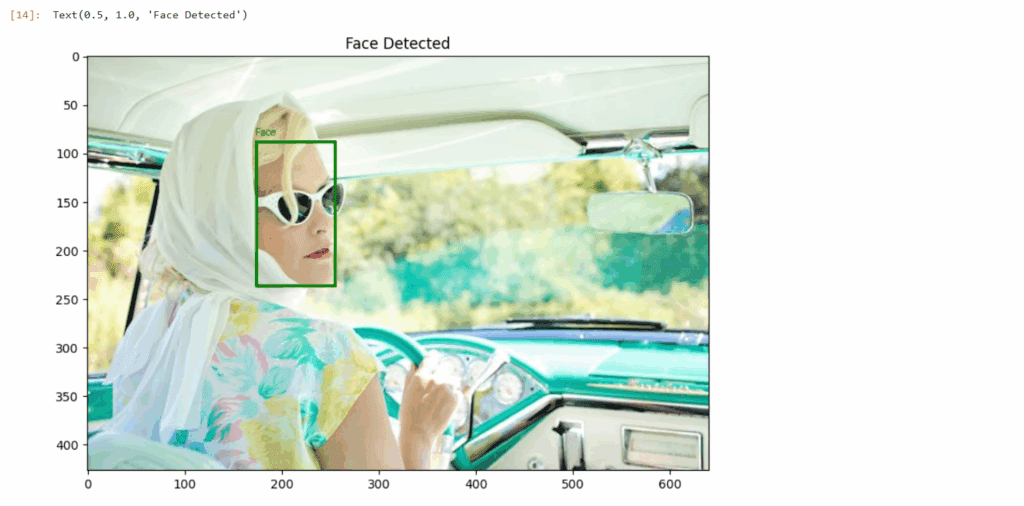

The person is falling because they have tripped over a yellow extension cord on the floor. The cord is tangled and lies on the ground, causing the person to lose their balance and fall. This incident highlights the importance of being cautious and aware of one's surroundings, especially in industrial or commercial environments where electrical cords and equipment are present.

4.6 Object Detection using Moondream2 VLM on Jetson

# Object Detection

imgf = Image.open('../tasks/driving-gaze.jpg')

print("\nObject detection: 'face'")

objects = model.detect(imgf, "face")["objects"]

print(f"Found {len(objects)} face(s)")

w, h = imgf.size

Object detection: 'face'

Found 1 face(s)

# Create draw object

draw = ImageDraw.Draw(imgf)

# Loop over bboxes

for bbox in objects:

    # Convert normalized to pixel coords

    x_min = int(bbox['x_min'] * w)

    y_min = int(bbox['y_min'] * h)

    x_max = int(bbox['x_max'] * w)

    y_max = int(bbox['y_max'] * h)

    # Draw rectangle (outline only)

    draw.rectangle([x_min, y_min, x_max, y_max], outline="green", width=3)

    # Optionally add text

    draw.text((x_min, y_min - 15), "Face", fill="green")

plt.figure(figsize = [20, 8])

plt.subplot(121); plt.imshow(imgf); plt.title('Face Detected')
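The detection code above converts normalized box coordinates into pixels inline. The same step can be pulled into a small helper, together with a guard that keeps the label on the image when a box touches the top edge. The helper names and the sample box are our own, and the values are made up for illustration.

```python
def to_pixel_box(bbox: dict, width: int, height: int) -> tuple:
    """Scale a normalized {x_min, y_min, x_max, y_max} box to integer pixel coordinates."""
    return (
        int(bbox["x_min"] * width),
        int(bbox["y_min"] * height),
        int(bbox["x_max"] * width),
        int(bbox["y_max"] * height),
    )


def label_position(box: tuple, offset: int = 15) -> tuple:
    """Place the label above the box, clamped so it never leaves the image."""
    x_min, y_min, _, _ = box
    return (x_min, max(0, y_min - offset))


# Hypothetical normalized box on a 200 x 160 image
box = to_pixel_box({"x_min": 0.25, "y_min": 0.0625, "x_max": 0.75, "y_max": 0.5}, 200, 160)
print(box)                  # (50, 10, 150, 80)
print(label_position(box))  # (50, 0)
```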

The notebooks for LiquidAI, FastVLM, and SmolVLM have been included in the download code section. We will show one example of caption for each.

LFM2-VL LiquidAI VLM on Jetson Inferencing

Liquid Foundation Models (LFMs) are developed by the company LiquidAI. They are a novel model class built on dynamics, signal processing, and numerical linear algebra instead of traditional transformers. Released in mid-2025, LFM2-VL extends their LFM2 text-only models into the vision-language domain. There are two main model variants: LFM2-VL-450M and LFM2-VL-1.6B. Tasks supported by the model are as follows.

- Image captioning

- Visual question answering (VQA)

- Grounding supported through VQA, not native

Let’s take a look at the code to see how the model is loaded and how caption inference is executed.

5.1 Import Dependencies and Load LFM2-VL-1.6B

import time

from PIL import Image

from transformers import AutoProcessor, AutoModelForImageTextToText

from transformers.image_utils import load_image

# Load model and processor

model_id = "LiquidAI/LFM2-VL-1.6B"

model = AutoModelForImageTextToText.from_pretrained(

model_id,

device_map="auto",

dtype="bfloat16",

trust_remote_code=True

)

processor = AutoProcessor.from_pretrained(model_id, trust_remote_code=True)

5.2 Prepare Inputs and Run Inference

# Load image and create conversation

image = Image.open("../tasks/bird.jpg")

query = "What do you see in the image? Answer in 100 words."

conversation = [

{

"role": "user",

"content": [

{"type": "image", "image": image},

{"type": "text", "text": query},

],

},

]

# Generate Answer

inputs = processor.apply_chat_template(

conversation,

add_generation_prompt=True,

return_tensors="pt",

return_dict=True,

tokenize=True).to(model.device)

t1 = time.time()

# Generate output

outputs = model.generate(**inputs, max_new_tokens=128)

t2 = time.time()

# Decode the output

gen = processor.batch_decode(outputs, skip_special_tokens=True)[0]

print(gen)

print(f"\n Generation Time : {round(t2-t1, 2)} s")
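Because batch_decode is applied to the full output sequence, the printed text contains the prompt turns as well as the answer. If only the answer is wanted, the prompt tokens can be dropped before decoding. The sketch below shows the slicing on plain lists standing in for tensors. The helper name and the token ids are made up.

```python
def new_tokens_only(output_ids, prompt_length: int):
    """Drop the first prompt_length ids from every sequence in a batch."""
    return [sequence[prompt_length:] for sequence in output_ids]


# Toy batch: 3 prompt ids followed by 2 generated ids
toy_outputs = [[101, 7, 8, 42, 43]]
print(new_tokens_only(toy_outputs, prompt_length=3))  # [[42, 43]]

# With the variables from the code above, this would look like:
# prompt_length = inputs["input_ids"].shape[1]
# answer_ids = outputs[:, prompt_length:]  # equivalent tensor slice
# print(processor.batch_decode(answer_ids, skip_special_tokens=True)[0])
```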

user

What do you see in the image? Answer in 100 words.

assistant

The image showcases a hummingbird in flight, hovering near a vibrant flower. The hummingbird's wings are blurred, capturing its rapid movement. The flower is striking, with a green stem and a mix of orange and yellow petals. The background is a soft blur of yellow and green, creating a warm, natural setting. This scene beautifully illustrates the symbiotic relationship between hummingbirds and flowers, highlighting the intricate details of both creatures in their natural habitat.

Generation Time : 7.95 s

Inference Using FastVLM Family from Apple

FastVLM is Apple’s groundbreaking vision-language model introduced at CVPR 2025, with an official blog post on July 23, 2025. It stands out because of the following factors.

- Its FastViTHD hybrid encoder, enabling fewer image tokens and extremely fast processing.

- Tremendously reduced TTFT (up to 85× faster vs. prior models).

- Strong performance across various VLM benchmarks while remaining small and efficient.

- Smooth on-device capability, particularly on Apple Silicon, preserving user privacy and enabling offline use.

- A working browser demo that shows the model’s real-time captioning capabilities with the 0.5B variant.

It has three primary variants: 0.5B, 1.5B, and 7B. Optimized formats such as fp16, int4, and int8 versions are also available on Hugging Face. Tasks supported by the model are as follows.

- Image captioning

- Visual question answering (VQA)

- Grounding supported through VQA, not native

6.1 Load FastVLM-1.5B

import time

import torch

from PIL import Image

from transformers import AutoTokenizer, AutoModelForCausalLM

path = "apple/FastVLM-1.5B"

IMAGE_TOKEN_INDEX = -200 # what the model code looks for

# Load

tok = AutoTokenizer.from_pretrained(path, trust_remote_code=True)

model = AutoModelForCausalLM.from_pretrained(

path,

dtype=torch.float16,

device_map="auto",

trust_remote_code=True,

)

6.2 Pre-Processing Steps

messages = [

{"role": "user", "content": "<image>\nDescribe this image in detail."}

]

rendered = tok.apply_chat_template(

messages, add_generation_prompt=True, tokenize=False

)

pre, post = rendered.split("<image>", 1)

# Tokenize the text *around* the image token (no extra specials!)

pre_ids = tok(pre, return_tensors="pt", add_special_tokens=False).input_ids

post_ids = tok(post, return_tensors="pt", add_special_tokens=False).input_ids

# Splice in the IMAGE token id (-200) at the placeholder position

img_tok = torch.tensor([[IMAGE_TOKEN_INDEX]], dtype=pre_ids.dtype)

input_ids = torch.cat([pre_ids, img_tok, post_ids], dim=1).to(model.device)

attention_mask = torch.ones_like(input_ids, device=model.device)

# Preprocess image via the model's own processor

img = Image.open("../tasks/bird.jpg").convert("RGB")

px = model.get_vision_tower().image_processor(images=img, return_tensors="pt")["pixel_values"]

px = px.to(model.device, dtype=model.dtype)
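The key step above is the splice: the text is tokenized on either side of the image placeholder, and a single sentinel id (-200, the value the model code looks for) is inserted between the two halves. Here is the same idea on plain Python lists, without torch. The helper name and the token ids are made up for illustration.

```python
IMAGE_TOKEN_INDEX = -200  # same sentinel value as in the code above


def splice_image_token(pre_ids, post_ids, image_token=IMAGE_TOKEN_INDEX):
    """Join the ids before and after the placeholder with one image sentinel in between."""
    return list(pre_ids) + [image_token] + list(post_ids)


# Split a rendered prompt at the placeholder, as the code above does
rendered = "user\n<image>\nDescribe this image in detail."
pre, post = rendered.split("<image>", 1)

# Hypothetical token ids for the two text halves
pre_ids = [11, 12]
post_ids = [13, 14, 15]

input_ids = splice_image_token(pre_ids, post_ids)
print(input_ids)  # [11, 12, -200, 13, 14, 15]
```

The sentinel is not a real vocabulary id, which is why the two halves are tokenized with add_special_tokens=False and joined by hand instead of tokenizing the whole string at once.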

6.3 Generate Result – Inference

# Generate

t1 = time.time()

with torch.no_grad():

    out = model.generate(

        inputs=input_ids,

        attention_mask=attention_mask,

        images=px,

        max_new_tokens=128,

    )

t2 = time.time()

print(tok.decode(out[0], skip_special_tokens=True))

print(f"Generation Time: {round(t2-t1,2)}")

A vibrant outdoor photograph captures a striking scene dominated by a bright red spike of flowers at the top of the image, contrasting sharply against a yellowish-white background. The focal point of the right side of the image is a blue-tinted hummingbird mid-flight against this backdrop. The bird, with its distinct black eye and long black beak, gracefully hovers directly in front of the flower stalk, as if it’s about to land on the blooms. Its body is a beautiful mosaic of colors, with greenish speckles on the side, and gray streaks along its body.

The flower cluster itself is long and cylindrical,

Generation Time: 13.86

SmolVLM on Jetson Nano

The SmolVLM family comes from the team at Hugging Face. The first SmolVLM model (2B) was released in November 2024, followed by SmolVLM-256M and SmolVLM-500M in January 2025. These are the smallest VLMs in the world as of today. The video-capable models were released in February 2025. Like the models above, the SmolVLM family supports most tasks through the VQA interface, without any native functions.

- Image captioning

- Visual question answering (VQA)

- Grounding supported through VQA, not native

import torch

from PIL import Image

from transformers import AutoProcessor, AutoModelForImageTextToText

from transformers.image_utils import load_image

model_path = "HuggingFaceTB/SmolVLM2-2.2B-Instruct"

processor = AutoProcessor.from_pretrained(model_path)

model = AutoModelForImageTextToText.from_pretrained(

model_path,

torch_dtype=torch.bfloat16

).to("cuda")

image = Image.open("../tasks/bird.jpg")

query = "Can you describe this image?"

messages = [

{

"role": "user",

"content": [

{"type": "image", "path": "../tasks/bird.jpg"},

{"type": "text", "text": query },

]

},

]

inputs = processor.apply_chat_template(

messages,

add_generation_prompt=True,

tokenize=True,

return_dict=True,

return_tensors="pt",

).to(model.device, dtype=torch.bfloat16)

generated_ids = model.generate(**inputs, do_sample=False, max_new_tokens=64)

generated_texts = processor.batch_decode(

generated_ids,

skip_special_tokens=True,

)

print(generated_texts[0])

User:

Can you describe this image?

Assistant: The image depicts a hummingbird in mid-flight, hovering near a flower. The hummingbird is captured in a dynamic pose, with its wings spread wide and its long, thin beak extended towards the flower. The flower itself is an Aloe polyphylla, characterized by its tall, slender stem and a cluster of

VLM on Jetson Nano Conclusion

Setting up a VLM in a resource-constrained environment is like trying to fit an elephant inside a fridge. Still, the performance on the Jetson Orin Nano is decent. The speed is also somewhat comparable to a consumer GPU like the RTX 3060 12 GB.
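To compare speed across models or boards, it helps to turn the generation times reported above into tokens per second. The sketch below does that. The example assumes the FastVLM run produced the full max_new_tokens=128 in 13.86 s, which the truncated output suggests but which we did not count explicitly.

```python
def tokens_per_second(num_new_tokens: int, seconds: float) -> float:
    """Generation throughput: newly generated tokens divided by wall-clock time."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return num_new_tokens / seconds


# Assumption: 128 new tokens were generated in the 13.86 s FastVLM run
print(f"{tokens_per_second(128, 13.86):.1f} tokens/s")
```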

So that’s all about setting up a pipeline to run VLMs on the Jetson Orin Nano. I hope you enjoyed reading the post. With the right setup and Hugging Face support, models like Moondream2, LiquidAI’s LFM2-VL, Apple’s FastVLM, and Hugging Face’s SmolVLM run decently on this compact board, each offering different strengths in speed, size, and capability. The Orin Nano is a promising, capable platform for real-time, private, and low-power AI applications.
