Why buy a whole pizza when you’ll only eat one slice? That’s roughly how traditional LLM inference works: it reserves a huge chunk of GPU memory for every request, even if most of it sits unused. The result is low throughput, slow responses, and GPU bills that hurt. vLLM fixes this with PagedAttention, which hands out memory in small, reusable pages, and with continuous batching, which keeps the GPU busy. On the same hardware, that can mean around 4x more tokens per second than Hugging Face Transformers. So in 2025, should we stop using Transformers altogether? Read on to find out.

What’s covered in the blog post?

- Introduction to vLLM

- Architecture explanation

What is vLLM?

vLLM is a free, open-source inference engine designed to make LLMs fast and cheap in production. It was created by UC Berkeley researchers in 2023, and today it is widely used to serve open-source LLMs.

vLLM replaces the slow default sampling loop in Hugging Face Transformers. With just a few lines of code, we can serve models 4x faster, handle hundreds of users at once, and run an OpenAI-compatible API locally, all for free.

Previously, we worked with DeepSeek-OCR and saw how performance differs between the Transformers and vLLM pipelines. With Transformers, it took 60s to process a single image, whereas vLLM processed 15 images in about 6 seconds. That’s the difference vLLM makes compared to Hugging Face Transformers.

It is interesting to realise how much compute power we were wasting before this. Want to know more about how it works? Continue reading.

How vLLM Runs LLMs Faster and Cheaper?

vLLM makes LLMs faster and cheaper by fixing the two biggest hidden wastes in traditional inference: memory fragmentation and GPU idle time.

- PagedAttention: instead of wasting huge blocks of GPU memory on each request (like the pizza problem), it splits memory into small reusable pages, cutting memory waste by up to 90%.

- Continuous batching: it dynamically mixes new requests with ongoing ones so your GPU is never idle, delivering 3 to 10x higher throughput on the exact same hardware.

2.1 What is KV Cache?

When a large language model (like Llama-3 or Mixtral) generates text, it uses Transformer attention to remember everything it has seen so far.

- K = Keys

- V = Values

For every token the model has already processed, it stores a small key vector and a small value vector (in every layer and attention head) that represent that token’s meaning and position. Together, all these stored keys and values form the KV cache (also called the attention cache or key-value cache).
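
To make the idea concrete, here is a minimal sketch of a KV cache for a single attention head, written in plain Python. It is a toy under stated assumptions, not how any real model is implemented: the `project` helper is a hypothetical stand-in for the learned projection matrices, and there is only one layer and one head. The point is that each new token appends one key and one value, and attention for the newest token reads everything cached so far instead of recomputing it.

```python
import math


def attend(query, keys, values):
    """Single-head scaled dot-product attention for one query vector."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    peak = max(scores)  # subtract the max for a numerically stable softmax
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) / total for i in range(dim)]


class KVCache:
    """Append-only store: one key vector and one value vector per token."""

    def __init__(self):
        self.keys = []
        self.values = []

    def append(self, key, value):
        self.keys.append(key)
        self.values.append(value)

    def __len__(self):
        return len(self.keys)


def project(token_vec, offset):
    # Hypothetical stand-in for a learned projection (assumption for illustration).
    return [x + offset for x in token_vec]


tokens = [[0.1, 0.2], [0.3, -0.1], [0.5, 0.4]]
cache = KVCache()
outputs = []
for tok in tokens:
    # Only the new token's key and value are computed; older ones come from the cache.
    cache.append(project(tok, 0.5), project(tok, -0.5))
    outputs.append(attend(project(tok, 0.0), cache.keys, cache.values))

print(len(cache))  # one cached (key, value) pair per processed token
```

The cache length equals the number of tokens processed, which is exactly why its memory footprint keeps growing as the sequence gets longer.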

The problem: the KV cache grows with every token in the sequence (input + output).

At 70B parameters and 32k context, a single user can consume tens of gigabytes of GPU memory just for this cache: roughly 10 GB with grouped-query attention in 16-bit precision, and up to 80 GB with full multi-head attention. Traditional serving stacks such as Hugging Face Transformers store each user’s KV cache as one giant contiguous block, which results in massive fragmentation.
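
A quick back-of-the-envelope calculation shows where numbers of this magnitude come from. The sketch below assumes a Llama-3-70B-like shape (80 layers, head dimension 128, 8 key-value heads with grouped-query attention versus 64 with full multi-head attention) and 16-bit values; these figures are assumptions for illustration, so plug in the config of your own model.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence."""
    # The leading 2 accounts for storing both a key and a value per token.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


GIB = 1024 ** 3
CONTEXT = 32 * 1024  # 32k tokens

# Assumed shapes: 80 layers, head_dim 128, fp16/bf16 values.
gqa = kv_cache_bytes(num_layers=80, num_kv_heads=8, head_dim=128, seq_len=CONTEXT)
mha = kv_cache_bytes(num_layers=80, num_kv_heads=64, head_dim=128, seq_len=CONTEXT)

print(gqa / GIB)  # 10.0 GiB with grouped-query attention
print(mha / GIB)  # 80.0 GiB with full multi-head attention
```

Keep in mind this is per sequence. Multiply by the number of concurrent users and it becomes clear why wasting even a fraction of this memory hurts.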

2.2 PagedAttention: The Core Trick That Makes vLLM Magical

The solution is inspired by the way operating systems deal with memory fragmentation: virtual memory with paging. Interestingly, that problem was solved decades ago!

PagedAttention performs the following:

- Divide the KV cache into small fixed-size pages (say 16 or 512 tokens per page)

- Store these pages in a non-contiguous page table instead of one giant block (just like virtual memory in Linux/Windows)

- When a sequence needs more space, vLLM allocates only the pages it actually needs

- They can be scattered anywhere in GPU memory

- When a sequence finishes or gets preempted, its pages are instantly returned to a global free pool

- New requests (or continuing ones) grab pages from that pool

Furthermore, PagedAttention introduces the following:

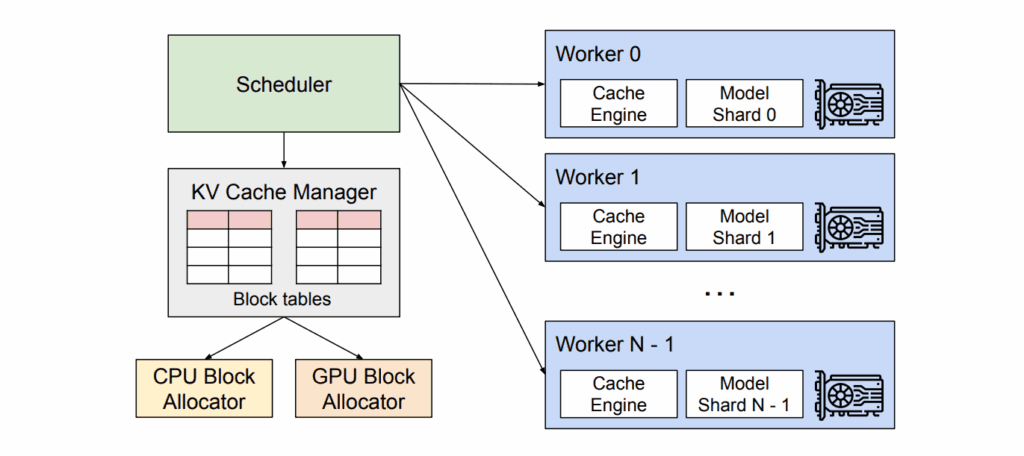

- Block table: a simple array that maps each sequence to its list of physical page numbers (the attention kernel reads this instead of assuming contiguous memory)

- Copy-on-write sharing: when we fork a prompt (say tool-use agents), identical prefix pages are shared between sequences until one of them writes
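
The bookkeeping described above can be sketched in a few dozen lines of Python. This is a toy model, not vLLM's actual code: the class name `PagedKVAllocator` and its methods are hypothetical, it only tracks page numbers (no real tensors are stored or copied), and copy-on-write is reduced to swapping a shared page for a private one.

```python
class PagedKVAllocator:
    """Toy page allocator with a free pool, block tables, and copy-on-write."""

    def __init__(self, num_pages, page_size=16):
        self.page_size = page_size
        self.free_pages = list(range(num_pages))  # global free pool
        self.ref_counts = {}    # physical page -> number of sequences using it
        self.block_tables = {}  # sequence id -> list of physical page numbers
        self.lengths = {}       # sequence id -> number of tokens stored

    def _allocate(self):
        if not self.free_pages:
            raise MemoryError("no free KV pages")
        page = self.free_pages.pop()
        self.ref_counts[page] = 1
        return page

    def _release(self, page):
        self.ref_counts[page] -= 1
        if self.ref_counts[page] == 0:
            del self.ref_counts[page]
            self.free_pages.append(page)  # page returns to the pool

    def append_token(self, seq_id):
        table = self.block_tables.setdefault(seq_id, [])
        length = self.lengths.get(seq_id, 0)
        if length % self.page_size == 0:
            # No page yet, or the last page is full: grab exactly one more page.
            table.append(self._allocate())
        elif self.ref_counts[table[-1]] > 1:
            # Copy-on-write: the last page is shared, so take a private page first.
            shared = table[-1]
            table[-1] = self._allocate()
            self._release(shared)
        self.lengths[seq_id] = length + 1

    def fork(self, parent_id, child_id):
        # The child shares every page of the parent until one of them writes.
        self.block_tables[child_id] = list(self.block_tables[parent_id])
        self.lengths[child_id] = self.lengths[parent_id]
        for page in self.block_tables[child_id]:
            self.ref_counts[page] += 1

    def free(self, seq_id):
        for page in self.block_tables.pop(seq_id):
            self._release(page)
        del self.lengths[seq_id]


alloc = PagedKVAllocator(num_pages=8, page_size=4)
for _ in range(6):
    alloc.append_token("a")  # 6 tokens occupy 2 pages
alloc.fork("a", "b")         # "b" shares both pages with "a"
alloc.append_token("b")      # writing to the shared, half-full page triggers copy-on-write
print(alloc.block_tables)
```

After the fork and the write, both sequences still point at the same first page, while the second page differs. Pages only go back to the free pool once no sequence references them.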

2.3 How Continuous Batching Works on Top of PagedAttention?

Normal batching works like a school bus: The bus waits until every kid is ready, then starts the route. If one kid is slow, everyone waits. When a kid reaches their stop early, their seat stays empty until the whole trip ends. That’s exactly what Hugging Face Transformers does:

- Build a batch of prompts, then run prefill for all of them

- Generate one token at a time for the entire batch

- As soon as one sequence finishes, its row in the batch becomes dead weight

  - The GPU still processes the empty slots

  - Throughput collapses as a result

Continuous (dynamic) batching + PagedAttention = Uber instead of a school bus:

- The GPU generates one token step for the current running sequences.

- The moment a sequence finishes (or hits max_tokens), vLLM instantly:

  - removes it from the running batch

  - frees its KV pages back to the global pool

- In that very same step, vLLM inserts any pending new requests that are waiting in the queue

- The next token generation starts immediately with the new mix
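
The difference between the school bus and Uber is easy to see in a tiny simulation. The sketch below is a simplification under stated assumptions: each request is reduced to the number of tokens it still has to generate, every step produces one token per running sequence, and prefill cost and memory limits are ignored. The function names are made up for this example.

```python
from collections import deque


def static_batching_steps(lengths, batch_size):
    """School bus: each batch runs until its longest sequence finishes."""
    steps = 0
    for start in range(0, len(lengths), batch_size):
        steps += max(lengths[start:start + batch_size])
    return steps


def continuous_batching_steps(lengths, batch_size):
    """Uber: finished sequences are replaced by queued ones after every step."""
    waiting = deque(lengths)
    running = []
    steps = 0
    while waiting or running:
        # Admit queued requests into any free slots.
        while waiting and len(running) < batch_size:
            running.append(waiting.popleft())
        # One decode step: every running sequence produces one token.
        running = [remaining - 1 for remaining in running]
        # Finished sequences leave the batch immediately.
        running = [remaining for remaining in running if remaining > 0]
        steps += 1
    return steps


# Tokens each request needs to generate (illustrative numbers).
lengths = [2, 8, 3, 1, 7, 2, 4, 1]
print(static_batching_steps(lengths, batch_size=4))      # 15
print(continuous_batching_steps(lengths, batch_size=4))  # 8
```

With the same 4 slots, the static version needs 15 decode steps because short requests wait for the longest one in their batch, while the continuous version finishes in 8.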

2.4 Other Optimizations Introduced by vLLM

The two features above aren’t the only ones in vLLM. As of 2025, it is a time-tested framework with many more optimizations.

(a). Prefix Caching (zero-overhead): Automatically reuses the KV pages of identical prompt prefixes (e.g., system prompt, tools, few-shot examples) across users whenever such a shared prefix exists.

(b). Chunked Prefill: Splits huge prompts (32k tokens and more) into chunks so prefill can be interleaved with decoding instead of blocking everything.

(c). FlashAttention-3: Uses fast, hardware-optimized attention kernels on GPUs that support them.

(d). Optimized CUDA kernels for PagedAttention: Custom kernels that read the block table directly on GPU (no slow CPU copies).

(e). Multi-LoRA batching: Serve hundreds of different LoRA adapters in the same batch with almost zero overhead.

(f). Speculative decoding support: Natively runs speculative decoding methods such as Medusa and Lookahead.

(g). Tensor + Pipeline Parallelism built-in: A single flag, --tensor-parallel-size 8, works with PagedAttention and scales cleanly to eight H100s or multi-node setups without headaches.

(h). Smart scheduling & preemption: Automatically pauses long-running sequences to let short interactive ones jump the queue.

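(i). RadixAttention-style cache reuse: Tree-based reuse of shared prompt prefixes, a technique popularized by SGLang; vLLM achieves comparable reuse through its hash-based prefix caching. Read more about it in our previous article, DeepSeek OCR Paper explained.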
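
To illustrate how prefix caching from point (a) can work on top of fixed-size pages, here is a small sketch based on chained block hashes. It is an illustration under assumptions, not vLLM's implementation: `block_hashes` and `PrefixCache` are hypothetical names, tokens are plain integers, and a "page" is just a counter. Each full block is hashed together with the hash of everything before it, so two prompts map to the same page only when their entire prefix up to that block is identical.

```python
import hashlib

BLOCK_SIZE = 4  # tokens per page (illustrative value)


def block_hashes(token_ids, block_size=BLOCK_SIZE):
    """Hash each full block chained with the hash of all preceding blocks."""
    hashes = []
    previous = b""
    full = len(token_ids) - len(token_ids) % block_size  # ignore the partial tail
    for start in range(0, full, block_size):
        block = token_ids[start:start + block_size]
        digest = hashlib.sha256(previous + repr(block).encode()).digest()
        hashes.append(digest)
        previous = digest
    return hashes


class PrefixCache:
    """Maps block hashes to physical page ids so identical prefixes share pages."""

    def __init__(self):
        self.pages = {}     # block hash -> physical page id
        self.next_page = 0

    def lookup_or_insert(self, token_ids):
        """Return (page ids for the prompt's full blocks, number of reused blocks)."""
        pages, reused = [], 0
        for digest in block_hashes(token_ids):
            if digest in self.pages:
                reused += 1
            else:
                self.pages[digest] = self.next_page
                self.next_page += 1
            pages.append(self.pages[digest])
        return pages, reused


system_prompt = [1, 2, 3, 4, 5, 6, 7, 8]  # two full blocks shared by both users
cache = PrefixCache()
pages_a, reused_a = cache.lookup_or_insert(system_prompt + [9, 10, 11, 12])
pages_b, reused_b = cache.lookup_or_insert(system_prompt + [20, 21, 22, 23, 24])
print(pages_a, reused_a)  # [0, 1, 2] 0
print(pages_b, reused_b)  # [0, 1, 3] 2
```

The second user reuses the two pages that hold the shared system prompt and only needs a fresh page for the part of the prompt that differs.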

How To Use vLLM?

Installing vLLM is easiest from PyPI. If you are using nightly builds, stick to the uv package manager and isolated environments.

pip install vllm

3.1 Loading LLM and Running Inference

You can use the majority of models from Hugging Face with vLLM. Check the vLLM documentation for the list of supported models. Let’s see how to load and run inference with a model like Llama-3-70B.

from vllm import LLM, SamplingParams

llm = LLM(model="meta-llama/Meta-Llama-3-70B-Instruct", tensor_parallel_size=2)

prompts = ["Hello, my name is", "The future of AI is"]

sampling_params = SamplingParams(temperature=0.8, max_tokens=128)

outputs = llm.generate(prompts, sampling_params)

for output in outputs:
    print(output.outputs[0].text)

3.2 Serving LLM As OpenAI Compatible API

python -m vllm.entrypoints.openai.api_server --model meta-llama/Meta-Llama-3-70B-Instruct --tensor-parallel-size 2

Now we can point LangChain, OpenAI Python client, LlamaIndex, etc. to http://localhost:8000/v1 and it just works.
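
For a dependency-free way to try the endpoint, the sketch below builds a chat completion request with only the Python standard library. It assumes the server from the command above is running on localhost:8000 and serving the same model name; the helper names `build_chat_request` and `chat` are made up for this example, and the response parsing assumes the usual OpenAI-style `choices[0].message.content` layout.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"            # assumed local vLLM server
MODEL = "meta-llama/Meta-Llama-3-70B-Instruct"   # must match the served model


def build_chat_request(prompt, max_tokens=128, temperature=0.8):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt):
    """Send the request and return the generated text (needs a running server)."""
    with urllib.request.urlopen(build_chat_request(prompt)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server up, calling `chat("Explain PagedAttention in one sentence.")` should return the model's reply as a string. In a real project the official OpenAI Python client pointed at the same base URL is usually the more convenient choice.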

3.3 When Should You Use vLLM?

In the following conditions, one should consider using vLLM.

- More than one concurrent user

- Running LLMs or VLMs and paying for GPUs by the hour

- Need OpenAI-compatible API without paying OpenAI

3.4 When vLLM is Not Required?

If your work is research-oriented, or you only ever run single requests locally, plain Transformers is simpler. Hugging Face hosts 500k+ models, and not all of them are supported by vLLM. For those, you will have to make do with Transformers.

There are also some cases where TensorRT-LLM can be more efficient:

- Low user load (1-10)

- Short prompts (512 token max)

- Decode heavy

Would you like to see a full comparison of TensorRT-LLM vs vLLM? Let us know in the comments below.

vLLM Conclusion

vLLM isn’t just another inference library. It is probably the biggest free performance upgrade the open-source LLM world has seen. With just a few lines of code, you turn a sluggish Hugging Face setup into a blazing-fast, OpenAI-compatible service that handles hundreds of users on the exact same GPUs, with higher throughput, lower cloud bills, longer contexts, and almost zero extra complexity.

With this, we wrap up the introductory article on vLLM. We talked about PagedAttention, continuous batching, and the other optimizations vLLM offers as of 2025. I hope you enjoyed the article and learned a thing or two. Please leave feedback below with suggestions for improvements, feature additions, or requests for the next blog post. Happy Learning!

Yes, vLLM is much faster and more efficient than using Transformers directly for inference.

Yes, vLLM is fully open source and free to use.

Companies like Meta, Mistral AI, Cohere, and IBM use vLLM for deploying their models.

No, vLLM does not support all models from Hugging Face.