LLM performance isn’t just about bigger GPUs; it’s about smarter serving. If you’ve ever watched a 32B model hog an H100 while users wait, you’ve met the real problems in LLM serving: memory fragmentation, stalled scheduling, and token‑bound decoding. This post breaks down the six core issues of autoregressive inference and how various engines fix them.

Learning Objectives:

- Why models fail in production

- Core technical concepts to understand model failure with Autoregressive Inference

- The ideas that fixed these issues and present day solutions

- A Brief Revisit to Autoregressive Inference

- Six Major Problems in LLM Serving

- KV Cache – The Heart of Autoregressive Inference

- No Continuous Batching

- Prefill-Decode Imbalance and Decode Starvation

- No prefix caching / Prompt sharing

- Lack of Multi-LoRA/Adapter Serving

- Lack of Speculative Decoding at Scale

- Conclusion: Problems in LLM Serving

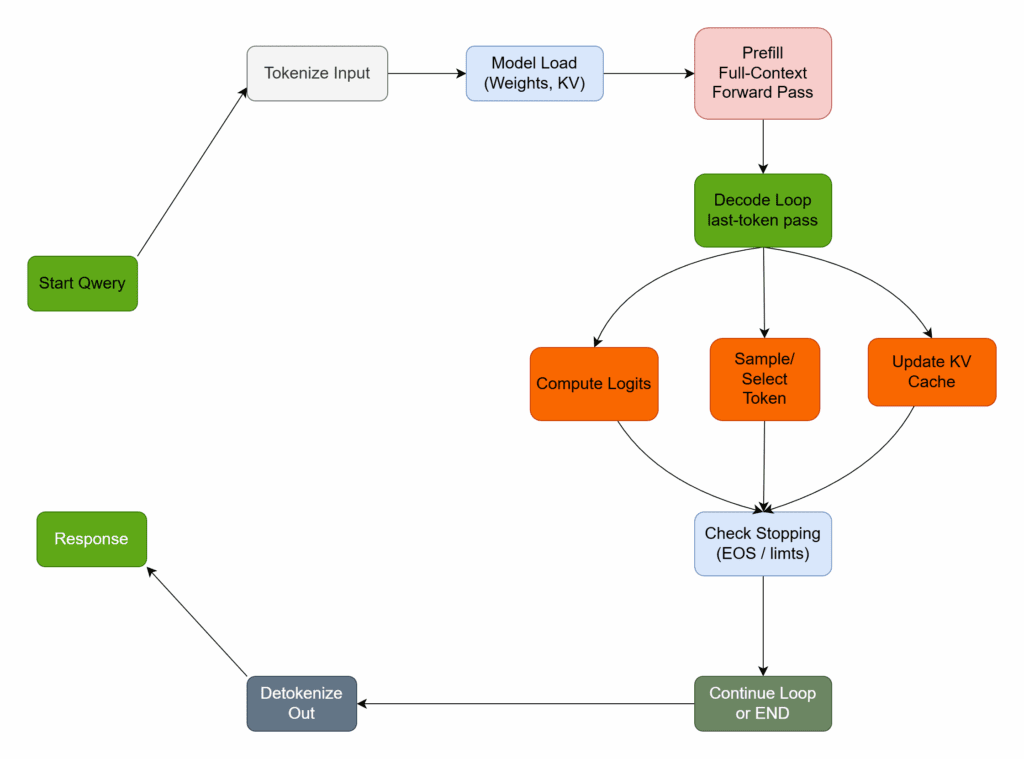

A Brief Revisit to Autoregressive Inference

This workflow is straightforward yet fundamentally memory-bound. Each new token still triggers a forward pass and heavy KV cache traffic. So TTFT and throughput depend on how well we manage caching, batching, and scheduling. Grasping this prefill-then-decode cycle is the foundation for understanding problems in LLM serving.
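The prefill-then-decode cycle can be sketched with a toy model. Everything here (`toy_forward`, the cache layout, the fake token arithmetic) is a hypothetical stand-in rather than any engine's real API. It only illustrates that prefill processes the whole prompt in one pass, while every decode step processes a single token and appends to the KV cache.

```python
from typing import List, Tuple

KVCache = List[Tuple[int, int]]  # one fake (key, value) pair per cached token


def toy_forward(tokens: List[int], cache: KVCache) -> int:
    """Pretend forward pass: cache K/V for `tokens`, return the next token id."""
    for t in tokens:
        cache.append((t, t * 2))  # fake key/value for this token
    # Fake "attention": the next token depends on every cached entry.
    return sum(k for k, _ in cache) % 1000


def generate(prompt: List[int], max_new_tokens: int) -> Tuple[List[int], int]:
    cache: KVCache = []
    # Prefill: one pass over the full prompt produces the first output token.
    next_token = toy_forward(prompt, cache)
    output = [next_token]
    # Decode: one pass per new token, feeding only the latest token.
    for _ in range(max_new_tokens - 1):
        next_token = toy_forward([next_token], cache)
        output.append(next_token)
    return output, len(cache)


tokens, cached = generate(prompt=[5, 7, 11], max_new_tokens=4)
print(tokens)  # [23, 46, 92, 184]
print(cached)  # 6: three prompt tokens plus three fed-back tokens
```

Note that the cache grows by one entry per decode step, which is why long generations put steady pressure on GPU memory.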

👋TTFT: Time to First Token, the time between the user clicking send and the first output token arriving at the client.

Six Major Problems in LLM Serving

ChatGPT was revolutionary not only because of the model it introduced, but also because of how it served millions of users reliably. The recipe was in-house, and no open source serving engine offered anything comparable until 2023, when vLLM introduced PagedAttention and continuous batching. Today, many frameworks address the following problems in LLM serving.

- KV Cache fragmentation and alignment

- No continuous (dynamic) batching

- Prefill-Decode imbalance and scheduling starvation

- No prefix caching / Prompt sharing

- No multi-LoRA / adapter serving

- No speculative decoding at scale

In this post, we will use Meta-Llama-3-70B-Instruct as our reference model. Precision: BF16. GPU: H100 with 80 GB VRAM.

KV Cache – The Heart of Autoregressive Inference

Memory fragmentation is one of the biggest problems in LLM serving. The KV cache is the short-term memory of an autoregressive LLM: it stores the keys and values for every past token, so attention doesn’t recompute the entire history. With the Transformers backend, the KV cache for each request is allocated as one giant contiguous tensor.

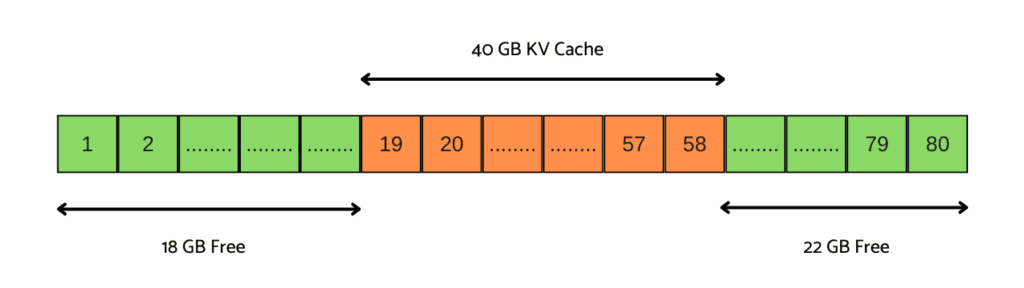

Picture the 80 GB of VRAM on the H100 as 80 slots of 1 GB each, from which the driver (CUDA in our case) allocates memory for the KV cache. Let’s ignore the memory taken by the model weights for now.

With a 128k-token context, each request needs approximately 40 GB of KV cache. Let’s look at an example of 2 users sending inference requests. Here’s what happens when they start sending queries.

Step 1: User 1 reserves a 40 GB slot, even if the query is only 100 tokens long. The slot’s starting position can look random. Well, not exactly random: CUDA follows a 1 GiB alignment rule for H100, meaning the starting address can be 0.0, 1.0, 2.0 GiB and so on.

Step 2: User 2 sends another query but gets an OOM error, as no single contiguous block of 40 GB is available.

Step 3: User 1 gets the response and the memory is freed. Running nvidia-smi shows 80 GB available.

Step 4: User 2 tries again but still gets an OOM error, even with 80 GB of free VRAM.

🤔What exactly happened?

Even though 80 GB of memory is free, each block still contains a tiny amount of metadata from the previous allocation. CUDA does not free it (and even if it does, the improvement is minor), so the previously allocated chunk of memory sits there unusable.

This is the KV cache fragmentation issue. It limits the number of concurrent users and wastes a huge amount of memory. The only ways to improve the situation were:

- Quantize models heavily

- Reduce max_token_length brutally

Although OpenAI had solved this issue internally, no open source solution was available until vLLM introduced PagedAttention in 2023.
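To see why paging helps, here is a rough capacity comparison between reserving a full-context contiguous region per request and handing out fixed-size pages on demand. This is a sketch under stated assumptions: the 16-token page size and the function names are illustrative choices, the 327,680 bytes per token figure comes from the KV cache arithmetic later in this post, and model weights are ignored as in the example above.

```python
import math

GIB = 1024 ** 3
BYTES_PER_TOKEN = 327_680     # KV bytes per token for the reference model in BF16
MAX_CONTEXT = 128 * 1024      # tokens reserved per request by the naive scheme
BLOCK_TOKENS = 16             # assumed page size, in tokens


def max_users_contiguous(kv_budget_bytes: int) -> int:
    """Each request reserves KV space for the full context up front."""
    return kv_budget_bytes // (MAX_CONTEXT * BYTES_PER_TOKEN)


def max_users_paged(kv_budget_bytes: int, tokens_per_request: int) -> int:
    """Each request only holds the pages its tokens actually occupy."""
    total_blocks = kv_budget_bytes // (BLOCK_TOKENS * BYTES_PER_TOKEN)
    blocks_per_request = math.ceil(tokens_per_request / BLOCK_TOKENS)
    return total_blocks // blocks_per_request


budget = 80 * GIB  # simplification: the whole GPU is available for KV cache
print(max_users_contiguous(budget))   # 2
print(max_users_paged(budget, 100))   # 2340
```

With 100-token requests, the paged scheme wastes at most one partially filled page per request, instead of almost the entire 40 GB reservation.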

No Continuous Batching

Static batching runs without any scheduler. While KV cache fragmentation exhausted GPU memory after 5 to 10 users, the lack of continuous batching hurt latency and throughput even with 1 to 2 users.

Almost all open source servers used static batching before 2023, where a request had to wait until the current batch finished generating completely. The results were catastrophic: a single user generating 500 tokens could block the entire GPU for 10-20 s, forcing other users to wait.

Continuous batching, as popularized by vLLM, allows new requests to join the batch and finished sequences to leave at every decoding step. The scheduler:

- Implements an iteration-level scheduler that wakes up every single decode step

- Dynamically rebuilds the running batch on every iteration, no fixed batches

- Continuously drains new incoming requests and immediately adds them when space is available

- Instantly removes finished sequences (EOS or max-tokens) and frees their KV cache pages

- Mixes prefill (new prompts) and decode (ongoing generations) in the same step

- Uses smart heuristics (priority weighting, token budgets, decode-first preference) to keep latency low and GPU saturated

- Executes the entire dynamic batch in a single forward pass using fused CUDA kernels

- Handles variable sequence lengths without any padding, thanks to PagedAttention block tables

- Streams output tokens to users the moment they are generated (no waiting for batch completion)
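The effect of rebuilding the batch at every step can be shown with a small simulation. This is a minimal sketch, not any engine's scheduler: it counts decode steps only, assumes every request arrives at step 0 and generates at least one token, and the function names are made up for illustration.

```python
from collections import deque
from typing import Dict, List


def static_batching_steps(lengths: List[int], batch_size: int) -> List[int]:
    """Step at which each request finishes when batches run to completion."""
    finish: List[int] = []
    clock = 0
    for i in range(0, len(lengths), batch_size):
        batch = lengths[i:i + batch_size]
        clock += max(batch)                  # the batch waits for its longest member
        finish.extend([clock] * len(batch))
    return finish


def continuous_batching_steps(lengths: List[int], batch_size: int) -> List[int]:
    """Step at which each request finishes when the batch is rebuilt every step."""
    waiting = deque(enumerate(lengths))
    running: Dict[int, int] = {}             # request id -> tokens still to generate
    finish = [0] * len(lengths)
    step = 0
    while waiting or running:
        # Admit queued requests whenever a slot is free.
        while waiting and len(running) < batch_size:
            rid, n = waiting.popleft()
            running[rid] = n
        step += 1                            # one decode iteration for the whole batch
        for rid in list(running):
            running[rid] -= 1
            if running[rid] <= 0:            # finished: leave the batch immediately
                finish[rid] = step
                del running[rid]
    return finish


lengths = [500, 20, 20, 20]  # tokens to generate per request
static = static_batching_steps(lengths, batch_size=2)
continuous = continuous_batching_steps(lengths, batch_size=2)
print(static)      # [500, 500, 520, 520]
print(continuous)  # [500, 20, 40, 60]
```

In the static case the three short requests are held hostage by the 500-token one; with per-step scheduling they finish as soon as their own tokens are done.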

Present Day Solutions

- HuggingFace TGI: dynamic/continuous batching with per-iteration scheduling and automatic request merging

- SGLang: continuous batching + RadixAttention for cache-aware scheduling

- TensorRT-LLM: In-flight batching (NVIDIA’s name for continuous batching) with maximum kernel fusion

- DeepSpeed-FastGen: Dynamic SplitFuse continuous batching optimized for MoE and multi-GPU setups

- Aphrodite Engine: vLLM fork with improved continuous batching and prefix caching

- LMDeploy: Persistent batching mode that continuously adds/removes sequences every step

Prefill-Decode Imbalance and Decode Starvation

Long prompts create a different issue. Take a prompt of, say, 32k tokens: prefill is a greedy giant that can eat the entire GPU for seconds, starving hundreds of tiny decode users and making your service feel completely broken. Let’s walk through an example sequence of events.

At t=0ms,

- User 1 sends a 32k token prompt

- Prefill starts, but 200 decode users are already running

- The 32k prefill hogs 80-95% of the GPU for 2-8 seconds

- The 200 decode users get starved, generating only 1 token in 5 to 10 s

The solution again comes from vLLM: prefill-decode phase separation with priority scheduling.

Present Day Methods

- Chunked prefill (vLLM and TGI): Breaks long prefills into small chunks of 512 to 2048 tokens and interleaves them with decode.

- Decode Priority Scheduling (vLLM, SGLang): Decode sequences always run first. Prefill only uses the leftover capacity.

- Prefill-only steps (TensorRT-LLM, DeepSpeed-FastGen): Runs a few pure-prefill steps when the queue is empty, then switches back to decode.

- Dual-Queue system (vLLM): Separate queues + token budgets
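The methods above can be combined into a single per-step rule: serve decodes first, then spend whatever token budget remains on a bounded prefill chunk. The sketch below assumes a simple per-iteration token budget; `Request`, `schedule_step`, and the budget and chunk values are hypothetical names and numbers, not taken from any engine.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Request:
    rid: str
    prompt_left: int   # prompt tokens not yet prefilled
    decoding: bool     # True once prefill is complete


def schedule_step(requests: List[Request], token_budget: int, chunk: int) -> Dict[str, int]:
    """Decide how many tokens each request processes in this iteration."""
    plan: Dict[str, int] = {}
    # 1) Decode first: every running sequence gets its single token.
    for r in requests:
        if r.decoding and token_budget > 0:
            plan[r.rid] = 1
            token_budget -= 1
    # 2) Prefill uses what is left, at most `chunk` tokens per request.
    for r in requests:
        if not r.decoding and token_budget > 0:
            n = min(chunk, r.prompt_left, token_budget)
            plan[r.rid] = n
            token_budget -= n
            r.prompt_left -= n
            r.decoding = r.prompt_left == 0
    return plan


# The scenario from the text: one 32k prompt arrives while 200 users are decoding.
reqs = [Request("long", 32_768, False)] + [Request(f"d{i}", 0, True) for i in range(200)]
first = schedule_step(reqs, token_budget=2048, chunk=512)
print(first["long"])   # 512 prompt tokens prefilled this step
print(first["d0"])     # 1: decode users still advance every step
```

With these numbers the long prompt takes 64 steps to prefill, but none of the 200 decode users ever skips a step.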

No prefix caching / Prompt sharing

Common inputs were treated as unique. Let’s look at the structure of a query. In general, it consists of the following.

- Same system prompt (say 150 tokens)

- Fixed chat history template (say 50 tokens)

- Variable user message

So that is 200 shared tokens per user. This does not look like a lot, right? But let’s calculate for 300 concurrent users, considering the same BF16 Meta-Llama-3-70B-Instruct model.

From model info:

Total layers = 80

KV heads (GQA) = 8

Head dimension = 128

Bytes per element = 2 (since BF16)

Elements per K or V (per layer) = KV heads x head dimension

= 8 x 128 = 1024

1 token = Layers x (K + V) x bytes per element

= 80 x (1024 + 1024) x 2

= 327680 bytes

= 0.328 MB

200 tokens = 200 x 0.328 MB

= 65.6 MB

For 300 users = 300 x 65.6 MB

= 19680 MB

= 19.7 GB approx.

So we are wasting about 20 GB, where only about 65 MB could have worked for all.
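The same arithmetic as a short script, so the numbers can be rechecked for other models or prefix lengths. The function name is an illustrative choice; the model parameters are the ones listed above.

```python
def kv_bytes_per_token(layers: int, kv_heads: int, head_dim: int, bytes_per_elem: int) -> int:
    # Keys and values are each kv_heads * head_dim elements per layer.
    return layers * 2 * kv_heads * head_dim * bytes_per_elem


per_token = kv_bytes_per_token(layers=80, kv_heads=8, head_dim=128, bytes_per_elem=2)
prefix_bytes = 200 * per_token          # the shared 200-token prefix, stored once
duplicated_bytes = 300 * prefix_bytes   # one private copy per user

print(per_token)                # 327680
print(prefix_bytes / 1e6)       # 65.536 (MB)
print(duplicated_bytes / 1e9)   # 19.6608 (GB)
```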

Existing Solutions Today

- Immutable prefix caching: Introduced by vLLM, where an identical prefix is computed once and shared by all users. When a user message diverges, the engine forks a private copy from that point.

- Shared System Prompt: Same as above but only for the system prompt. Implementation observed in SGLang, TGI, and Ollama.

- Tree-Structured Cache: Introduced by SGLang in RadixAttention. It builds a tree of common prefixes.
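A block-level prefix cache can be sketched as a tree in which each node is identified by its parent block and its own tokens. This is a simplified illustration, not the actual data structure used by vLLM or SGLang: the `PrefixCache` class, the 4-token block size, and the absence of eviction are all assumptions made to keep the example short.

```python
from typing import Dict, List, Tuple

BLOCK = 4  # tokens per cache block (tiny, for illustration)


class PrefixCache:
    """Tree of token blocks; a block is reusable only if its whole prefix matches."""

    def __init__(self) -> None:
        # (parent block id, tokens in this block) -> block id
        self.blocks: Dict[Tuple[int, Tuple[int, ...]], int] = {}
        self.next_id = 1  # id 0 is reserved for the root

    def lookup_or_insert(self, tokens: List[int]) -> Tuple[int, int]:
        """Return (tokens served from cache, tokens that still need prefill)."""
        parent, hits, misses = 0, 0, 0
        full = len(tokens) - len(tokens) % BLOCK   # only whole blocks are shareable
        for i in range(0, full, BLOCK):
            key = (parent, tuple(tokens[i:i + BLOCK]))
            if key in self.blocks:
                hits += BLOCK
            else:
                self.blocks[key] = self.next_id
                self.next_id += 1
                misses += BLOCK
            parent = self.blocks[key]
        return hits, misses + (len(tokens) - full)


cache = PrefixCache()
system_prompt = list(range(8))  # stands in for the shared system prompt and template
first = cache.lookup_or_insert(system_prompt + [100, 101, 102])
second = cache.lookup_or_insert(system_prompt + [200, 201, 202, 203, 204])
print(first)   # (0, 11): cold cache, everything is prefilled
print(second)  # (8, 5): the shared prefix is reused, only the new tail is computed
```

Keying each block on its parent is what makes the structure a tree: two requests share a block only when everything before it is identical too.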

Lack of Multi-LoRA/Adapter Serving

Every unique fine-tuned model requires its own memory. This is the enterprise killer. Before 2024, serving even a handful of customer-specific fine-tunes was brutally expensive, as every LoRA required its own copy of the full base model in VRAM.

Considering a 70B-parameter model, serving even 10 custom models meant hundreds of thousands of dollars per month, which was simply not feasible.

| Use Case | Hardware Requirement | Cost/Month (USD) |
|---|---|---|
| 1 base model | 2 GPUs | 160k |
| 100 customers | 200 GPUs | 16M (no kidding!) |

The first solution was introduced in October 2023 by Lequn Chen et al. in the paper “Punica: Multi-Tenant LoRA Serving”. It introduced a custom CUDA kernel called Segmented Gather Matrix-Vector Multiplication (SGMV), which allows batched inference across multiple LoRA adapters on a single base model.
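The math that such a kernel batches is small: every request shares the base weight W and adds its own low-rank correction, y = xW + (xA)B. The sketch below is a plain Python loop over requests, not Punica's CUDA kernel; the helper names and the tiny matrices are made up purely to show that one copy of the base weights can serve requests bound to different adapters.

```python
from typing import Dict, List, Tuple

Matrix = List[List[float]]


def matvec(x: List[float], w: Matrix) -> List[float]:
    """Row vector x (length n) times matrix w (n x m)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def multi_lora_forward(
    xs: List[List[float]],
    adapter_ids: List[str],
    base_w: Matrix,
    adapters: Dict[str, Tuple[Matrix, Matrix]],
) -> List[List[float]]:
    """y_i = x_i W + (x_i A_k) B_k, where k is the adapter of request i."""
    outputs = []
    for x, k in zip(xs, adapter_ids):
        base = matvec(x, base_w)          # shared base weights, stored once
        a, b = adapters[k]                # small low-rank pair for this tenant
        delta = matvec(matvec(x, a), b)
        outputs.append([u + v for u, v in zip(base, delta)])
    return outputs


base_w = [[1, 0], [0, 1]]                  # toy 2x2 base weight
adapters = {
    "tenant_a": ([[1], [0]], [[0, 1]]),    # rank-1 A (2x1) and B (1x2)
    "tenant_b": ([[0], [1]], [[2, 0]]),
}
ys = multi_lora_forward([[1, 2], [3, 4]], ["tenant_a", "tenant_b"], base_w, adapters)
print(ys)  # [[1, 3], [11, 4]]
```

Because A and B are tiny compared with W, adding another tenant costs only the adapter's memory rather than a second copy of the base model.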

Subsequent Developments Based on Punica

- LoRAX (Nov, 2023)

- HuggingFace TGI Multi-LoRA (April, 2024)

- vLLM multi-LoRA (June, 2024)

Lack of Speculative Decoding at Scale

Autoregressive decode is fundamentally memory-bound. Every single token costs a full GPU forward pass. No matter how fast your hardware is, you are stuck at 1 token per step.

This is why, even after solving the above issues (using PagedAttention + continuous batching + prefix caching), we still only get around 50 tokens/s (a rough figure for our model and an H100 GPU). This is still slow considering 1000+ incoming requests per second (RPS).

In February 2023, speculative decoding was introduced by DeepMind (Google). Let’s see what the forward passes look like before and after speculative decoding for a 500-token generation.

Standard Autoregressive Large Model

- Pass 1: Token 1 predicted

- Pass 2: Token 2 predicted

- Pass 3: Token 3 predicted

- …………………….

- …………………….

- Pass 500: Token 500 predicted

Say the 500 passes take 25 s in total. That is 50 ms per token.

Speculative Decoding (tiny + Large Model)

- Run a tiny draft model to predict 4 to 8 tokens

- Use the large model to verify all drafted tokens in parallel in 1 forward pass

- Accept the first 4 tokens (in this example) and reject the rest

- The time taken by the small model is negligible

1 big forward pass generates 4 tokens. So the effective number of passes required to generate 500 tokens is 500 / 4 = 125, which reduces the net time by about 4x.
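The draft-then-verify loop can be sketched for greedy decoding as follows. This is a toy under stated assumptions, not a production implementation: the "models" are plain functions from a context to the next token, the large model's parallel verification is simulated with per-position calls but counted as a single pass, and the bonus token that real implementations emit when every draft is accepted is left out.

```python
from typing import Callable, List, Tuple

NextToken = Callable[[List[int]], int]  # greedy next-token function over a context


def speculative_generate(target: NextToken, draft: NextToken, prompt: List[int],
                         n_tokens: int, k: int) -> Tuple[List[int], int]:
    """Greedy speculative decoding. Returns (generated tokens, target passes used)."""
    out: List[int] = []
    passes = 0
    while len(out) < n_tokens:
        ctx = prompt + out
        # The draft model proposes k tokens autoregressively (assumed cheap).
        proposal: List[int] = []
        for _ in range(k):
            proposal.append(draft(ctx + proposal))
        # One target pass scores every proposed position at once.
        passes += 1
        accepted: List[int] = []
        for i in range(k):
            expected = target(ctx + proposal[:i])
            accepted.append(expected)     # the target's own token is always kept
            if expected != proposal[i]:
                break                     # first mismatch: discard the remaining drafts
        out.extend(accepted)
    return out[:n_tokens], passes


target = lambda ctx: (ctx[-1] * 3 + 1) % 101  # toy stand-in for the large model
tokens, passes = speculative_generate(target, target, prompt=[1], n_tokens=500, k=4)
print(passes)  # 125 when the draft always agrees with the target
```

The output is always identical to what the large model would have produced on its own; the draft's quality only changes how many large-model passes are needed, from 125 in the best case here down to no speedup at all when every draft is rejected.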

Subsequent Developments After DeepMind

- SpecInfer (Oct, 2023): Tree verification at scale.

- SGLang (Dec, 2023): They introduced it natively in the serving engine.

- Medusa (Mar, 2024): Multi-head drafts

- Eagle (Jan, 2024): Dynamic draft trees

Conclusion: Problems in LLM Serving

With this, we wrap up the article on the six major problems in LLM serving. Modern LLM serving exists because naive transformer inference wastes memory, stalls latency, and doesn’t scale. In this post, we saw six core issues: KV‑cache fragmentation, missing continuous batching, prefill-decode imbalance, lack of prefix caching, expensive multi‑LoRA serving, and the token‑bound nature of decoding.

We also covered the ideas that address these issues, namely PagedAttention, dynamic/continuous batching with decode‑first scheduling and chunked prefill, shared/immutable prefix caches, Punica‑style SGMV for multi‑tenant LoRA, and speculative decoding.

Together, these principles underpin today’s production engines and the metrics that matter most (TTFT, throughput, VRAM efficiency, and stability).

Out-of-memory errors despite free VRAM happen due to KV-cache fragmentation. Traditional serving engines allocate large, contiguous KV-cache blocks per request. When these blocks are freed, small metadata remnants and alignment constraints prevent CUDA from reusing the memory as a single contiguous region. As a result, new requests fail despite nvidia-smi reporting ample free VRAM. PagedAttention solves this by allocating KV cache in fixed-size pages instead of monolithic tensors.

Without continuous batching, requests must wait for an entire batch to finish generation, causing severe latency spikes and GPU underutilization. Continuous batching rebuilds the batch at every decode step, allowing new requests to join immediately and completed ones to leave. This keeps the GPU saturated while dramatically reducing TTFT and tail latency, even under mixed workloads.

Long prompts trigger expensive prefill phases that can monopolize the GPU for seconds. If prefill and decode are scheduled equally, hundreds of active decode requests may stall, producing tokens extremely slowly. Modern engines fix this using chunked prefill and decode-priority scheduling, ensuring short, interactive requests remain responsive even when long prompts arrive.

Naively serving LoRA-fine-tuned models requires a full copy of the base model per adapter, making multi-tenant serving prohibitively expensive. Techniques like Punica’s segmented gather matrix-vector multiplication allow multiple LoRA adapters to share a single base model in memory and execute in a batched manner. This reduces VRAM usage by orders of magnitude and makes large-scale enterprise deployment feasible.
