In the evolving landscape of open-source language models, SmolLM3 emerges as a breakthrough: a 3 billion-parameter, decoder-only transformer that rivals larger 4 billion-parameter peers on many benchmarks, while natively supporting six European languages and handling documents up to 128 K tokens.

In this comprehensive blog post, we’ll unpack every aspect of SmolLM3, from its core anatomy to the multi-stage training recipe, dual-mode reasoning interface, and innovative alignment strategy, so that we can understand, reproduce, and deploy this model with confidence.

- SmolLM3 Model Summary

- SmolLM3 Architecture & Model Anatomy

- Training Configuration of SmolLM3

- Pretraining Recipe of SmolLM3

- Long-Context Extension in SmolLM3

- Supervised Fine-Tuning & Chat Template

- Off-Policy Alignment with APO

- Model Merging & Final Checkpoint

- Evaluation & Benchmarks

- Deployment & Usage

- Conclusion

- References

SmolLM3 Model Summary

- High Efficiency at 3 B Scale

- Dual-Mode Reasoning (think vs. no_think)

- Extensive Context & Multilingual Support

| Feature | Details |
|---|---|
| Parameters | 3 Billion |
| Pretraining Budget | ≈ 11.2 Trillion tokens |
| Context Length | 64 K tokens native, extrapolated to 128 K via YaRN |
| Languages | English, French, Spanish, German, Italian, Portuguese |
| Reasoning Modes | think (chain-of-thought) / no_think (concise answers) |
| SoTA Performance | Tops 3B models; competitive with 4B models |

SmolLM3 Architecture & Model Anatomy

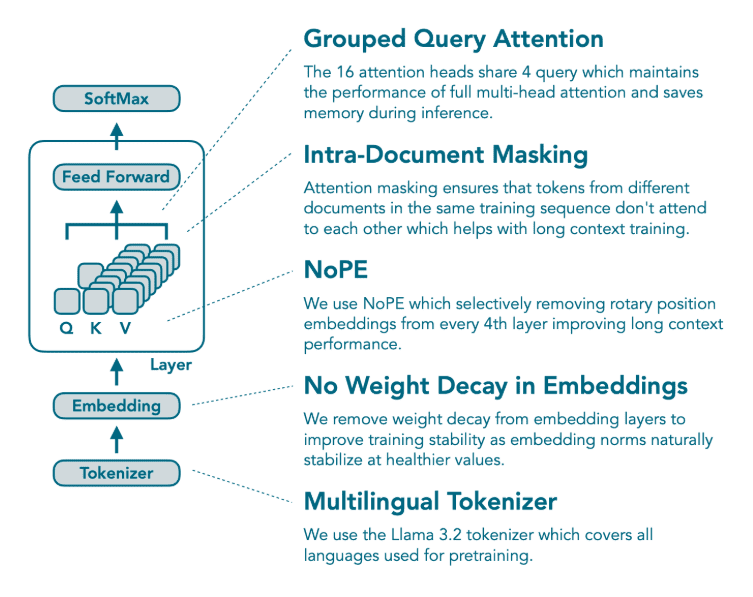

SmolLM3 builds on the proven Llama decoder architecture but introduces several targeted improvements to maximize efficiency, long-context performance, and multilingual capabilities.

Grouped Query Attention (GQA)

SmolLM3 replaces standard multi-head attention with Grouped Query Attention: its 16 query heads are split into four groups, and each group shares a single key/value head. Because keys and values are computed and cached for only 4 heads instead of 16, the K/V projections and the KV cache shrink to roughly a quarter of their multi-head size, without any measurable loss in representational power. As a result, SmolLM3 can devote more memory to inference over longer contexts and richer data.

- What it is: 16 query heads sharing 4 key/value heads

- Benefit: K/V projections and KV cache cut to ~25 % of their multi-head size without quality loss
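The effect of sharing key/value heads on the KV cache can be checked with a little arithmetic. The sketch below assumes 16 query heads and 4 key/value heads. The layer count (36), head dimension (128), and 2-byte cache entries are illustrative assumptions, not values stated in this post.

```python
def kv_cache_bytes(seq_len, num_layers, num_kv_heads, head_dim, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence."""
    # Factor of 2: each layer stores one key tensor and one value tensor.
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


NUM_LAYERS = 36   # assumption, for illustration only
HEAD_DIM = 128    # assumption, for illustration only
SEQ_LEN = 65_536  # a 64 K-token context

# Multi-head attention would cache one K/V head per query head (16).
mha_bytes = kv_cache_bytes(SEQ_LEN, NUM_LAYERS, num_kv_heads=16, head_dim=HEAD_DIM)
# Grouped query attention caches only the 4 shared K/V heads.
gqa_bytes = kv_cache_bytes(SEQ_LEN, NUM_LAYERS, num_kv_heads=4, head_dim=HEAD_DIM)

ratio = gqa_bytes / mha_bytes
print(f"MHA: {mha_bytes / 2**30:.1f} GiB, GQA: {gqa_bytes / 2**30:.1f} GiB, ratio: {ratio:.2f}")
```

Whatever the assumed layer count and head size, the ratio depends only on the number of key/value heads, so it stays at 4/16.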

NoPE (“No Positional Embedding”) Layers

Positional embeddings help models understand token order, but they can interfere with extrapolating to very long sequences. SmolLM3 adopts a hybrid approach, where every fourth layer omits rotary positional embeddings entirely (“NoPE”). This simple tweak preserves short-context performance while significantly improving the model’s ability to maintain coherence over tens of thousands of tokens.

- Pattern: Every 4th layer omits Rotary Positional Embeddings

- Outcome: Better extrapolation to long contexts with minimal impact on shorter inputs
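As a minimal sketch of the pattern, the helper below marks which layers apply RoPE. The function name, the 36-layer depth, and the convention that layers are counted from 1 (so layers 4, 8, 12, ... are the NoPE layers) are assumptions made for illustration.

```python
def rope_layer_pattern(num_layers, nope_interval=4):
    """Return one boolean per layer: True if the layer applies RoPE."""
    # Counting layers from 1, every `nope_interval`-th layer skips RoPE.
    return [(layer_idx + 1) % nope_interval != 0 for layer_idx in range(num_layers)]


pattern = rope_layer_pattern(num_layers=36)  # 36 layers is an assumption
nope_layers = [idx for idx, uses_rope in enumerate(pattern) if not uses_rope]
print(f"{len(nope_layers)} NoPE layers at 0-based indices {nope_layers}")
```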

Intra-Document Masking

When several documents are packed into the same training sequence, SmolLM3 ensures that tokens from different documents cannot attend to each other. This intra-document masking preserves the internal coherence of each text and prevents “bleed” between unrelated passages, an essential property when later extending the context window to hundreds of pages.

- Mechanism: Prevents attention across different documents packed into the same sequence

- Result: Preserves per-document coherence, especially for long sequences
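The masking rule can be sketched in a few lines of plain Python. This is an illustration of the idea rather than the training code: `doc_ids` is a hypothetical per-token list of document indices, and a position may attend to another only if it comes at or before it and belongs to the same document.

```python
def intra_document_causal_mask(doc_ids):
    """mask[q][k] is True when query position q may attend to key position k."""
    n = len(doc_ids)
    return [
        [k <= q and doc_ids[k] == doc_ids[q] for k in range(n)]
        for q in range(n)
    ]


# Two documents packed together: three tokens from doc 0, two from doc 1.
doc_ids = [0, 0, 0, 1, 1]
mask = intra_document_causal_mask(doc_ids)
for row in mask:
    print("".join("x" if allowed else "." for allowed in row))
```

In the printed grid, the second document's rows start fresh: its tokens see nothing from the first document, even though both sit in the same sequence.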

Tied Embeddings & Multilingual Tokenizer

SmolLM3 uses the Llama 3.2 tokenizer, a shared 128,256-token vocabulary covering English, French, Spanish, German, Italian, and Portuguese. Input and output embeddings are tied, reducing the total number of parameters, and the embedding matrices are exempt from weight decay during optimization. This stabilizes their norms and preserves representational integrity.

- Tokenizer: Llama 3.2 base, shared ~128 K-token vocab

- Embedding: Shared input/output weights, with embeddings exempt from weight decay
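One common way to exempt embeddings from weight decay is to split parameters into two optimizer groups. The sketch below shows the idea on parameter names only. The names, the `"embed"` substring rule, and the helper itself are hypothetical, and the 0.1 decay value is the one quoted in the training configuration.

```python
def split_weight_decay_groups(param_names, weight_decay=0.1):
    """Split parameter names into a decayed group and a decay-free group."""
    decay, no_decay = [], []
    for name in param_names:
        # Assumption: embedding parameters can be recognised by their name.
        (no_decay if "embed" in name else decay).append(name)
    return [
        {"params": decay, "weight_decay": weight_decay},
        {"params": no_decay, "weight_decay": 0.0},
    ]


# Hypothetical parameter names, for illustration only.
names = [
    "embed_tokens.weight",
    "layers.0.attn.q_proj.weight",
    "layers.0.mlp.up_proj.weight",
]
groups = split_weight_decay_groups(names)
for group in groups:
    print(group["weight_decay"], group["params"])
```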

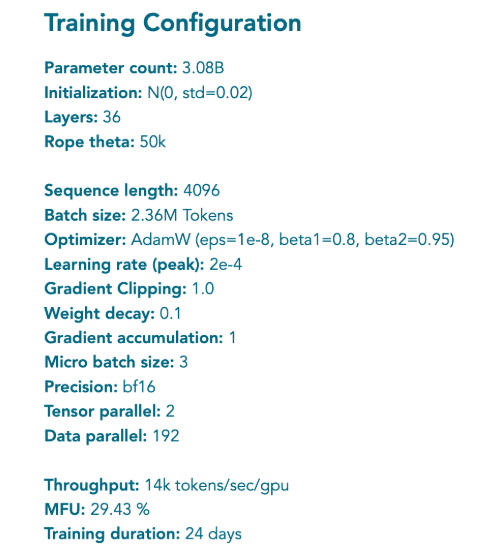

Training Configuration of SmolLM3

Training a model at the scale of SmolLM3, over 11 trillion tokens and an additional 100 billion tokens for context extension, requires careful tuning of optimizer settings, batch schedules, and distributed infrastructure. Below is the exact configuration used throughout both pretraining and long-context adaptation.

Optimizer & Learning‐Rate Schedule

SmolLM3 uses the AdamW optimizer (β₁ = 0.9, β₂ = 0.95, ε = 1 × 10⁻⁸) with a global weight decay of 0.1, excluding the embedding layers, which remain decay-free to preserve their representational norms. Gradients are clipped to a maximum norm of 1.0 to prevent exploding updates. Learning rates follow a Warmup-Stable-Decay (WSD) pattern:

- Warmup (2000 steps): Linear ramp from zero up to the peak learning rate of 2 × 10⁻⁴.

- Stable Phase (~10 trillion tokens): Maintain the peak rate for the bulk of training.

- Decay Phase (final 10 % of tokens): Linear decline from peak down to zero over approximately 1.1 trillion tokens.

This three-stage schedule ensures rapid convergence early on, a sustained learning rate during major updates, and gentle fine-tuning as the model approaches its final parameters.
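A minimal sketch of such a schedule, using the warmup length, peak rate, and decay fraction quoted above. The function name and the step-based (rather than token-based) bookkeeping are assumptions, and the total step count in the example is arbitrary.

```python
def wsd_learning_rate(step, total_steps, peak_lr=2e-4, warmup_steps=2000, decay_fraction=0.10):
    """Warmup-Stable-Decay: linear warmup, constant plateau, linear decay to zero."""
    decay_steps = round(total_steps * decay_fraction)
    decay_start = total_steps - decay_steps
    if step < warmup_steps:
        # Linear ramp from zero to the peak rate.
        return peak_lr * step / warmup_steps
    if step < decay_start:
        # Stable phase: hold the peak rate.
        return peak_lr
    # Decay phase: linear decline to zero at the final step.
    remaining = max(total_steps - step, 0)
    return peak_lr * remaining / decay_steps


TOTAL_STEPS = 100_000  # arbitrary, for illustration
for step in (0, 1_000, 2_000, 50_000, 95_000, 100_000):
    print(step, wsd_learning_rate(step, TOTAL_STEPS))
```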

Batching, Precision & Initialization

- Sequence Length: 4,096 tokens per sample during all three pretraining phases.

- Global Batch Size: ≈ 2.36 million tokens per optimization step (across all GPUs).

- Micro-Batch Size: 3 sequences per GPU.

- Precision: bfloat16 for all forward and backward passes, balancing numerical stability with throughput.

- Weight Initialization: Random normal distribution with μ = 0 and σ = 0.02.

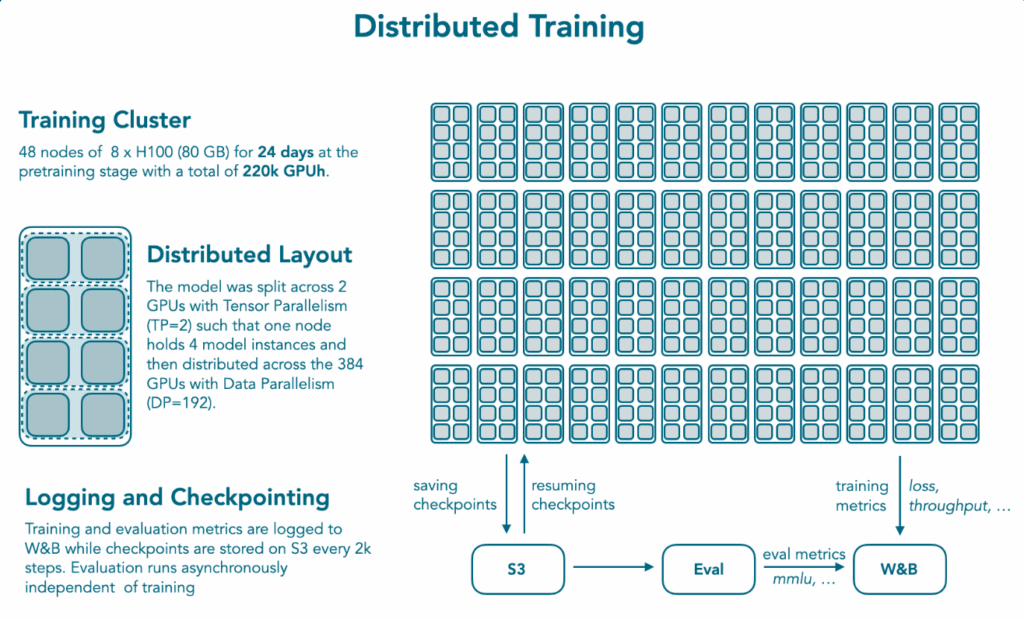

Distributed Infrastructure

Training runs on a cluster of 48 nodes, each equipped with 8 × NVIDIA H100 (80 GB) GPUs, totaling 384 GPUs. The workload is split as follows:

- Tensor Parallelism (TP = 2): Each model replica is sharded across two GPUs on the same node, enabling high-speed cross-GPU communication for the attention layers.

- Data Parallelism (DP = 192): Four such TP=2 replicas per node (8 GPUs ÷ 2) yield 192 replicas, each processing a distinct micro-batch in lockstep.

Overall, this yields a throughput of ~14,000 tokens/sec per GPU, with a measured Model FLOP Utilization (MFU) of 29.4 %—a strong indicator of efficient hardware usage. End-to-end training requires roughly 220,000 GPU-hours (≈ 24 days wall-clock).
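These figures are mutually consistent, which a short back-of-the-envelope script can confirm. It uses only numbers quoted in this section, plus one assumption: each data-parallel replica contributes exactly one micro-batch per optimizer step (no gradient accumulation).

```python
NODES = 48
GPUS_PER_NODE = 8
TENSOR_PARALLEL = 2
MICRO_BATCH_SEQS = 3          # sequences per replica per step
SEQ_LEN = 4096                # tokens per sequence
TOKENS_PER_SEC_PER_GPU = 14_000
TOTAL_TOKENS = 11.2e12        # pretraining budget

total_gpus = NODES * GPUS_PER_NODE
data_parallel = total_gpus // TENSOR_PARALLEL

# Assumption: one micro-batch per replica per optimizer step.
tokens_per_step = data_parallel * MICRO_BATCH_SEQS * SEQ_LEN

train_seconds = TOTAL_TOKENS / (total_gpus * TOKENS_PER_SEC_PER_GPU)
wall_clock_days = train_seconds / 86_400
gpu_hours = train_seconds / 3_600 * total_gpus

print(f"GPUs: {total_gpus}, data-parallel replicas: {data_parallel}")
print(f"Tokens per step: {tokens_per_step:,}")
print(f"Wall-clock: {wall_clock_days:.1f} days, GPU-hours: {gpu_hours:,.0f}")
```

The token count per step lands at about 2.36 million, and the throughput figure implies roughly 24 days and a little over 220,000 GPU-hours, in line with the numbers above.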

Software Stack & Logging

- nanotron: Core training engine, handling optimizer steps and mixed-precision execution.

- datatrove: High-performance data ingestion and preprocessing library, ensuring seamless delivery of tokens to the GPUs.

- lighteval: Asynchronous evaluation toolkit that loads saved checkpoints and runs validation suites without stalling the main training loop.

All training and evaluation metrics—loss curves, throughput, and benchmark scores—are logged in real time to Weights & Biases (W&B), while model checkpoints are asynchronously saved to Amazon S3 every 2,000 steps, guaranteeing robustness against interruptions.

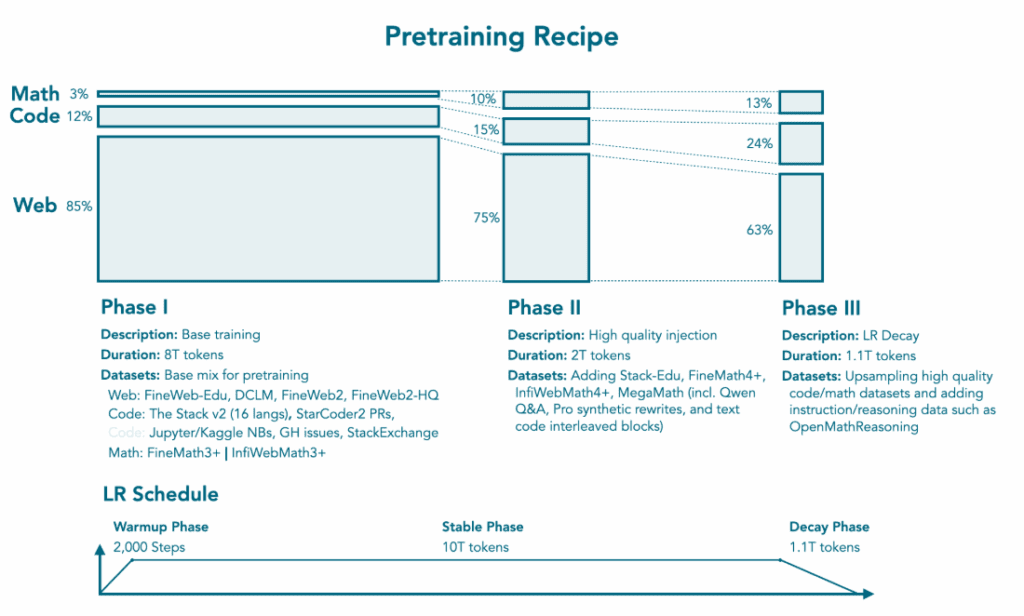

Pretraining Recipe of SmolLM3

SmolLM3’s 11.2 T-token pretraining follows a three-phase curriculum:

Phase I – Base Pretraining (0 → 8 T tokens)

The first phase prioritizes breadth: 85 percent web text, 12 percent code, and 3 percent math/science. Over 8 trillion tokens, the model learns general language patterns, code syntax, and mathematical reasoning basics. This stage uses a 4,096-token context window. Under the warmup-stable-decay (WSD) schedule, the learning rate ramps up to its peak of 2 × 10⁻⁴ during warmup and then holds steady for the rest of this phase; the decay to zero happens only over the final 10 percent of tokens, in Phase III.

- Mix: 85 % web text, 12 % code, 3 % math/science

- Goal: Establish broad language and code understanding

Phase II – High-Quality Injection (8 → 10 T tokens)

In the second phase, SmolLM3 injects richer, higher-quality examples: the mix shifts to 75 percent web, 15 percent code, and 10 percent math/science. New datasets like Stack-Edu, FineMath4+, and MegaMath (with Q&A pairs) sharpen the model’s reasoning and coding skills. Over 2 trillion tokens, the model refines its ability to solve complex problems and generate accurate code.

- Mix: 75 % web, 15 % code, 10 % math/science

- Datasets: Stack-Edu, FineMath4+, MegaMath, proprietary synthetic rewrites

- Goal: Sharpen reasoning and coding skills

Phase III – Decay & Niche Domains (10 → 11.1 T tokens)

The final pretraining segment emphasizes specialized domains and gradual learning-rate decay. The mix becomes 63 percent web, 24 percent code, and 13 percent math/science, with upsampled high-quality code/math sources and specialized reasoning corpora such as OpenMathReasoning. As the learning rate declines, the model solidifies its specialized capabilities without overfitting.

- Mix: 63 % web, 24 % code, 13 % math/science

- Datasets: Upsampled code/math, OpenMathReasoning

- Goal: Solidify specialized capabilities while decaying the learning rate
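The three-phase curriculum can be written down as a small config, which also makes it easy to see how many tokens each domain receives overall. The dictionary layout and helper name are illustrative assumptions. The token budgets and percentages are the ones listed above, with Phase III taken as 1.1 trillion tokens (10 → 11.1 T).

```python
PHASES = [
    {"name": "Phase I", "tokens_t": 8.0, "mix": {"web": 0.85, "code": 0.12, "math": 0.03}},
    {"name": "Phase II", "tokens_t": 2.0, "mix": {"web": 0.75, "code": 0.15, "math": 0.10}},
    {"name": "Phase III", "tokens_t": 1.1, "mix": {"web": 0.63, "code": 0.24, "math": 0.13}},
]


def tokens_per_domain(phases):
    """Sum the tokens (in trillions) each domain receives across all phases."""
    totals = {}
    for phase in phases:
        # Each phase's mixture must account for all of its tokens.
        assert abs(sum(phase["mix"].values()) - 1.0) < 1e-9
        for domain, share in phase["mix"].items():
            totals[domain] = totals.get(domain, 0.0) + share * phase["tokens_t"]
    return totals


totals = tokens_per_domain(PHASES)
for domain, tokens_t in totals.items():
    print(f"{domain}: {tokens_t:.3f} T tokens")
```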

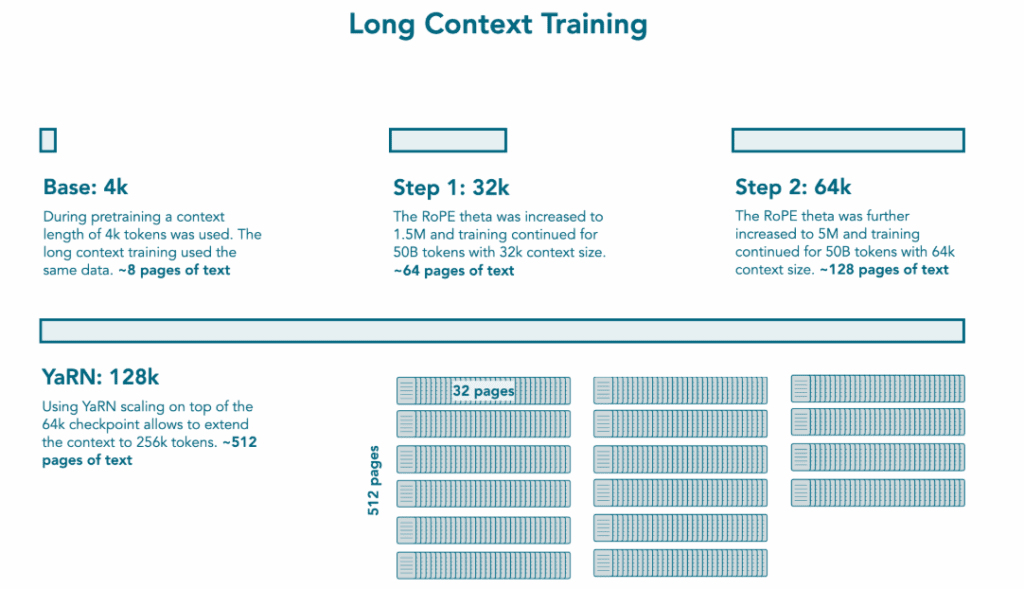

Long-Context Extension in SmolLM3

Beyond its 4 K-token training window, SmolLM3 undergoes 100 billion additional tokens of mid-training to stretch its memory.

- 4 K → 32 K (50B tokens; RoPE θ → 1.5M)

  - The RoPE base frequency (θ) increases to 1.5 million.

  - The model continues on a decay-phase mixture, learning to process multi-page documents.

- 32 K → 64 K (50B tokens; RoPE θ → 5M)

  - RoPE θ rises further to 5 million.

  - Another 50 billion tokens cement the model’s ability to read and reason across book-length texts.

- YaRN Extrapolation to 128 K (no further training)

  - Without any gradient updates, SmolLM3 applies YaRN (“Yet another RoPE extensioN”) at inference to handle up to 128,000 tokens, equivalent to hundreds of pages, with stable performance.

This staged approach ensures stable attention over book-length texts.
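To see why raising θ helps, it is useful to look at the rotation wavelengths RoPE assigns to each pair of dimensions: a larger base stretches the slowest rotations so that distant positions remain distinguishable. The sketch below uses the standard RoPE frequency formula. The head dimension of 128 and the reading of "K" as 1,024 tokens are assumptions.

```python
import math


def rope_wavelengths(theta, head_dim=128):
    """Wavelength (in tokens) of each rotary dimension pair for a given base theta."""
    # Pair i rotates with angular frequency theta ** (-2i / head_dim) per token.
    return [2 * math.pi * theta ** (2 * i / head_dim) for i in range(head_dim // 2)]


for target_len, theta in [(32 * 1024, 1.5e6), (64 * 1024, 5e6)]:
    longest = max(rope_wavelengths(theta))
    print(f"context {target_len:>6}: theta={theta:.1e}, longest wavelength ~ {longest:,.0f} tokens")

# YaRN then stretches the trained 64 K window to 128 K at inference time.
yarn_factor = (128 * 1024) / (64 * 1024)
print(f"YaRN scaling factor: {yarn_factor}")
```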

Supervised Fine-Tuning & Chat Template

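To teach instruction following, SmolLM3 combines synthetic supervised fine-tuning (SFT) data with a chat template that exposes both reasoning modes.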

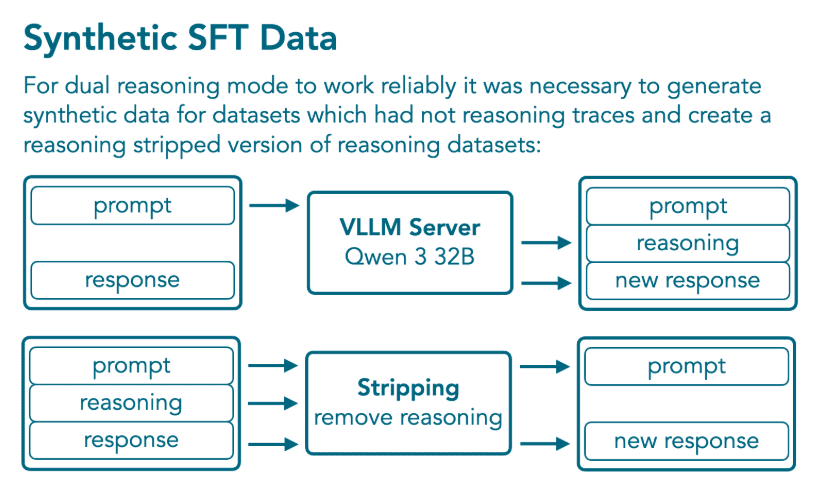

Synthetic Data Generation

Public reasoning datasets are uneven across domains, so SmolLM3’s team created 1.8 billion tokens of synthetic SFT examples using a stronger oracle model (Qwen3-32B). For each prompt:

- The oracle generates a full chain-of-thought response and a final answer.

- Those traces are stripped to create paired “think” and “no_think” examples.

- The resulting corpus balances 0.8 billion tokens in reasoning mode with 1.0 billion tokens in direct-answer mode.

- Synthetic SFT Data (≈ 1.8 B tokens)

  - Generated by querying a Qwen3-32B oracle in think mode

  - Stripped to create balanced think (0.8 B) and no_think (1.0 B) examples
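The stripping step might look something like the sketch below. It assumes the oracle wraps its reasoning in `<think>…</think>` tags; the helper name and the record layout are hypothetical, not the team's actual pipeline.

```python
import re

# Matches a reasoning trace, including newlines inside it.
THINK_BLOCK = re.compile(r"<think>.*?</think>", flags=re.DOTALL)


def make_mode_pair(prompt, oracle_response):
    """Build a think example and a no_think example from one oracle response."""
    direct_answer = THINK_BLOCK.sub("", oracle_response).strip()
    return {
        "think": {"prompt": prompt, "response": oracle_response.strip()},
        "no_think": {"prompt": prompt, "response": direct_answer},
    }


pair = make_mode_pair(
    "What is 12 * 7?",
    "<think>12 * 7 = 84.</think>\nThe answer is 84.",
)
print(pair["think"]["response"])
print(pair["no_think"]["response"])
```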

Chat Template Design

- A flexible chat format lets users switch modes with simple flags.

  - /think: Enables step-by-step reasoning.

  - /no_think: Produces concise answers, with empty <think>…</think> placeholders.

- Tool definitions (XML or JSON) and metadata blocks ensure reliable function calling and optional system-prompt overrides.

- Chat Template

  - System message with /think or /no_think flags

  - Tool-calling definitions in XML/JSON

  - Consistent metadata blocks that can be overridden for customization
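In practice, switching modes comes down to what goes into the system message. The helper below is a hypothetical convenience function that builds a standard role/content message list with the appropriate flag appended; exactly where the flag sits within the system prompt is an assumption.

```python
def build_messages(user_prompt, reasoning=True, system_prompt=""):
    """Build a chat message list with the /think or /no_think flag in the system message."""
    flag = "/think" if reasoning else "/no_think"
    # Append the flag to any custom system prompt (assumed placement).
    system_content = f"{system_prompt} {flag}".strip()
    return [
        {"role": "system", "content": system_content},
        {"role": "user", "content": user_prompt},
    ]


fast = build_messages("Summarize GQA in one sentence.", reasoning=False)
deep = build_messages("Prove that 17 is prime.", system_prompt="You are a careful tutor.")
print(fast[0]["content"])
print(deep[0]["content"])
```

A list in this shape is what a tokenizer's chat template typically consumes, for example through `apply_chat_template` in Transformers.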

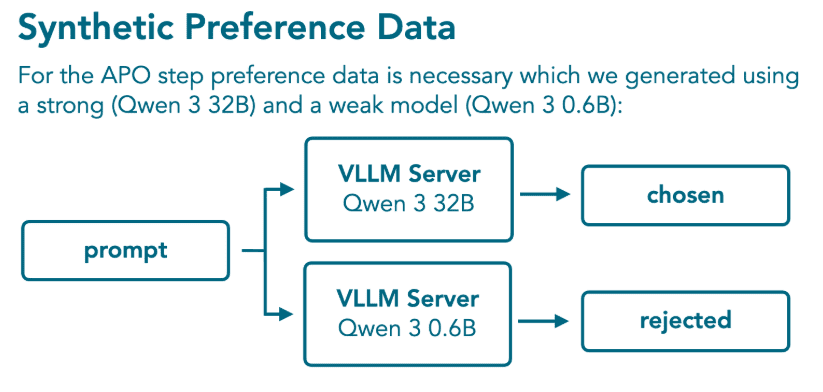

Off-Policy Alignment with APO

To refine its behavior, SmolLM3 uses Anchored Preference Optimization (APO), an off-policy variant of Direct Preference Optimization (DPO).

- Generate Preference Pairs

  - For each SFT example, compare “think” vs. “no_think” responses and label them as “chosen” or “rejected.”

- DPO Recap: the core reward formulation used to align a language model to preferred outputs without reinforcement learning.

- APO Loss

  - Anchors update symmetrically to the reference model. This symmetric anchoring prevents the model from drifting too far from its pretrained abilities.

  - APO yields smoother training and stronger downstream performance than vanilla DPO, especially on complex reasoning tasks.
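For reference, here is a minimal sketch of the two losses on a single preference pair. Both are built on the implicit reward β·(log π(y|x) − log π_ref(y|x)). The APO form shown is the APO-zero variant from the APO paper; whether SmolLM3 used exactly this variant, and the β value of 0.1, are assumptions.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def implicit_reward(policy_logp, ref_logp, beta=0.1):
    """Scaled log-ratio between the policy and the frozen reference model."""
    return beta * (policy_logp - ref_logp)


def dpo_loss(r_chosen, r_rejected):
    # DPO only cares about the margin between chosen and rejected rewards.
    return -math.log(sigmoid(r_chosen - r_rejected))


def apo_zero_loss(r_chosen, r_rejected):
    # APO-zero anchors each reward separately at zero (the reference model):
    # push the chosen reward up and the rejected reward down.
    return -sigmoid(r_chosen) + sigmoid(r_rejected)


# Hypothetical sequence log-probabilities for one preference pair.
r_w = implicit_reward(policy_logp=-42.0, ref_logp=-45.0)  # chosen
r_l = implicit_reward(policy_logp=-50.0, ref_logp=-48.0)  # rejected
print(f"DPO loss: {dpo_loss(r_w, r_l):.4f}, APO-zero loss: {apo_zero_loss(r_w, r_l):.4f}")
```

The difference is visible in the signatures: DPO can lower its loss by moving both rewards as long as the gap widens, while the anchored loss ties each reward's direction to the reference model.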

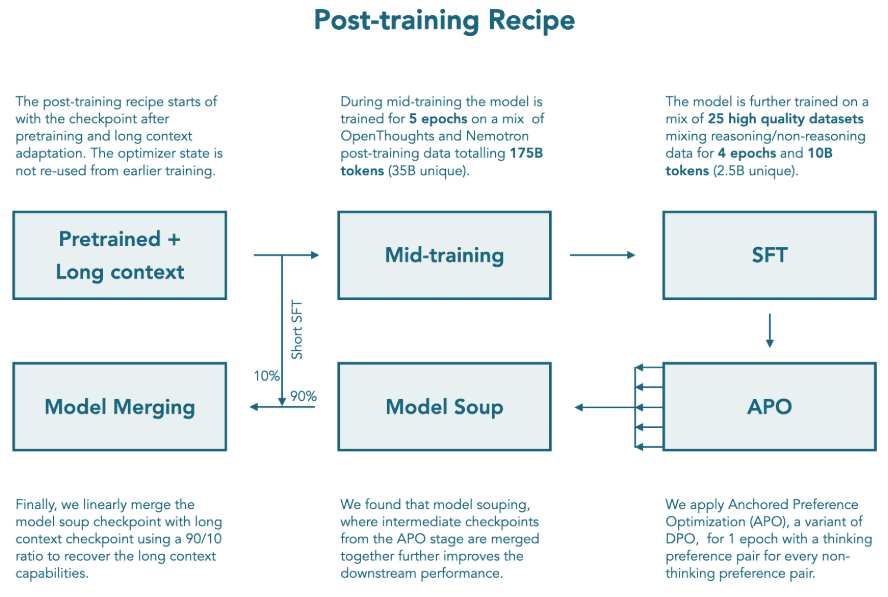

Model Merging & Final Checkpoint

Post-alignment, the team noticed a slight drop in long-context performance. To restore those capabilities, they used a two-step merging strategy:

- Model Soup: Linearly average all checkpoints from the APO stage. This smooths out random variations.

- Linear Blend:

  - Merge 90 percent of the APO soup with 10 percent of the pre-SFT long-context checkpoint.

  - The result retains robust chain-of-thought and instruction alignment while recovering top-tier performance on 128 K-token tasks.

This produces the final SmolLM3 release.
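Both merging steps are plain weighted averages over matching parameters. The sketch below illustrates them on toy checkpoints represented as dictionaries of float lists. The function names and the toy values are hypothetical, and a real merge would operate on full tensor state dicts.

```python
def average_checkpoints(checkpoints):
    """Model soup: element-wise mean of every parameter across checkpoints."""
    n = len(checkpoints)
    return {
        name: [sum(values) / n for values in zip(*(ckpt[name] for ckpt in checkpoints))]
        for name in checkpoints[0]
    }


def linear_blend(primary, secondary, primary_weight=0.9):
    """Weighted average of two checkpoints with matching parameter names."""
    secondary_weight = 1.0 - primary_weight
    return {
        name: [primary_weight * p + secondary_weight * s
               for p, s in zip(primary[name], secondary[name])]
        for name in primary
    }


# Toy stand-ins for two APO checkpoints and the long-context checkpoint.
apo_checkpoints = [{"w": [1.0, 3.0]}, {"w": [3.0, 5.0]}]
long_context_checkpoint = {"w": [0.0, 10.0]}

soup = average_checkpoints(apo_checkpoints)
final = linear_blend(soup, long_context_checkpoint, primary_weight=0.9)
print(soup, final)
```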

Evaluation & Benchmarks

Base Model (3 B) Performance

- Leads the 3B class on commonsense, multiple-choice, and multilingual benchmarks

- Competitive vs. 4B models on key tasks

- Long-Context (RULER-64K): 67.9 % (3rd place)

Instruct Mode (no_think)

- Win Rate: ~3.7 % vs. other 3B instruct models

- Approaches 4B performance on eight instruction tasks

Multilingual Strength:

Tops Flores-200 translation and averages over 50 percent across French, Spanish, German, Italian, and Portuguese, beating 4B competitors in every language.

Chain-of-Thought (think)

Major Gains:

Gains of +27 pp on AIME math, +15 pp on programming benchmarks, +6 pp on graduate-level reasoning.

- AIME: +27.4 pp

- LiveCodeBench: +15.3 pp

- GPQA: +6.0 pp

Dual-mode flexibility: a single model offers a trade-off between fast, concise answers and in-depth reasoning.

Deployment & Usage

SmolLM3 is designed for practical, on-device deployment:

- Frameworks: Transformers, vLLM, ONNX, llama.cpp, MLC

- Inference: Single GPU (12–16 GB VRAM); quantized int8/4 for edge

- Tool-Calling: JSON/XML interfaces for structured outputs

- Privacy: Fully on-device operation for sensitive applications
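A quick estimate shows why quantization matters for edge devices. The sketch below computes the memory needed for the weights alone, treating the model as exactly 3 billion parameters (an approximation). The KV cache, activations, and runtime overhead come on top, which is why a larger VRAM budget is quoted for unquantized inference.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Memory for the model weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


NUM_PARAMS = 3e9  # approximate parameter count

for label, bits in [("bfloat16", 16), ("int8", 8), ("int4", 4)]:
    print(f"{label:>8}: {weight_memory_gb(NUM_PARAMS, bits):.1f} GB")
```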

Conclusion

SmolLM3 represents a new standard in small-scale language modeling. Through meticulous architectural refinements, a transparent multi-phase training curriculum, dual-mode reasoning, and a robust alignment pipeline, it achieves state-of-the-art results at just 3 billion parameters. Whether you’re tackling book-length documents in multiple languages, building instruction-following agents, or deploying private, on-device AI, SmolLM3’s complete blueprint empowers you to push the boundaries of what’s possible with compact, efficient models.
