SigLIP 2 is a family of multilingual vision-language encoders with improved semantic understanding, localization, and dense feature extraction. It builds on the original SigLIP and adds several pre-training techniques, which makes it versatile and effective across a wide range of applications.

For AI enthusiasts, researchers, and developers, SigLIP 2 isn’t just another iteration; it’s a significant leap forward. Let’s dive into what makes this new model family so compelling.

- Why SigLIP 2 Matters

- Key Improvements in SigLIP 2

- Architecture Overview: Building on Strength

- The Training Objectives: A Multi-faceted Approach

- Performance Highlights: A New Benchmark

- Implications for the AI Community

- Conclusion

- References

Why SigLIP 2 Matters

While models like CLIP have been foundational, SigLIP 2 addresses areas where “vanilla” approaches sometimes fall short. It offers a more holistic understanding by:

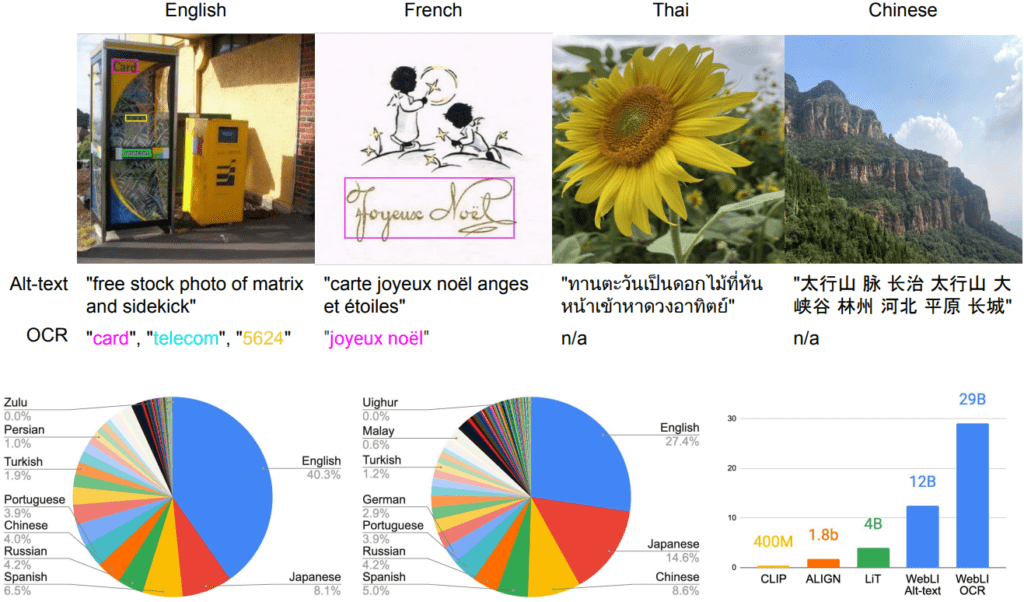

- Elevating Multilingual Capabilities: Truly global AI requires understanding across diverse languages and cultural contexts. SigLIP 2 excels here, showing strong performance on English-focused tasks while delivering robust results on multilingual benchmarks.

- Improving Localization and Dense Features: Moving beyond high-level image-text matching, SigLIP 2 provides finer-grained understanding, crucial for tasks like object detection, segmentation, and referring expression comprehension.

- A Unified, Advanced Training Recipe: SigLIP 2 integrates several powerful, independently developed techniques into a cohesive and effective training strategy.

- Enhanced Fairness and Reduced Bias: Trained on diverse data with de-biasing techniques applied, SigLIP 2 aims for more equitable AI.

- Open for Innovation: With released model checkpoints, the AI community can build upon SigLIP 2 for a wide array of applications.

Key Improvements in SigLIP 2

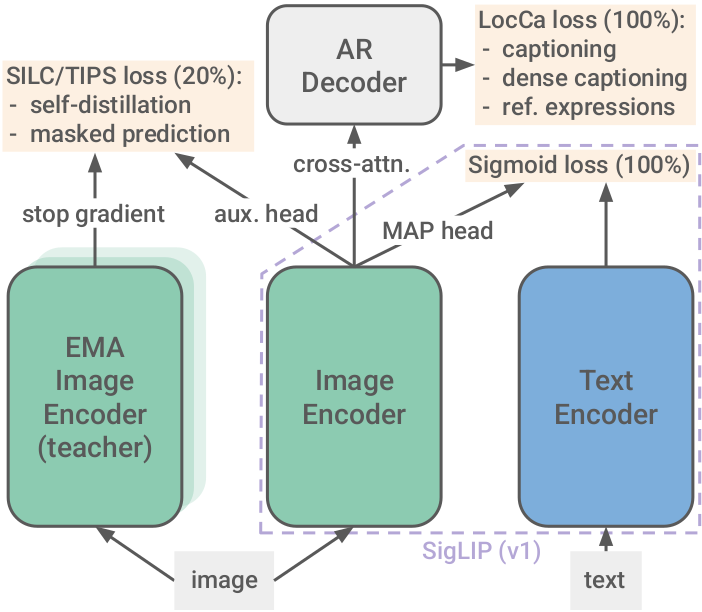

The magic of SigLIP 2 lies in its sophisticated training recipe and architectural considerations. It extends the original SigLIP’s image-text training objective by incorporating:

- Captioning-Based Pretraining (LocCa): An auxiliary decoder is used during pretraining to generate captions and understand referring expressions. This helps the model learn to connect specific image regions with textual descriptions, improving localization.

- Self-Supervised Losses (SILC & TIPS inspired): Both of the following are typically added in the later stages of training.

  - Self-Distillation: The model learns local-to-global correspondences, improving the semantics of un-pooled features.

  - Masked Prediction: Similar to masked-token prediction in NLP, some image patches are masked and the model predicts the features of those patches, forcing it to learn richer visual representations.

- Online Data Curation: Intelligent data selection during training helps optimize performance, especially for smaller model variants.

- Native Aspect Ratio & Variable Resolution (NaFlex variant): A special “NaFlex” variant supports multiple input resolutions and preserves the image’s native aspect ratio, significantly benefiting tasks like document understanding and OCR where distortion can be detrimental.
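
To make the NaFlex idea concrete, here is a minimal sketch of how a resize target could be chosen so that the aspect ratio is roughly preserved while the number of patches stays under a budget. The function name `naflex_target_size`, the default patch size of 16, the budget of 256 patches, and the rounding rule are all assumptions made for illustration; the released preprocessing may differ.

```python
import math

def naflex_target_size(height, width, patch_size=16, max_patches=256):
    """Pick a resize target (H, W) whose sides are multiples of patch_size,
    that roughly keeps the input aspect ratio, and that yields at most
    max_patches patches. Hypothetical helper, not the official preprocessing."""
    # Scale at which the patch grid would contain exactly max_patches patches.
    scale = math.sqrt(max_patches * patch_size**2 / (height * width))
    # Round each side down to a whole number of patches (at least one),
    # clamping so extreme aspect ratios cannot exceed the patch budget.
    rows = min(max_patches, max(1, math.floor(height * scale / patch_size)))
    cols = max(1, min(math.floor(width * scale / patch_size), max_patches // rows))
    return rows * patch_size, cols * patch_size
```

With these defaults, a 1024x256 image maps to a 32x8 patch grid instead of being squashed into a square, which is the property that matters for documents and OCR.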

Architecture Overview: Building on Strength

SigLIP 2 maintains backward compatibility with the original SigLIP architecture where possible, allowing for easier adoption. At its core, it typically consists of:

- Image Encoder: A Vision Transformer (ViT) variant processes images, breaking them into patches and learning powerful visual representations.

- Text Encoder: A Transformer-based text encoder (utilizing the multilingual Gemma tokenizer) processes textual input.

- Sigmoid Loss: Unlike CLIP’s softmax-based contrastive loss, SigLIP uses a pairwise sigmoid loss for image-text matching. Every image-text pairing in a mini-batch is treated as its own binary classification problem: the pair either matches or it does not.

- Auxiliary Decoder (During Pretraining Only): As seen with the LocCa component, a standard Transformer decoder is temporarily attached to the un-pooled vision encoder representation for tasks like captioning and grounded captioning. This decoder is not part of the final released model, but its inclusion during training enriches the encoder’s learned representations.
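
The pairwise sigmoid loss described above can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the released training code: the function name `sigmoid_loss` is made up for this example, and the temperature `t` and bias `b` are fixed constants here, whereas in a trained model they are learned scalars.

```python
import numpy as np

def sigmoid_loss(img_emb, txt_emb, t=10.0, b=-10.0):
    """Pairwise sigmoid loss over a mini-batch (illustrative sketch).

    img_emb, txt_emb: arrays of shape (n, d); row i of each is a matching pair.
    t, b: temperature and bias, fixed here for illustration only.
    """
    # L2-normalize so that dot products are cosine similarities.
    img = img_emb / np.linalg.norm(img_emb, axis=1, keepdims=True)
    txt = txt_emb / np.linalg.norm(txt_emb, axis=1, keepdims=True)

    logits = t * (img @ txt.T) + b           # (n, n): one score per image-text pairing
    labels = 2.0 * np.eye(len(img)) - 1.0    # +1 on the diagonal (matches), -1 elsewhere

    # -log(sigmoid(label * logit)), computed stably as log(1 + exp(-label * logit)).
    per_pair = np.logaddexp(0.0, -labels * logits)
    return per_pair.sum() / len(img)         # sum over all pairings, divided by batch size
```

Because each pairing is scored independently, the loss needs no normalization across the batch, which is the key difference from a softmax-based contrastive objective.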

The Training Objectives: A Multi-faceted Approach

SigLIP 2’s strength comes from its comprehensive training strategy that goes beyond simple image-text matching:

- Primary Sigmoid Loss: This is the core objective, training the model to distinguish between matching and non-matching image-text pairs.

- Decoder-Based Losses (from LocCa):

  - Image Captioning: Predicting textual descriptions for images.

  - Referring Expression Prediction: Predicting bounding box coordinates for captions describing specific image regions.

  - Grounded Captioning: Predicting region-specific captions given bounding box coordinates.

- Self-Distillation & Masked Prediction Losses (from SILC/TIPS): These are added at approximately 80% of training completion to refine local semantics and feature representation without disrupting learned image-text alignment.

- Data Strategy: Training utilizes the massive WebLI dataset (10 billion images, 12 billion alt-texts, 109 languages), with a 90% English and 10% non-English split, further enhanced by filtering techniques to mitigate data biases.
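
The staged schedule above, where the self-supervised losses only switch on at roughly 80% of training, can be sketched as a small helper. The function name `active_loss_weights` and all weight values are placeholders chosen for illustration; only the 80% switch point comes from the description above.

```python
def active_loss_weights(step, total_steps, switch_fraction=0.8,
                        self_distill_weight=1.0, masked_pred_weight=1.0):
    """Return illustrative per-loss weights for a given training step.

    The weight values are placeholders, not the ones used to train SigLIP 2.
    """
    weights = {
        "sigmoid": 1.0,            # primary image-text matching loss, always on
        "decoder": 1.0,            # LocCa-style decoder losses, always on
        "self_distillation": 0.0,  # off during the first part of training
        "masked_prediction": 0.0,  # off during the first part of training
    }
    # Enable the self-supervised losses for the final stretch of training.
    if step >= switch_fraction * total_steps:
        weights["self_distillation"] = self_distill_weight
        weights["masked_prediction"] = masked_pred_weight
    return weights
```

A training loop would multiply each loss term by its weight and sum the results, so the late-stage losses contribute nothing until the switch point is reached.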

Performance Highlights: A New Benchmark

SigLIP 2 models demonstrate significant improvements across various tasks and model scales:

- Superior Zero-Shot Classification & Retrieval: Outperforming previous SigLIP models and other strong baselines.

- Robust Multilingual Performance: Nearly matching specialized multilingual models (mSigLIP) on multilingual tasks while being substantially better on English tasks.

- Enhanced Localization: The decoder-based pretraining leads to notable gains in tasks requiring understanding of specific image regions.

- Better Dense Features: Improvements in tasks like semantic segmentation, depth estimation, and surface normal estimation.

- Improved Fairness: Significantly reduced representation bias compared to its predecessor.

- NaFlex Variant Excellence: The NaFlex models show strong performance, especially on OCR and document-related benchmarks where aspect ratio preservation is key.
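
For readers unfamiliar with how zero-shot classification works with a sigmoid-trained encoder, it reduces to scoring one image embedding against one text embedding per class. The sketch below assumes the embeddings have already been computed; the function name `zero_shot_scores` and the values of `t` and `b` are illustrative assumptions, since in practice the scale and bias come from the trained model.

```python
import numpy as np

def zero_shot_scores(image_emb, class_text_embs, t=10.0, b=-10.0):
    """Score one image against a set of class prompts (illustrative sketch).

    image_emb: array of shape (d,); class_text_embs: array of shape (k, d).
    Returns k independent probabilities, one per class prompt.
    """
    # Normalize so that dot products are cosine similarities.
    image = image_emb / np.linalg.norm(image_emb)
    texts = class_text_embs / np.linalg.norm(class_text_embs, axis=1, keepdims=True)
    logits = t * (texts @ image) + b
    # Each class gets its own sigmoid probability; scores need not sum to 1.
    return 1.0 / (1.0 + np.exp(-logits))
```

Taking the `argmax` of the returned scores gives the predicted class, while ranking many images by their score for a single prompt gives text-to-image retrieval.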

Implications for the AI Community

The release of SigLIP 2 and its checkpoints (ViT-B, L, So400m, and g sizes) by Google DeepMind is a boon for the AI community. It provides:

- A stronger foundation for building more capable and versatile VLMs.

- Tools for developing applications that can serve a global, multilingual audience.

- Pathways towards fairer and less biased AI systems.

- Opportunities for research into even more advanced vision-language understanding.

Conclusion

SigLIP 2 represents a significant milestone in the evolution of vision-language models. By ingeniously combining multiple advanced training techniques into a unified recipe and focusing on multilingualism, dense understanding, and fairness, Google DeepMind has provided a powerful new toolset for the AI world. As these models become integrated into downstream applications, we can expect to see even more intelligent, context-aware, and equitable AI solutions emerge.
