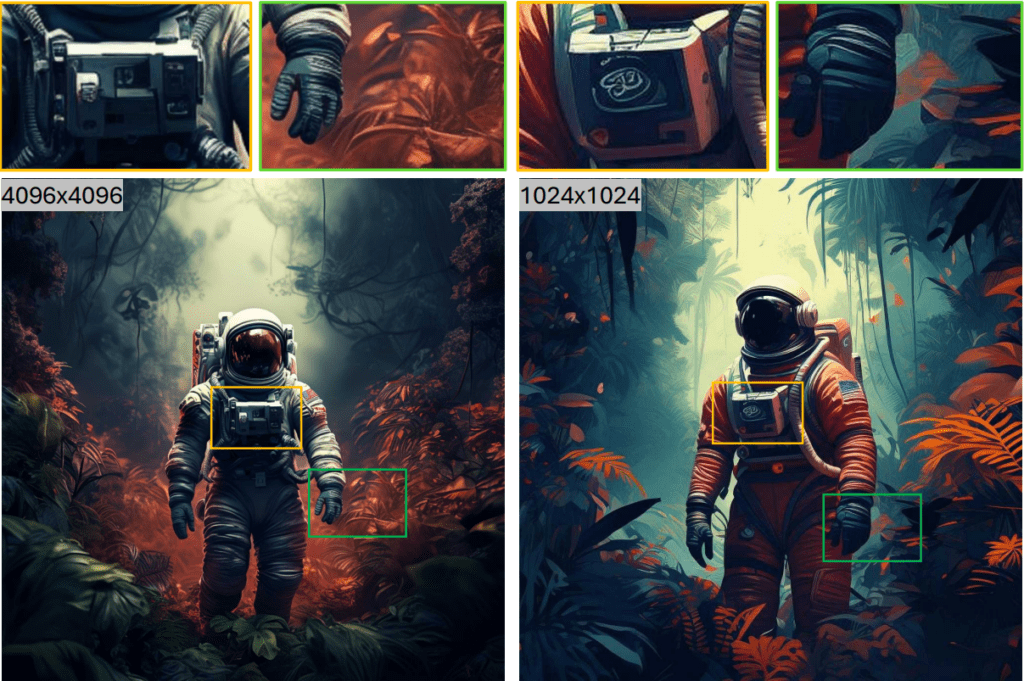

The world of generative AI moves at lightning speed, constantly pushing the boundaries of what is possible. In the vibrant field of text-to-image synthesis, generating stunningly detailed, high-resolution images often comes with a hefty price tag: massive models, demanding computational resources, and slow generation times. Enter NVIDIA SANA, a groundbreaking image generation model poised to change the game. SANA isn’t just another text-to-image generator; it’s a meticulously engineered system designed for efficiency, speed, and accessibility, capable of producing breathtaking 4096×4096 pixel images.

For artists, designers, researchers, and developers, the dream has always been high-fidelity image generation that is both fast and affordable. SANA represents a significant leap towards making this dream a reality. Let’s delve into the architectural magic that powers SANA and discover why it’s capturing the attention of the AI community.

The High-Resolution Hurdle: Why Big Images Are Hard

Generating high-resolution images (like 1K, 2K, or even 4K) from text prompts presents significant computational challenges:

- Massive Data Representation: Higher resolution means vastly more pixels, translating into larger latent representations that diffusion models must process.

- Computational Complexity: Core mechanisms in popular architectures, like the attention layers in Transformers, often scale quadratically with the input sequence length. Because the number of latent tokens itself grows with the square of the image side, doubling the resolution quadruples the token count and multiplies attention cost by roughly 16.

- Slow Sampling: Diffusion models typically require numerous iterative steps (sampling steps) to refine noise into a coherent image. More steps mean slower generation times, especially for large images.

- Memory Demands: Training and running large models capable of high-resolution output requires significant GPU memory (VRAM), limiting accessibility.
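To make this scaling concrete, here is a back-of-the-envelope sketch. It assumes a typical Stable-Diffusion-style setup (an 8× autoencoder followed by 2×2 patchification in the transformer); these values, and the helper names, are illustrative assumptions rather than SANA’s configuration.

```python
def dit_tokens(image_side: int, downsample: int = 8, patch: int = 2) -> int:
    """Tokens a diffusion transformer sees for a square image: (side / downsample / patch) squared.

    downsample=8 and patch=2 are assumed, typical Stable-Diffusion-style values.
    """
    grid = image_side // (downsample * patch)
    return grid * grid


def attention_entries(tokens: int) -> int:
    """Size of one N x N softmax attention matrix (per head, per layer)."""
    return tokens * tokens


for side in (1024, 2048, 4096):
    n = dit_tokens(side)
    print(f"{side}x{side}: {n:,} tokens, {attention_entries(n):,} attention entries")
# 1024x1024: 4,096 tokens, 16,777,216 attention entries
# 2048x2048: 16,384 tokens, 268,435,456 attention entries
# 4096x4096: 65,536 tokens, 4,294,967,296 attention entries
```

Each doubling of the image side multiplies the token count by 4 and the attention matrix by 16, which is why 4K generation is so much harder than 1K.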

Previous solutions often involved enormous models (such as Google’s Imagen or Stability AI’s more substantial SDXL variants) or complex multi-stage pipelines, including upscaling and refinement. While powerful, these approaches often remain out of reach for users without access to high-end hardware. NVIDIA recognized these bottlenecks and engineered SANA from the ground up for efficiency.

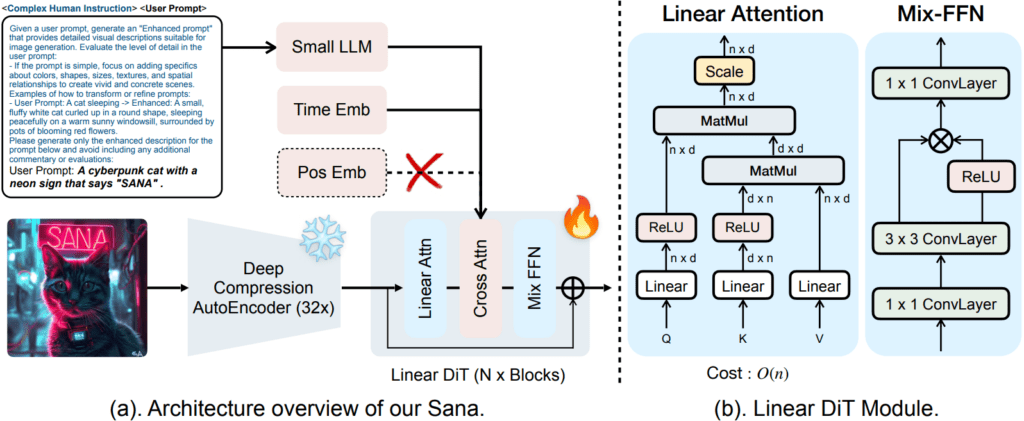

SANA’s Architectural Innovations: Smarter, Not Just Bigger

SANA’s remarkable performance is attributed to a series of architectural choices that optimize every stage of the text-to-image pipeline.

1. Deep Compression Autoencoder (DC-AE): Shrinking the Problem Space

Standard Latent Diffusion Models (LDMs), such as Stable Diffusion, typically employ an autoencoder to compress the input image into a smaller latent space, often achieving a 4x or 8x spatial compression. This reduces the computational load for the core diffusion process.

SANA takes this concept much further with its Deep Compression Autoencoder (DC-AE). This component achieves a staggering 32x spatial compression. Imagine compressing a 1024×1024 image not just to 128×128 latent features, but down to a mere 32×32!

- Impact: This drastic reduction in the size of the latent representation (fewer “latent tokens”) significantly cuts down the sequence length that the subsequent diffusion model needs to handle. This directly translates to:

  - Faster Training: Less data to process per iteration.

  - Faster Inference: Fewer computations needed during image generation.

  - Lower Memory Usage: Smaller latent spaces require less VRAM.

Crucially, the DC-AE is designed to achieve this high compression ratio while preserving the essential details needed to reconstruct a high-fidelity image later.
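The arithmetic behind the 1024×1024 example above can be checked in a few lines. This is a minimal sketch; the helper name is illustrative and only the spatial downsampling factor is modeled.

```python
def latent_grid(image_side: int, downsample: int) -> int:
    """Side length of the square latent produced by an autoencoder with this spatial downsampling."""
    if image_side % downsample:
        raise ValueError("image side must be divisible by the downsampling factor")
    return image_side // downsample


side = 1024
for f in (8, 32):
    g = latent_grid(side, f)
    print(f"f{f}: {side}x{side} -> {g}x{g} latent, {g * g:,} spatial positions")
# f8: 1024x1024 -> 128x128 latent, 16,384 spatial positions
# f32: 1024x1024 -> 32x32 latent, 1,024 spatial positions
```

Note that the saving a transformer actually sees also depends on its patch size: compared with an 8× autoencoder followed by 2×2 patchification (64×64 = 4,096 tokens), a 32×32 latent used without further patching is 4× fewer tokens, and therefore 16× fewer entries in a softmax attention matrix.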

2. Linear Attention in Diffusion Transformers (DiT): Taming Complexity

The heart of many modern generative models is the Transformer architecture, known for its powerful attention mechanism. However, the standard “softmax attention” has a computational cost that scales quadratically (O(N²)) with the sequence length (N). As SANA processes potentially large latent spaces (even after DC-AE, especially for 4K images) combined with text conditioning, this quadratic scaling becomes a bottleneck.

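```python
import math

import torch
import torch.nn.functional as F


# Standard softmax attention: materializes an N x N weight matrix, so O(N²) in sequence length
def standard_attention(q, k, v):
    d_k = q.size(-1)
    attention_weights = F.softmax(q @ k.transpose(-2, -1) / math.sqrt(d_k), dim=-1)
    return attention_weights @ v


# ReLU linear attention: reorders the products so no N x N matrix is formed, O(N) in sequence length
def linear_attention(q, k, v, eps=1e-6):
    q_prime = F.relu(q)  # (..., N, d) non-negative feature map
    k_prime = F.relu(k)  # (..., N, d)
    kv = k_prime.transpose(-2, -1) @ v  # (..., d, d_v): keys and values summarized once
    # Normalizer: each query's dot product with the sum of all keys, shape (..., N, 1)
    k_sum = k_prime.sum(dim=-2, keepdim=True)  # (..., 1, d)
    normalizer = q_prime @ k_sum.transpose(-2, -1)
    return (q_prime @ kv) / (normalizer + eps)
```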

SANA replaces the standard softmax attention with a ReLU-based Linear Attention mechanism within its Diffusion Transformer (DiT) core.

- Impact: Linear attention swaps the softmax for a simple kernel (ReLU applied to queries and keys), which lets the matrix products be reordered so the full N×N attention matrix is never formed. Its cost therefore scales linearly (O(N)) with sequence length, making the DiT significantly more efficient on the long sequences that high-resolution generation produces. This choice is key to SANA’s speed, letting it handle large latents without compute costs exploding.
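Why the reordering is exact rather than an approximation of ReLU attention can be checked numerically. The sketch below is a generic single-head ReLU linear attention without learned projections (an assumption for brevity, not SANA’s actual module): it computes the same normalized attention once by building the N×N matrix and once via the (d×d) product.

```python
import torch
import torch.nn.functional as F


def relu_attention_quadratic(q, k, v, eps=1e-6):
    """Reference O(N^2) form: build the full N x N similarity matrix explicitly."""
    q_prime, k_prime = F.relu(q), F.relu(k)
    scores = q_prime @ k_prime.transpose(-2, -1)                    # (N, N)
    weights = scores / (scores.sum(dim=-1, keepdim=True) + eps)     # row-normalize
    return weights @ v


def relu_attention_linear(q, k, v, eps=1e-6):
    """O(N) form: compute K'^T V (d x d_v) first, then multiply by Q'."""
    q_prime, k_prime = F.relu(q), F.relu(k)
    kv = k_prime.transpose(-2, -1) @ v                              # (d, d_v)
    normalizer = q_prime @ k_prime.sum(dim=-2, keepdim=True).transpose(-2, -1)  # (N, 1)
    return (q_prime @ kv) / (normalizer + eps)


torch.manual_seed(0)
N, d = 256, 32
q, k, v = (torch.randn(N, d, dtype=torch.float64) for _ in range(3))
print(torch.allclose(relu_attention_quadratic(q, k, v), relu_attention_linear(q, k, v)))  # True
```

Both functions produce the same output; only the order of the matrix multiplications differs, and that order is what turns an N×N intermediate into a d×d one. The trade-off is that a ReLU kernel is not the same function as softmax, so the model has to be trained with it.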

3. Decoder-Only Text Encoder: Efficient Text Understanding

Many text-to-image models rely on powerful yet large pre-trained text encoders, often the encoder half of an encoder-decoder model such as T5. While effective, these encoders add significantly to the model’s overall size and complexity.

SANA adopts a more streamlined approach, using a small decoder-only language model (Gemma, a GPT-style LLM) as its text encoder.

- Impact:

  - Reduced Model Size: A small decoder-only model can stand in for a much larger encoder, keeping the text-encoding stage compact.

  - Enhanced Text-Image Alignment: The paper suggests that this design, combined with an “in-context learning” strategy (placing instructions and examples in the language model’s context alongside the user’s prompt), leads to strong alignment between the text prompt and the generated image, even with a smaller text encoder.
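The “decoder-only” property that matters here is causal masking: each token’s representation can depend only on the tokens before it. The toy single-head attention below (no learned weights; not Gemma and not SANA’s text pipeline) shows this, and why text placed before a prompt, such as an instruction, can influence how every later prompt token is encoded.

```python
import torch
import torch.nn.functional as F


def causal_self_attention(x: torch.Tensor) -> torch.Tensor:
    """Single-head self-attention with a causal mask (no learned projections, for illustration)."""
    n, d = x.shape
    scores = x @ x.T / d ** 0.5
    future = torch.triu(torch.ones(n, n, dtype=torch.bool), diagonal=1)  # True above the diagonal
    scores = scores.masked_fill(future, float("-inf"))  # token i cannot attend to tokens j > i
    return F.softmax(scores, dim=-1) @ x


torch.manual_seed(0)
tokens = torch.randn(6, 8)  # 6 toy token embeddings of width 8
out = causal_self_attention(tokens)

edited_last = tokens.clone()
edited_last[-1] += 1.0  # change only the final token
out_last = causal_self_attention(edited_last)
print(torch.allclose(out[:-1], out_last[:-1]))  # expected: True (earlier positions unaffected)

edited_first = tokens.clone()
edited_first[0] += 1.0  # change only the first token, e.g. a prepended instruction
out_first = causal_self_attention(edited_first)
print(torch.allclose(out[-1], out_first[-1]))  # expected: False (the last position changes)
```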

4. Flow-DPM-Solver: Slashing Sampling Steps

The diffusion process itself involves gradually removing noise over many steps. More steps generally lead to higher quality, but drastically increase generation time. Speeding up this sampling process is critical for usability.

SANA introduces the Flow-DPM-Solver, a differential equation solver adapted from DPM-Solver++ to the flow-matching formulation that SANA is trained with.

- Impact: This solver significantly reduces the number of sampling steps required to generate a high-quality image compared to traditional solvers, which directly shortens inference. Separately, efficient training strategies such as careful caption labeling and selection (ensuring the model trains on high-quality, informative text-image pairs) help the model converge faster during training.
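Flow-DPM-Solver’s exact update rule is not reproduced here. As a generic stand-in for why a higher-order solver needs fewer steps, this sketch compares a first-order Euler integrator with a second-order Heun integrator on a toy ODE with a known solution (dx/dt = -x). The `velocity` function plays the role a trained flow model’s velocity prediction would; everything here is an illustrative assumption.

```python
import math
from typing import Callable

Velocity = Callable[[float, float], float]


def euler(v: Velocity, x0: float, t0: float, t1: float, steps: int) -> float:
    """First-order solver: one velocity evaluation per step."""
    h = (t1 - t0) / steps
    x, t = x0, t0
    for _ in range(steps):
        x += h * v(x, t)
        t += h
    return x


def heun(v: Velocity, x0: float, t0: float, t1: float, steps: int) -> float:
    """Second-order solver (Heun / improved Euler): two velocity evaluations per step."""
    h = (t1 - t0) / steps
    x, t = x0, t0
    for _ in range(steps):
        k1 = v(x, t)
        k2 = v(x + h * k1, t + h)
        x += 0.5 * h * (k1 + k2)
        t += h
    return x


def velocity(x: float, t: float) -> float:
    """Toy velocity field dx/dt = -x; exact solution x(t) = x(0) * exp(-t). t is unused here."""
    return -x


exact = math.exp(-1.0)
err_euler = abs(euler(velocity, 1.0, 0.0, 1.0, steps=20) - exact)  # 20 velocity evaluations
err_heun = abs(heun(velocity, 1.0, 0.0, 1.0, steps=5) - exact)     # 10 velocity evaluations
print(f"Euler, 20 steps: error {err_euler:.5f}")
print(f"Heun,   5 steps: error {err_heun:.5f}")
```

With half the velocity evaluations, the second-order solver lands closer to the exact answer. In a diffusion model each evaluation is a full network forward pass, so fewer evaluations translate directly into faster sampling.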

Performance That Punches Above Its Weight

The synergy of these architectural innovations results in truly impressive performance metrics:

- Blazing Speed: SANA can generate a 1024×1024 image in under 1 second on a consumer-grade laptop with a 16GB GPU. This is orders of magnitude faster than many comparable high-resolution models.

- Compact Size: The primary SANA model (SANA-0.6B) has only 0.6 billion parameters, roughly 20 times fewer than Flux-12B, yet its measured throughput is reportedly over 100 times higher.

- High Fidelity: Despite its small size and speed, SANA produces images with high fidelity and strong adherence to text prompts, achieving quality comparable to that of much larger, slower models. It supports output resolutions up to 4096×4096 pixels.
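Part of why parameter count matters for consumer GPUs is simple weight-storage arithmetic. The sketch below assumes 16-bit weights (2 bytes per parameter) and counts only the diffusion backbone; the text encoder, autoencoder, and activations add to the real footprint.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory for model weights only, in GB (1e9 bytes); 2 bytes/param assumes fp16 or bf16."""
    return num_params * bytes_per_param / 1e9


for name, params in (("SANA-0.6B", 0.6e9), ("Flux-12B", 12e9)):
    print(f"{name}: ~{weight_memory_gb(params):.1f} GB of weights in 16-bit precision")
# SANA-0.6B: ~1.2 GB of weights in 16-bit precision
# Flux-12B: ~24.0 GB of weights in 16-bit precision
```

A 24 GB backbone alone exceeds a 16GB laptop GPU, while a 1.2 GB one leaves ample room for the rest of the pipeline.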

Accessibility: SANA in Your Hands

NVIDIA isn’t keeping this powerful technology locked away. They’ve made SANA accessible through multiple channels:

- Replicate Platform: Users can easily experiment with SANA via API calls on the Replicate platform. Generating an image is affordable (around $0.0018 per run at the time of writing) and fast, with predictions often completing in about 2 seconds on high-end NVIDIA H100 GPUs. This lowers the barrier to entry for developers and creatives who want to integrate SANA into their workflows without managing infrastructure.

- Open Source on GitHub: For researchers and developers who want to dive deeper, modify the model, or build upon it, NVIDIA has released SANA’s code and weights publicly on GitHub. This fosters community involvement, encourages further innovation, and allows for self-hosting and fine-tuning.
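A minimal sketch of calling SANA on Replicate follows. It assumes the official `replicate` Python client (`pip install replicate`) and an API token in the `REPLICATE_API_TOKEN` environment variable. Only the `prompt` input is used; other inputs (size, steps, seed) and the output format should be checked on the model page, and depending on your client version you may need to pin a version as `nvidia/sana:<version>`. The price constant is the article’s snapshot, not live pricing.

```python
import os

PRICE_PER_RUN_USD = 0.0018  # from the article at the time of writing; Replicate pricing may change


def estimate_cost(num_images: int, price_per_run: float = PRICE_PER_RUN_USD) -> float:
    """Rough cost estimate, assuming one image per run."""
    return num_images * price_per_run


def generate(prompt: str):
    """Run nvidia/sana on Replicate; requires the `replicate` client and REPLICATE_API_TOKEN."""
    import replicate  # imported lazily so the cost helper works without the client installed

    if "REPLICATE_API_TOKEN" not in os.environ:
        raise RuntimeError("set REPLICATE_API_TOKEN before calling Replicate")
    # Only "prompt" is passed here; see the model page for the full input schema
    return replicate.run("nvidia/sana", input={"prompt": prompt})


if __name__ == "__main__":
    print(f"1,000 images: about ${estimate_cost(1000):.2f}")
```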

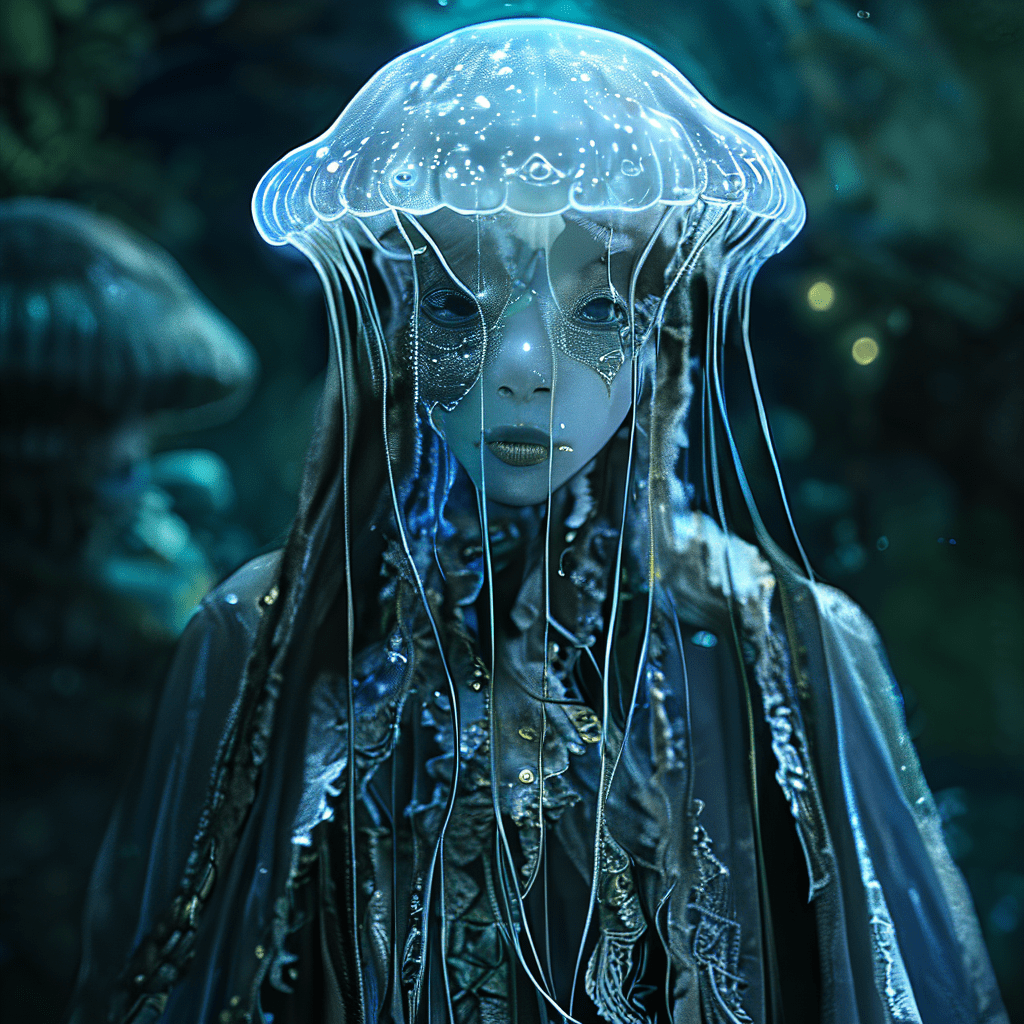

Let’s look at some samples that the SANA 0.6B model generated:

Why SANA Matters: Democratizing High-Resolution AI Art

SANA isn’t just an incremental improvement in the diffusion models space; it represents a potential paradigm shift in text-to-image generation. By drastically reducing the computational resources and time required for high-resolution output, NVIDIA is effectively democratizing access to state-of-the-art generative AI.

- Empowering Creators: Artists and designers can iterate faster, explore more complex ideas, and generate print-quality assets directly, without the need for expensive hardware or lengthy waits.

- Enabling New Applications: Real-time or near-real-time high-resolution image generation opens doors for interactive applications, dynamic content creation, and integration into diverse workflows.

- Driving Research: The open-source release provides a powerful, efficient baseline model for the research community to study, improve, and adapt for various tasks.

Conclusion: A Glimpse into the Future of Generative AI

NVIDIA’s SANA model is a testament to the power of innovative architectural design. By focusing on efficiency at every level, from latent space compression and attention mechanisms to text encoding and diffusion sampling, SANA delivers remarkably high-resolution text-to-image capabilities in a surprisingly compact and fast package. It challenges the notion that cutting-edge quality must always come with prohibitive computational costs.

As SANA becomes more widely adopted and built upon by the community, it’s likely to accelerate innovation across the creative AI landscape. Whether you’re a developer looking to integrate powerful image generation via API, a researcher exploring efficient model architectures, or a creative professional eager for faster high-resolution tools, SANA is a development worth watching closely.

Ready to experience SANA?

- Try it on Replicate: https://replicate.com/nvidia/sana

- Explore the Code on GitHub: https://github.com/NVlabs/Sana

- Read the Paper: https://arxiv.org/abs/2410.10629

- Gradio demo by MIT: https://nv-sana.mit.edu/

The era of fast, accessible, high-resolution AI image generation is dawning, and SANA is leading the charge.
