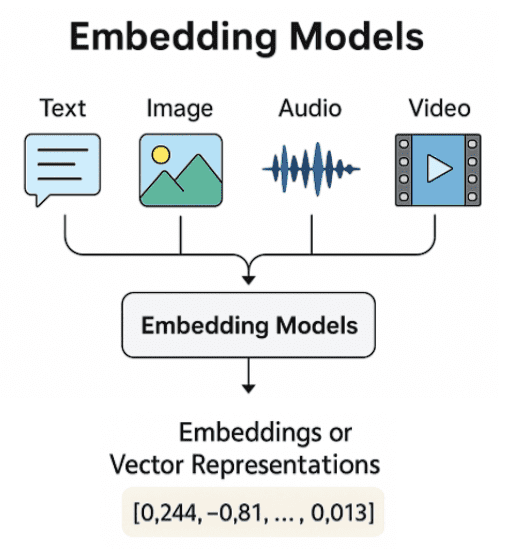

As artificial intelligence continues to advance, Embedding Models have become fundamental to how machines interpret and interact with unstructured data. By translating inputs like text, images, audio, and video into compact numerical vectors, these models enable efficient processing, semantic understanding, and meaningful comparisons across diverse data types.

This blog post comprehensively introduces embedding models, diving into their types, general functioning, and real-world applications across various modalities in great detail.

- What Are Embedding Models?

- How Embedding Models Work?

- Types of Embedding Models by Modality

- Real-World Applications of Embedding Models

- FAQs About Embedding Models

- Conclusion

What Are Embedding Models?

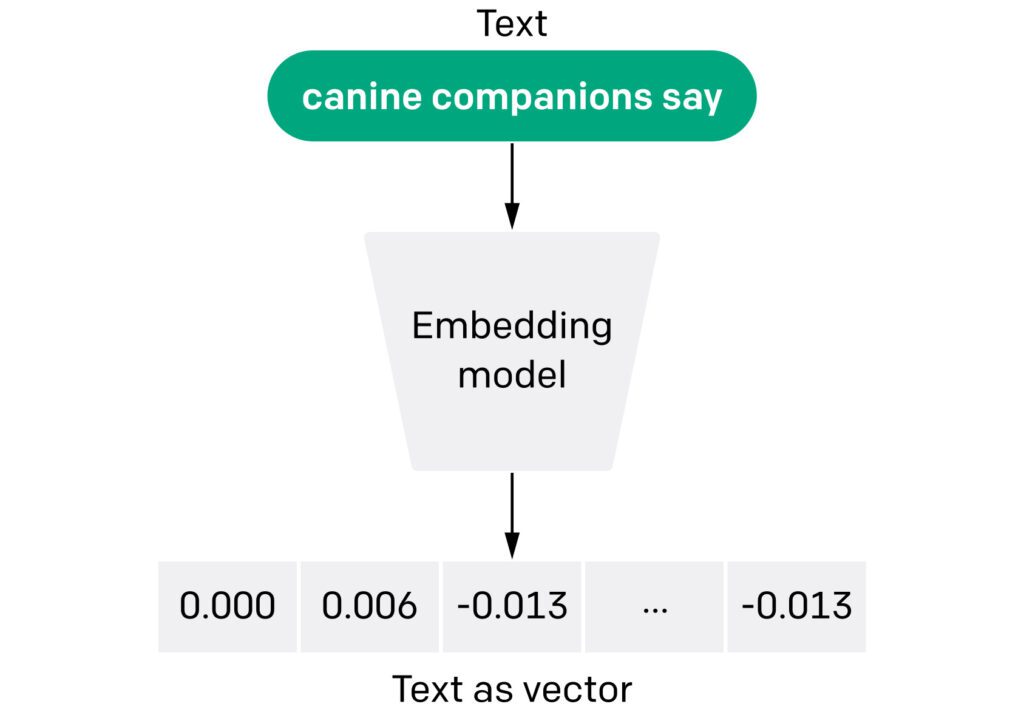

At their core, embedding models are designed to transform high-dimensional, often unstructured data into a lower-dimensional, continuous vector space. Each vector, or embedding, encapsulates the essential features of the input, preserving semantic relationships and structural information. These embeddings enable various downstream tasks, including semantic search, recommendation systems, clustering, classification, and more.

Embedding models are trained to ensure that similar inputs have embeddings that are close in the vector space, while dissimilar ones are placed far apart. This geometric arrangement in the embedding space allows for efficient similarity computations using distance metrics like cosine similarity or Euclidean distance.

How Embedding Models Work?

The functioning of embedding models generally consists of the following stages:

Input Preprocessing

This step varies depending on the data modality:

- Text: Tokenization splits sentences into words or subword units and converts them into token IDs.

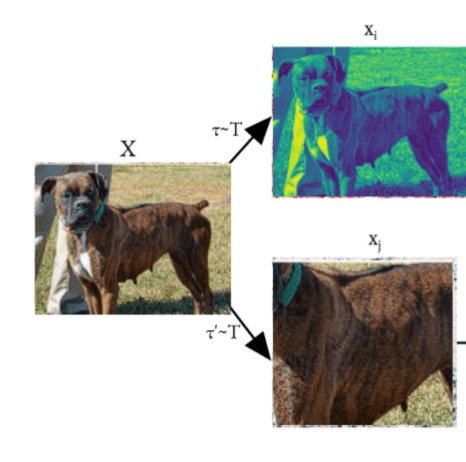

- Images: Resizing, normalization, and sometimes data augmentation (like flipping or cropping) are applied.

- Audio: Audio signals are often converted into spectrograms or processed directly as waveforms.

- Videos: Videos are decomposed into frames and sampled to capture temporal consistency.

Feature Extraction

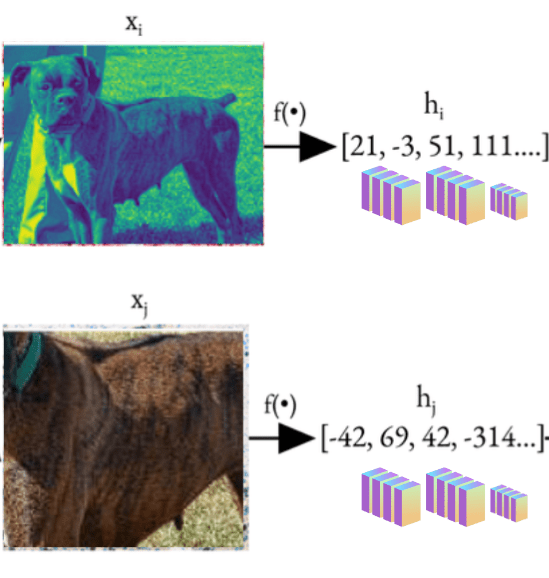

Deep neural network processes the preprocessed input to extract high-level features. This step, too, depends on the data modality as follows –

- For text, transformer encoders learn contextual representations of words or sentences.

- For images, convolutional or transformer-based models learn spatial features.

- For audio, models like Wav2Vec and Whisper learn latent acoustic patterns.

- For videos, spatial features are extracted per frame and then aggregated with temporal models.

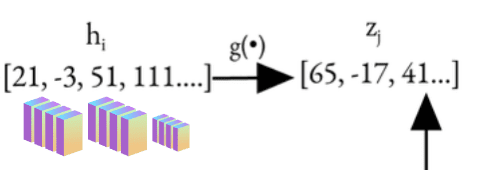

Projection into Embedding Space

The model compresses the extracted features into a fixed-length vector, often using a pooling layer (e.g., mean, max, or CLS token) or a linear projection layer. This vector is the embedding.

Training Objective

Embedding models are usually trained with objectives such as:

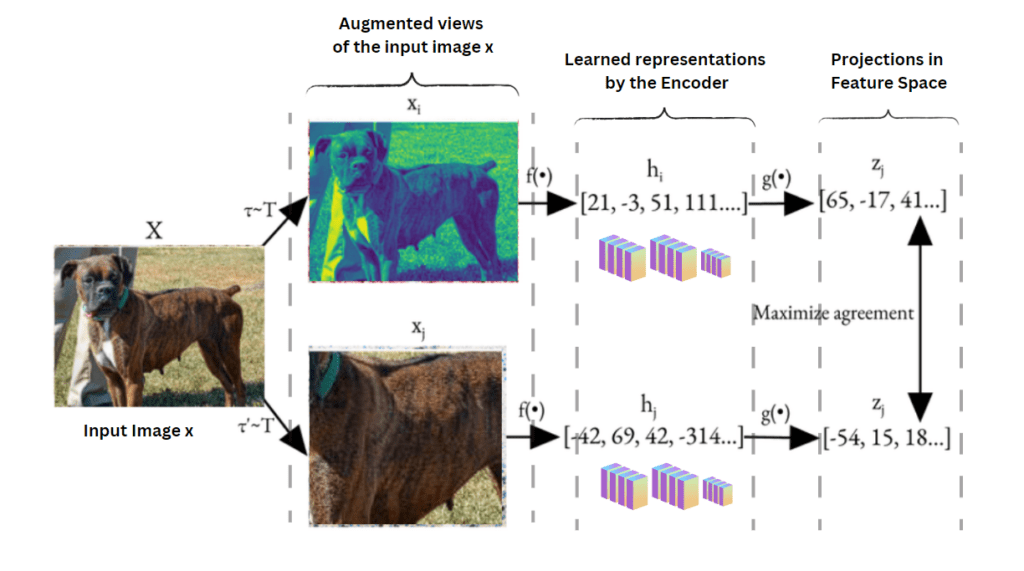

- Contrastive Learning: Pairs of similar inputs (e.g., image-caption pairs) are brought closer in the embedding space, while dissimilar ones are pushed apart.

- Masked Modeling: Predict masked input portions (as in BERT) to encourage contextual understanding.

- Reconstruction: Models like autoencoders attempt to reconstruct the input from its embedding.

- Supervised Classification: Sometimes, embeddings are trained via classification, using the penultimate layer of the network.

- Unsupervised Learning Objective: The model learns to organize data by clustering similar words or images together.

Post-Processing (Optional)

In some cases, embeddings are normalized (e.g., L2-normalization) or dimensionality is reduced using PCA or UMAP for visualization or deployment.

Types of Embedding Models by Modality

Embedding models are tailored to handle various data formats, each with its own unique structure and requirements. Below, we explore how embeddings are generated for different input types, starting with text.

Text Embedding Models

Text embedding models process natural language and produce dense vector representations that capture semantic and syntactic properties. Prominent architectures include:

- BERT (Bidirectional Encoder Representations from Transformers): Trained using masked language modeling and next sentence prediction, BERT captures bidirectional context.

- RoBERTa: An optimized variant of BERT with improved training strategies.

- GPT (Generative Pre-trained Transformer): Primarily generative, GPT can also produce effective embeddings from its intermediate layers.

- Sentence-BERT (SBERT): Fine-tuned specifically for producing sentence-level embeddings suitable for similarity and clustering tasks.

These models are typically trained on large corpora in a self-supervised manner, learning contextual relationships between words, phrases, and sentences.

Image Embedding Models

For images, embedding models convert visual content into vector representations that encode object presence, spatial relationships, texture, and style. Key architectures include:

- CLIP (Contrastive Language-Image Pretraining): Trained on image-text pairs, CLIP maps both modalities into a shared embedding space, enabling cross-modal tasks.

- DINO and SimCLR: Self-supervised contrastive learning models that generate robust visual embeddings without labeled data.

- Vision Transformers (ViT): Treat images as sequences of patches and apply transformer-based attention mechanisms.

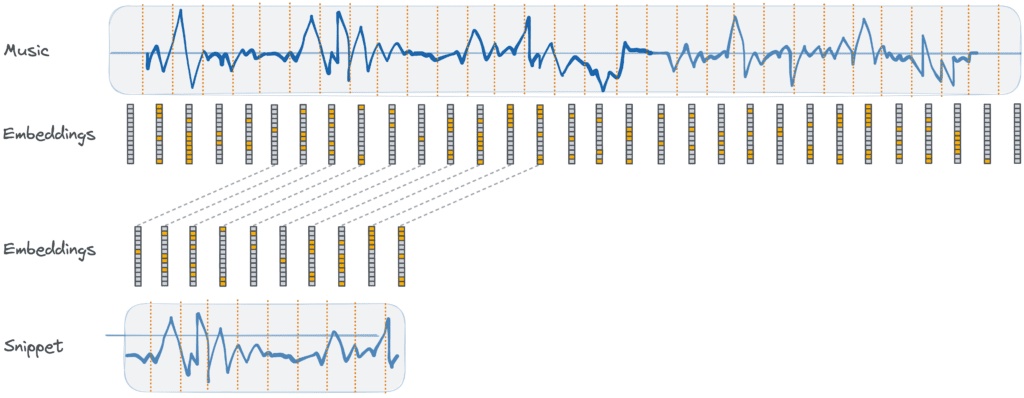

Audio Embedding Models

Audio embedding models process waveforms or spectrograms to create representations that capture phonetic, linguistic, emotional, or acoustic features.

- Wav2Vec 2.0: A self-supervised model by Meta that learns representations from raw audio.

- Whisper: OpenAI’s model for automatic speech recognition, whose intermediate layers can serve as embeddings.

- CLAP (Contrastive Language-Audio Pretraining): Learns audio embeddings aligned with language descriptions.

Audio embeddings are essential for tasks such as speaker identification, emotion detection, and audio classification.

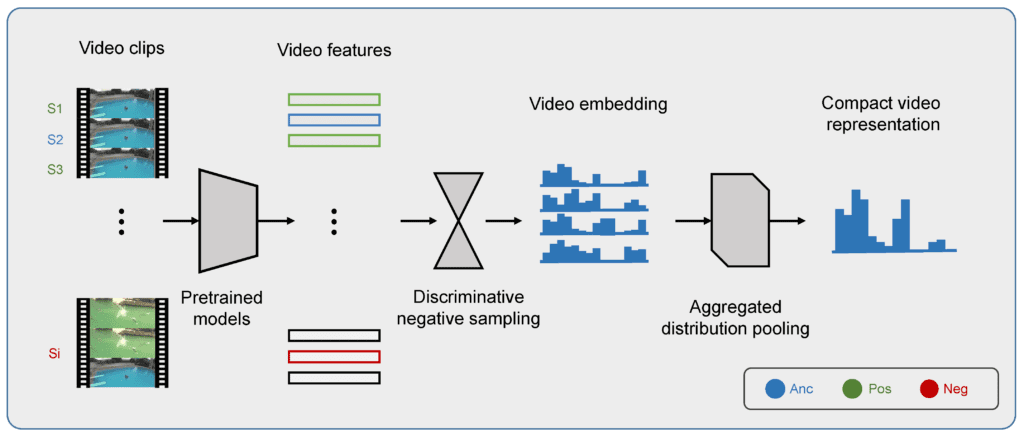

Video Embedding Models

Video embedding models must handle both spatial and temporal dimensions. These embeddings summarize motion, scene changes, and actions.

- VideoBERT: Adapts BERT for joint modeling of video and associated text.

- SlowFast Networks: Combine slow and fast pathways to capture long-term and short-term motion dynamics.

- TimeSformer and ViViT: Transformer-based models that process video frames in a time-aware fashion. It uses pure transformer-based architectures for video classification.

These models often combine frame-level visual embeddings with sequential models to learn rich temporal patterns.

Real-World Applications of Embedding Models

- Search Engines: Power semantic search by retrieving content based on meaning.

- Recommendation Systems: Suggest content based on user or item embeddings (e.g., Spotify, Netflix).

- Audio Analysis: Enable speaker verification, music recommendation, and sound classification.

- Video Understanding: Used for action recognition, scene segmentation, and summarization.

FAQs About Embedding Models

- Q: What tools can I use to explore or visualize embeddings?

You can use tools like UMAP, t-SNE, or PCA for dimensionality reduction and visualization. Libraries like TensorBoard, Plotly, and Weights & Biases support interactive embedding visualizations.

- Q: What’s the difference between traditional features and embeddings?

Unlike handcrafted or sparse traditional features, embedded are learned, dense, and semantic-rich.

- Q: Are embeddings reusable across tasks?

Yes, especially those from foundation models. However, fine-tuning can help for task-specific needs.

- Can embedding models be fine-tuned for custom domains?

Yes. Fine-tuning allows you to specialize a pretrained embedding model on your domain-specific data, which can drastically improve relevance and accuracy in applications like search or classification.

Conclusion

Embedding models are essential in bridging the gap between raw, high-dimensional data and structured, machine-understandable formats. As AI systems continue to evolve, the development of more sophisticated, multimodal embedding techniques will play a critical role in advancing machine understanding across different forms of input. By transforming how we represent data, embedding models are not just tools, they are the foundation of intelligent, adaptive, and scalable AI solutions.

100K+ Learners

Join Free OpenCV Bootcamp3 Hours of Learning