News

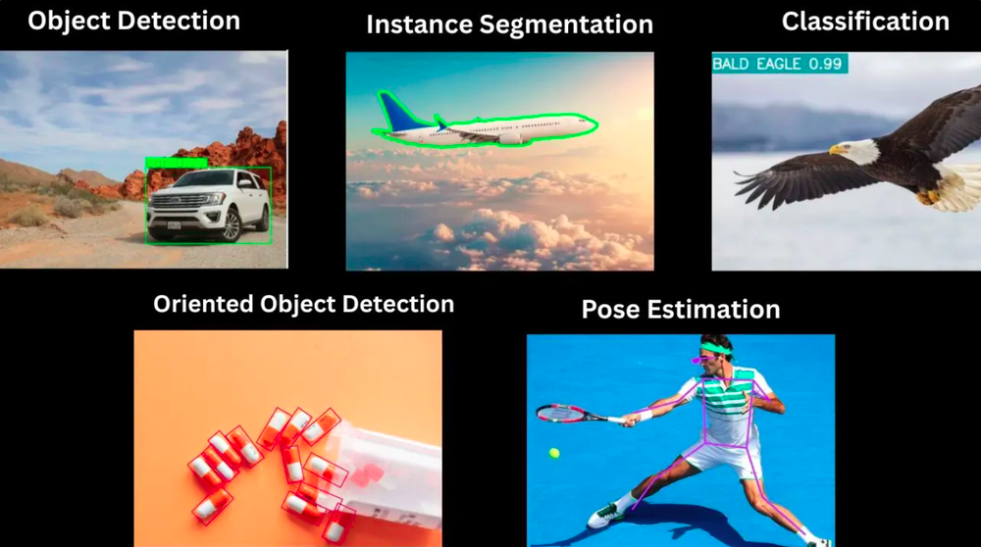

YOLOv26 is an edge-first object detector designed for real-time deployment, featuring NMS-free inference, quantization stability, and predictable latency.

Discover the HOPE architecture, a revolutionary self-modifying AI system that solves catastrophic forgetting and scales to 10 million tokens.

SGLang is a high-performance runtime for structured LLM programs, enabling fast KV cache reuse, parallel execution, and efficient serving.

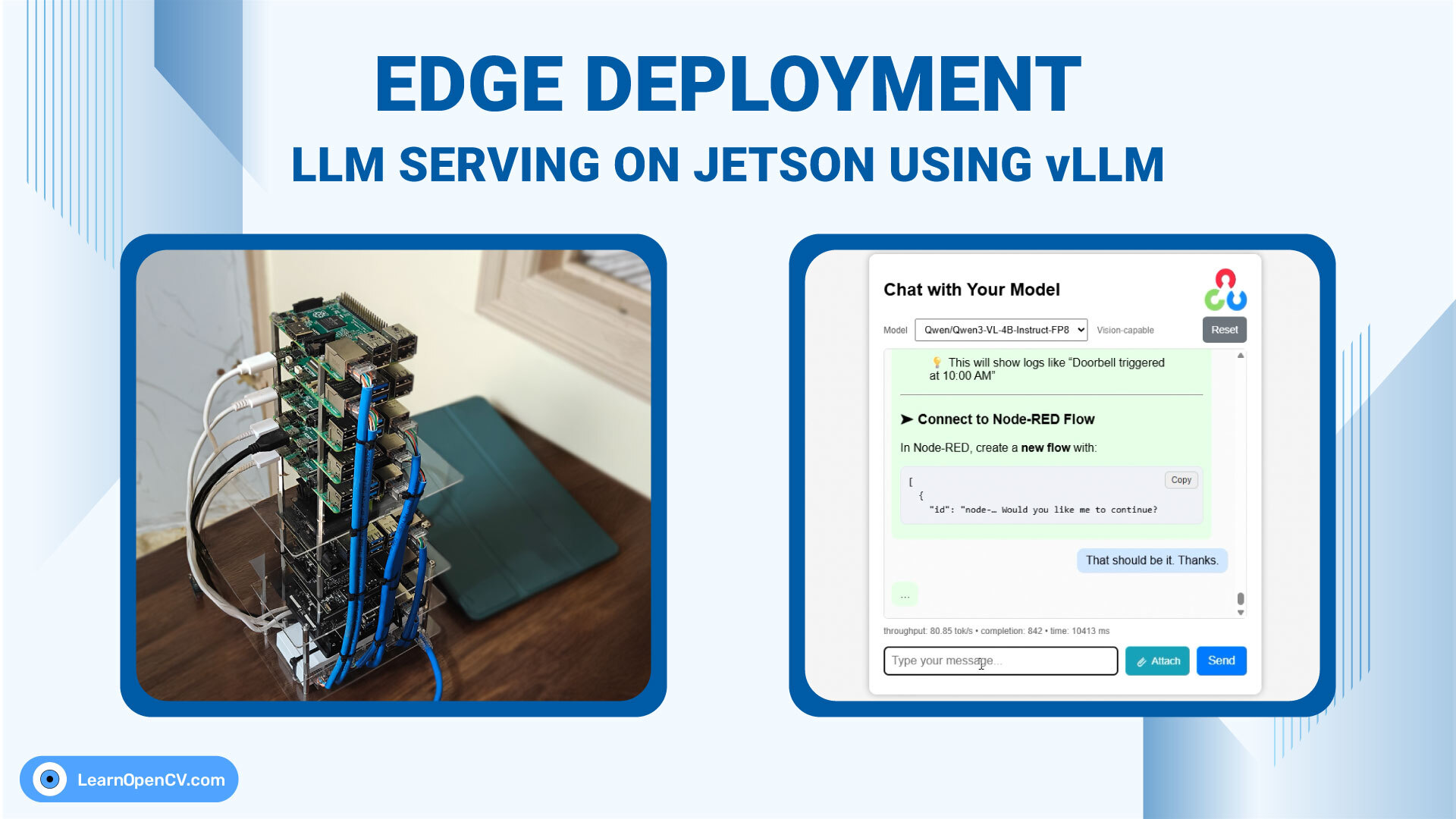

Learn what it really takes to run LLMs on an 8 GB Jetson Orin Nano, covering setup, failures, memory tuning, and a practical comparison between vLLM and llama.cpp. An article

For over a decade, progress in deep learning has been framed as a story of better architectures. Yet beneath this architectural narrative lies a deeper and often overlooked question –

Understanding large GitHub repositories can be time-consuming. Code-Analyser tackles this problem by using an agentic approach to parse and analyze codebases.

Naive Transformers are fine for lab experiments, but not for production. Learn about the major problems associated with autoregressive inference. In this post, we will cover how modern

SAM 3D is Meta’s groundbreaking foundation model for reconstructing full 3D shape, texture, and object layout from a single natural image. Learn how it works.

Yet another SOTA model from Meta: meet SAM-3. Learn what's new and how to implement your own tracking pipeline using SAM-3.

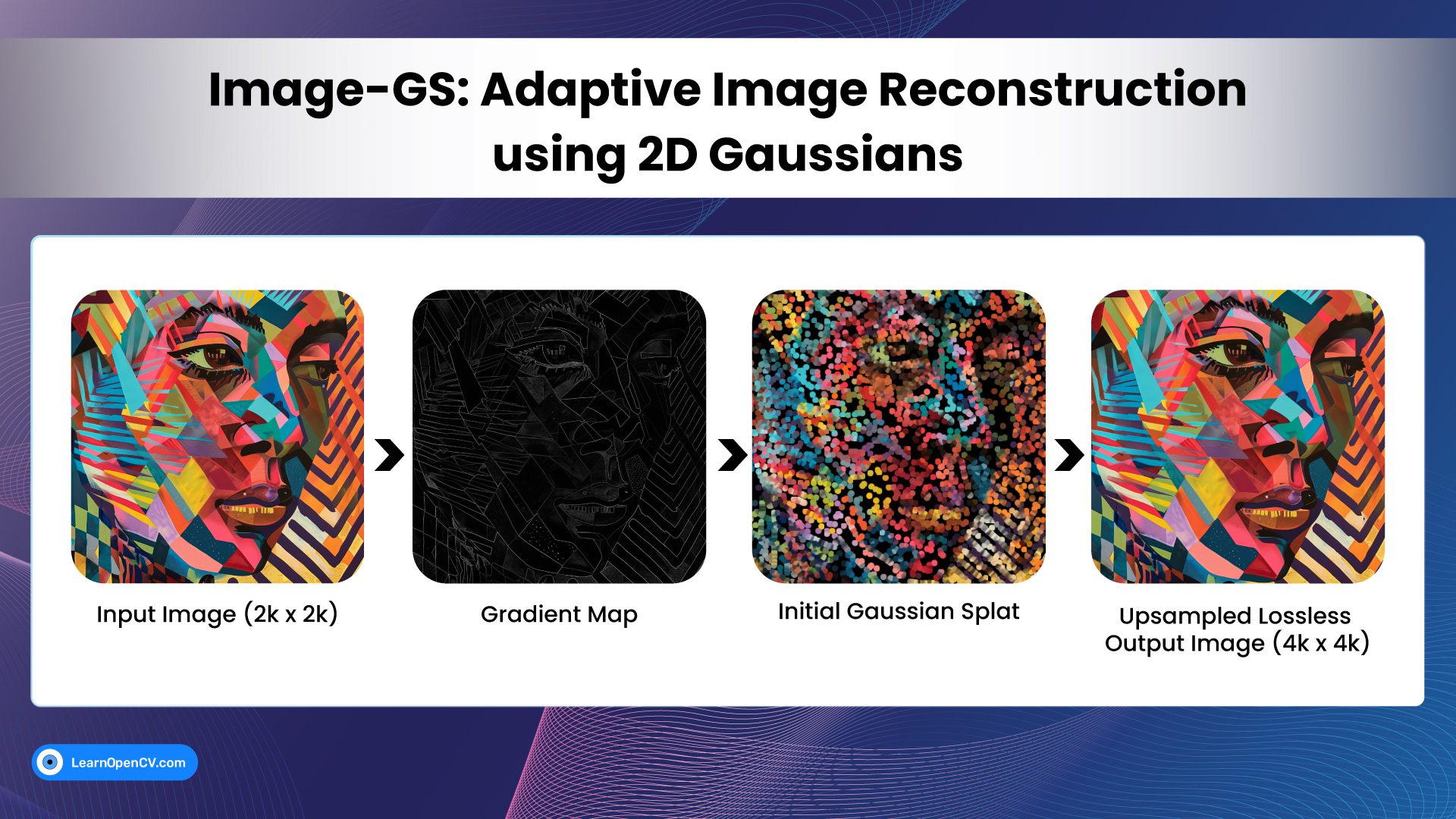

Discover Image-GS, an image representation framework based on adaptive 2D Gaussians, outperforming neural and classical codecs in terms of real-time efficiency.

vLLM Paper Explained. Understand how PagedAttention and continuous batching work, along with the other optimizations vLLM has added over time.

Processing long documents with VLMs or LLMs poses a fundamental challenge: input size exceeds context limits. Even GPUs with as much as 12 GB of memory can barely process 3-4 pages at