The rapid growth of video content has created a need for advanced systems to process and understand this complex data. Video understanding is a critical field in AI, where the goal is to enable machines to comprehend, analyze, and interact with video content, much as humans do.

In this post, we’ll build and analyze two practical pipelines:

- Video Content Moderation with CLIP + Gemini

- Video Summarization with Qwen2.5-VL

Finally, we’ll show how the two pipelines fit together into one end-to-end solution: first ensure a video is safe, then summarize it automatically. This matches real-world use cases in social media, e-learning, corporate training, and compliance.

- The Model Foundations: CLIP, Gemini, and Qwen

- Part 1: Video Content Moderation with CLIP and Gemini

- Part 2: Video Summarization with Qwen2.5-VL

- Part 3: Combining Moderation + Summarization

- Results

- Conclusion

- References

The Model Foundations: CLIP, Gemini, and Qwen

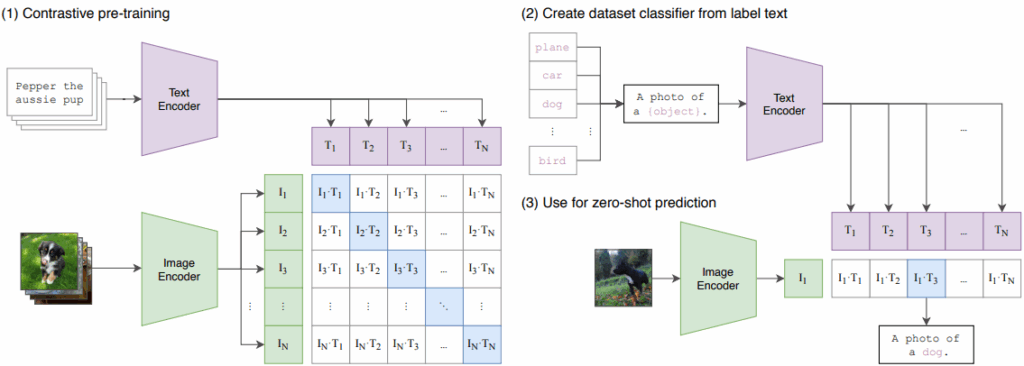

CLIP (Contrastive Language–Image Pretraining)

CLIP, introduced by OpenAI, is trained on 400M image-text pairs. It aligns images and text in the same embedding space:

- If an image and caption match, their embeddings are close.

- If they don’t match, they are pushed apart.

This enables zero-shot classification: you provide natural language prompts (e.g., “this image contains nudity”), and CLIP compares them with the image. No task-specific fine-tuning is needed.
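To make the scoring concrete, here is a minimal pure-Python sketch of CLIP-style zero-shot scoring: normalize the embeddings, take cosine similarities, scale them by a temperature, and apply a softmax over the prompts. It assumes you already have the embeddings; the 3-dimensional vectors in the example are made up (CLIP ViT-B/32 embeddings are 512-dimensional), the helper name `zero_shot_probs` is hypothetical, and the default temperature of 100 is an assumption based on CLIP's learned logit scale, which the CLIP paper caps at 100.

```python
import math


def zero_shot_probs(image_emb, text_embs, logit_scale=100.0):
    """CLIP-style zero-shot scoring of one image embedding against prompt embeddings.

    Hypothetical helper: cosine similarity -> multiply by temperature -> softmax.
    """
    def normalize(v):
        norm = math.sqrt(sum(x * x for x in v))
        return [x / norm for x in v]

    img = normalize(image_emb)
    # One logit per prompt: scaled cosine similarity between image and prompt
    logits = [logit_scale * sum(a * b for a, b in zip(img, normalize(t))) for t in text_embs]
    # Subtract the max logit for a numerically stable softmax
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Toy example: two prompts ("normal", "violence") with made-up embeddings
probs = zero_shot_probs([0.9, 0.1, 0.0], [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
print(probs)
```

With the default temperature nearly all probability lands on the first prompt; with `logit_scale=1.0` the same similarities give roughly 0.71 versus 0.29. The large learned temperature is one reason CLIP's softmax outputs tend to look confident, which matters when choosing a confidence threshold for moderation.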

Strengths for moderation:

- Add/remove categories easily by editing prompts.

- Generalizes to new scenarios without retraining.

- Efficient at inference time.

Gemini

Gemini is Google’s family of large multimodal models. In our moderation pipeline, Gemini is used not to classify but to explain why content was flagged. This explanation layer improves trust, auditability, and regulatory compliance.

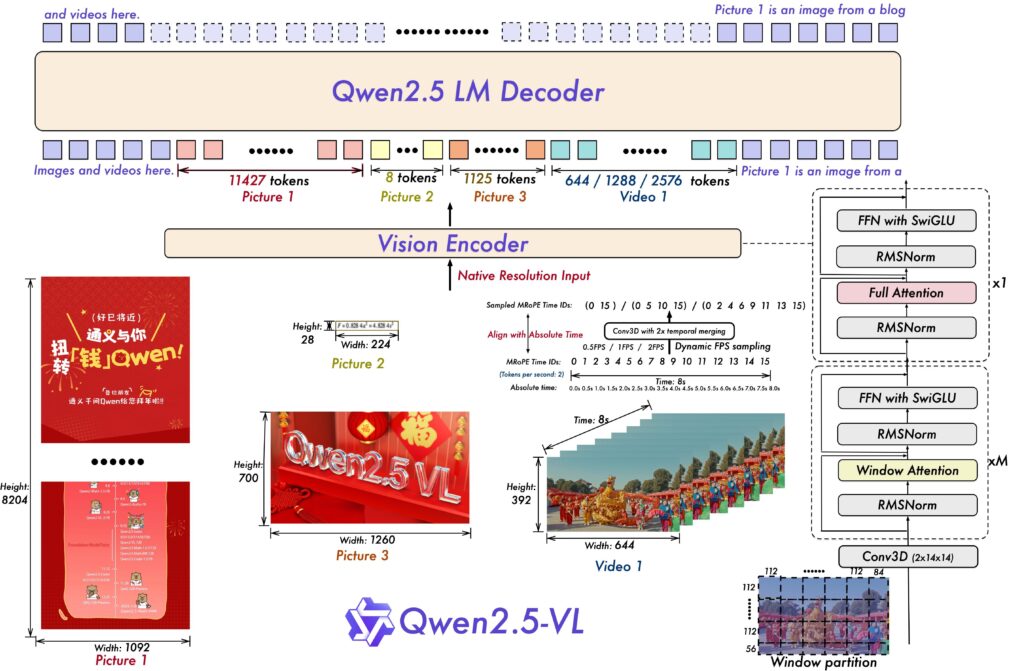

Qwen2.5-VL

Qwen2.5-VL, developed by Alibaba's Qwen team, is a vision-language model built for multimodal tasks. Unlike CLIP, which only aligns images and text in a shared embedding space, Qwen2.5-VL is a generative model: it takes video input and produces text output, such as structured summaries.

Key capabilities:

- Handles videos directly (via sampled frames).

- Instruction-following behavior makes outputs controllable (for example, the format of a summary).

- Can produce both bullet points and paragraph summaries.

Together, these models cover the detect → explain → summarize pipeline we need.

Part 1: Video Content Moderation with CLIP and Gemini

We begin with the moderation script. This script extracts frames from a video, classifies each using CLIP, and then uses Gemini to generate a concise explanation for unsafe content.

Imports

```python
import torch
import gradio as gr
from PIL import Image
import cv2
import google.generativeai as genai
from transformers import CLIPProcessor, CLIPModel
import json
import io
```

- `torch`: tensor operations and GPU acceleration.

- `gradio`: interactive web interface.

- `PIL.Image`: image representation.

- `cv2`: frame extraction from videos.

- `google.generativeai`: Gemini client.

- `CLIPProcessor`, `CLIPModel`: Hugging Face processor and model for CLIP.

- `json`: structured output for flagged frames.

- `io`: in-memory buffers used to encode frames as JPEG before sending them to Gemini.

```python
genai.configure(api_key="...")
```

This configures Gemini with an API key. In production, don't hard-code the key; load it from an environment variable (for example, one defined in a `.env` file).
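A minimal sketch of that pattern, assuming the key is stored in an environment variable named `GOOGLE_API_KEY`. The variable name and the helper `load_gemini_key` are our own choices for illustration, not part of the Gemini SDK.

```python
import os


def load_gemini_key(var_name: str = "GOOGLE_API_KEY") -> str:
    """Hypothetical helper: read the Gemini API key from the environment instead of source code."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        # Fail early with a clear message rather than sending an empty key to the API
        raise RuntimeError(f"Set the {var_name} environment variable before starting the app.")
    return key
```

You would then call `genai.configure(api_key=load_gemini_key())`. If the key lives in a `.env` file, the `python-dotenv` package's `load_dotenv()` can copy it into the environment before this helper runs.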

Loading CLIP

```python
model = CLIPModel.from_pretrained("openai/clip-vit-base-patch32")
processor = CLIPProcessor.from_pretrained("openai/clip-vit-base-patch32")
```

- Loads the CLIP ViT-B/32 model.

- `processor` tokenizes text prompts and preprocesses images.

Extracting Frames

```python
def extract_frames(video_path):
    cap = cv2.VideoCapture(video_path)
    frames = []
    idx = 0
    while True:
        ret, frame = cap.read()
        if not ret:
            break
        rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
        frames.append((idx, Image.fromarray(rgb)))
        idx += 1
    cap.release()
    return frames
```

- Opens a video file with OpenCV.

- Reads each frame, converts it from BGR to RGB, and wraps it as a `PIL.Image`.

- Stores `(frame_index, image)` pairs.

- Returns the full list of frames.

Frame Classification with CLIP

```python
def classify_frame_with_clip(frame: Image.Image):
    text_inputs = [
        "this image is normal",
        "this image contains nudity",
        "this image contains enticing or sensual content",
        "this image contains violence"
    ]
    inputs = processor(text=text_inputs, images=frame, return_tensors="pt", padding=True)
    with torch.no_grad():
        output = model(**inputs)
    probs = output.logits_per_image.softmax(dim=-1)
    confidence, predicted_class = probs.max(dim=-1)
    labels = ["normal", "nudity", "enticing or sensual", "violent"]
    return labels[predicted_class.item()], confidence.item()
```

- Defines candidate labels as natural language.

- Processor encodes text + frame.

- CLIP computes similarity logits.

- `softmax` converts logits to probabilities.

- Returns the most probable label and its confidence.

Explanations with Gemini

```python
def get_gemini_explanation(images: list, flagged_frames_: list) -> str:
    llm = genai.GenerativeModel("gemini-2.5-flash")
    image_parts = []
    for img in images:
        buffer = io.BytesIO()
        img.save(buffer, format="JPEG")
        image_parts.append({"mime_type": "image/jpeg", "data": buffer.getvalue()})
    prompt = f"""
You are given a set of image frames ... {json.dumps(flagged_frames_, indent=2)}
Analyze the flagged images and explain clearly in no more than 2 lines.
"""
    response = llm.generate_content([prompt] + image_parts)
    return response.parts[0].text.strip()
```

- Converts flagged frames into JPEG bytes.

- Builds a prompt with metadata + images.

- Gemini generates a concise explanation.

Moderation Orchestration

```python
def moderate_video(video_file):
    frames = extract_frames(video_file)
    flagged_frames, img_list = [], []
    for idx, img in frames:
        label, confidence = classify_frame_with_clip(img)
        # Flag frames whose top label is unsafe and whose confidence clears the threshold
        if label != "normal" and confidence > 0.5:
            flagged_frames.append({"frame_id": idx, "classification": label, "confidence": confidence})
            img_list.append(img)
    if not flagged_frames:
        return "All frames are appropriate.", "None", {}
    explanation = get_gemini_explanation(img_list, flagged_frames)
    # Share of all frames that were flagged, formatted for the Gradio textbox
    final_perct = (len(img_list) / len(frames)) * 100
    return explanation, f"{final_perct:.2f}%", flagged_frames
```

- Extracts frames.

- Classifies each frame.

- Stores flagged frames above confidence threshold.

- If unsafe, asks Gemini for explanation.

- Computes flagged percentage.
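The thresholding and percentage steps above do not depend on CLIP or Gemini, so they can be pulled out and unit-tested on their own. The sketch below is a hypothetical refactor (the name `summarize_predictions` and its signature are ours): it takes per-frame `(frame_id, label, confidence)` predictions and returns the flagged-frame records in the same shape `moderate_video` builds, plus the flagged percentage.

```python
def summarize_predictions(predictions, threshold=0.5, safe_label="normal"):
    """Hypothetical helper: turn per-frame predictions into flagged frames and a percentage.

    predictions: iterable of (frame_id, label, confidence) tuples.
    """
    preds = list(predictions)
    # Same rule as moderate_video: unsafe label AND confidence above the threshold
    flagged = [
        {"frame_id": fid, "classification": label, "confidence": conf}
        for fid, label, conf in preds
        if label != safe_label and conf > threshold
    ]
    # Guard against empty input (e.g. an unreadable video) to avoid dividing by zero
    pct = 100.0 * len(flagged) / len(preds) if preds else 0.0
    return flagged, pct
```

For example, four frames where two are confidently labeled "violent" and one is a low-confidence "nudity" prediction yield two flagged frames and 50.0 percent. Keeping this logic model-free makes it easy to tune `threshold` against labeled clips without re-running CLIP.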

Gradio Interface

```python
iface = gr.Interface(
    fn=moderate_video,
    inputs=gr.Video(label="Upload a Video"),
    outputs=[
        gr.Textbox(label="Why is it Flagged?"),
        gr.Textbox(label="NSFW Percentage"),
        gr.JSON(label="Flagged Frames")
    ],
    title="Video Content Moderation with CLIP and Gemini",
    description="Detects unsafe content in a video and provides reasoning."
)

iface.launch(share=True)
```

- Interactive demo with video upload.

- Shows explanation, percentage, and JSON.

Efficiency Improvements

Currently, CLIP re-encodes text prompts for every frame. Since prompts are fixed, we can precompute embeddings:

```python
with torch.no_grad():
    text_inputs = ["this image is normal", "this image contains nudity", "this image contains enticing or sensual content", "this image contains violence"]
    text_batch = processor(text=text_inputs, return_tensors="pt", padding=True)
    text_embeds = model.get_text_features(**text_batch)
    text_embeds = text_embeds / text_embeds.norm(dim=-1, keepdim=True)
```

For each frame, compute only the image embedding, then compare with cached text embeddings. This reduces redundant compute, especially for long videos.
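A sketch of that comparison step, which also covers batched inference: it scores a whole batch of frames at once against the cached, normalized `text_embeds`. The function name `classify_batch` is hypothetical, and the default `logit_scale=100.0` is an assumption; with a loaded model you can pass `model.logit_scale.exp().item()` instead.

```python
import torch


def classify_batch(image_embeds: torch.Tensor, text_embeds: torch.Tensor,
                   labels, logit_scale: float = 100.0):
    """Hypothetical helper: classify a batch of frame embeddings against cached text embeddings.

    image_embeds: (num_frames, dim); text_embeds: (num_prompts, dim), already L2-normalized.
    Returns one (label, confidence) pair per frame.
    """
    # Normalize so the dot product below is a cosine similarity
    image_embeds = image_embeds / image_embeds.norm(dim=-1, keepdim=True)
    # (num_frames, dim) @ (dim, num_prompts) -> (num_frames, num_prompts)
    logits = logit_scale * image_embeds @ text_embeds.T
    probs = logits.softmax(dim=-1)
    confidence, idx = probs.max(dim=-1)
    return [(labels[i], c) for i, c in zip(idx.tolist(), confidence.tolist())]
```

In the moderation script, `image_embeds` would come from `model.get_image_features(**processor(images=batch_of_frames, return_tensors="pt"))` under `torch.no_grad()`, mirroring how the text features are computed above. Processing frames in batches of, say, 32 amortizes per-call overhead, and the text encoder no longer runs per frame at all.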

Part 2: Video Summarization with Qwen2.5-VL

Now that we can detect unsafe content, let’s summarize safe videos with Qwen2.5-VL.

Imports

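```python
import json
from pathlib import Path

import torch
from transformers import AutoProcessor, Qwen2_5_VLForConditionalGeneration
from qwen_vl_utils import process_vision_info
```

- `AutoProcessor`: handles multimodal input encoding.

- `Qwen2_5_VLForConditionalGeneration`: the Qwen2.5-VL model class; we load its instruction-tuned checkpoint for summarization.

- `process_vision_info`: prepares video data for the model.

- `json`, `Path`: parsing the model output and handling file paths.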

Summarization Function

```python
def summarize_video(video_path: str, max_new_tokens: int = 256) -> str:
    model_id = "Qwen/Qwen2.5-VL-3B-Instruct"
    model = Qwen2_5_VLForConditionalGeneration.from_pretrained(model_id, torch_dtype="auto", device_map="auto")
    processor = AutoProcessor.from_pretrained(model_id)
    vpath = Path(video_path).resolve()
    messages = [{
        "role": "user",
        "content": [
            {"type": "video", "video": f"file://{vpath}"},
            {"type": "text", "text": (
                "Summarize this video. Return ONLY valid JSON with two keys:\n"
                '"bullets": a list of 5-7 concise bullet points,\n'
                '"paragraph": a short 120-word paragraph summary.\n'
            )},
        ],
    }]
```

- Loads Qwen model + processor.

- Builds a chat-style message with video and summarization instructions.

Preprocessing and Generation

```python
    # (continued inside summarize_video)
    text = processor.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
    image_inputs, video_inputs = process_vision_info(messages)
    inputs = processor(text=[text], images=image_inputs, videos=video_inputs, return_tensors="pt").to(model.device)
    with torch.no_grad():
        output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated tokens are decoded
    trimmed = [out_ids[len(in_ids):] for in_ids, out_ids in zip(inputs.input_ids, output_ids)]
    out_text = processor.batch_decode(trimmed, skip_special_tokens=True)[0]
    return out_text
```

- Converts messages into model-ready inputs.

- Runs generation, then strips the prompt tokens from each output sequence.

- Decodes the result into raw text (expected, but not guaranteed, to be JSON).
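Video input is the most expensive part of this call. The example messages in the Qwen2.5-VL model card show video entries that also accept optional `fps` and `max_pixels` keys to control how many frames are sampled and at what resolution. The sketch below builds the same message as `summarize_video` with those keys added; the helper name `build_video_messages` and the default values (1 fps, `360 * 420` pixels) are assumptions to tune, not recommended settings.

```python
from pathlib import Path


def build_video_messages(video_path, prompt, fps=1.0, max_pixels=360 * 420):
    """Hypothetical helper: chat message for Qwen2.5-VL with explicit video sampling options."""
    vpath = Path(video_path).resolve()
    return [{
        "role": "user",
        "content": [
            # Fewer frames per second and fewer pixels per frame mean fewer visual tokens
            {"type": "video", "video": f"file://{vpath}", "fps": fps, "max_pixels": max_pixels},
            {"type": "text", "text": prompt},
        ],
    }]
```

The returned list can replace `messages` inside `summarize_video`; `process_vision_info(messages)` reads these options when it samples the video. Lower values cut memory and latency at the cost of missing short events.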

Parsing JSON

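```python
def try_parse_json(text: str):
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        import re
        # Fall back to the outermost {...} span, e.g. when the JSON is wrapped in prose or a code fence
        m = re.search(r"\{[\s\S]*\}", text)
        if not m:
            return None
        try:
            return json.loads(m.group(0))
        except json.JSONDecodeError:
            return None
```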

- First attempts a strict `json.loads`.

- Falls back to regex extraction, returning `None` if no valid JSON is found.
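Parsing only checks JSON syntax; the model can still return valid JSON with the wrong shape (for example, `bullets` as a single string). A minimal sketch that parses and also validates the two keys the prompt asks for; the name `parse_summary` is hypothetical, and the choice to reject anything off-schema (rather than repair it) is ours.

```python
import json


def parse_summary(text: str):
    """Hypothetical helper: return {"bullets": [...], "paragraph": "..."} or None."""
    # Take the outermost {...} span; this also skips ```json fences and stray prose
    start, end = text.find("{"), text.rfind("}")
    if start == -1 or end <= start:
        return None
    try:
        obj = json.loads(text[start:end + 1])
    except json.JSONDecodeError:
        return None
    bullets, paragraph = obj.get("bullets"), obj.get("paragraph")
    # Check the schema requested in the prompt, not just JSON syntax
    if not (isinstance(bullets, list) and all(isinstance(b, str) for b in bullets)):
        return None
    if not isinstance(paragraph, str):
        return None
    return obj
```

A `None` result is a natural trigger for a retry with a stricter prompt or a larger `max_new_tokens`, since truncated generations are a common cause of unparseable output.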

Writing Outputs

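```python
def write_outputs(obj_or_text, out_path: Path):
    if isinstance(obj_or_text, dict) and "bullets" in obj_or_text and "paragraph" in obj_or_text:
        bullets = obj_or_text["bullets"]
        paragraph = obj_or_text["paragraph"]
        md = ["# Video Summary", "", "## Key Points"]
        md.extend([f"- {b}" for b in bullets])
        md.append("")
        md.append("## Short Summary")
        md.append(paragraph)
        out_path.write_text("\n".join(md), encoding="utf-8")
        # Machine-readable copy next to the Markdown file (summary.md -> summary.json)
        out_path.with_suffix(".json").write_text(
            json.dumps(obj_or_text, indent=2, ensure_ascii=False), encoding="utf-8"
        )
    else:
        # Parsing failed: keep the raw model output so nothing is lost
        out_path.write_text(str(obj_or_text), encoding="utf-8")
```

When parsing succeeds, the summary is saved both as Markdown (human-readable) and JSON (machine-readable); otherwise the raw model output is written as-is.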

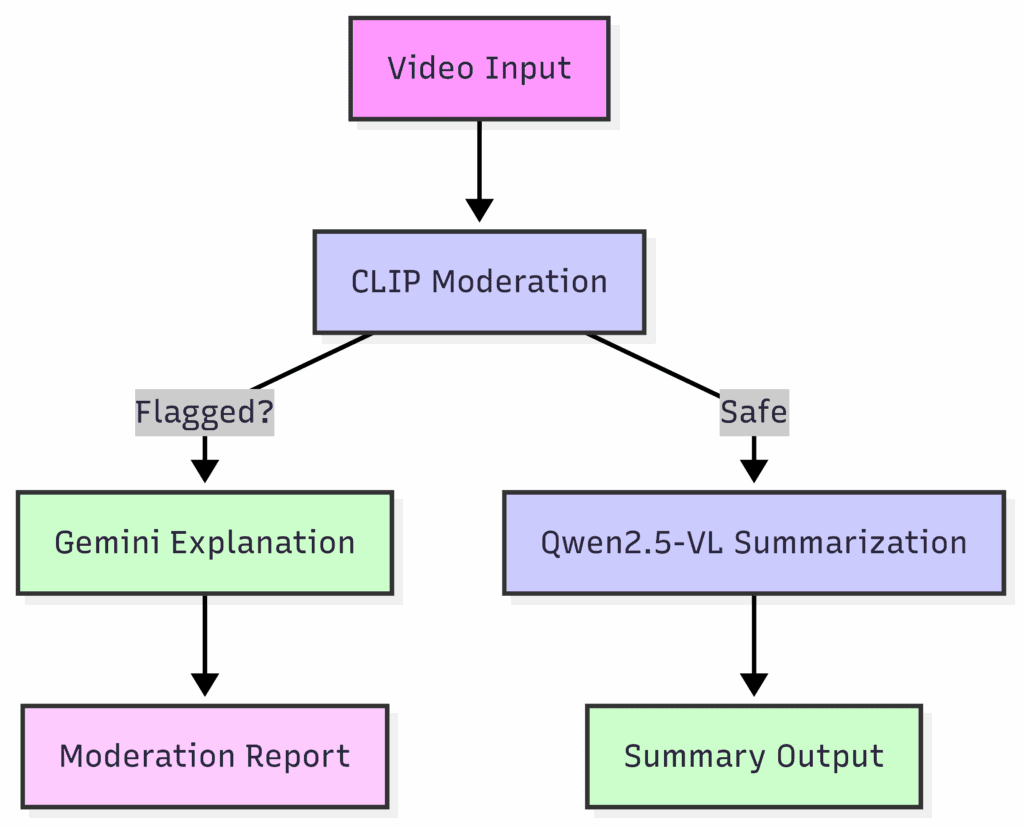

Part 3: Combining Moderation + Summarization

The moderation and summarization scripts can also be chained into a single pipeline, following the flow diagram: each video is moderated first, and only videos with no flagged frames are passed on for summarization.
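A minimal sketch of that gate, assuming the return shapes used earlier: a moderation function returning `(explanation, flagged_percentage, flagged_frames)` like `moderate_video`, and a summarization function returning text like `summarize_video`. The name `moderate_then_summarize` and the result dictionary are hypothetical; passing the two steps in as callables keeps the gating logic testable without loading any model.

```python
from typing import Any, Callable, Dict


def moderate_then_summarize(video_path: str,
                            moderate: Callable[[str], tuple],
                            summarize: Callable[[str], str]) -> Dict[str, Any]:
    """Hypothetical orchestrator: summarize a video only if moderation flags no frames."""
    explanation, flagged_pct, flagged_frames = moderate(video_path)
    if flagged_frames:
        # Unsafe: stop here and return the moderation result instead of a summary
        return {
            "status": "blocked",
            "reason": explanation,
            "flagged_percentage": flagged_pct,
            "flagged_frames": flagged_frames,
        }
    # Safe: run the (more expensive) summarization step
    return {"status": "summarized", "summary": summarize(video_path)}
```

In practice you would call `moderate_then_summarize(path, moderate_video, summarize_video)`. Gating on any flagged frame is the strictest policy; a platform could instead compare the flagged percentage against a tolerance.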

Why this Matters:

- Platforms: Stop unsafe content before distribution.

- Users: Quickly understand safe videos through summaries.

- Businesses: Compliance + accessibility in one workflow.

Performance Considerations

- Precompute text embeddings (moderation).

- Sample frames at intervals (e.g., 1 fps) instead of decoding every frame.

- Batch inference for CLIP.

- Ask Qwen for strict JSON in the prompt to make parseable output more likely, and still validate the result, since a prompt alone cannot guarantee valid JSON.
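Frame sampling is the largest single saving, since `extract_frames` decodes and keeps every frame. Below is a sketch of a sampled variant, assuming roughly one frame per second is enough for moderation. The names `frame_step` and `extract_frames_sampled` are hypothetical, and falling back to every frame when OpenCV reports an FPS of 0 is our choice.

```python
def frame_step(native_fps: float, target_fps: float = 1.0) -> int:
    """Hypothetical helper: how many frames to advance between kept samples (at least 1)."""
    if native_fps <= 0 or target_fps <= 0:
        return 1  # FPS unknown (some containers report 0): keep every frame
    return max(1, round(native_fps / target_fps))


def extract_frames_sampled(video_path: str, target_fps: float = 1.0):
    """Like extract_frames, but keeps roughly target_fps frames per second of video."""
    # Imported here so frame_step can be used without OpenCV or Pillow installed
    import cv2
    from PIL import Image

    cap = cv2.VideoCapture(video_path)
    step = frame_step(cap.get(cv2.CAP_PROP_FPS), target_fps)
    frames, idx = [], 0
    while True:
        # grab() advances the stream; retrieve() returns an image only for frames we keep
        if not cap.grab():
            break
        if idx % step == 0:
            ret, frame = cap.retrieve()
            if ret:
                rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
                frames.append((idx, Image.fromarray(rgb)))
        idx += 1
    cap.release()
    return frames
```

Frame indices keep their original values, so `frame_id` in the flagged-frames JSON still points into the full video. Note that the flagged percentage then becomes a share of sampled frames rather than of all frames.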

Future Directions

- Streaming moderation for live video.

- Unified models (e.g., Qwen Omni) to handle both moderation + summarization in one step.

- Customization: define additional categories (e.g., political, medical, or other sensitive content).

- Scalability: Deploy with ONNX/TensorRT for efficiency.

Results

The video above shows the Gradio application built for content moderation. Next to the video input, three output panels display the reason the video was flagged (if any content was flagged), the percentage of flagged frames in the whole video, and the frames predicted to contain NSFW content, each with its confidence score.

The video above was used as input to the video summarization script. The script writes two files (.md and .json); the resulting summary is shown below:

```json
{
  "bullets": [
    "Cyclists in colorful jerseys are riding in a tight formation on a city street.",
    "The cyclists are pedaling vigorously, maintaining a synchronized pace.",
    "Onlookers line the street, some taking photos and others watching the race intently.",
    "The scene is bustling with activity as the cyclists approach a curve in the road.",
    "A black Audi SUV with 'Dubai Tour' branding drives past, followed by a white police vehicle.",
    "The cyclists continue their ride, navigating the curve with precision and speed.",
    "The crowd remains engaged, capturing the moment with their cameras and phones."
  ],
  "paragraph": "The video captures an exhilarating cycling race through a vibrant city street, where cyclists in colorful jerseys maintain a tight formation as they navigate a curve. The scene is lively, with spectators lining the street, some taking photos and others watching the race intently. A black Audi SUV and a white police vehicle drive past, adding to the dynamic atmosphere. The cyclists continue their ride, showcasing their skill and determination as they maneuver around the curve."
}
```

Conclusion

We built a practical, end-to-end video AI pipeline:

- Moderation with CLIP + Gemini – detects unsafe frames and explains why.

- Summarization with Qwen2.5-VL – produces concise bullet points and a paragraph.

- Unified pipeline – ensuring only safe content gets summarized.

By combining classification, explanation, and summarization, this system delivers safety, interpretability, and accessibility for the overwhelming volume of video content online.

This pipeline demonstrates how multimodal AI can transform content workflows, paving the way for real-world deployments in social platforms, education, and enterprise compliance.

References

- CLIP documentation (Hugging Face Transformers): https://huggingface.co/docs/transformers/en/model_doc/clip

- Gemini API reference, generateContent: https://ai.google.dev/api/generate-content

- Qwen2.5-VL-3B-Instruct model card (Hugging Face): https://huggingface.co/Qwen/Qwen2.5-VL-3B-Instruct
