Processing lengthy documents with large language models (LLMs) or vision-language models (VLMs) poses a fundamental challenge: the input exceeds the model's context limit. Even a GPU with 12 GB of memory can barely process 3-4 pages at a time. Vector databases and RAG (Retrieval-Augmented Generation) address this by enabling semantic retrieval at scale. In this article, we will explore how to leverage vector DBs to build a robust document-processing RAG pipeline.

Objective: Process very long documents with the Qwen3-VL 4B model and run tasks like the following:

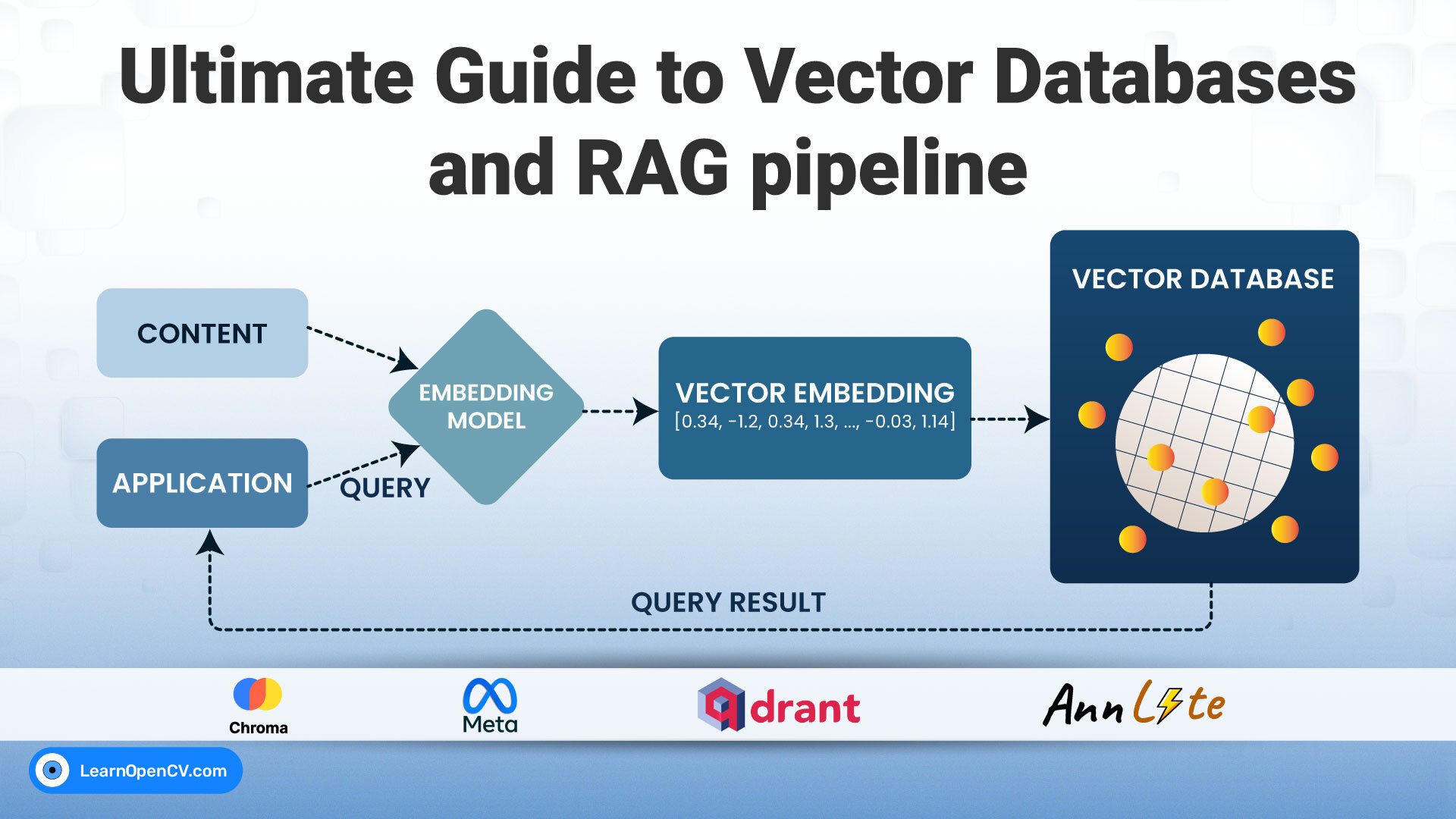

What Is A Vector Database?

A vector database is a specialized storage system designed to efficiently manage and query high-dimensional numerical embeddings: dense vectors that represent the semantic meaning of text, images, and more. Unlike traditional databases that rely on exact keyword matches, vector databases use approximate nearest neighbor (ANN) search to retrieve the most semantically similar items, which keeps similarity search fast as the collection grows.
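To make the idea of similarity search concrete, here is a minimal sketch of exact (brute-force) nearest-neighbor search with cosine similarity. The three-dimensional vectors and the names cosine_similarity and nearest are illustrative assumptions, not part of any vector DB API. ANN indexes aim to return the same kind of answer without scoring every stored vector.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(query, vectors):
    """Exact nearest neighbor: score every stored vector and keep the best key."""
    return max(vectors, key=lambda name: cosine_similarity(query, vectors[name]))


# Hypothetical 3-dimensional "embeddings"; real ones have hundreds of dimensions.
stored = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.8, 0.2, 0.1],
    "invoice": [0.0, 0.1, 0.9],
}
query = [1.0, 0.0, 0.0]
best = nearest(query, stored)
print(best)
```

Brute force scales linearly with the number of stored vectors, which is the cost that ANN indexes are designed to avoid.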

How Is It Used in the Context of RAG?

Large PDFs and long documents overwhelm vision-language models with excessive tokens and high cost. A RAG pipeline with a vector database fixes this by splitting documents into small chunks, embedding them once, and storing them with page metadata. When a user asks a question, the system searches only the most relevant parts in milliseconds. This keeps input tiny, avoids context limits, cuts inference time, and saves GPU memory.

In a RAG pipeline, the vector database handles retrieval. It stores document chunks as embeddings (from models like EmbeddingGemma), and each chunk carries metadata such as page numbers or file names.

When we ask a question:

- The query turns into a vector.

- The database finds the top-k closest matches.

- Results return in milliseconds, even from millions of entries.
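The three steps above can be sketched in plain Python. Here toy_embed is a hypothetical stand-in for a real embedding model (it only counts words from a tiny fixed vocabulary), and the chunks and page numbers are made up for illustration.

```python
import heapq
import math

VOCAB = ["resolution", "dynamic", "training", "benchmark", "video"]


def toy_embed(text):
    """Hypothetical embedding: unit-length word counts over VOCAB."""
    words = text.lower().split()
    counts = [float(words.count(term)) for term in VOCAB]
    norm = math.sqrt(sum(c * c for c in counts)) or 1.0
    return [c / norm for c in counts]


def top_k(query, index, k=2):
    """Embed the query, score every entry, and return the k best (score, payload) pairs."""
    q = toy_embed(query)
    scored = [
        (sum(a * b for a, b in zip(q, entry["vector"])), entry["payload"])
        for entry in index
    ]
    return heapq.nlargest(k, scored, key=lambda pair: pair[0])


chunks = [
    {"text": "dynamic resolution handles images of any size", "page": 3},
    {"text": "training data and training schedule", "page": 7},
    {"text": "video benchmark results", "page": 12},
]
# Each stored entry pairs a vector with its payload (text plus page metadata).
index = [{"vector": toy_embed(c["text"]), "payload": c} for c in chunks]

results = top_k("how does dynamic resolution work", index, k=2)
for score, payload in results:
    print(f"page {payload['page']}: score {score:.2f}")
```

A real vector DB replaces the linear scan in top_k with an ANN index, which is what keeps latency low at millions of entries.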

Popular options:

- Qdrant: reliable, great with metadata

- Chroma: simple, easy to use

- FAISS: fast similarity-search library from Meta (Facebook) AI Research

This keeps input small, inference fast, and costs low, without losing accuracy. In this blog post, we use all three of these vector DBs but work primarily with Qdrant. Notebooks with examples for the other databases are included in the download code link.

Why use Qdrant vector DB for our application?

Qdrant is an open-source vector DB written in Rust for high performance and reliability. We chose it mainly for its stronger metadata support. A few of its other advantages are as follows.

- Payload filtering and indexing

- Hybrid search support

- Multiple vectors per object

- Production-ready capabilities
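As a rough illustration of why payload filtering matters, the sketch below restricts candidates by page metadata before ranking them by similarity. It is a conceptual, pure-Python sketch with made-up points and a hypothetical filtered_search helper; it does not use Qdrant's API or reflect how Qdrant implements filtering internally.

```python
def filtered_search(query_vec, points, page_range, k=2):
    """Keep only points whose page lies in page_range, then rank them by dot product."""
    low, high = page_range
    candidates = [p for p in points if low <= p["payload"]["page"] <= high]
    ranked = sorted(
        candidates,
        key=lambda p: sum(a * b for a, b in zip(query_vec, p["vector"])),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical points: an id, a 2-dimensional vector, and a payload with page metadata.
points = [
    {"id": 1, "vector": [1.0, 0.0], "payload": {"page": 2}},
    {"id": 2, "vector": [0.9, 0.1], "payload": {"page": 15}},
    {"id": 3, "vector": [0.2, 0.8], "payload": {"page": 16}},
]

hits = filtered_search([1.0, 0.0], points, page_range=(10, 20))
print([p["id"] for p in hits])
```

Point 1 is the closest vector overall, but it falls outside the requested page range, so it never reaches the ranking step.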

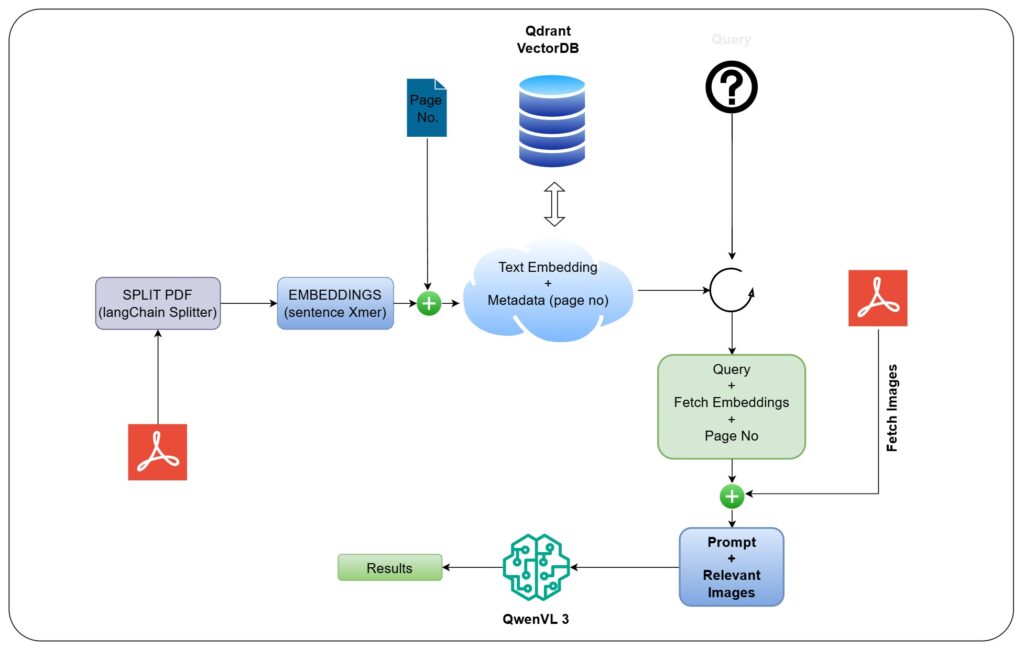

Document RAG Application Overview

Earlier, we used ready-made RAG pipelines for processing long videos. This time, we build one from scratch.

As shown in the image above, we convert the PDF into embeddings and store them in the vector database. At query time, the RAG pipeline runs a similarity search and returns the relevant text chunks along with their page numbers. Using those page numbers, we render the corresponding PDF pages as images and pass the query, the retrieved text chunks, and the page images to the VLM.

Imagine processing a 120-page PDF just to retrieve a single answer. With this pipeline, the input is narrowed down to the specific paragraphs and the 3-4 relevant pages.

Setup for Document RAG using Vector DB and RAG

We are using the following systems to test our RAG pipeline.

- 12 GB RTX 3060, Windows 11, 16 GB RAM

- 12 GB RTX 3080 Ti, Ubuntu 20.04, 64 GB RAM

Models used:

- Embedding: Sentence Transformers – all-MiniLM-L6-v2

- Vision Language Model: Qwen3-VL 4B Instruct – FP16

- Small LLM fallback: Google FLAN-T5

Toolset:

- langchain

- fitz

- huggingface

- Qdrant

Why Not Use a Text‐Only LLM?

We could extract the text, embed it, retrieve the relevant chunks, and feed them into a text-only LLM, but that approach carries these risks:

- Table layout is flattened: “Model A 85.2% Top-1” may become “Model A 85.2”.

- Caption separation: the figure caption may not be aligned to the figure itself.

- Visual cues ignored: bar-plots, axes, colors are lost.

For benchmark‐centric summarization and citation‐style output, we want the model to see the original page.

Document RAG: Complete Code Walkthrough

The architecture described above is implemented with a combination of specialized libraries: Qdrant for high-performance vector storage, LangChain for document loading, splitting, and vector-store integration, PyMuPDF (imported as fitz) for rendering pages as images, and the Hugging Face transformers ecosystem for Qwen3-VL encoding and generation.

Below, we walk through the Python components, demonstrating how we ingest documents, retrieve context (both text and visual), and synthesize a grounded answer.

1. Setup and Ingestion Preparation

The first phase establishes the environment, loads the target document, and prepares the data for vectorization.

Import Dependencies for Vector DB and RAG

import os
from typing import Any, Dict, List  # type hints used by the functions below

import fitz  # PyMuPDF
from PIL import Image
from transformers import Qwen3VLForConditionalGeneration, AutoProcessor
from langchain_community.embeddings import HuggingFaceEmbeddings
from langchain_community.document_loaders import PyPDFLoader
from langchain_text_splitters import RecursiveCharacterTextSplitter  # needed for chunking
from langchain_qdrant import QdrantVectorStore
from qdrant_client import QdrantClient
from qdrant_client.http.models import Distance, VectorParams

Block 2 (Ingestion Setup – Part 1: Loading and Splitting)

PDF_PATH = "Dataset/QwenVL2.5.pdf"
STORAGE_PATH = "qdrant_storage"
COLLECTION_NAME = "pdf_rag"

loader = PyPDFLoader(PDF_PATH)
documents = loader.load()

# Adjust metadata (crucial for grounding later)
for doc in documents:
    # Ensure 1-based page indexing for human readability/grounding
    doc.metadata["page"] = doc.metadata.get("page", 0) + 1

splitter = RecursiveCharacterTextSplitter(chunk_size=256, chunk_overlap=50)
docs = splitter.split_documents(documents)

We iterate through the documents to convert the page metadata to a 1-based index. This metadata is the primary link between the text chunks stored in Qdrant and the page images later passed to Qwen3-VL. RecursiveCharacterTextSplitter then breaks the raw text into manageable chunks of up to 256 characters, with a 50-character overlap, for embedding. Note that chunk_size is measured in characters by default, not tokens.
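To see what chunk_size and chunk_overlap mean in practice, here is a simplified sliding-window chunker that also carries the page number along with each chunk. It is only a stand-in: the hypothetical chunk_page helper cuts at fixed character offsets, whereas RecursiveCharacterTextSplitter prefers to split on separators such as paragraph and line breaks, so its chunk boundaries will differ.

```python
def chunk_page(text, page, chunk_size=256, chunk_overlap=50):
    """Split text into windows of at most chunk_size characters.

    Consecutive windows share chunk_overlap characters, and every chunk
    keeps the page number it came from.
    """
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append({"text": text[start:start + chunk_size], "page": page})
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks


# Hypothetical page text: 600 characters cycling through the alphabet.
page_text = "".join(chr(97 + i % 26) for i in range(600))
chunks = chunk_page(page_text, page=5)
print([len(c["text"]) for c in chunks])
```

The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk, at the cost of storing some text twice.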

2. Qdrant Indexing and Multimodal Metadata

We set up Qdrant for local storage and perform the embedding and indexing.

Note that while our final architecture relies on multimodal (image) embeddings, this specific code snippet uses a standard text embedding model for retrieval efficiency, linking the text search to the visual pages.

Block 2 (Ingestion Setup – Part 2: Embedding and Qdrant Indexing)

# Using a standard text encoder for initial retrieval vectors (384 dimensions)
embeddings = HuggingFaceEmbeddings(model_name="sentence-transformers/all-MiniLM-L6-v2")

# Initialize Qdrant Client (local persistence)
client = QdrantClient(path=STORAGE_PATH)

# Create collection if it doesn't exist
if not client.collection_exists(COLLECTION_NAME):
    client.create_collection(
        collection_name=COLLECTION_NAME,
        vectors_config=VectorParams(size=384, distance=Distance.COSINE),
    )

# Use LangChain wrapper to add documents and store text/metadata as payload
vector_store = QdrantVectorStore(
    client=client,
    collection_name=COLLECTION_NAME,
    embedding=embeddings,
    content_payload_key="text",
    metadata_payload_key="metadata",
)

# Store the chunked documents
vector_store.add_documents(docs)

To convert our text chunks into queryable vectors, we instantiate a lightweight sentence transformer that produces 384-dimensional vectors. A persistent Qdrant instance stores the data locally (path=STORAGE_PATH). The pdf_rag collection is defined with 384-dimensional vectors and the cosine distance metric; the size must match the embedding model's output dimension. The content_payload_key and metadata_payload_key arguments ensure that both the raw text chunk and its metadata are stored as payload alongside each vector.

3. Retrieval: Search and Multimodal Linkage

The retrieval phase has two steps: finding relevant text chunks via vector search, and then using the retrieved page metadata to extract the visual evidence.

3.1 Textual Similarity Search in Vector DB and RAG

The query_with_page function performs the standard RAG retrieval using the text embedding of the user’s query against the vectors stored in Qdrant.

Block 3 (Query Function – query_with_page)

def query_with_page(query: str, k: int = 3) -> List[Dict[str, Any]]:
    """Return relevant text chunks and their associated page numbers (one entry per page)."""
    query_vector = embeddings.embed_query(query)

    # query_points replaces client.search, which is deprecated in recent qdrant-client releases
    search_results = client.query_points(
        collection_name=COLLECTION_NAME,
        query=query_vector,
        limit=k,
        with_payload=True,
    ).points

    # ... (processing and pretty printing results)

    # Filter for unique pages to avoid sending duplicate images to the VLM
    filtered_list = []
    seen_pages = set()
    for hit in search_results:
        page = hit.payload.get("metadata", {}).get("page")
        if page not in seen_pages:
            seen_pages.add(page)
            filtered_list.append({
                "text": hit.payload.get("text", ""),
                "page": page,
                "score": hit.score,
                # ... other required metadata
            })

    return filtered_list

The function above embeds the user query and asks Qdrant for the top-k nearest neighbors, returning the full text and metadata of each hit. Its key output is the list of unique page numbers associated with the high-scoring text chunks, which establishes the multimodal linkage. If the user asks about “Native Dynamic Resolution”, Qdrant returns the text chunks (and their associated pages) that discuss this concept.
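The page de-duplication step can also be combined with a cap on how many pages are sent to the VLM. The sketch below is one possible way to do that, not the implementation used in this article: select_pages and the sample hits are hypothetical, and the hits are plain dictionaries rather than Qdrant result objects.

```python
def select_pages(hits, max_pages=3):
    """Keep the best-scoring hit per page, then return at most max_pages of them."""
    best_per_page = {}
    for hit in hits:
        page = hit["page"]
        if page is None:
            continue  # skip chunks that lost their page metadata
        if page not in best_per_page or hit["score"] > best_per_page[page]["score"]:
            best_per_page[page] = hit
    ranked = sorted(best_per_page.values(), key=lambda h: h["score"], reverse=True)
    return ranked[:max_pages]


# Hypothetical search hits: two chunks share page 4 and one has no page number.
hits = [
    {"page": 4, "score": 0.81},
    {"page": 4, "score": 0.78},
    {"page": 9, "score": 0.74},
    {"page": None, "score": 0.70},
    {"page": 2, "score": 0.66},
    {"page": 11, "score": 0.61},
]
pages = [h["page"] for h in select_pages(hits, max_pages=3)]
print(pages)
```

Capping the page count bounds the number of images in the prompt, which is what keeps GPU memory use predictable on a 12 GB card.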

3.2 Visual Context Extraction

Using the unique page numbers retrieved from Qdrant, we now pull the necessary images from the original PDF.

Block 5 (Image Extraction – get_page_images)

def get_page_images(pdf_path: str, filtered_list: List[Dict[str, Any]], max_pages: int = 3) -> List[Image.Image]:
    # ... (error handling and limiting logic)
    doc = fitz.open(pdf_path)
    images = []
    for res in filtered_list:
        page_1b = res["page"]  # 1-based index from Qdrant payload
        page_0b = page_1b - 1
        # Guard against out-of-range pages (a negative index would wrap around)
        if 0 <= page_0b < len(doc):
            # Render the page as a high-resolution pixmap (200 DPI)
            pix = doc[page_0b].get_pixmap(dpi=200)
            # Convert pixmap to a PIL Image object
            img = Image.frombytes("RGB", (pix.width, pix.height), pix.samples)
            images.append(img)
    doc.close()
    # ... (matplotlib plotting for visualization)
    return images

For each relevant page number obtained from the Qdrant payload, we call doc[page_0b].get_pixmap(dpi=200) to render the page as an image. A high DPI ensures charts and fine text remain legible to the VLM. The function returns a list of PIL Image objects, which is the input format we pass to Qwen3-VL.
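A quick calculation shows what 200 DPI means in pixels. PDF page sizes are expressed in points, with 72 points per inch, so the rendered size is roughly the page size multiplied by dpi / 72. The rendered_size helper below is a hypothetical illustration; the exact dimensions PyMuPDF produces may differ by a pixel because of rounding.

```python
POINTS_PER_INCH = 72  # PDF page sizes are given in points


def rendered_size(width_pt, height_pt, dpi=200):
    """Approximate pixel size of a page rendered at the given DPI."""
    return (
        round(width_pt * dpi / POINTS_PER_INCH),
        round(height_pt * dpi / POINTS_PER_INCH),
    )


# US Letter is 612 x 792 points (8.5 x 11 inches).
width_px, height_px = rendered_size(612, 792, dpi=200)
raw_rgb_bytes = width_px * height_px * 3  # 3 bytes per pixel for uncompressed RGB
print(width_px, height_px, raw_rgb_bytes)
```

Each Letter-size page at 200 DPI is therefore about 1700 x 2200 pixels, or roughly 11 MB of raw RGB data, which is one reason to limit how many pages are rendered per query.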

4. Synthesis: Qwen3-VL Multimodal Reasoning

In the final step, we load the Qwen3-VL model and combine the retrieved text snippets and the PIL images into a single, comprehensive prompt for synthesis.

Block 7 (Qwen-VL Model Loading)

model = Qwen3VLForConditionalGeneration.from_pretrained(
    "Qwen/Qwen3-VL-4B-Instruct",
    dtype="auto",
    device_map="auto",
)

processor = AutoProcessor.from_pretrained("Qwen/Qwen3-VL-4B-Instruct")

Block 8 (Inference Execution)

# Assumed inputs from the earlier steps (the glue code is not shown):
# filtered_list is the output of query_with_page(...) and
# images_extracted is the output of get_page_images(...)

# 1. Build the Multimodal Message Payload
messages = [
    {
        "role": "user",
        "content": [
            # Inject retrieved PIL Image objects
            *[
                {"type": "image", "image": img}
                for img in images_extracted
            ],
            # Inject retrieved text chunks with source citations
            *[
                {"type": "text", "text": f"[Page {res['page']}] {res['text']}"}
                for res in filtered_list
            ],
            # Final instruction guiding the synthesis and grounding
            {
                "type": "text",
                "text": "Summarize the following content from the document in 3-5 sentences, making sure to cite the page number for any claims."
            }
        ]
    }
]

# 2. Apply Chat Template and Generate
inputs = processor.apply_chat_template(
    messages,
    tokenize=True,
    add_generation_prompt=True,
    return_dict=True,
    return_tensors="pt"
).to(model.device)

generated_ids = model.generate(**inputs, max_new_tokens=1668)

# 3. Decode and Print Result
output_text = processor.batch_decode(...)
print(output_text[0])
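The arguments to batch_decode are elided above, so the following is an assumption rather than the article's code. For decoder-only models, generate typically returns the prompt tokens followed by the newly generated ones, and a common pattern is to slice the prompt off each sequence before decoding. The sketch uses plain lists of made-up token ids and a hypothetical trim_prompt_tokens helper so that it runs without a model.

```python
def trim_prompt_tokens(input_ids, generated_ids):
    """Drop the echoed prompt from each generated sequence, keeping only the new tokens."""
    return [out[len(inp):] for inp, out in zip(input_ids, generated_ids)]


# Hypothetical token ids: a 4-token prompt followed by 3 generated tokens.
prompt_batch = [[101, 7, 8, 9]]
generated_batch = [[101, 7, 8, 9, 42, 43, 2]]

new_tokens = trim_prompt_tokens(prompt_batch, generated_batch)
print(new_tokens)
```

With a real model, the trimmed sequences would then be passed to the processor's batch_decode, usually with skip_special_tokens=True, so that the printed answer does not repeat the prompt.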

This workflow successfully closes the loop, demonstrating how Qdrant serves not only as a pure text search index but also as a highly effective coordinate system for linking abstract queries to concrete visual evidence, enabling multimodal RAG.

Conclusion On Document RAG and VectorDB

In this article, we explored how to build a multimodal document intelligence system: one that indexes document text with page-level metadata, retrieves the right evidence pages, and uses a vision-language model to generate grounded, traceable answers. The combination of Qdrant for vector and metadata search and Qwen3-VL for multimodal reasoning brings us closer to AI that doesn’t just read documents but understands them as a whole.

By deploying this architecture, you turn your document corpus into a searchable, explainable knowledge system. The future of document RAG is not just “What does this document say?” but “What evidence does this answer rest upon?” Let this be your foundation for building that future.


A Vector DB (Vector Database) is the core retrieval layer in a RAG (Retrieval-Augmented Generation) system. It stores document embeddings, numerical representations of text chunks, and performs semantic similarity search to find the most relevant pieces of information for a query. In Document RAG, the Vector DB enables fast and accurate retrieval of related document sections before passing them to a language model for context-aware generation.

Document RAG systems can be built entirely with open-source Vector DBs like Chroma, Qdrant, Milvus, or pgvector. These databases store and search embeddings efficiently, integrate easily with frameworks like LangChain or LlamaIndex, and support hybrid filtering for better retrieval accuracy. By pairing a local Vector DB with free embedding models from Hugging Face, you can create a fully open-source RAG pipeline without depending on paid APIs.

A typical Document RAG pipeline involves:

- Loading and chunking documents,

- Generating embeddings for each chunk,

- Storing those embeddings in a Vector DB,

- Retrieving the most relevant chunks during a query, and

- Passing them to a language model for final answer generation.

The Vector DB acts as the retrieval brain of the pipeline, enabling scalable and context-aware document reasoning.
