The ultimate goal for many in artificial intelligence is to build agents that can perceive, reason, and act in our complex physical world. Meta AI has taken a significant step toward this vision with V-JEPA 2, a foundation world model for AI planning and robotics. It learns how the physical world behaves largely from observation: it is pre-trained on unlabeled video and then adapted with a small amount of robot data. It is a notable result in self-supervised learning, enabling AI to understand video, predict outcomes, and plan robot actions zero-shot, without task-specific training.

This comprehensive guide will break down the V-JEPA 2 architecture, its innovative training methodology, its application in robotics, and provide a hands-on Python code example to get you started.

- What is V-JEPA 2? The Core Philosophy of Representation Prediction

- The V-JEPA 2 Architecture Explained

- How V-JEPA 2 Learns: A Two-Stage Training Paradigm

- From Prediction to Planning: V-JEPA 2 in Robotics

- How to Use V-JEPA 2: A Practical Code Example

- Conclusion

- References and Acknowledgments

What is V-JEPA 2? The Core Philosophy of Representation Prediction

To truly appreciate V-JEPA 2, you must first understand its core philosophy, which departs from popular generative approaches. Many video models try to predict future frames at the pixel level. That task is computationally expensive, and it spends much of the model’s effort on details that no model could predict.

- The Problem with Pixel-Level Prediction: The physical world is full of unpredictable, chaotic details like the rustling of leaves or ripples on water. Forcing a model to predict these details wastes its capacity on noise rather than on the underlying principles of motion and interaction.

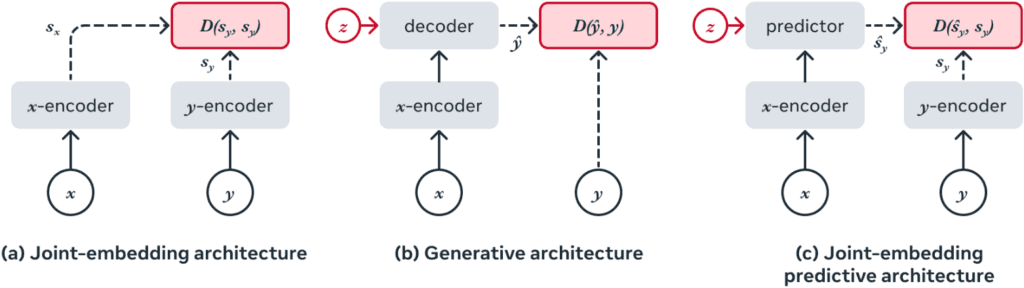

The Joint-Embedding Predictive Architecture (JEPA) offers a more elegant solution: predict in representation space.

Instead of asking, “What will the next frame look like?”, JEPA asks, “What will the abstract representation of the next frame be?”. This forces the model to learn a high-level, semantic understanding of the world, focusing on predictable dynamics rather than superficial textures.


The V-JEPA 2 Architecture Explained

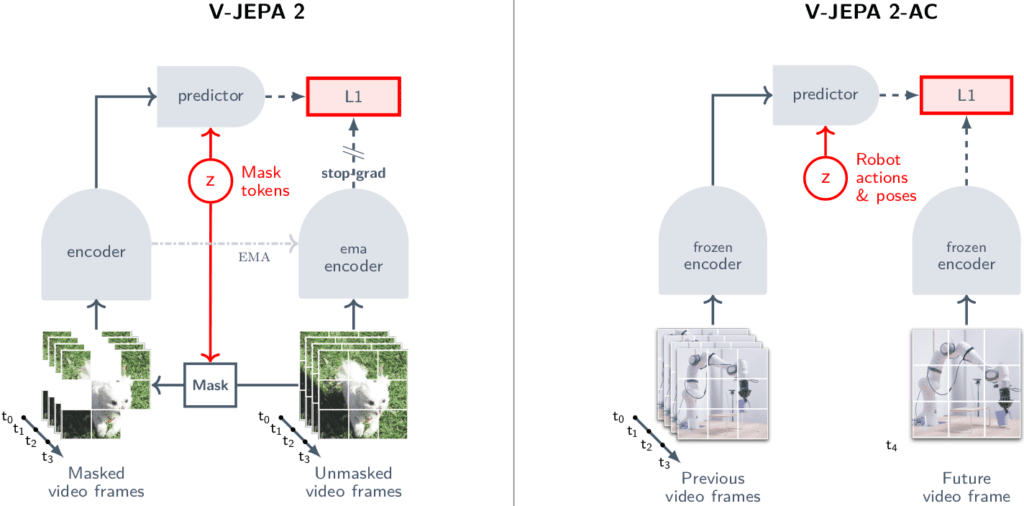

V-JEPA 2 is built on the Vision Transformer (ViT). During self-supervised pre-training, it uses two main modules: an encoder and a predictor.

The Encoder: The Engine of Perception

The encoder’s job is to convert raw video clips into meaningful, abstract feature vectors.

- Input Processing: A video is divided into a grid of 3D patches called “tubelets” (e.g., 2 frames × 16×16 pixels). These tubelets are the fundamental input tokens for the model.

- Core Architecture: V-JEPA 2 uses a large Vision Transformer (up to about one billion parameters). Its most significant architectural feature is 3D Rotary Position Embeddings (3D-RoPE). Absolute positional embeddings are added to the input tokens once. 3D-RoPE instead encodes the temporal, vertical, and horizontal position of each tubelet by rotating the query and key vectors inside every self-attention layer, so attention scores depend on the relative positions of tubelets. This was a critical factor in stabilizing training at the billion-parameter scale.

- Output: The encoder outputs a sequence of high-dimensional vectors, each representing a tubelet and capturing rich, contextual information about the video.
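The following is a minimal NumPy sketch of 3D rotary embeddings. It assumes the feature dimension is split into three equal chunks (time, height, width), and that each chunk is rotated with the standard 1D RoPE frequency schedule (base 10000). The helper names `rope_1d` and `rope_3d` are hypothetical, and the actual V-JEPA 2 implementation may differ in detail. In the real model these rotations are applied to the query and key vectors inside every attention layer. The example checks the key property: the query-key score depends only on the relative offset between two tubelets, not on their absolute positions.

```python
import numpy as np

def rope_1d(x, pos, base=10000.0):
    """Standard RoPE along one axis: rotate each consecutive feature pair of x
    by an angle proportional to the token's position along that axis.

    x: (N, d) array with d even; pos: (N,) positions along this axis.
    """
    d = x.shape[1]
    assert d % 2 == 0, "feature dimension must be even"
    freqs = base ** (-np.arange(0, d, 2) / d)   # one frequency per feature pair
    angles = pos[:, None] * freqs[None, :]      # (N, d/2)
    cos, sin = np.cos(angles), np.sin(angles)
    x_even, x_odd = x[:, 0::2], x[:, 1::2]
    out = np.empty_like(x)
    out[:, 0::2] = x_even * cos - x_odd * sin   # 2D rotation of each pair
    out[:, 1::2] = x_even * sin + x_odd * cos
    return out

def rope_3d(x, t, h, w):
    """Hypothetical 3D RoPE: split features into three equal chunks, one per axis."""
    d = x.shape[1]
    assert d % 6 == 0, "each of the 3 chunks needs an even size"
    c = d // 3
    return np.concatenate(
        [rope_1d(x[:, :c], t), rope_1d(x[:, c:2 * c], h), rope_1d(x[:, 2 * c:], w)],
        axis=1,
    )

# One query and one key vector (as produced by an attention layer's projections).
rng = np.random.default_rng(0)
q, k = rng.normal(size=(1, 48)), rng.normal(size=(1, 48))

def score(q_pos, k_pos):
    """Dot-product attention score after rotating q and k by their (t, h, w) positions."""
    qr = rope_3d(q, *(np.array([p]) for p in q_pos))
    kr = rope_3d(k, *(np.array([p]) for p in k_pos))
    return float((qr * kr).sum())

# Same relative offset (1, 2, 3) at two different absolute positions.
s1 = score((0, 0, 0), (1, 2, 3))
s2 = score((5, 4, 7), (6, 6, 10))
print(np.isclose(s1, s2))  # True: the score depends only on the relative offset
```

Because rotations preserve vector length, RoPE changes only the angles between queries and keys. This is one intuition for why it behaves well as models scale.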

The Predictor: The Engine of Learning

The predictor is a smaller, lightweight ViT that is used only during pre-training. There it provides the learning signal that teaches the encoder about world dynamics.

- Learning Objective: The model is given a video where large portions are masked out. The predictor’s task is to use the visible parts (the context) to predict the representations of the hidden parts.

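- Target Stability with EMA: The model must not learn a trivial solution, for example the encoder mapping every input to the same constant vector (known as representation collapse). To prevent this, the prediction target is not produced by the encoder being trained. Instead, it comes from a target encoder whose weights are a slow-moving exponential moving average (EMA) of the main encoder’s weights, and no gradients flow through it (stop-gradient). This provides a stable and consistent learning signal.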

- Loss Function: The training objective is to minimize the L1 distance (mean absolute error) between the predictor’s output and the target encoder’s output at the masked positions.
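The masked-prediction objective, the EMA target encoder, and the L1 loss can be sketched in a few lines of NumPy. This is a toy with several assumptions:

- The encoder and predictor are hypothetical linear maps rather than ViTs.
- The predictor simply combines pooled context with a per-position embedding.
- The EMA momentum of 0.998 is illustrative.
- The optimizer step is faked with random noise.

```python
import numpy as np

rng = np.random.default_rng(0)
num_tokens, in_dim, dim, num_masked = 16, 32, 8, 10

# Hypothetical linear stand-ins for the ViT encoder and predictor.
W_enc = rng.normal(size=(in_dim, dim))         # online encoder (trained by gradient descent)
W_tgt = W_enc.copy()                           # target encoder, initialized as a copy
W_pred = rng.normal(size=(dim, dim))           # predictor
pos_emb = rng.normal(size=(num_tokens, dim))   # hypothetical per-position "mask token" embeddings

tokens = rng.normal(size=(num_tokens, in_dim))  # flattened tubelets of one clip
mask = np.zeros(num_tokens, dtype=bool)
mask[rng.choice(num_tokens, size=num_masked, replace=False)] = True  # True = hidden

# 1) The online encoder sees only the visible (context) tokens.
context = tokens[~mask] @ W_enc

# 2) The predictor guesses representations at the hidden positions
#    (crude simplification: pooled context plus a positional term).
pred = context.mean(axis=0) @ W_pred + pos_emb[mask]

# 3) Targets: the EMA target encoder applied to the full clip, read out at the
#    hidden positions and treated as constants (stop-gradient).
target = (tokens @ W_tgt)[mask]

# 4) L1 loss, averaged over hidden tokens and feature dimensions.
loss = np.abs(pred - target).mean()
print(f"L1 loss: {loss:.3f}")

def ema_update(target_w, online_w, momentum=0.998):
    """Move the target weights a small step toward the online weights."""
    return momentum * target_w + (1.0 - momentum) * online_w

# Pretend an optimizer step changed the online encoder, then update the target.
W_enc_updated = W_enc + 0.1 * rng.normal(size=W_enc.shape)
W_tgt = ema_update(W_tgt, W_enc_updated)
```

Note that the loss is computed only on hidden tokens. The target encoder receives no gradient; it changes only through `ema_update`, so it drifts slowly behind the encoder being trained.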

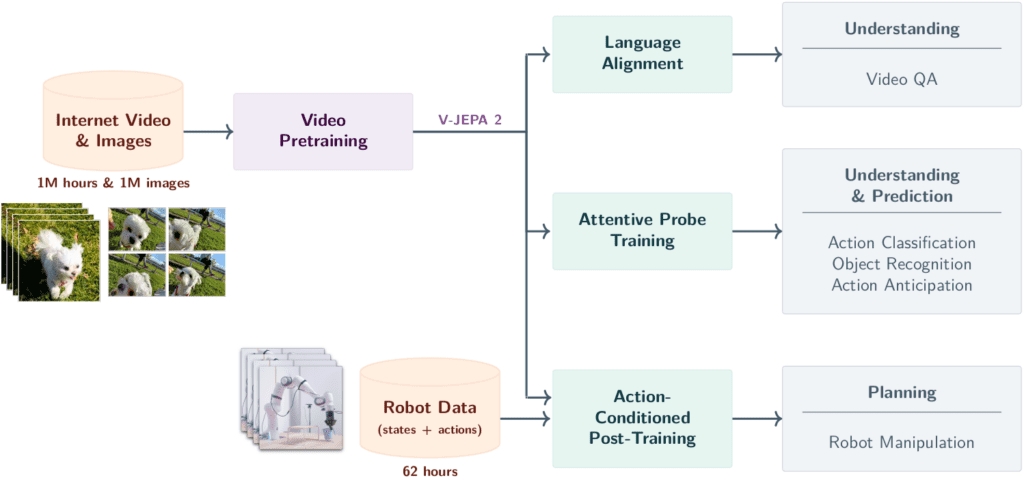

How V-JEPA 2 Learns: A Two-Stage Training Paradigm

V-JEPA 2’s capabilities come from a deliberate, two-stage training process.

Stage 1: Action-Free Pre-training – Learning the “Physics” of the World

- Goal: To build a broad, passive understanding of physical dynamics.

- Data: The model is trained on the massive VideoMix22M dataset, featuring over 1 million hours of unlabeled public internet videos.

- Process: Using the mask-denoising objective, the model learns foundational concepts like object permanence, gravity, and motion trajectories without any human supervision.

- Result: A general-purpose video encoder. Its weights are frozen after this stage, so everything it learned is preserved during post-training.
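To illustrate the masking side of this objective, here is a minimal NumPy sketch. It hides random spatial blocks and extends each block across every time step, forming "tubes" through the clip. The usual motivation is that the model then cannot simply copy a hidden patch from a neighboring frame. The grid size matches the 32×16×16 token grid of a 64-frame, 256×256 clip. The helper `tube_mask`, the block count, the block scale, and the square block shape are hypothetical choices, not V-JEPA 2’s published settings.

```python
import numpy as np

def tube_mask(grid_t=32, grid_h=16, grid_w=16, num_blocks=4, scale=0.15, rng=None):
    """Return a boolean mask of shape (grid_t, grid_h, grid_w); True = hidden token.

    Each block is a random square covering roughly `scale` of the frame area and
    is repeated at every time step, forming a "tube" through the clip.
    """
    rng = np.random.default_rng() if rng is None else rng
    spatial = np.zeros((grid_h, grid_w), dtype=bool)
    side_h = max(1, int(round(grid_h * np.sqrt(scale))))
    side_w = max(1, int(round(grid_w * np.sqrt(scale))))
    for _ in range(num_blocks):
        top = rng.integers(0, grid_h - side_h + 1)
        left = rng.integers(0, grid_w - side_w + 1)
        spatial[top:top + side_h, left:left + side_w] = True  # blocks may overlap
    # Same spatial pattern at every time step.
    return np.broadcast_to(spatial, (grid_t, grid_h, grid_w)).copy()

mask = tube_mask(rng=np.random.default_rng(0))
print(f"hidden fraction: {mask.mean():.2f}")
```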

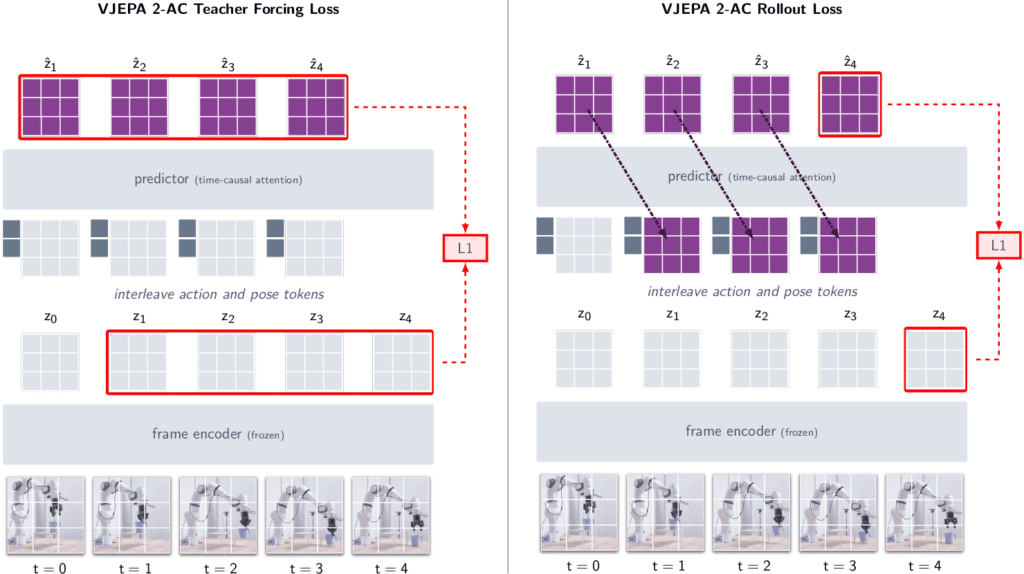

Stage 2: Action-Conditioned Post-training – Learning to Act

- Goal: To connect the model’s abstract knowledge to the causal effects of an agent’s actions.

- Model: This specialized version is called V-JEPA 2-AC (Action-Conditioned).

- Data: The action-conditioned predictor is trained on a very small amount of data: less than 62 hours of robot arm video from the Droid dataset, paired with the corresponding action commands.

- Process: With the powerful encoder frozen, a new action-conditioned predictor learns to forecast the next state’s representation given the current state and a specific action. This efficiently teaches the model the “if I do this, then that happens” logic of interaction.
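Here is a toy version of this stage. Given frozen-encoder features of the current frame, the action taken, and features of the next frame, we fit a predictor that maps (state, action) to the next state. For brevity, this sketch swaps in a linear model fit by least squares on synthetic data. V-JEPA 2-AC instead trains a transformer predictor with an L1 objective, so treat `A_true`, `B_true`, `predict_next`, and `rollout` as hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
state_dim, action_dim, num_samples = 4, 2, 500

# Hypothetical "true" dynamics in representation space (unknown to the learner).
A_true = 0.9 * np.eye(state_dim)
B_true = rng.normal(size=(state_dim, action_dim))

# Synthetic transitions: features of the current frame, the executed action,
# and features of the next frame (all would come from the frozen encoder).
z_t = rng.normal(size=(num_samples, state_dim))
a_t = rng.normal(size=(num_samples, action_dim))
z_next = z_t @ A_true.T + a_t @ B_true.T

# Fit a linear action-conditioned predictor: z_next ~ [z_t, a_t] @ W.
inputs = np.hstack([z_t, a_t])
W, *_ = np.linalg.lstsq(inputs, z_next, rcond=None)

def predict_next(z, a):
    """'If I take action a in state z, the next representation will be ...'"""
    return np.concatenate([z, a]) @ W

def rollout(z, actions):
    """Imagine a whole action sequence by feeding predictions back in."""
    for a in actions:
        z = predict_next(z, a)
    return z

err = np.abs(W.T - np.hstack([A_true, B_true])).max()
print(f"max weight error: {err:.2e}")  # close to zero on this noise-free data
```

The `rollout` helper is what planning relies on. It lets the model "imagine" where a sequence of actions leads without executing any of them.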

From Prediction to Planning: V-JEPA 2 in Robotics

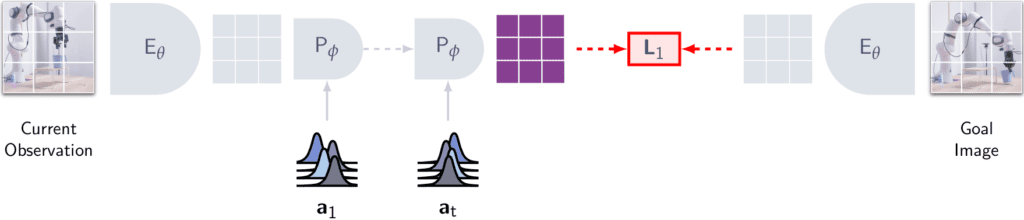

The real power of V-JEPA 2-AC is its ability to plan. Instead of learning a fixed policy, it uses its internal world model for Model-Predictive Control (MPC). This lets it generalize zero-shot to new tasks and environments.

Here is how the planning loop works:

- Goal Specification: The robot is given a goal image (e.g., a picture of a block in its target location).

- Energy Function: The model defines an “energy” as the L1 distance between the predicted future representation (after executing a candidate action sequence) and the goal image’s representation. The lower the energy, the better the action sequence.

- Action Optimization: The robot uses a sampling-based optimization algorithm, the Cross-Entropy Method (CEM), to efficiently search for a sequence of actions that minimizes this energy.

- Receding Horizon Control: It executes only the first action from the best sequence found, observes the new state, and then re-plans from scratch. This makes the system robust to real-world perturbations.
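The planning loop can be sketched with NumPy. Everything here is a stand-in:

- `world_model` is a hypothetical toy dynamics function in place of V-JEPA 2-AC’s predictor.
- The 2D latent states, action bound, horizon, and CEM settings are illustrative.
- The "real" environment is the same toy dynamics plus small random perturbations.

What carries over is the structure: an L1 energy to the goal representation, CEM over action sequences, and executing only the first action before re-planning.

```python
import numpy as np

rng = np.random.default_rng(0)
ACTION_MAX = 0.2  # hypothetical bound on each action component

def world_model(z, action_seq):
    """Hypothetical stand-in for the learned predictor: roll a latent state forward."""
    for a in action_seq:
        z = z + a  # toy dynamics: each action shifts the latent state
    return z

def energy(z_pred, z_goal):
    """L1 distance between predicted and goal representations (lower is better)."""
    return float(np.abs(z_pred - z_goal).sum())

def cem_plan(z, z_goal, horizon=3, samples=128, elites=16, iters=5):
    """Cross-Entropy Method: iteratively refit a Gaussian over action sequences."""
    mean = np.zeros((horizon, z.size))
    std = np.full((horizon, z.size), 0.1)
    for _ in range(iters):
        cands = mean + std * rng.normal(size=(samples, horizon, z.size))
        cands = np.clip(cands, -ACTION_MAX, ACTION_MAX)
        energies = np.array([energy(world_model(z, seq), z_goal) for seq in cands])
        elite = cands[np.argsort(energies)[:elites]]  # lowest-energy sequences
        mean, std = elite.mean(axis=0), elite.std(axis=0) + 1e-6
    return mean  # refined action sequence

# Receding-horizon control loop.
z = np.array([0.0, 0.0])        # representation of the current observation
z_goal = np.array([1.0, -0.5])  # representation of the goal image
for _ in range(25):
    plan = cem_plan(z, z_goal)
    a = plan[0]                                         # execute only the first action
    z = z + a + rng.normal(scale=0.005, size=z.shape)   # "real" step with a perturbation
    # ...then observe the new state and re-plan from scratch on the next iteration
print(f"final energy: {energy(z, z_goal):.3f}")
```

Re-planning after every step is what absorbs the random perturbations. Each plan starts from the state that was actually observed, not the one that was predicted.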

This planning-centric approach enables V-JEPA 2 to perform manipulation tasks such as reaching, grasping, and pick-and-place in new environments without any task-specific training.

How to Use V-JEPA 2: A Practical Code Example

The sample video used in this example is 30 seconds long, with a frame size of (848, 480).

Meta has open-sourced the V-JEPA 2 models. The Python script below loads a pre-trained V-JEPA 2 encoder from the Hugging Face Hub with the transformers library and uses it for feature extraction.

```python
from transformers import AutoVideoProcessor, AutoModel
import torch
from torchcodec.decoders import VideoDecoder
import numpy as np

# ViT-L encoder, pre-trained on 64-frame clips at 256x256 resolution
hf_repo = "facebook/vjepa2-vitl-fpc64-256"

model = AutoModel.from_pretrained(hf_repo)
processor = AutoVideoProcessor.from_pretrained(hf_repo)

# Path or URL of the video you want to encode
video_url = "input_video_here"
vr = VideoDecoder(video_url)

# Choose 64 frames (here simply the first 64); you can define a more complex sampling strategy
frame_idx = np.arange(0, 64)
video = vr.get_frames_at(indices=frame_idx).data  # T x C x H x W

# Preprocess the frames into the model's expected input format and move them to its device
video = processor(video, return_tensors="pt").to(model.device)

# Inference only, so no gradients are needed
with torch.no_grad():
    video_embeddings = model.get_vision_features(**video)

print(video_embeddings.shape)
# Output shape for the sample video used here: torch.Size([1, 8192, 1024])
```

Understanding the Output

When you run the script, the final output will be:

torch.Size([1, 8192, 1024])

Let’s break down this shape:

- 1 (Batch Size): You processed one video clip.

- 8192 (Number of Patches): The model processed 64 frames. Each frame is resized to 256×256 and split into a 16×16 grid of 16×16-pixel patches. Each tubelet spans 2 frames, so the 64 frames give 32 temporal positions. The total number of patches (tubelets) is therefore (256/16) * (256/16) * (64 frames / 2 frames per tubelet) = 16 * 16 * 32 = 8192.

- 1024 (Embedding Dimension): Each of the 8192 patches is represented by a vector of 1024 numbers. This is the feature vector for that specific spatio-temporal part of the video.
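You can check this tubelet arithmetic with a short NumPy sketch that cuts a 64-frame, 256×256 RGB clip into 2×16×16 tubelets. `video_to_tubelets` is a hypothetical helper. The token order (time first, then rows, then columns) is an assumption. In the model, a learned projection maps each flattened tubelet of 1,536 values to a 1,024-dimensional embedding; we assume it is implemented as a strided 3D convolution, which is equivalent.

```python
import numpy as np

def video_to_tubelets(video, tubelet_t=2, patch=16):
    """Split a (T, H, W, C) clip into non-overlapping tubelets and flatten each one.

    Returns shape (num_tubelets, tubelet_t * patch * patch * C), ordered
    time-major, then rows, then columns.
    """
    T, H, W, C = video.shape
    assert T % tubelet_t == 0 and H % patch == 0 and W % patch == 0
    x = video.reshape(T // tubelet_t, tubelet_t, H // patch, patch, W // patch, patch, C)
    # Move the three grid axes to the front and the within-tubelet axes to the back.
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)
    return x.reshape(-1, tubelet_t * patch * patch * C)

clip = np.zeros((64, 256, 256, 3), dtype=np.uint8)  # 64 RGB frames at 256x256
tokens = video_to_tubelets(clip)
print(tokens.shape)  # (8192, 1536): 32 * 16 * 16 tubelets of 2 * 16 * 16 * 3 values
```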

Conclusion

V-JEPA 2 is a major step toward general-purpose world models. Its architecture, efficient two-stage training, and demonstrated planning capabilities provide a strong framework for the future of autonomous systems.

Future work will likely focus on developing hierarchical models that can reason across multiple timescales and integrating natural language for more intuitive human-AI collaboration. For now, V-JEPA 2 stands as a powerful, open-source tool and a clear blueprint for the next generation of AI that can perceive, reason, and act in our physical world.

References and Acknowledgments

- Official V-JEPA 2 Paper

- Official GitHub Repository

- Hugging Face Model Hub

- A special thanks to Merve Noyan for creating and sharing an insightful video demonstration on X of V-JEPA 2’s robotic control capabilities.
